A recent paper submitted to the arXiv* server introduced “TrackFlow: Multi-Object Tracking with Normalizing Flows,” a principled technique for learning association costs through conditional density estimation. This probabilistic view addresses the limitations of the hand-designed fusion rules typically used in tracking.

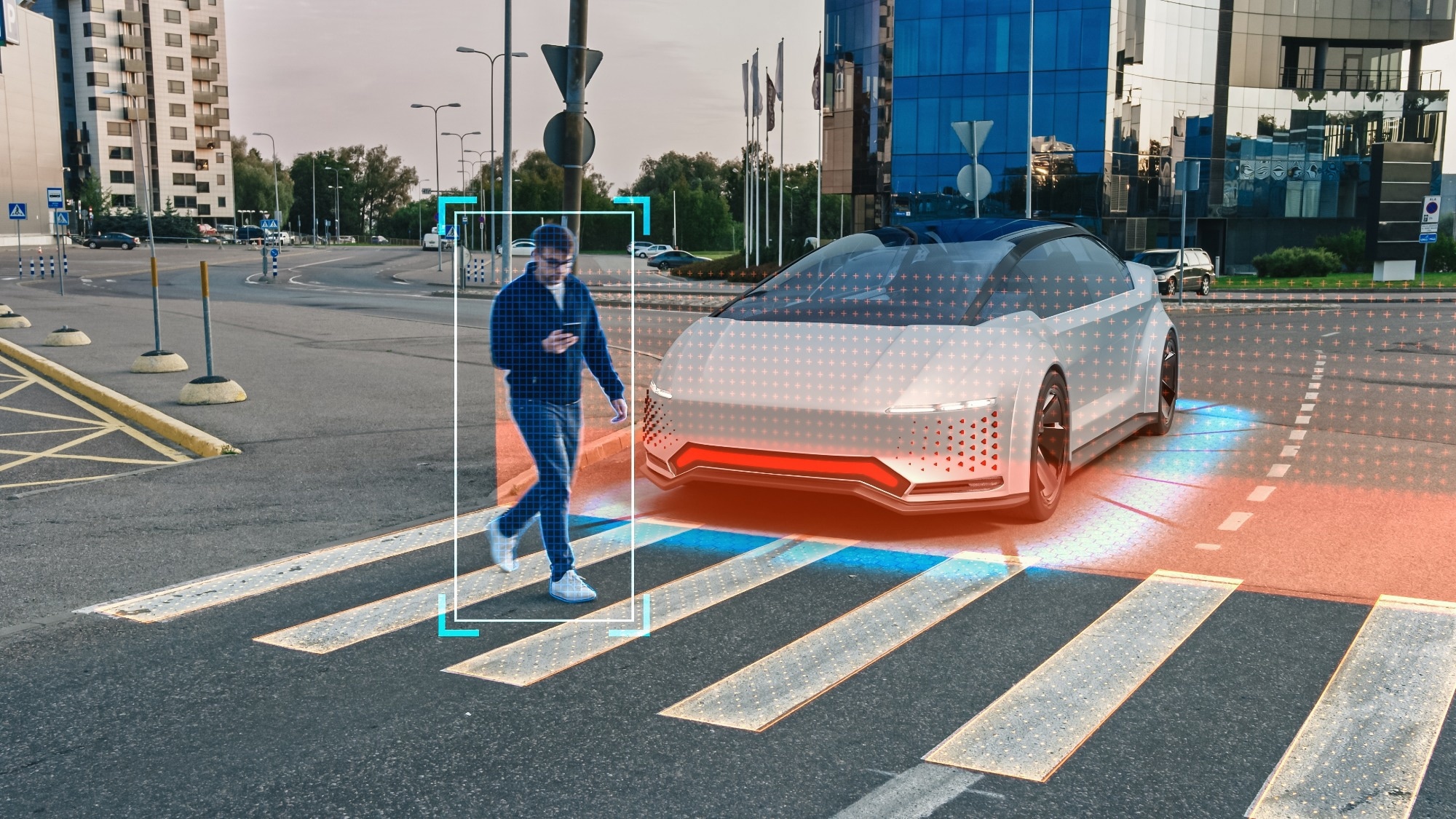

Study: TrackFlow: Revolutionizing Multi-Object Tracking with Probabilistic Fusion. Image credit: Gorodenkoff/Shutterstock

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

The approach models association costs as likelihoods computed by a conditional generative model called TrackFlow. Because it is learned from tracking data rather than hand-tuned, TrackFlow adapts the fusion to particular scenes and captures subtle interactions between costs. Experiments show consistent gains when TrackFlow is integrated into state-of-the-art trackers. The work offers a data-driven methodology for sensor fusion that could generalize to other perception tasks.

Background

Multi-object tracking is an essential computer vision capability with applications across autonomous systems, surveillance, and more. The goal is to accurately locate objects such as pedestrians within video footage and maintain their identities consistently across frames. One leading paradigm is tracking-by-detection, where object detectors first identify bounding boxes, and then these detections are associated with target tracks over time. However, a persistent challenge is appropriately combining various perceptual cues like visual appearance, motion patterns, and 3D spatial location into a meaningful association cost between detections and tracks.

Existing techniques typically merge the different costs with hand-designed formulas, such as weighted sums or heuristic rules. These simple integration strategies have significant limitations. The weights must be chosen through extensive tuning on separate validation sets, which require additional labeled data. Moreover, any single set of hyperparameters is unlikely to generalize across diverse real-world scenarios, which can vary dramatically in camera motion, lighting, weather, and object density. Most critically, conventional fusion rules assume that costs are independent, neglecting complex interactions between modalities.

To overcome these pitfalls, the authors propose “TrackFlow,” an approach that uses normalizing flows to estimate the probability that a candidate detection-to-track association is correct given multiple input cost cues. The probabilistic formulation accounts for dependencies between costs and adapts the fusion to particular scenes. Experiments show that this principled fusion technique consistently improves state-of-the-art trackers on major benchmarks.

Modeling Association Costs

The critical insight underlying TrackFlow is to recast the association cost between a detection and a track as a negative log-likelihood under a learned conditional density estimator. Given a track’s recent past observations, TrackFlow outputs the likelihood of a candidate detection’s displacements, such as the differences between the predicted and observed 2D image motion or distance from the camera. By training on correct associations, TrackFlow learns to weigh and combine costs according to the scene context, capturing interactions that conventional weighted fusion rules neglect.
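
To make the idea of a cost as a negative log-likelihood concrete, the short Python sketch below scores every track-detection pair. It is not TrackFlow: a constant-velocity prediction and an independent Gaussian stand in for the learned flow, and the function names, the position-list track format, and the speed-dependent spread are hypothetical choices made for illustration.

```python
import math


def gaussian_nll(x, mean, std):
    """Negative log-likelihood of a scalar x under N(mean, std**2)."""
    z = (x - mean) / std
    return 0.5 * z * z + math.log(std) + 0.5 * math.log(2 * math.pi)


def association_cost(track_history, detection):
    """Cost = -log p(displacement | track history) under a stand-in density.

    track_history: list of (x, y) positions, oldest first (needs >= 2 entries).
    detection: candidate (x, y) position.
    """
    (x0, y0), (x1, y1) = track_history[-2], track_history[-1]
    # Constant-velocity prediction of the next position.
    pred_x, pred_y = 2 * x1 - x0, 2 * y1 - y0
    # Displacement between the observed and the predicted position.
    dx, dy = detection[0] - pred_x, detection[1] - pred_y
    # Hypothetical conditioning: faster tracks get a wider spread.
    std = 1.0 + 0.5 * math.hypot(x1 - x0, y1 - y0)
    # Axes are treated as independent here; a learned flow need not be.
    return gaussian_nll(dx, 0.0, std) + gaussian_nll(dy, 0.0, std)


def cost_matrix(tracks, detections):
    """Rows are tracks, columns are detections."""
    return [[association_cost(t, d) for d in detections] for t in tracks]


tracks = [[(0.0, 0.0), (1.0, 0.0)], [(10.0, 10.0), (10.0, 12.0)]]
detections = [(2.0, 0.0), (10.0, 14.0), (5.0, 5.0)]
costs = cost_matrix(tracks, detections)
```

Each track's lowest cost lands on the detection closest to its predicted position, so a low cost simply means "this detection is a likely continuation of this track".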

This probabilistic view of association costs addresses several fundamental pitfalls of the cost functions typically used for data association in tracking. Hand-selected weights require extensive trial-and-error tuning on separate validation sets. Any single set of hyperparameters is unlikely to generalize across tracking scenarios with different camera motion, weather, and crowd density. Most critically, weighted fusion implicitly assumes that costs are independent, overlooking dependencies between modalities. By instead learning the joint distribution of costs from data, TrackFlow provides a principled, adaptive approach to cost integration.
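
The independence issue can be illustrated numerically. In the sketch below, a weighted sum of per-cue costs gives two candidates the same score, while a joint density that knows the cues are correlated separates them. A zero-mean bivariate Gaussian with correlation `rho` stands in for the learned joint distribution; the cue names, weights, and residual values are assumptions for illustration only.

```python
import math


def weighted_sum_cost(c_motion, c_depth, w_motion=1.0, w_depth=1.0):
    """Conventional fusion: each cue contributes on its own (weights are hypothetical)."""
    return w_motion * c_motion + w_depth * c_depth


def bivariate_gaussian_nll(a, b, rho, sigma_a=1.0, sigma_b=1.0):
    """NLL of residuals (a, b) under a zero-mean bivariate Gaussian with correlation rho.

    A stand-in for a learned joint density: the rho term couples the two cues,
    so the cost of one residual depends on the value of the other.
    """
    za, zb = a / sigma_a, b / sigma_b
    one_minus_rho2 = 1.0 - rho * rho
    quad = (za * za - 2.0 * rho * za * zb + zb * zb) / one_minus_rho2
    log_norm = math.log(2.0 * math.pi * sigma_a * sigma_b * math.sqrt(one_minus_rho2))
    return 0.5 * quad + log_norm


# Two candidates with equally large residuals on a motion cue and a depth cue (hypothetical).
agreeing, conflicting = (1.0, 1.0), (1.0, -1.0)
fused = [weighted_sum_cost(0.5 * a * a, 0.5 * b * b) for a, b in (agreeing, conflicting)]
joint = [bivariate_gaussian_nll(a, b, rho=0.8) for a, b in (agreeing, conflicting)]
```

Here `fused` holds the same value for both candidates, whereas `joint` ranks the agreeing pair as the cheaper association, since with `rho=0.8` residuals of the same sign are the expected pattern.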

Architecture and Training Methodology

TrackFlow is built from a series of invertible layers based on normalizing flows, a powerful family of deep generative models. Normalizing flows enable flexible density estimation by transforming a simple base distribution into a complex target distribution through an invertible mapping. In TrackFlow’s case, the target is the conditional likelihood of association costs. Because it parameterizes the joint density directly, TrackFlow does not need to assume that costs are independent. The model is conditioned on past track positions, which provide temporal context, and on visual features that capture scene-level information.
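
The change-of-variables rule that lets a flow act as a density estimator is `log p(x | c) = log p_z(f(x; c)) + log |det J_f(x; c)|`. The sketch below implements it with two conditional affine coupling layers in the style of RealNVP, acting on a 2-D cost vector `x` and conditioned on a scalar context `c`. The article does not say which layer type TrackFlow uses; the coupling design, the linear stand-ins for the learned networks, and all parameter values are assumptions.

```python
import math
from dataclasses import dataclass

LOG_2PI = math.log(2 * math.pi)


@dataclass
class ConditionalCoupling:
    """Conditional affine coupling layer on 2-D inputs (RealNVP-style sketch).

    One coordinate passes through unchanged; the other is shifted and scaled
    by functions of the pass-through coordinate and the context c. The linear
    functions below are hypothetical stand-ins for learned neural networks.
    """
    a: float            # shift weight on the pass-through coordinate
    b: float            # shift weight on the context
    d: float            # log-scale weight on the context
    swap: bool = False  # if True, transform x[0] and pass x[1] through

    def _params(self, keep, c):
        return self.a * keep + self.b * c, self.d * c  # (shift, log_scale)

    def forward(self, x, c):
        """Data -> latent. Returns (z, log|det dz/dx|)."""
        keep, move = (x[1], x[0]) if self.swap else (x[0], x[1])
        shift, log_scale = self._params(keep, c)
        moved = (move - shift) * math.exp(-log_scale)
        z = (moved, keep) if self.swap else (keep, moved)
        # Triangular Jacobian: diagonal entries are 1 and exp(-log_scale).
        return z, -log_scale

    def inverse(self, z, c):
        """Latent -> data (exact inverse of forward)."""
        keep, moved = (z[1], z[0]) if self.swap else (z[0], z[1])
        shift, log_scale = self._params(keep, c)
        move = moved * math.exp(log_scale) + shift
        return (move, keep) if self.swap else (keep, move)


def log_likelihood(layers, x, c):
    """log p(x | c) via change of variables with a standard normal base."""
    z, total_log_det = x, 0.0
    for layer in layers:
        z, log_det = layer.forward(z, c)
        total_log_det += log_det
    log_base = -0.5 * (z[0] ** 2 + z[1] ** 2) - LOG_2PI
    return log_base + total_log_det
```

An association cost would then be `-log_likelihood(flow, x, c)`. Alternating `swap` between layers lets each coordinate be transformed as a function of the other, which is how such a model can represent dependencies between cost cues.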

TrackFlow is trained by maximum likelihood estimation to approximate the true distribution of costs for correct associations in tracking data. Experiments showed that TrackFlow yields well-calibrated, useful association costs even when it is trained solely on synthetic tracking data such as MOTSynth. The authors observed that additional fine-tuning on real-world benchmarks can further improve adaptation. The probabilistic outputs proved reliable across diverse association algorithms.
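
Maximum likelihood training amounts to minimizing the average negative log-likelihood of correct associations. The sketch below does this with plain gradient descent for the smallest possible flow, a single unconditional 1-D affine map `z = (x - mu) * exp(-s)`, whose gradients can be written by hand. It is a deliberate simplification: a conditional, multi-layer model like TrackFlow’s would in practice rely on automatic differentiation, and the synthetic data, learning rate, and step count here are arbitrary assumptions.

```python
import math
import random


def fit_affine_flow(data, lr=0.1, steps=3000):
    """Fit z = (x - mu) * exp(-s) to data by minimizing the mean NLL.

    Per-sample NLL = 0.5 * z**2 + s + 0.5 * log(2*pi), so
    dNLL/dmu = -z * exp(-s) and dNLL/ds = 1 - z**2.
    """
    mu, s = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        inv_scale = math.exp(-s)
        zs = [(x - mu) * inv_scale for x in data]
        grad_mu = -sum(zs) * inv_scale / n
        grad_s = 1.0 - sum(z * z for z in zs) / n
        mu -= lr * grad_mu
        s -= lr * grad_s
    return mu, s


def mean_nll(data, mu, s):
    """Average negative log-likelihood of data under the affine flow."""
    inv_scale = math.exp(-s)
    total = sum(0.5 * ((x - mu) * inv_scale) ** 2 + s for x in data)
    return total / len(data) + 0.5 * math.log(2 * math.pi)


# Synthetic stand-in for "costs of correct associations".
rng = random.Random(0)
samples = [rng.gauss(3.0, 2.0) for _ in range(500)]
mu_hat, s_hat = fit_affine_flow(samples)
```

For this simple flow the optimum is known in closed form (`mu` equals the sample mean and `exp(s)` the population standard deviation), which makes it easy to check that the training loop behaves.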

Significant Performance Improvements

The researchers performed extensive experiments on major tracking benchmarks, including MOT17, MOT20, and MOTSynth. They integrated TrackFlow into several state-of-the-art tracking pipelines, replacing the hand-designed cost functions with the learned model’s probabilistic outputs, and solved the associations by applying the Hungarian algorithm to the resulting cost matrices.
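
The association step can be sketched as follows. Given a matrix of negative log-likelihood costs (rows are tracks, columns are detections), it finds the matching with the lowest total cost and then rejects pairs whose cost exceeds a gate. To keep the example dependency-free, the solver enumerates all assignments, which reaches the same optimum as the Hungarian algorithm but only scales to tiny matrices; in practice `scipy.optimize.linear_sum_assignment` solves the same problem efficiently. The gating threshold and the function names are assumptions, not details reported for TrackFlow.

```python
from itertools import permutations


def min_cost_assignment(cost):
    """Exhaustive minimum-cost assignment of rows (tracks) to columns (detections).

    Reaches the same optimum as the Hungarian algorithm but runs in factorial
    time, so it is only meant for tiny illustrative matrices.
    Assumes a non-empty matrix with len(cost) <= len(cost[0]).
    """
    n_rows, n_cols = len(cost), len(cost[0])
    best_total, best_cols = float("inf"), None
    for cols in permutations(range(n_cols), n_rows):
        total = sum(cost[r][c] for r, c in enumerate(cols))
        if total < best_total:
            best_total, best_cols = total, cols
    return list(enumerate(best_cols))


def associate(cost, max_cost):
    """Solve the assignment, then drop pairs whose cost exceeds the gate."""
    pairs = min_cost_assignment(cost)
    matches = [(t, d) for t, d in pairs if cost[t][d] <= max_cost]
    matched = {d for _, d in matches}
    unmatched_detections = [d for d in range(len(cost[0])) if d not in matched]
    return matches, unmatched_detections
```

Solving the assignment globally matters: for the costs `[[1.0, 2.0], [1.5, 10.0]]`, greedily giving track 0 its cheapest detection would leave track 1 with a cost of 10.0, while the optimal matching pairs track 0 with detection 1 and track 1 with detection 0.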

Incorporating TrackFlow consistently improved performance across multiple leading trackers on all benchmark datasets. For instance, on MOTSynth, TrackFlow increased the identity precision of the ByteTrack tracker by 1.4 percentage points. To probe the potential of probabilistic costs further, the researchers also evaluated an “oracle” version that uses ground-truth 3D positions. This upper-bound configuration boosted the trackers’ performance substantially more, improving identity precision by up to 9.6 percentage points. Critically, the benefits held across videos categorized as easy, moderate, and hard, highlighting TrackFlow’s broad applicability to diverse, realistic scenarios.
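
Because the gains are quoted in percentage points, it helps to recall how the identity metrics are computed and how an absolute change differs from a relative one. The sketch below uses the standard identity-based definitions (IDP, IDR, IDF1) computed from identity-level true positive, false positive, and false negative counts; the counts and scores used with it are made up and are not results from the paper.

```python
def id_metrics(idtp, idfp, idfn):
    """Identity precision (IDP), recall (IDR) and F1 (IDF1) from identity-level counts."""
    idp = idtp / (idtp + idfp)
    idr = idtp / (idtp + idfn)
    idf1 = 2 * idtp / (2 * idtp + idfp + idfn)
    return idp, idr, idf1


def gain(before, after):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    delta = after - before
    return 100 * delta, 100 * delta / before
```

For example, `gain(0.700, 0.714)` gives an absolute gain of about 1.4 percentage points but a relative gain of about 2 percent, so the two ways of reporting an improvement should not be confused.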

Future Outlook

This research provides a principled, data-driven approach to fusing costs from various sensors by learning the distribution of valid associations. Future work could incorporate modalities such as lidar, thermal cameras, or Wi-Fi signals to enhance multi-object tracking. Further investigation into scene-adaptive conditioning and fine-tuning on real-world data, beyond synthetic datasets, could improve generalization. Another promising direction is to optimize the costs jointly with the fusion model to maximize tracking performance. By advancing tracking-by-detection, this study helps enable reliable multi-object perception for critical real-world applications such as autonomous driving, robotics, and surveillance.

Journal reference:

- Preliminary scientific report.

Mancusi, G., Panariello, A., Porrello, A., Fabbri, M., Calderara, S., & Cucchiara, R. (2023, August 22). TrackFlow: Multi-Object Tracking with Normalizing Flows. ArXiv.org. https://doi.org/10.48550/arXiv.2308.11513, https://arxiv.org/abs/2308.11513