In a recent article published in the journal Sensors, researchers introduced a new method for measuring the attitude of a scaled aircraft model during dynamic wind tunnel testing. Their technique uses computer vision to estimate the model's attitude accurately without relying on visual markers.

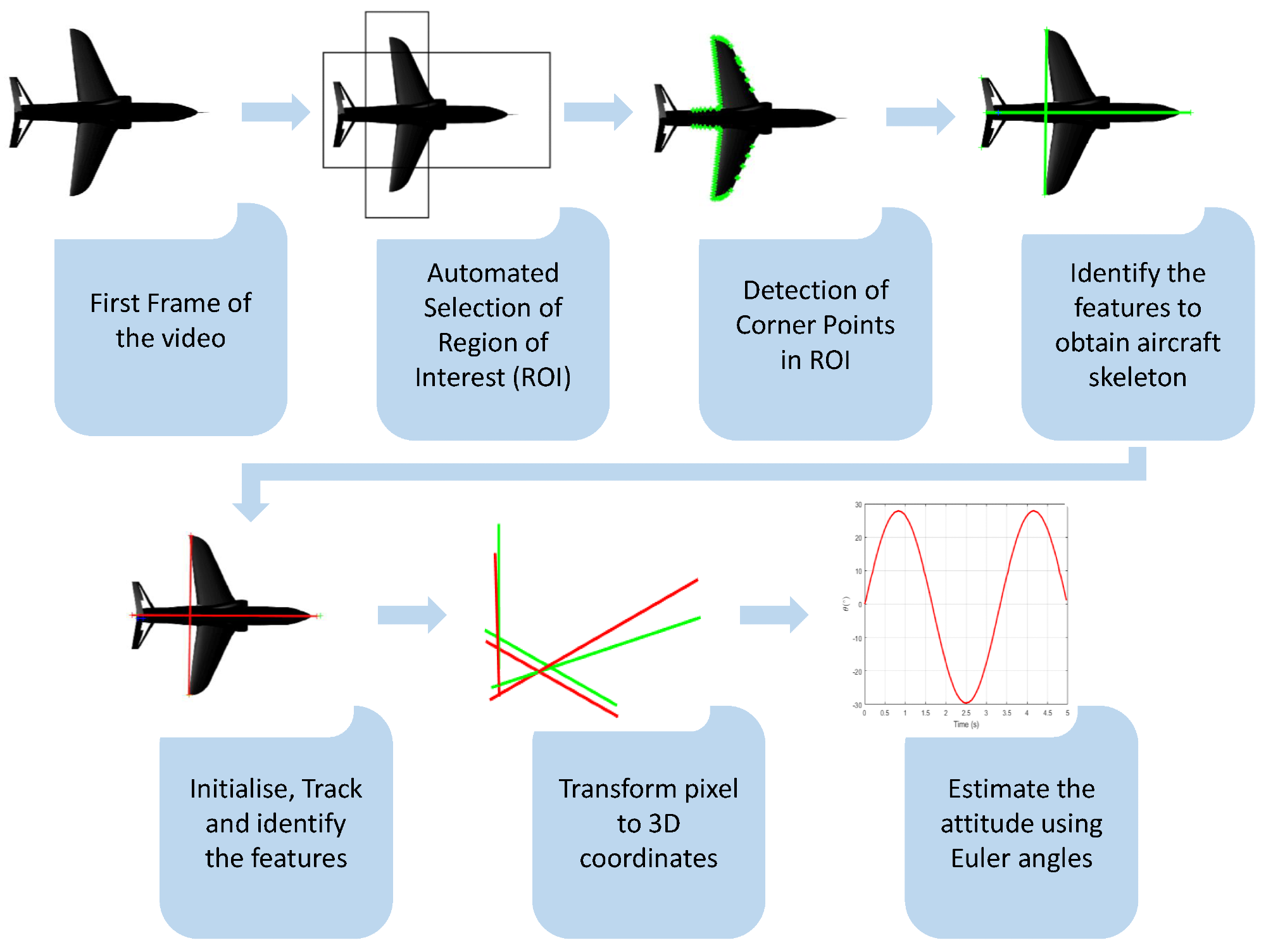

Flow chart showing the process for attitude estimation. Image Credit: https://www.mdpi.com/1424-8220/24/12/3795

Background

Dynamic wind tunnel testing is crucial for studying the flight dynamics and aerodynamics of aircraft models, especially in non-linear flight conditions with high angles of attack and unsteady aerodynamics. Traditional methods for measuring forces and moments, such as balances and gimbals, rely on support mechanisms that constrain the model's free motion, so the test conditions differ from real flight.

Off-board sensors, such as cameras, can provide real-time data on the model's attitude, which is essential for determining its orientation and angular velocities. However, most camera-based attitude measurement methods depend on visual markers attached to the model, which introduce errors from occlusion, lighting, and calibration. Placing markers can also alter the model's aerodynamic characteristics and makes a given marker setup harder to reuse across different aircraft designs.

About the Research

In this paper, the authors combined computer vision techniques in a systematic pipeline comprising feature detection, feature tracking, three-dimensional (3D) reconstruction, and attitude estimation. For feature detection, they applied Harris corner detection to the region of interest in the initial frame of both the top and side views to identify corner points on the aircraft model, such as the nose tip, wingtips, and tail tips, which define the aircraft skeleton and body axes. For feature tracking, the Kanade-Lucas-Tomasi (KLT) algorithm followed these corner points across subsequent frames to capture the model's motion.
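To illustrate what the Harris detector responds to, here is a minimal pure-Python sketch of the corner response R = det(M) - k·trace(M)², where M is the structure tensor of image gradients summed over a small window. It is not the authors' code: the central-difference gradients, the 3x3 box window (instead of a Gaussian), and k = 0.04 are assumptions, and the KLT tracking step is not shown. In practice a library routine such as OpenCV's `cv2.cornerHarris` (and `cv2.calcOpticalFlowPyrLK` for KLT tracking) would normally be used.

```python
def harris_response(img, k=0.04):
    """Harris corner response for a small grayscale image given as a list of rows."""
    h, w = len(img), len(img[0])
    # Central-difference gradients; left at zero on the image border.
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            ix[r][c] = (img[r][c + 1] - img[r][c - 1]) / 2.0
            iy[r][c] = (img[r + 1][c] - img[r - 1][c]) / 2.0
    resp = [[0.0] * w for _ in range(h)]
    for r in range(2, h - 2):
        for c in range(2, w - 2):
            sxx = syy = sxy = 0.0
            # Accumulate the structure tensor over a 3x3 window.
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    gx, gy = ix[r + dr][c + dc], iy[r + dr][c + dc]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            # Large positive: corner; negative: edge; near zero: flat region.
            resp[r][c] = det - k * trace * trace
    return resp


def strongest_corner(img, k=0.04):
    """(row, col) of the maximum Harris response."""
    resp = harris_response(img, k)
    cells = ((r, c) for r in range(len(resp)) for c in range(len(resp[0])))
    return max(cells, key=lambda rc: resp[rc[0]][rc[1]])


# Synthetic test image: a bright quadrant whose single corner sits at (6, 6).
img = [[1.0 if (r >= 6 and c >= 6) else 0.0 for c in range(12)] for r in range(12)]
print(strongest_corner(img))  # (6, 6)
```

On a real frame, the strongest responses inside the region of interest would be candidates for skeleton points such as the nose tip and wingtips.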

For 3D reconstruction, the study developed a customized transformation method from 2D to 3D coordinates using an orthogonal stereo setup, camera projection matrices, and triangulation techniques. This method provided complete 3D coordinates of the aircraft skeleton from both views. For attitude estimation, they used quaternions to determine the relative orientation between the initial and current frames, converting these to Euler angles and rates using trigonometric functions.
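The paper describes its 2D-to-3D transformation as customized, so the following is only a generic stand-in: linear least-squares (DLT-style) triangulation from two pinhole projection matrices. Each view with pixel (u, v) and matrix P contributes the rows u·P₃ - P₁ and v·P₃ - P₂, and the resulting overdetermined system is solved through its normal equations. The camera poses, the focal length of 800 px, and the principal point are hypothetical values chosen to mimic an orthogonal top/side layout.

```python
def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def projection_matrix(f, cx, cy, R, t):
    """P = K [R | t] for a pinhole camera with square pixels."""
    K = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
    return mat_mul(K, [R[i] + [t[i]] for i in range(3)])


def project(P, X):
    h = [sum(P[i][j] * v for j, v in enumerate(list(X) + [1.0])) for i in range(3)]
    return h[0] / h[2], h[1] / h[2]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(views):
    """Least-squares 3D point from a list of (P, (u, v)) observations."""
    rows, rhs = [], []
    for P, (u, v) in views:
        for coord, prow in ((u, P[0]), (v, P[1])):
            # coord * (P3 . X) - (Pk . X) = 0 with X = (x, y, z, 1).
            a = [coord * P[2][j] - prow[j] for j in range(4)]
            rows.append(a[:3])
            rhs.append(-a[3])
    # Normal equations (A^T A) X = A^T b, solved with Cramer's rule.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    d = det3(ata)
    X = []
    for col in range(3):
        m = [row[:] for row in ata]
        for i in range(3):
            m[i][col] = atb[i]
        X.append(det3(m) / d)
    return X


# Hypothetical orthogonal layout (world z up): top camera 10 units above the
# origin looking down; side camera 10 units along -y looking along +y.
R_TOP = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
R_SIDE = [[1.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
P_TOP = projection_matrix(800.0, 320.0, 240.0, R_TOP, [0.0, 0.0, 10.0])
P_SIDE = projection_matrix(800.0, 320.0, 240.0, R_SIDE, [0.0, 0.0, 10.0])

nose_tip = [0.3, -0.2, 0.5]  # hypothetical world point
views = [(P_TOP, project(P_TOP, nose_tip)), (P_SIDE, project(P_SIDE, nose_tip))]
print(triangulate(views))  # recovers nose_tip up to floating-point error
```

With noisy tracked pixels the normal-equation solution becomes a least-squares compromise between the two views; an SVD-based homogeneous solve is the more numerically robust choice for real data.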
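The attitude step can be sketched as follows, under several assumptions that the article does not state: body axes are built from four skeleton points (nose, a tail point, and both wingtips) by Gram-Schmidt, world axes are north-east-down (z down, so nose-up pitch is positive), quaternions use the Hamilton convention, and Euler angles follow the aerospace ZYX (yaw-pitch-roll) sequence. The relative orientation is q_rel = q_initial* ⊗ q_current. Euler rates are omitted; differencing successive angles over the frame interval would be one simple option.

```python
import math


def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def _dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def _unit(a):
    n = math.sqrt(_dot(a, a))
    return [v / n for v in a]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def body_to_world(nose, tail, left_tip, right_tip):
    """Rotation matrix whose columns are the body x, y, z axes in world axes."""
    x = _unit(_sub(nose, tail))                            # forward
    span = _sub(right_tip, left_tip)
    y = _unit(_sub(span, [_dot(span, x) * v for v in x]))  # starboard, orthogonal to x
    z = _cross(x, y)                                       # down (right-handed)
    return [[x[i], y[i], z[i]] for i in range(3)]


def matrix_to_quat(R):
    """Rotation matrix -> unit quaternion (w, x, y, z)."""
    tr = R[0][0] + R[1][1] + R[2][2]
    if tr > 0.0:
        s = 2.0 * math.sqrt(tr + 1.0)
        return (0.25 * s, (R[2][1] - R[1][2]) / s, (R[0][2] - R[2][0]) / s, (R[1][0] - R[0][1]) / s)
    if R[0][0] > R[1][1] and R[0][0] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[0][0] - R[1][1] - R[2][2])
        return ((R[2][1] - R[1][2]) / s, 0.25 * s, (R[0][1] + R[1][0]) / s, (R[0][2] + R[2][0]) / s)
    if R[1][1] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[1][1] - R[0][0] - R[2][2])
        return ((R[0][2] - R[2][0]) / s, (R[0][1] + R[1][0]) / s, 0.25 * s, (R[1][2] + R[2][1]) / s)
    s = 2.0 * math.sqrt(1.0 + R[2][2] - R[0][0] - R[1][1])
    return ((R[1][0] - R[0][1]) / s, (R[0][2] + R[2][0]) / s, (R[1][2] + R[2][1]) / s, 0.25 * s)


def quat_mul(p, q):
    """Hamilton product p ⊗ q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw)


def quat_conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def quat_to_euler(q):
    """ZYX Euler angles (roll, pitch, yaw) in radians."""
    w, x, y, z = q
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    pitch = math.asin(max(-1.0, min(1.0, 2.0 * (w * y - z * x))))
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


def relative_euler(initial_pts, current_pts):
    """Attitude of the current frame relative to the initial frame."""
    q0 = matrix_to_quat(body_to_world(*initial_pts))
    q1 = matrix_to_quat(body_to_world(*current_pts))
    return quat_to_euler(quat_mul(quat_conj(q0), q1))


# Usage: level model vs. the same model pitched 10 degrees nose-up.
th = math.radians(10.0)
level = ([1.0, 0.0, 0.0], [-1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 1.0, 0.0])
pitched = ([math.cos(th), 0.0, -math.sin(th)], [-math.cos(th), 0.0, math.sin(th)],
           [0.0, -1.0, 0.0], [0.0, 1.0, 0.0])
print([math.degrees(a) for a in relative_euler(level, pitched)])  # about [0, 10, 0]
```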

Furthermore, the method was validated using a 3D computer-aided design (CAD) simulation of a 1/12 sub-scale BAE Hawk fighter aircraft model, imported into MATLAB Simscape to model and simulate the aircraft's motion. Additionally, a single-degree-of-freedom experiment was conducted where the pitch angle varied sinusoidally while the roll and yaw angles remained fixed at zero.

Realistic data from onboard sensors, namely an inertial measurement unit (IMU) and a potentiometer gimbal, were generated to enable sensor fusion. An extended Kalman filter (EKF) then combined the onboard and off-board measurements into a fused estimate of the state vector, which comprised the Euler angles and the gyroscope biases.
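The fusion idea can be sketched for a single pitch axis: the gyroscope rate, corrected by an estimated bias, propagates the pitch angle, and the camera-derived pitch corrects both the angle and the bias. With these linear single-axis models the EKF reduces to an ordinary linear Kalman filter, so that is what the sketch implements; the state [pitch, bias], the noise settings, the sinusoidal profile, and the synthetic sensor data are all hypothetical, and the potentiometer gimbal channel is not modeled.

```python
import math
import random


def kf_step(x, P, gyro, cam_pitch, dt, q_theta=1e-8, q_bias=1e-10, r_cam=1e-4):
    """One predict/update cycle for the state [pitch, gyro_bias]."""
    theta, bias = x
    # Predict: integrate the bias-corrected gyro rate; bias is a slow random walk.
    theta += (gyro - bias) * dt
    F = [[1.0, -dt], [0.0, 1.0]]
    FP = [[F[i][0] * P[0][j] + F[i][1] * P[1][j] for j in range(2)] for i in range(2)]
    P = [[FP[i][0] * F[j][0] + FP[i][1] * F[j][1] for j in range(2)] for i in range(2)]
    P[0][0] += q_theta
    P[1][1] += q_bias
    # Update with the camera pitch measurement (H = [1, 0]).
    S = P[0][0] + r_cam
    K0, K1 = P[0][0] / S, P[1][0] / S
    innovation = cam_pitch - theta
    theta += K0 * innovation
    bias += K1 * innovation
    P = [[(1.0 - K0) * P[0][0], (1.0 - K0) * P[0][1]],
         [P[1][0] - K1 * P[0][0], P[1][1] - K1 * P[0][1]]]
    return (theta, bias), P


def true_pitch(t):
    # Hypothetical sinusoidal pitch profile: 0.2 rad amplitude at 0.5 Hz.
    return 0.2 * math.sin(2.0 * math.pi * 0.5 * t)


def simulate(n=2000, dt=0.01, true_bias=0.02, seed=1):
    rng = random.Random(seed)
    x, P = (0.0, 0.0), [[1e-2, 0.0], [0.0, 1e-2]]
    cam_sq = fused_sq = 0.0
    for k in range(n):
        t0, t1 = k * dt, (k + 1) * dt
        # Synthetic gyro: mean rate over the step + constant bias + white noise.
        gyro = (true_pitch(t1) - true_pitch(t0)) / dt + true_bias + rng.gauss(0.0, 0.005)
        cam = true_pitch(t1) + rng.gauss(0.0, 0.01)  # synthetic camera pitch
        x, P = kf_step(x, P, gyro, cam, dt)
        cam_sq += (cam - true_pitch(t1)) ** 2
        fused_sq += (x[0] - true_pitch(t1)) ** 2
    return x, math.sqrt(cam_sq / n), math.sqrt(fused_sq / n)


(theta_est, bias_est), cam_rmse, fused_rmse = simulate()
print(f"bias estimate {bias_est:.4f} rad/s, camera RMSE {cam_rmse:.4f}, fused RMSE {fused_rmse:.4f}")
```

The small process-noise terms encode an assumption that the gyro is trusted over short intervals while the camera anchors the long-term angle; that division of labor is what lets the filter recover the bias.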

Research Findings

The results were compared with ground-truth data from the Simscape simulation, and the root-mean-square errors (RMSE) of the Euler angles and rates were calculated to quantify accuracy. The novel method proved accurate and robust, with RMSE values of 0.0101 rad/s, 0.0036 rad/s, and 0.0361 rad/s for the roll, yaw, and pitch rates, respectively. It achieved an accuracy of 98.08% for the pitch rate, the most important parameter for longitudinal motion, and a 45.41% improvement in accuracy over an earlier direction cosine matrix method.
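For reference, the RMSE metric used above is the square root of the mean squared difference between an estimated signal and the ground truth. The short sketch below computes it for one channel; the sample values are hypothetical and unrelated to the paper's data, and the paper's percentage-accuracy figures are not reproduced because their exact definition is not given here.

```python
import math


def rmse(estimate, truth):
    """Root-mean-square error between two equal-length sequences."""
    if len(estimate) != len(truth) or not truth:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))


# Hypothetical pitch-rate samples (rad/s), for illustration only.
truth = [0.0, 0.5, 0.0, -0.5]
estimate = [0.02, 0.48, -0.02, -0.52]
print(f"pitch-rate RMSE: {rmse(estimate, truth):.4f} rad/s")  # pitch-rate RMSE: 0.0200 rad/s
```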

The EKF state estimate was also highly accurate, with RMSE values of 0.0262 rad/s, 0.0090 rad/s, and 0.0034 rad/s for the pitch, roll, and yaw rates, respectively. Its pitch-rate accuracy of 98.6% likewise indicated an improvement over the direction cosine matrix method.

Furthermore, a sensitivity analysis evaluated how small perturbations in the identified aircraft skeleton points affect the Euler angles. The pitch angle was most sensitive to the positions of the nose tip and the midpoint of the horizontal stabilizer, while the roll and yaw angles were less sensitive to the positions of the wingtips and the vertical stabilizer tip. This suggests that improving the detection and tracking of these points could further enhance the method's accuracy.
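A sensitivity analysis of this kind can be approximated numerically by nudging one coordinate of a skeleton point and measuring the change in the computed angle. The sketch below does this with central differences for pitch only; defining pitch as the elevation of the line from the horizontal-stabilizer midpoint to the nose tip, in north-east-down axes, is an assumption for illustration, and the point names are hypothetical.

```python
import math


def pitch_from_points(nose, stab_mid):
    """Pitch (rad) of the stabilizer-to-nose line; NED axes (z down), nose-up positive."""
    dx, dy, dz = (nose[i] - stab_mid[i] for i in range(3))
    return math.atan2(-dz, math.hypot(dx, dy))


def sensitivities(nose, stab_mid, eps=1e-6):
    """Central-difference d(pitch)/d(coordinate) for each coordinate of both points."""
    out = {}
    for name in ("nose", "stab_mid"):
        for axis in range(3):
            pts = {"nose": list(nose), "stab_mid": list(stab_mid)}
            pts[name][axis] += eps
            hi = pitch_from_points(pts["nose"], pts["stab_mid"])
            pts[name][axis] -= 2 * eps
            lo = pitch_from_points(pts["nose"], pts["stab_mid"])
            out[(name, "xyz"[axis])] = (hi - lo) / (2 * eps)
    return out


# Level model with the nose and stabilizer midpoint 2 units apart.
for key, value in sensitivities([1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]).items():
    print(key, round(value, 6))
```

For a level model the vertical coordinates dominate, with a magnitude of 1/L rad per unit displacement for a nose-to-stabilizer distance L, which is consistent in spirit with the finding that pitch hinges on these two points.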

Conclusion

The novel technique proved robust and effective in estimating the attitude of aircraft models. It also shows promise for applications in critical fields such as air traffic control tower surveillance, marine operations, drone identification and classification, and missile dynamics tracking.

The technique's adaptability to different aircraft configurations and wind tunnel setups is notable, as it does not rely on physical markers or specific camera orientations. Future work could extend it to multiple degrees of freedom and various flight modes, including the short-period and phugoid modes, using a new three-degree-of-freedom rig currently under development.