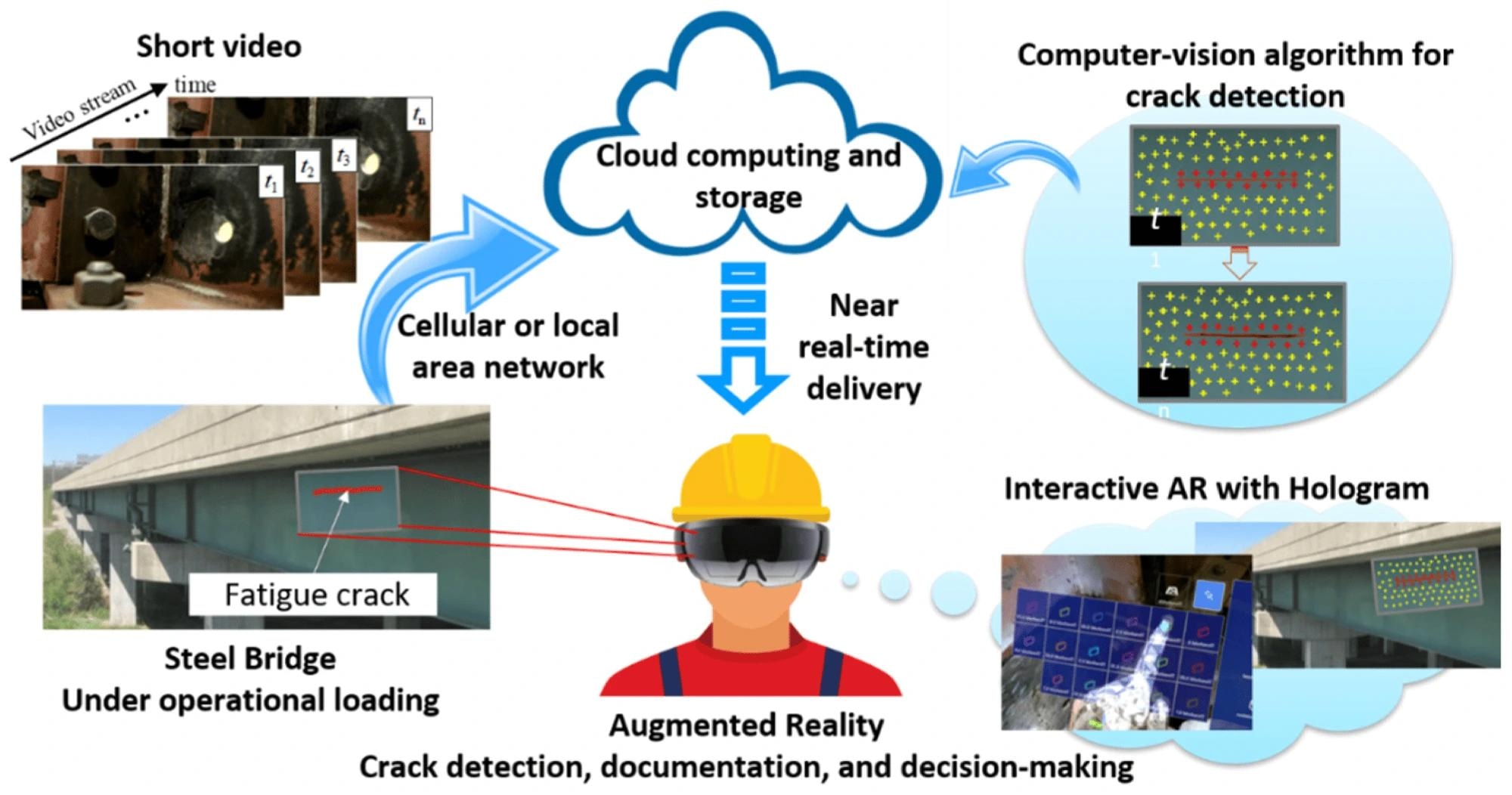

The method employs a computer vision algorithm to analyze structural surface motion in videos recorded with an augmented reality (AR) headset. It tracks feature points and measures variations in the distances between them, which lets it detect cracks efficiently without camera motion compensation. Results are displayed as holograms on the bridge surface in an AR environment, helping inspectors visualize findings and make decisions. Laboratory tests validated the approach, which shows significant potential for real-world applications.

Steel bridge fatigue crack inspection has traditionally relied on human vision, guided by Federal Highway Administration (FHWA) and state transportation guidelines. However, visual inspection has significant limitations: inspectors achieve only a 50% detection rate for 26 mm cracks and 90% for 113 mm cracks.

More reliable detection methods are crucial, given that 42% of United States (U.S.) bridges are over 50 years old and vulnerable to fatigue cracks. Image processing and artificial intelligence (AI)-based techniques often struggle to distinguish real cracks from similar-looking features such as corrosion marks. AR offers a promising solution, enhancing crack detection with additional visual information and interactive tools. Although AR devices have limited computational power, they can use wireless connections to offload data processing to external computers in real time.

Efficient AR-Based Crack Detection

The proposed human-centered bridge inspection process begins by creating a local area network (LAN) with a Wi-Fi router. The LAN connects an AR headset, such as the HoloLens 2 (HL2), to a server computer that runs the crack detection algorithm and stores data. Using the AR headset's camera, the bridge inspector records a short video of a fatigue-crack-prone region. The video is automatically transmitted to the server over the LAN and processed there for crack detection. Alternatively, the headset can connect to a remote server over a cellular network for data processing and storage.

A region of interest (ROI) is selected in the first video frame on the server, and distinctive feature points within it are tracked throughout the video. These features are grouped into local circular regions (LCRs), within which distances between feature point pairs are calculated. Significant distance variations, which indicate fatigue cracks, are identified by analyzing the distance history of each feature point pair within the LCRs. Because this method eliminates the need for global motion compensation (GMC), it improves computational efficiency and enables near-real-time results.
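The LCR-based screening can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's implementation: tracked coordinates are assumed to be available as one list of (x, y) positions per frame, an LCR is modeled as a circle of hypothetical radius `radius` around each point in the first frame, and a pair's distance variation is taken as the range (maximum minus minimum) of its distance history. The helper names, radius, threshold, and the choice of the range statistic are all assumptions.

```python
import math


def distance_variation(tracks, radius):
    """Return {(i, j): max distance - min distance} for every feature pair whose
    first-frame separation is within `radius` (assumed LCR model: j lies in the
    circle centred on i, and vice versa).

    tracks[t][k] is the (x, y) position of feature k in frame t.
    """
    first = tracks[0]
    variations = {}
    for i in range(len(first)):
        for j in range(i + 1, len(first)):
            if math.dist(first[i], first[j]) > radius:
                continue  # j is outside the LCR centred on i
            history = [math.dist(frame[i], frame[j]) for frame in tracks]
            variations[(i, j)] = max(history) - min(history)
    return variations


def flag_crack_points(tracks, radius, threshold):
    """Return indices of features that belong to at least one pair whose
    distance variation exceeds `threshold`."""
    flagged = set()
    for (i, j), variation in distance_variation(tracks, radius).items():
        if variation > threshold:
            flagged.update((i, j))
    return flagged


# Points 0 and 1 lie on one side of a crack; point 2 lies on the other side
# and moves away and back as the crack opens and closes.
tracks = [
    [(0.0, 0.0), (0.0, 5.0), (4.0, 0.0)],
    [(0.0, 0.0), (0.0, 5.0), (4.6, 0.0)],
    [(0.0, 0.0), (0.0, 5.0), (4.0, 0.0)],
]
print(sorted(flag_crack_points(tracks, radius=10.0, threshold=0.5)))  # [0, 2]
```

Here the pair (0, 2) straddles the crack and opens by 0.6, so both points are flagged, while the pair (0, 1) on the same side keeps a constant distance.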

Because the optimal threshold for crack detection is not known in advance, a range of threshold values is applied, producing a set of candidate detection results. These results are converted into holograms and overlaid onto the real-world view of the structure through the AR device. An interactive virtual menu lets the inspector toggle through the threshold values and select the optimal one, improving crack detection and supporting informed decision-making during the inspection.
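The threshold sweep can be illustrated with a short sketch that turns a map of pair distance variations into one candidate result per threshold. The `candidate_results` helper, the dictionary layout, and the numbers are hypothetical; in the described workflow, each candidate would instead be rendered as a hologram for the inspector to review.

```python
def candidate_results(variations, thresholds):
    """Map each threshold to the sorted feature indices it flags.

    variations: {(i, j): distance variation of pair (i, j)}, a hypothetical layout.
    """
    candidates = {}
    for threshold in thresholds:
        flagged = set()
        for (i, j), variation in variations.items():
            if variation > threshold:
                flagged.update((i, j))
        candidates[threshold] = sorted(flagged)
    return candidates


# Hypothetical distance variations (pixels) for four tracked feature points.
variations = {(0, 1): 0.05, (0, 2): 0.80, (1, 2): 0.40, (2, 3): 1.20}
for threshold, points in candidate_results(variations, [0.3, 0.6, 1.0]).items():
    print(f"threshold {threshold}: {points}")
# threshold 0.3: [0, 1, 2, 3]
# threshold 0.6: [0, 2, 3]
# threshold 1.0: [2, 3]
```

Lower thresholds flag more points, raising sensitivity at the cost of more false positives, while higher thresholds flag fewer. Keeping every candidate lets the inspector make the final call on site rather than relying on one fixed value.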

The proposed human-centered bridge inspection process empowered by AR and computer vision.

The proposed human-centered bridge inspection process empowered by AR and computer vision.

Enhanced Crack Detection

The proposed crack detection algorithm, adapted from previous work, identifies crack-induced surface motion under fatigue loading by analyzing the discontinuities that the opening and closing of cracks produce in the recorded video. Feature points with high intensity gradients are detected using the Shi–Tomasi algorithm and tracked across video frames using the Kanade–Lucas–Tomasi (KLT) tracker.
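The Shi–Tomasi criterion scores each pixel by the smaller eigenvalue of the 2×2 structure tensor built from image gradients in a small window, so corners, where intensity changes in two directions, score high while flat areas and straight edges score near zero. The pure-Python sketch below computes that score on a tiny synthetic image. It only illustrates the detection criterion: the window size, the central-difference gradients, and the test image are assumptions, and a practical pipeline would more likely call a library routine such as OpenCV's `cv2.goodFeaturesToTrack` and track the points with `cv2.calcOpticalFlowPyrLK` (a pyramidal KLT implementation).

```python
import math


def min_eigenvalue_response(img, half_window=1):
    """Shi-Tomasi score: smaller eigenvalue of the structure tensor at each pixel.

    img is a list of rows of grey levels. Pixels too close to the border for a
    full gradient window keep a score of 0.
    """
    h, w = len(img), len(img[0])
    # Central-difference gradients (left at zero on the outermost border).
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    score = [[0.0] * w for _ in range(h)]
    r = half_window
    for y in range(r + 1, h - r - 1):
        for x in range(r + 1, w - r - 1):
            a = b = c = 0.0  # structure tensor entries [[a, b], [b, c]]
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    ix, iy = gx[y + dy][x + dx], gy[y + dy][x + dx]
                    a += ix * ix
                    b += ix * iy
                    c += iy * iy
            # Smaller eigenvalue of a symmetric 2x2 matrix.
            score[y][x] = (a + c) / 2.0 - math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return score


# Synthetic 8x8 image: a bright square occupying the lower-right quadrant.
img = [[100 if (x >= 4 and y >= 4) else 0 for x in range(8)] for y in range(8)]
score = min_eigenvalue_response(img)
best = max(((y, x) for y in range(8) for x in range(8)),
           key=lambda p: score[p[0]][p[1]])
print(best)  # (4, 4): the square's corner
```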

Unlike methods that require GMC, this approach tracks changes in the distance between feature point pairs, a quantity that global camera motion largely leaves unchanged. Skipping GMC significantly improves computational efficiency and enables near-real-time detection.
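Why pair distances can stand in for motion compensation is easiest to see with a small numeric check: a rigid in-plane motion (rotation plus translation) moves both points of a pair together and leaves their separation unchanged, whereas a crack opening moves points on opposite sides of the crack apart. The sketch below uses made-up coordinates and models camera motion as a 2D rigid transform only; real headset motion also introduces scale and perspective changes, which this simplified sketch ignores.

```python
import math


def rigid_transform(point, angle_rad, tx, ty):
    """Rotate a point about the origin, then translate it (simulated camera motion)."""
    x, y = point
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x - s * y + tx, s * x + c * y + ty)


# Two feature points on opposite sides of a hypothetical vertical crack at x = 2.
left, right = (0.0, 0.0), (4.0, 0.0)
angle, tx, ty = math.radians(10), 15.0, -7.0

# Camera motion only: each point moves a lot, but their distance does not change.
moved_left = rigid_transform(left, angle, tx, ty)
moved_right = rigid_transform(right, angle, tx, ty)
print(round(math.dist(left, moved_left), 2))  # 16.55 (per-point displacement)
print(round(math.dist(left, right), 6), round(math.dist(moved_left, moved_right), 6))  # 4.0 4.0

# Same camera motion plus a 0.5-unit crack opening at the right-hand point.
opened_right = rigid_transform((4.5, 0.0), angle, tx, ty)
print(round(math.dist(moved_left, opened_right), 6))  # 4.5
```

A displacement-based check would see every point move by about 16.55 units and would need GMC to remove that motion first; the pair distance isolates the 0.5-unit opening directly.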

By evaluating LCRs around feature points, the algorithm identifies significant distance variations that indicate cracks, even in the presence of global camera movement. The results are visualized as clusters of highlighted feature points and presented as holograms in an AR environment, where inspectors can interactively select the optimal threshold value for accurate crack detection. This method improves both computational efficiency and crack detection accuracy, supporting more informed decision-making in field applications.

Quantifying Crack Detection

The study presents a comprehensive methodology for quantifying crack detection outcomes using vision-based approaches. It introduces a novel distance-based algorithm that tracks feature points to identify crack-induced surface motion in recorded videos.

Using density-based spatial clustering of applications with noise (DBSCAN), the method segments crack regions from the detected feature points. Evaluation metrics such as intersection over union (IOU) measure detection accuracy against ground-truth crack boundaries. The results show improved performance over previous displacement-based methods, with higher IOU scores and more precise crack localization in both 2D and 3D video scenarios.
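The clustering and scoring steps can be sketched with a compact DBSCAN implementation and a box-based IOU. Everything here is illustrative: the `eps` and `min_samples` values, the flagged-point coordinates, the hypothetical ground-truth box, and the choice to compare axis-aligned bounding boxes are assumptions, since the study compares detections against ground-truth crack boundaries whose exact representation is not given here. In practice, scikit-learn's `sklearn.cluster.DBSCAN` class provides the same clustering, and its `min_samples` likewise counts the point itself.

```python
import math


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: returns one label per point; -1 marks noise."""
    labels = [None] * len(points)
    # Each neighbourhood includes the point itself, as in the usual definition.
    neighbours = [[j for j, q in enumerate(points) if math.dist(p, q) <= eps]
                  for p in points]
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        if len(neighbours[i]) < min_samples:
            labels[i] = -1  # provisional noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbours[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbours[j]) >= min_samples:
                queue.extend(neighbours[j])  # j is a core point: keep expanding
    return labels


def bounding_box(points):
    xs, ys = [p[0] for p in points], [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def _area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


def box_iou(a, b):
    """Intersection over union of boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = _area(a) + _area(b) - inter
    return inter / union if union > 0 else 0.0


# Hypothetical flagged feature points: a dense group along a crack plus one outlier.
flagged = [(10, 10), (11, 12), (12, 14), (13, 16), (14, 18), (40, 5)]
labels = dbscan(flagged, eps=3.0, min_samples=2)
print(labels)  # [0, 0, 0, 0, 0, -1]
crack_pts = [p for p, lab in zip(flagged, labels) if lab == 0]
detected = bounding_box(crack_pts)     # (10, 10, 14, 18)
ground_truth = (9.0, 9.0, 15.0, 19.0)  # hypothetical annotated crack region
print(round(box_iou(detected, ground_truth), 3))  # 0.533
```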

Fatigue Load Impact

The study also conducted a parametric investigation into how the fatigue load level influences crack detection accuracy. Videos captured from two distinct viewpoints were analyzed across 10 fatigue load cases ranging from 0.9 to 22.2 kN.

IOU scores varied with load level and were generally higher at larger loads, indicating that crack detection improves as load intensity rises. The method consistently detected cracks with openings larger than 0.5 mm, performing robustly across load scenarios in both 2D and 3D video analyses. In addition, the AR environment supported efficient inspection by letting inspectors visualize and assess crack detection results in near-real-time.

Conclusion

In summary, the paper introduced a methodology for human-centered bridge fatigue crack inspection that integrates computer vision and AR. The approach used video feature tracking from a moving camera to detect fatigue cracks in near-real-time, supporting AR-enabled decision-making during inspections. It analyzed differential surface motion through feature points and successfully detected and localized fatigue cracks without requiring GMC.

Experimental validation on various specimens confirmed higher accuracy and computational efficiency than traditional methods, supported by IOU quantification and parametric studies of fatigue load effects. The developed AR environment, which features cloud database integration, hologram generation, and interactive tools, demonstrated its potential to enhance field inspection capabilities.