Pigeon eggs are rich in nutrients and have various health benefits, making them a high-quality nutritional product. However, pigeon eggshells are soft and prone to breakage, which affects the production efficiency and quality of pigeon egg breeding. To prevent the loss of pigeon eggs, fake eggs are often used to replace the real ones after they are laid, and then the real eggs are transferred to incubation equipment. Therefore, the rapid and accurate identification of pigeon eggs is crucial for the management of pigeon egg production.

Computer vision technology has been widely used for detecting various types of poultry eggs, such as chicken, duck, and goose eggs. However, research on pigeon egg detection is scarce, and most existing methods prioritize accuracy over efficiency and model size, both of which matter when deploying models on mobile platforms or robots. Moreover, the complex environment of pigeon coops, with dust, feces, feathers, and occlusion, makes pigeon eggs harder to detect.

About the Research

In the present paper, the authors developed the YOLOv8-PG egg detection model, based on the advanced YOLOv8 algorithm, which is versatile in supporting a range of computer vision tasks such as object detection, instance segmentation, and object tracking. The study enhances the YOLOv8 model across four key dimensions: the backbone network, neck network, loss function, and upsampling.

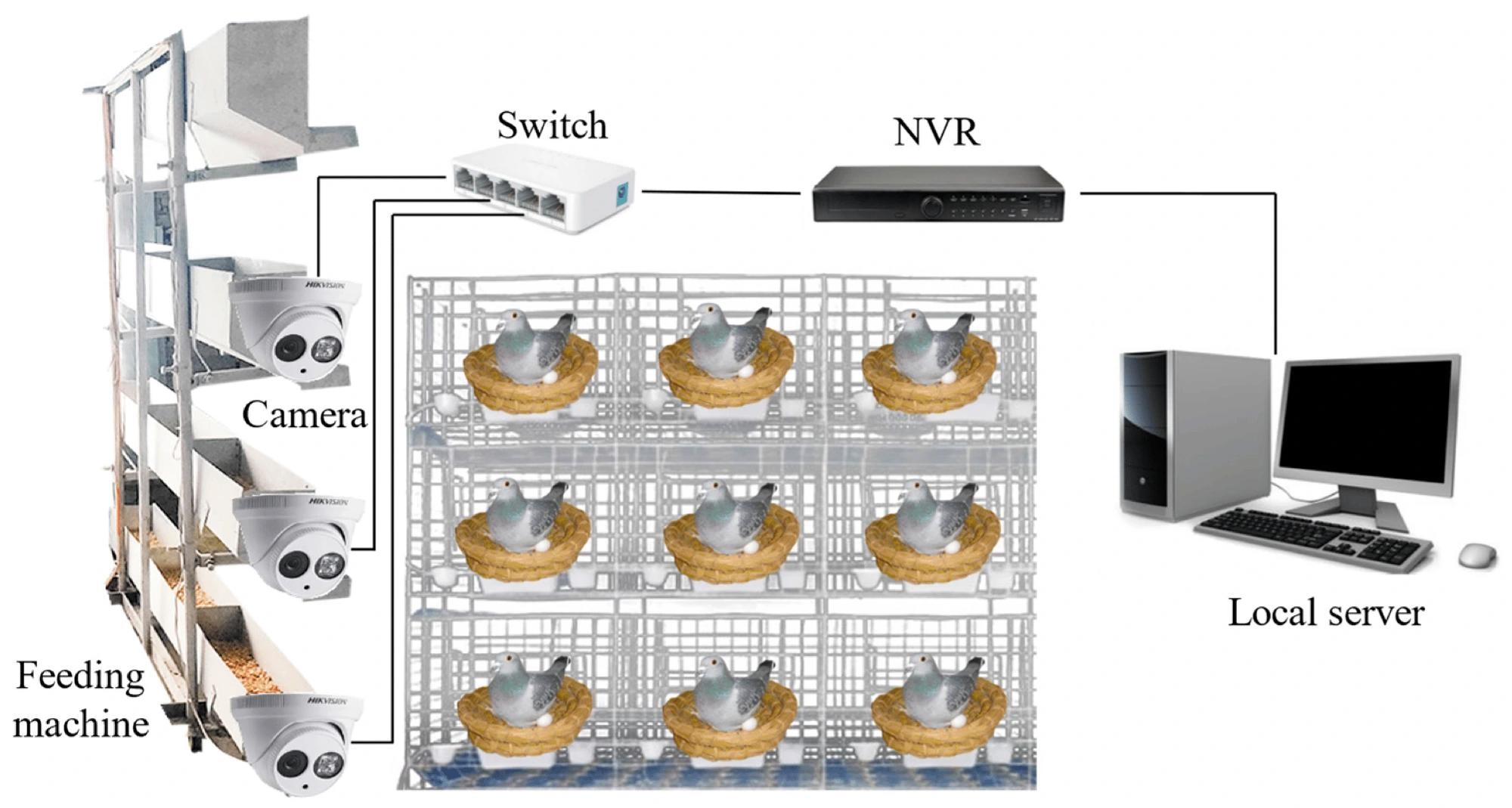

Image data acquisition hardware platform.


The backbone network, pivotal in feature extraction from input images, underwent significant refinement. The researchers replaced the bottleneck module within the cross-stage partial (CSP) bottleneck with the fasternet block, incorporating an efficient multi-scale attention (Fasternet-EMA) block. This integration not only reduced the model's parameter count and computational load via partial convolution but also enhanced its focus on essential features while mitigating interference from complex backgrounds.
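The saving from partial convolution can be illustrated with a rough operation count. The sketch below is not the authors' code: it assumes a stride-1 convolution with equal input and output channels, and a partial ratio of 1/4 (a commonly used setting for this kind of block, not a value stated in this article). The function names and the example feature-map size are hypothetical.

```python
def conv_macs(height, width, kernel, c_in, c_out):
    """Multiply-accumulate count of a kernel x kernel convolution
    over a height x width feature map (stride 1, same padding)."""
    return height * width * kernel * kernel * c_in * c_out


def partial_conv_macs(height, width, kernel, channels, ratio=0.25):
    """Partial convolution: only the first `ratio` share of the channels
    is convolved; the remaining channels are passed through untouched.
    The 0.25 default is an assumption for illustration."""
    c_partial = int(channels * ratio)
    return conv_macs(height, width, kernel, c_partial, c_partial)


# Hypothetical 40 x 40 feature map with 64 channels and a 3 x 3 kernel.
full = conv_macs(40, 40, 3, 64, 64)
partial = partial_conv_macs(40, 40, 3, 64)
print(f"regular conv: {full} MACs")
print(f"partial conv: {partial} MACs ({partial / full:.4f} of regular)")
```

With a ratio of 1/4, the convolved part costs 1/16 of a regular convolution over all channels, which is where the reduction in computational load comes from.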

Similarly, adjustments were made to the neck network, which fuses features from different scales. Within the CSP bottleneck with 2 convolutions (C2f) module, the bottleneck was replaced with the fasternet block to streamline processing. Additionally, Dysample, a dynamic upsampler based on point sampling, was introduced to enhance feature map resolution and improve detection capabilities, particularly for low-resolution and small-target objects.

The refinement extended to the loss function, responsible for quantifying disparities between predicted output and ground truth. The paper adopted the exponential moving average slide loss (EMASlideLoss), optimizing the SlideLoss function via the exponential moving average (EXPMA) approach. This adaptation addressed sample imbalance by assigning varying weights to samples of varying difficulty, thereby enhancing the model's robustness through parameter sliding averages.
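The idea of difficulty-dependent weights with a smoothed threshold can be sketched as follows. This is an illustration only, not the paper's implementation: the piecewise weight follows the commonly cited Slide Loss form (constant weight for easy negatives, boosted weight near the IoU threshold), and the decay of 0.9 and initial threshold of 0.5 are assumptions. The names `slide_weight` and `EmaThreshold` are hypothetical.

```python
import math


def slide_weight(iou, mu):
    """Sample weight as a function of its IoU and the threshold mu.
    Samples near the threshold (the hard ones) get the largest weight."""
    if iou <= mu - 0.1:
        return 1.0
    if iou < mu:
        return math.exp(1.0 - mu)
    return math.exp(1.0 - iou)


class EmaThreshold:
    """Tracks the threshold mu as an exponential moving average of the
    mean IoU seen in each batch, instead of using the raw batch mean."""

    def __init__(self, decay=0.9, initial=0.5):  # assumed values
        self.decay = decay
        self.value = initial

    def update(self, batch_mean_iou):
        self.value = self.decay * self.value + (1.0 - self.decay) * batch_mean_iou
        return self.value


threshold = EmaThreshold()
mu = threshold.update(0.7)  # threshold moves slowly toward the batch mean
for iou in (0.2, 0.5, 0.8):
    print(f"IoU={iou:.2f} mu={mu:.2f} weight={slide_weight(iou, mu):.3f}")
```

Smoothing the threshold keeps the weighting from jumping between batches, which is one plausible reading of the robustness benefit described above.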

Furthermore, the study established a comprehensive database of pigeon eggs from the Silver King breed, with multiple angles and labels for real and fake eggs. It then trained and tested the YOLOv8-PG model on this dataset, comparing its performance with other object detection models such as faster region-based convolutional neural network (Faster R-CNN), YOLO version 5 small (YOLOv5s), YOLO version 7 (YOLOv7), YOLO version 8 nano (YOLOv8n), and YOLO version 8 small (YOLOv8s).

Evaluation metrics, including F1-score, mean average precision (mAP), parameter count, and giga floating-point operations (GFLOPs, a measure of computational cost), were employed to assess the YOLOv8-PG model's efficacy. Additionally, ablation experiments were conducted to validate the impact of each enhancement on the YOLOv8n model's performance.
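For readers unfamiliar with the F1-score, it is the harmonic mean of precision and recall. The minimal sketch below computes it from detection counts; the counts in the example are made up for illustration and are not results from the paper.

```python
def f1_score(true_pos, false_pos, false_neg):
    """F1 = 2 * precision * recall / (precision + recall)."""
    detected = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 90 correct detections, 10 false alarms, 10 misses.
print(f"F1 = {f1_score(90, 10, 10):.3f}")
```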

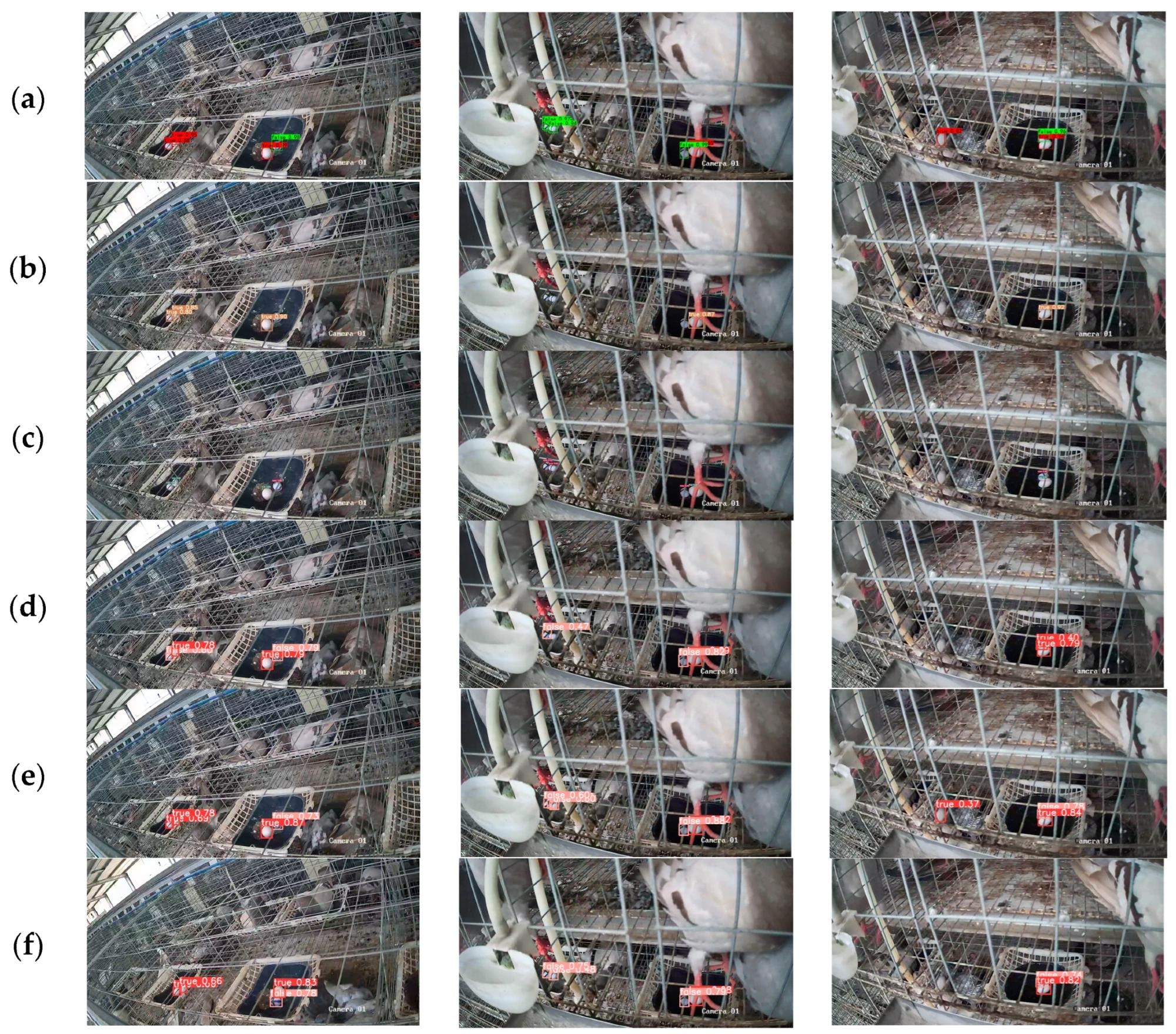

Real and fake pigeon egg-detection results of different models: (a) Faster R-CNN (b) YOLOv5s (c) YOLOv7 (d) YOLOv8n (e) YOLOv8s (f) YOLOv8n-PG.


Research Findings

The results showed that the YOLOv8-PG model achieved an F1-score of 98.3%, a mAP50 of 99.33%, a mAP75 of 89.46%, and a mAP50-95 of 70.42%, surpassing many other models in accuracy. It has a parameter count of 2.318M and a computational cost of 6.4 GFLOPs, both significantly lower than those of competing models, highlighting its efficiency and lightweight nature.

Compared to the baseline YOLOv8n model, the novel model exhibited improvements across various metrics, enhancing the F1-score by 0.76%, the mAP50 by 0.14%, the mAP75 by 4.45%, and the mAP50-95 by 1.56%, while reducing the parameter count by 24.69% and the GFLOPs value by 22.89%.

Moreover, the paper visually presented the detection results and heat maps generated by the YOLOv8-PG model, demonstrating its precise focus on the pigeon egg area while effectively minimizing background noise. Additionally, the analysis of AP values under different intersection over union (IoU) thresholds underscored the model's effectiveness for both real and fake pigeon eggs, particularly under high IoU thresholds.
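The IoU thresholds behind metrics such as mAP50 and mAP75 decide whether a predicted box counts as a correct detection. The sketch below shows the computation for axis-aligned boxes given as `(x1, y1, x2, y2)`; the box coordinates are invented for illustration and the function name is hypothetical.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
prediction = (2, 0, 12, 10)  # shifted 2 units to the right

overlap = box_iou(prediction, ground_truth)
print(f"IoU = {overlap:.3f}")
print("counts at IoU 0.50:", overlap >= 0.50)
print("counts at IoU 0.75:", overlap >= 0.75)
```

A slightly shifted box like this one passes the 0.50 threshold but fails at 0.75, which is why scores at stricter thresholds are a more demanding test of localization quality.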

Furthermore, the authors highlighted that the YOLOv8-PG model could be deployed on embedded devices or mobile platforms, such as Jetson and Raspberry Pi, due to its high accuracy and low computational complexity. Additionally, they suggested that the model could be extended to other poultry egg detection tasks, such as chicken, duck, and goose eggs, through adjustments to the dataset and network structure.

Conclusion

In summary, the novel approach proved effective at automatically detecting pigeon eggs in complex environments, which could help reduce the breakage rate and labor cost associated with pigeon egg breeding. However, the researchers acknowledge several limitations and challenges, including potential bias in the pigeon egg dataset and the need for model conversion and acceleration to facilitate deployment on edge devices or mobile platforms.

Moving forward, they suggest that future work could focus on enhancing the model's robustness and generalization ability, as well as evaluating its performance on embedded devices or mobile robotic platforms.

Journal reference:

- Jiang, T.; Zhou, J.; Xie, B.; Liu, L.; Ji, C.; Liu, Y.; Liu, B.; Zhang, B. Improved YOLOv8 Model for Lightweight Pigeon Egg Detection. Animals 2024, 14, 1226. https://doi.org/10.3390/ani14081226, https://www.mdpi.com/2076-2615/14/8/1226.