In a recent paper published in the journal Scientific Reports, researchers introduced SACA-StyleGAN (semi-automatic labeling of categories attention style-based generative adversarial network), a deep learning method that generates cast thin section images of tight oil reservoirs and semi-automatically annotates them. The approach aims to address the scarcity of thin section images and the resulting small datasets, which have hindered the intelligent identification of thin section components.

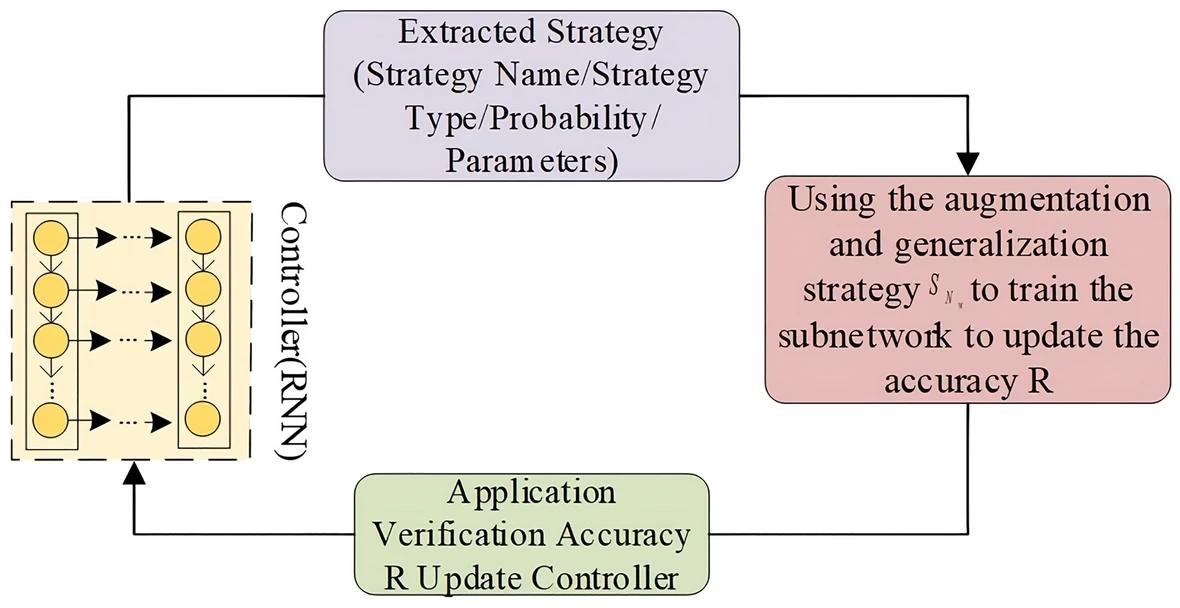

Search strategy for selecting image augmentation operations from Augment strategy space. Image Credit: https://www.nature.com/articles/s41598-024-63430-z


Background

Cast thin sections are essential for evaluating the properties of oil reservoirs, providing insights into formation characteristics, rock types, pore structures, and permeability. However, their creation is complex and expensive, leading to a shortage of available images. Moreover, the traditional annotation process, relying on geological experts, is labor-intensive and time-consuming.

To overcome these challenges, researchers have explored data augmentation techniques, including data transformation methods and GANs. Despite their promise, these methods struggle to deliver diverse data, good image quality, and high-resolution output, especially for thin sections with numerous fine particles, underdeveloped pores, and imbalanced data.

About the Research

In this study, the authors introduced SACA-StyleGAN, which integrates data transformation, oversampling, and data annotation to generate high-quality, diverse thin section images and to complete a preliminary annotation of each component. The algorithm comprises three modules: data construction, image generation, and semi-automatic annotation. The authors applied the method to the Fuyu reservoir in the Sanzhao Sag.

Data construction augments the original thin section image dataset to meet the data-quantity requirements of the StyleGAN network, increasing both the sample size and the diversity available for training. The authors designed a strategy space and a search algorithm to select and apply image transformation operations, such as rotation, scaling, and distortion, to the original images.
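The article does not specify the strategy space or the search algorithm. As a minimal sketch, assuming a small hypothetical space of rotation, scaling, and shear-style distortion operations with fixed magnitude choices, plus a plain random search scored by a toy pixel-change proxy (not the authors' criterion), augmentation-policy search could look like this in Python with NumPy. All function names, magnitudes, and the scoring rule are illustrative assumptions.

```python
import random

import numpy as np


def rotate(img, k):
    """Rotate by k * 90 degrees (lossless for square tiles)."""
    return np.rot90(img, k=int(k) % 4, axes=(0, 1))


def scale(img, factor):
    """Zoom in by `factor` (>= 1): center crop, then nearest-neighbour resize back."""
    h, w = img.shape[:2]
    ch, cw = max(1, int(h / factor)), max(1, int(w / factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows][:, cols]


def distort(img, shear):
    """Simple horizontal shear: shift row r by round(shear * r) pixels, wrapping around."""
    out = np.empty_like(img)
    for r in range(img.shape[0]):
        out[r] = np.roll(img[r], int(round(shear * r)), axis=0)
    return out


# Hypothetical strategy space: name -> (operation, candidate magnitudes).
STRATEGY_SPACE = {
    "rotate": (rotate, [1, 2, 3]),
    "scale": (scale, [1.1, 1.25, 1.5]),
    "distort": (distort, [0.05, 0.1, 0.2]),
}


def sample_policy(rng, n_ops=2):
    """Draw a sub-policy: n_ops distinct operations, each with a random magnitude."""
    names = rng.sample(sorted(STRATEGY_SPACE), n_ops)
    return [(name, rng.choice(STRATEGY_SPACE[name][1])) for name in names]


def apply_policy(img, policy):
    """Apply the operations of a sub-policy in order."""
    for name, magnitude in policy:
        img = STRATEGY_SPACE[name][0](img, magnitude)
    return img


def diversity_score(originals, augmented):
    """Toy proxy score: mean absolute pixel change (an assumption, not the paper's criterion)."""
    return float(np.mean([np.abs(a.astype(float) - o.astype(float)).mean()
                          for o, a in zip(originals, augmented)]))


def search_policies(images, score_fn, n_candidates=20, top_k=3, seed=0):
    """Random search: sample candidate policies, score each, keep the top_k."""
    rng = random.Random(seed)
    scored = []
    for _ in range(n_candidates):
        policy = sample_policy(rng)
        augmented = [apply_policy(im, policy) for im in images]
        scored.append((score_fn(images, augmented), policy))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [policy for _, policy in scored[:top_k]]


# Usage with random stand-ins for 64x64 RGB thin section tiles.
imgs = [np.random.default_rng(i).integers(0, 256, (64, 64, 3), dtype=np.uint8)
        for i in range(4)]
best = search_policies(imgs, diversity_score)
dataset = imgs + [apply_policy(im, p) for im in imgs for p in best]
```

In a real pipeline the score would more plausibly be something like the validation performance of a downstream model; random search is used here only because it is the simplest search strategy to illustrate.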

Image generation uses a modified StyleGAN, a GAN architecture that produces realistic, high-resolution images. The authors added a category attention mechanism to the original StyleGAN network so that the model focuses on information relevant to the different components and pores in the thin section images, improving image quality.
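The article does not describe the internals of the category attention mechanism. As a rough illustration of what an attention module inside a generator does, the sketch below substitutes a generic squeeze-and-excitation style channel attention: it pools each feature channel, passes the result through a small bottleneck, and rescales each channel by a learned gate between 0 and 1. This is not the authors' module; the weights `w1`, `w2` and the reduction ratio `r` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(features, w1, w2):
    """Squeeze-and-excitation style channel attention (a stand-in, not the paper's module).

    features: (C, H, W) feature map; w1: (C // r, C); w2: (C, C // r).
    Returns the reweighted features and the per-channel gates.
    """
    squeeze = features.mean(axis=(1, 2))      # global average pool -> (C,)
    hidden = np.maximum(0.0, w1 @ squeeze)    # ReLU bottleneck -> (C // r,)
    gates = sigmoid(w2 @ hidden)              # per-channel weights in (0, 1) -> (C,)
    return features * gates[:, None, None], gates


# Usage with random (untrained) weights.
rng = np.random.default_rng(0)
C, r = 8, 2
features = rng.normal(size=(C, 16, 16))
w1 = rng.normal(scale=0.1, size=(C // r, C))
w2 = rng.normal(scale=0.1, size=(C, C // r))
reweighted, gates = channel_attention(features, w1, w2)
```

In a trained network the gates would learn to amplify channels that carry information about, for example, pore boundaries and suppress less useful ones; a category-specific variant would presumably condition this weighting on component classes, which the sketch does not attempt.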

Semi-automatic annotation uses a semi-automatic labeling mechanism (SALM) to label the components and pores in the generated images. SALM converts each image's red-green-blue (RGB) pixel values to the YUV color space, where Y is luma (brightness) and U and V are the blue-difference and red-difference chroma components, and compares the values with the main distribution interval of each component and pore type to determine their edge position coordinates. It then encodes the results as a JavaScript Object Notation (JSON) annotation file, which can be fused with the images for analysis.
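The conversion-and-threshold idea can be sketched as follows. This is a minimal illustration, assuming the analog BT.601 RGB-to-YUV matrix (the article does not say which YUV variant the authors use), hypothetical per-component intervals in place of the paper's measured distribution intervals, 4-neighbour boundary pixels as the "edge position coordinates", and a hypothetical LabelMe-like JSON layout.

```python
import json

import numpy as np

# Analog BT.601 RGB -> YUV matrix (assumed variant; inputs scaled to [0, 1]).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],        # Y: luma
    [-0.14713, -0.28886, 0.436],  # U: blue-difference chroma
    [0.615, -0.51499, -0.10001],  # V: red-difference chroma
])

# Hypothetical intervals; real ones would come from the measured
# YUV distributions of each component and pore type.
INTERVALS = {
    "pore": {"U": (0.1, 0.6)},                          # strongly blue pixels
    "matrix": {"Y": (0.45, 0.55), "U": (-0.05, 0.05)},  # neutral mid-gray pixels
}


def rgb_to_yuv(rgb_uint8):
    """(H, W, 3) uint8 RGB -> (H, W, 3) float YUV."""
    rgb = rgb_uint8.astype(float) / 255.0
    return rgb @ RGB_TO_YUV.T


def component_mask(yuv, bounds):
    """True where every constrained YUV channel falls inside its interval."""
    mask = np.ones(yuv.shape[:2], dtype=bool)
    for idx, channel in enumerate("YUV"):
        if channel in bounds:
            lo, hi = bounds[channel]
            mask &= (yuv[..., idx] >= lo) & (yuv[..., idx] <= hi)
    return mask


def edge_coordinates(mask):
    """[x, y] of mask pixels with at least one 4-neighbour outside the mask."""
    p = np.pad(mask, 1, constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    ys, xs = np.nonzero(mask & ~interior)
    return [[int(x), int(y)] for x, y in zip(xs, ys)]


def annotate(image_name, rgb_uint8, intervals):
    """Build a hypothetical LabelMe-like JSON annotation string for one image."""
    yuv = rgb_to_yuv(rgb_uint8)
    shapes = []
    for label, bounds in intervals.items():
        mask = component_mask(yuv, bounds)
        if mask.any():
            shapes.append({
                "label": label,
                "edge_points": edge_coordinates(mask),
                "pixel_count": int(mask.sum()),
            })
    record = {
        "imagePath": image_name,
        "imageHeight": int(rgb_uint8.shape[0]),
        "imageWidth": int(rgb_uint8.shape[1]),
        "shapes": shapes,
    }
    return json.dumps(record)


# Synthetic 8x8 image: gray "matrix" with a blue 4x4 "pore" in the middle.
img = np.full((8, 8, 3), 128, dtype=np.uint8)
img[2:6, 2:6] = (40, 60, 200)
annotation = annotate("synthetic_0001.png", img, INTERVALS)
```

Here `edge_points` is an unordered set of boundary pixels rather than an ordered polygon; an annotation tool would typically trace these into contours before export.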

Methodology Evaluation

The authors conducted experiments to evaluate their method's performance in terms of image quality and annotation efficiency. These experiments assessed image sharpness, distortion, and annotation accuracy. Their method was compared with five similar image generation algorithms: StyleGAN, cycle-consistent GAN (CycleGAN), deep convolutional GAN (DCGAN), Wasserstein GAN with gradient penalty (WGAN-GP), and least squares GAN (LS-GAN).

Three metrics, Inception Score (IS), Fréchet Inception Distance (FID), and Kernel Inception Distance (KID), were used to measure the sharpness, diversity, and authenticity of the generated images. Peak Signal-to-Noise Ratio (PSNR) and Multi-Scale Structural Similarity Index (MS-SSIM) were used to evaluate image distortion. Finally, the method's annotations were compared with manual annotations by geological experts, using accuracy to assess annotation effectiveness.
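For readers unfamiliar with two of these metrics, the following NumPy sketch computes PSNR between two images and the unbiased KID estimate between two sets of feature vectors, using the cubic polynomial kernel commonly used for KID. It assumes 8-bit images and that Inception feature vectors have already been extracted (that step is omitted, and random vectors stand in for them); it is not the evaluation code used in the study.

```python
import numpy as np


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB; max_value=255 assumes 8-bit images."""
    mse = np.mean((reference.astype(float) - generated.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


def polynomial_kernel(a, b):
    """Cubic polynomial kernel k(x, y) = (x . y / d + 1)^3 used for KID."""
    d = a.shape[1]
    return (a @ b.T / d + 1.0) ** 3


def kid(real_feats, fake_feats):
    """Unbiased squared-MMD estimate between two (n, d) feature sets."""
    m, n = len(real_feats), len(fake_feats)
    k_rr = polynomial_kernel(real_feats, real_feats)
    k_ff = polynomial_kernel(fake_feats, fake_feats)
    k_rf = polynomial_kernel(real_feats, fake_feats)
    # Exclude the diagonal (self-similarity) terms for the unbiased estimate.
    term_rr = (k_rr.sum() - np.trace(k_rr)) / (m * (m - 1))
    term_ff = (k_ff.sum() - np.trace(k_ff)) / (n * (n - 1))
    return float(term_rr + term_ff - 2.0 * k_rf.mean())


# Usage with random stand-ins for precomputed Inception features.
rng = np.random.default_rng(0)
real = rng.normal(size=(200, 16))
similar = rng.normal(size=(200, 16))
shifted = rng.normal(loc=1.0, size=(200, 16))
kid_similar, kid_shifted = kid(real, similar), kid(real, shifted)

a = np.zeros((4, 4), dtype=np.uint8)
b = np.full((4, 4), 10, dtype=np.uint8)
psnr_ab = psnr(a, b)
```

Lower KID (like lower FID) means the generated feature distribution is closer to the real one, while higher PSNR means less pixel-level distortion relative to a reference image.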

Research Findings

Among the compared methods, SACA-StyleGAN achieved the highest IS (2.42), the lowest FID (23.85), and the lowest KID (0.04). It also attained the highest PSNR (21 dB) and an MS-SSIM of 95%, indicating that it generates high-quality, realistic images.
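To put the PSNR figure in perspective, and assuming 8-bit images with a peak value of 255 (the article does not state the bit depth), a PSNR of 21 dB corresponds to a mean squared error of roughly 516, or a root-mean-square difference of about 23 gray levels:

$$
\mathrm{PSNR} = 10\log_{10}\frac{255^2}{\mathrm{MSE}}
\;\Rightarrow\;
\mathrm{MSE} = \frac{255^2}{10^{21/10}} \approx \frac{65025}{125.9} \approx 516.5,
\qquad \sqrt{516.5} \approx 22.7
$$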

The method also achieved an average annotation accuracy of 95.6%, showing that it can reliably annotate the components and pores in thin section images. It was also highly efficient, annotating 500 images in about 3.5 minutes, compared with roughly 10 hours for manual annotation.
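Assuming the 10-hour manual figure refers to the same 500 images, the reported timings work out to roughly 0.42 seconds per image versus 72 seconds per image, a speedup of about 171 times:

$$
\frac{3.5 \times 60\ \mathrm{s}}{500} = 0.42\ \mathrm{s/image},\qquad
\frac{10 \times 3600\ \mathrm{s}}{500} = 72\ \mathrm{s/image},\qquad
\frac{36000\ \mathrm{s}}{210\ \mathrm{s}} \approx 171
$$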

Applications

The technique holds promise for oil and gas exploration, reservoir evaluation, petrology, mineralogy, and geology. By efficiently generating and annotating thin section images of tight oil reservoirs, it can supply a large volume of reliable data for tasks such as reservoir characterization, pore structure analysis, and permeability prediction.

The approach reduces dependence on geological experts for thin section annotation, saving time and cost in both thin section production and labeling. It could also be extended beyond tight oil reservoirs to other rock types such as carbonates, sandstones, and shales. By improving the efficiency and accuracy of thin section image recognition, it has the potential to substantially streamline geological analysis and exploration.

Conclusion

In summary, SACA-StyleGAN proved effective at generating and labeling thin section images of tight oil reservoirs. By semi-automating annotation, it substantially reduced the time and resources that manual annotation requires, offering a promising way around the limitations of existing data augmentation techniques. Future research should focus on improving the diversity and realism of the generated images, optimizing the annotation module, and extending the method to thin sections of other rock types.

Journal reference:

- Liu, T., Liu, Z., Zhang, K. et al. Research on the generation and annotation method of thin section images of tight oil reservoir based on deep learning. Sci Rep 14, 12805 (2024). DOI: 10.1038/s41598-024-63430-z, https://www.nature.com/articles/s41598-024-63430-z.