In a paper published in the journal Remote Sensing, researchers introduced a novel approach to remote sensing image dehazing that addresses the limitations of transformer networks by combining them with convolutional neural networks (CNNs), capturing both long-range dependencies and local contextual information.

Study: LCEFormer: Enhancing Remote Sensing Image Dehazing. Image Credit: BEST-BACKGROUNDS/Shutterstock

The local context-enriched transformer (LCEFormer) method incorporates a CNN-based adaptive local context enrichment module (ALCEM) into the transformer architecture. This integration enhances local context modeling while preserving global structure information.

Experiments on the dehazed image dataset (DHID), enhanced remote sensing image (ERICE), and remote sensing image dataset (RSID) benchmarks show that LCEFormer outperforms existing methods and achieves state-of-the-art performance in hazy scene restoration. On DHID, it surpasses dynamic collaborative inference learning (DCIL) in both peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM).

Background

Image dehazing research has shifted toward deep learning (DL) methods, especially CNNs and transformers. CNN-based approaches such as GridDehazeNet and the multi-scale boosting dense network (MSBDN) excel at capturing spatial information, while transformer-based methods such as Uformer focus on long-range dependencies. Hybrid models such as Trinity-Net combine the strengths of both. Despite the success of transformers, their computational cost and their weaker preservation of local detail remain challenges. Recent works have addressed these issues through patch-level attention and modulation techniques.

Proposed LCEFormer Methodology

The study describes LCEFormer's key building block, the LC transformer block (LCTB), and the loss function used in the framework. The overall pipeline is a U-shaped architecture built from stacked LCTBs. A convolution first maps the hazy remote sensing image to intermediate features, which pass through a four-level encoder in which each level comprises stacked LCTBs. Features are downsampled between consecutive encoder levels, and stacked LCTBs in the decoder recover the features. Final convolution operations then restore the haze-free image.
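
The encoder-decoder layout described above can be traced as a sequence of feature-map shapes. The following is a minimal sketch, not the authors' code. It assumes that each downsampling step halves the spatial size and doubles the channel count, that the base width is 32 channels, and that the deepest encoder level acts as the bottleneck; none of these values is given in the article, and `trace_unet_shapes` is a hypothetical helper.

```python
def trace_unet_shapes(height, width, base_channels=32, levels=4):
    """Return (stage, channels, height, width) tuples for a U-shaped network.

    Assumptions (not from the article): each downsampling halves H and W
    and doubles the channels; the deepest encoder level is the bottleneck.
    """
    factor = 2 ** (levels - 1)
    if height % factor or width % factor:
        raise ValueError("input size must be divisible by 2**(levels - 1)")

    encoder = []
    c, h, w = base_channels, height, width
    for level in range(levels):
        encoder.append((f"enc{level}", c, h, w))
        if level < levels - 1:  # downsample between consecutive encoder levels
            c, h, w = c * 2, h // 2, w // 2

    # The decoder revisits the encoder resolutions from deep to shallow,
    # skipping the deepest level, which is treated as the bottleneck.
    decoder = [
        (f"dec{level}", ch, hh, ww)
        for level, (_, ch, hh, ww) in reversed(list(enumerate(encoder[:-1])))
    ]
    return encoder + decoder


for stage in trace_unet_shapes(256, 256):
    print(stage)
```

Under these assumptions, a 256×256 input reaches 32×32 at the deepest level before the decoder restores the original resolution.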

The researchers introduced the LCTB to extract both local context and global structural information by integrating an ALCEM into the transformer block's self-attention module and feed-forward network. ALCEM strengthens the extraction of local contextual information so that image details are preserved during dehazing. It comprises splitting operations, convolution layers, and element-wise multiplications, and it captures intricate local context by enriching the input features.
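
The "split, convolve, multiply" pattern mentioned above can be illustrated with a small NumPy sketch. This is not the paper's ALCEM: the article does not give the number of branches, the kernel sizes, or how the branches are merged. The sketch assumes a two-way channel split, a depthwise 3×3 convolution on one half, and the other half used as an element-wise gate; `depthwise_conv3x3` and `gated_local_enrichment` are hypothetical names.

```python
import numpy as np


def depthwise_conv3x3(x, kernels):
    """Depthwise 3x3 convolution (cross-correlation) with zero padding.

    x: array of shape (C, H, W); kernels: array of shape (C, 3, 3).
    """
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((c, h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            # Each channel is filtered with its own 3x3 kernel.
            out += kernels[:, dy, dx, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def gated_local_enrichment(x, kernels):
    """Split channels in two, convolve one half, gate it with the other.

    Assumed structure for illustration only; returns shape (C // 2, H, W).
    """
    if x.shape[0] % 2:
        raise ValueError("channel count must be even")
    content, gate = np.split(x, 2, axis=0)        # splitting operation
    local = depthwise_conv3x3(content, kernels)   # convolution layer
    return local * gate                           # element-wise multiplication


rng = np.random.default_rng(0)
features = rng.standard_normal((8, 16, 16))
kernels = rng.standard_normal((4, 3, 3))
print(gated_local_enrichment(features, kernels).shape)
```

The gate lets the network scale each locally filtered response position by position, which is one common way to make local context adaptive.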

The locally enhanced attention (LEA) module, integrated with ALCEM, enhances the local contextual information of the query, key, and value vectors within the transformer block, improving the recovery of local details. The local continuous-enhancement feed-forward network (LCFN) addresses the limitations of existing feed-forward networks by incorporating ALCEM. It consists of convolution layers and ALCEM, which expand and reduce feature channels to preserve vital local context information for dehazing.
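
To make the idea of enriching the query, key, and value with local context concrete, here is a rough NumPy sketch. It is an illustration under stated assumptions, not the LEA module itself: a 3×3 mean filter stands in for ALCEM, the projections are plain 1×1 convolutions, and attention is computed globally over all pixels. `local_mean3x3` and `locally_enhanced_attention` are hypothetical names.

```python
import numpy as np


def local_mean3x3(x):
    """Stand-in for a local enrichment module: zero-padded 3x3 mean filter."""
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    acc = np.zeros((c, h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            acc += padded[:, dy:dy + h, dx:dx + w]
    return acc / 9.0


def softmax(scores, axis=-1):
    shifted = scores - scores.max(axis=axis, keepdims=True)  # numerical stability
    weights = np.exp(shifted)
    return weights / weights.sum(axis=axis, keepdims=True)


def locally_enhanced_attention(x, w_q, w_k, w_v):
    """Self-attention whose Q, K, and V each receive added local context.

    x: (C, H, W); w_q, w_k, w_v: (C, C) projection matrices.
    """
    c, h, w = x.shape
    tokens = []
    for weight in (w_q, w_k, w_v):
        projected = np.einsum("oc,chw->ohw", weight, x)   # 1x1 convolution
        enriched = projected + local_mean3x3(projected)   # add local context
        tokens.append(enriched.reshape(c, h * w).T)       # (H*W, C) tokens
    q, k, v = tokens
    attn = softmax(q @ k.T / np.sqrt(c))                  # (H*W, H*W) weights
    return (attn @ v).T.reshape(c, h, w)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
w_q, w_k, w_v = (rng.standard_normal((4, 4)) for _ in range(3))
print(locally_enhanced_attention(x, w_q, w_k, w_v).shape)
```

Global attention over every pixel pair scales quadratically with image size, so a practical model would likely restrict or restructure it; the article does not say how LCEFormer does this.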

Finally, the loss function used to train LCEFormer combines the Charbonnier and SSIM losses. The Charbonnier loss measures the pixel-wise difference between predicted and ground-truth images, while the SSIM loss quantifies their structural similarity. The two terms are weighted by balance factors so that the network trains effectively.
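
A combined loss of this kind can be sketched as follows. The Charbonnier term uses its usual form, the mean of sqrt((x − y)² + ε²), and the SSIM term is written as 1 − SSIM. Several details are assumptions rather than values from the article: the balance factor `ssim_weight`, the constant `eps`, and the use of a simplified SSIM computed from whole-image statistics instead of the sliding-window version normally used in practice.

```python
import numpy as np


def charbonnier_loss(pred, target, eps=1e-3):
    """Mean of sqrt(diff^2 + eps^2), a smooth variant of the L1 loss."""
    return float(np.mean(np.sqrt((pred - target) ** 2 + eps ** 2)))


def global_ssim(pred, target, data_range=1.0):
    """Simplified SSIM from whole-image statistics (no sliding window)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_p, mu_t = pred.mean(), target.mean()
    var_p, var_t = pred.var(), target.var()
    cov = ((pred - mu_p) * (target - mu_t)).mean()
    numerator = (2 * mu_p * mu_t + c1) * (2 * cov + c2)
    denominator = (mu_p ** 2 + mu_t ** 2 + c1) * (var_p + var_t + c2)
    return float(numerator / denominator)


def dehazing_loss(pred, target, ssim_weight=0.5, eps=1e-3):
    """Charbonnier loss plus a weighted (1 - SSIM) term.

    ssim_weight is a hypothetical balance factor, not the paper's value.
    """
    ssim_term = 1.0 - global_ssim(pred, target)
    return charbonnier_loss(pred, target, eps) + ssim_weight * ssim_term


rng = np.random.default_rng(0)
clear = rng.random((64, 64))
hazy_estimate = np.clip(clear + 0.1 * rng.standard_normal((64, 64)), 0.0, 1.0)
print(dehazing_loss(hazy_estimate, clear))
```

For identical images the SSIM term vanishes and the loss reduces to `eps`, which is the floor of the Charbonnier term.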

Remote Dehazing Datasets

Three datasets for remote sensing image dehazing, namely DHID, ERICE, and RSID, were used in the experiments, providing a diverse range of images for evaluation. The DHID dataset consists of 14,990 dense, hazy remote sensing images, with 14,490 in the training set and 500 in the test set, all at a resolution of 512×512 pixels. ERICE, derived from the RICE dataset, comprises 1,600 images for training and 400 for testing. RSID, a real-world dehazing benchmark, combines 900 images from the RSID dataset and 860 from the SateHaze1k dataset for training, with an additional 100 RSID images for testing.
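
The split sizes quoted above can be collected in one place and checked arithmetically. The counts below come from the article; the dictionary layout and the `split_summary` helper are hypothetical and only illustrate the bookkeeping.

```python
# Train/test counts as reported in the article.
DATASET_SPLITS = {
    "DHID": {"train": 14_490, "test": 500},
    "ERICE": {"train": 1_600, "test": 400},
    # RSID training set: 900 RSID images plus 860 SateHaze1k images.
    "RSID": {"train": 900 + 860, "test": 100},
}


def split_summary(splits):
    """Return {name: (total_images, test_fraction)} for each dataset."""
    summary = {}
    for name, counts in splits.items():
        total = counts["train"] + counts["test"]
        summary[name] = (total, round(counts["test"] / total, 4))
    return summary


for name, (total, test_fraction) in split_summary(DATASET_SPLITS).items():
    print(f"{name}: {total} images, test fraction {test_fraction}")
```

The DHID total of 14,990 matches the sum of its training and test sets, and ERICE uses an 80/20 split.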

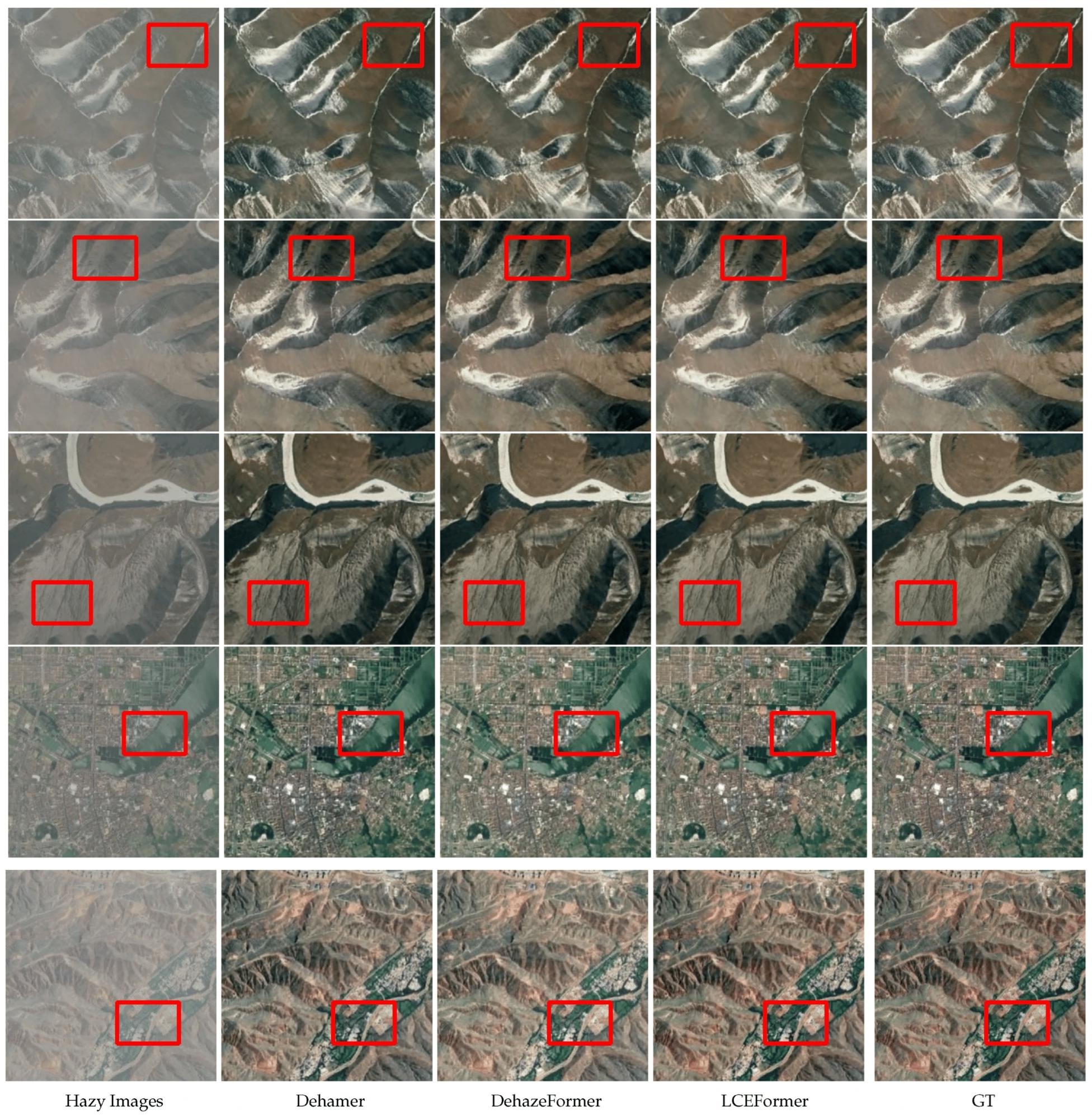

Dehazing results on the DHID dataset.

Evaluation metrics, including PSNR and SSIM, are employed across all experiments to assess dehazing performance. PSNR quantifies pixel-wise differences between predicted and ground-truth images, while SSIM measures image similarity. Both metrics are crucial for evaluating dehazing effectiveness.
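
For reference, PSNR follows directly from the mean squared error: PSNR = 10·log10(MAX² / MSE), where MAX is the largest possible pixel value. The sketch below assumes images scaled to [0, 1] (`data_range=1.0`), which the article does not specify; `psnr` is a hypothetical helper name.

```python
import numpy as np


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in decibels; higher means closer images."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


target = np.zeros((8, 8))
pred = np.full((8, 8), 0.1)   # uniform error of 0.1, so MSE = 0.01
print(round(psnr(pred, target), 2))  # 20.0
```

Because the scale is logarithmic, cutting the mean squared error by a factor of ten raises PSNR by 10 dB.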

The network is trained end-to-end on two NVIDIA RTX 3090 graphics processing units (GPUs), with a mini-batch size of 16 images per GPU. Data augmentation, including random rotations within 45-degree intervals, increases training diversity. The Adam with decoupled weight decay (AdamW) optimizer is used, with a weight decay of 10⁻³ and an initial learning rate of 1×10⁻³.
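
These settings could be gathered into a small configuration object, as in the sketch below. The field names and the `TrainConfig` class are hypothetical, and the weight decay and learning rate are read here as 10⁻³ and 1×10⁻³. The learning-rate schedule and iteration counts are left out because the article does not state them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Training settings reported in the article (field names are illustrative)."""
    num_gpus: int = 2             # two NVIDIA RTX 3090 GPUs
    batch_per_gpu: int = 16       # mini-batch size per GPU
    optimizer: str = "AdamW"
    weight_decay: float = 1e-3
    initial_lr: float = 1e-3

    @property
    def global_batch_size(self) -> int:
        # Images processed per optimizer step across all GPUs.
        return self.num_gpus * self.batch_per_gpu


config = TrainConfig()
print(config.global_batch_size)  # 32
```

With two GPUs and 16 images each, every optimizer step sees 32 images in total.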

The number of LCTBs for the 4-level encoder stage of LCEFormer is specified as follows: N0=1, N1=2, N2=2, N3=2. The decoder stage mirrors the encoder architecture. Model training iterations and image preprocessing techniques vary for each dataset. DHID images are randomly cropped from the original 512×512 resolution to 256×256 during training, while ERICE images remain unchanged. RSID incorporates images at two resolutions—512×512 and 256×256—each processed accordingly during training.
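
Random cropping for paired data must use the same window on the hazy input and its ground truth, otherwise the pixel-wise supervision is misaligned. The sketch below shows one way to do this for the 512×512 to 256×256 crop described for DHID; `paired_random_crop` is a hypothetical helper, and the authors' actual preprocessing code is not shown in the article.

```python
import numpy as np


def paired_random_crop(hazy, clear, size=256, rng=None):
    """Crop the same random size x size window from both images.

    hazy, clear: arrays of identical shape (H, W) or (H, W, C).
    """
    if hazy.shape != clear.shape:
        raise ValueError("hazy and clear images must have the same shape")
    height, width = hazy.shape[:2]
    if height < size or width < size:
        raise ValueError("crop size exceeds image size")
    rng = np.random.default_rng() if rng is None else rng
    top = int(rng.integers(0, height - size + 1))   # upper bound is exclusive
    left = int(rng.integers(0, width - size + 1))
    window = (slice(top, top + size), slice(left, left + size))
    return hazy[window], clear[window]


rng = np.random.default_rng(0)
hazy = rng.random((512, 512, 3))
clear = rng.random((512, 512, 3))
hazy_crop, clear_crop = paired_random_crop(hazy, clear, size=256, rng=rng)
print(hazy_crop.shape, clear_crop.shape)
```

Passing a seeded generator makes the crops reproducible, which helps when debugging a data pipeline.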

Dehazing results on the ERICE dataset.

Conclusion

In summary, the article presented LCEFormer, a method developed to remove haze from remote sensing images. The architecture consists of stacked LC transformer blocks (LCTBs) arranged in a U-shaped dehazing framework. The ALCEM gives the transformer blocks local contextual insight by extracting multi-scale features and integrating them through a gating mechanism.

Along with incorporating an LEA mechanism, this enhancement supplemented long-range information with local context. LCFN was designed to facilitate a more robust flow of local context information. The team rigorously experimented with three distinct datasets—DHID, ERICE, and RSID—to validate the efficacy of the proposed LEA and LCFN. Both quantitative and qualitative assessments affirmed the superiority of the LCEFormer over existing methods for remote sensing image dehazing tasks.

Article Revisions

- Apr 23 2024 - Addition of two images from the paper.