LLMPhy redefines problem-solving, blending advanced AI reasoning with physics simulation to tackle real-world challenges in object dynamics and stability.

Research: LLMPhy: Complex Physical Reasoning Using Large Language Models and World Models

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

In an article recently submitted to the arXiv preprint* server, researchers at Mitsubishi Electric Research Labs (MERL) introduced LLMPhy, a zero-shot optimization framework that combines large language models (LLMs) with physics engines for physical reasoning. Using the TraySim dataset, they addressed the challenge of predicting object dynamics after an impact on a tray.

LLMPhy combined the program synthesis capabilities and world knowledge of LLMs with physics engines to estimate system parameters and predict object stability, achieving state-of-the-art results in physical reasoning tasks involving both continuous and discrete optimization problems.

Background

Recent LLMs capture vast knowledge from multimodal data, which supports their use in complex problem-solving tasks, including code generation and optimization. LLMs have been explored in areas like robotic planning and physical reasoning, but their application in intricate physics-based tasks remains limited.

Traditional models struggle with physical reasoning as they cannot directly measure unobservable physical attributes, such as friction and damping, needed for accurate predictions. While recent research has utilized physics engines for simulations, prior works have only applied them in simplistic scenarios or without iterative optimization feedback loops.

To address these limitations, this paper introduced LLMPhy, a framework combining LLMs with non-differentiable physics engines to tackle complex physical reasoning tasks. Using the novel TraySim dataset, LLMPhy estimated physical parameters and predicted object stability under impact. This two-phase approach leveraged the program synthesis abilities of LLMs for dynamic scene understanding, enabling iterative optimization in both continuous and discrete settings.

Unlike prior works, LLMPhy established a closed feedback loop between the LLM and the physics engine, allowing LLM-generated programs to refine predictions with simulator feedback. The paper demonstrated that this LLM-physics engine synergy provided superior convergence rates and accuracy compared to existing methods.

Proposed Framework

Current LLMs struggle with applying physical principles directly to problem-solving, mainly due to their lack of interaction with dynamic scenes to estimate physical parameters. While training models explicitly for these tasks would require vast computational resources, LLMPhy overcame this by using advanced physics simulators as interactive tools to enhance LLMs' reasoning abilities.

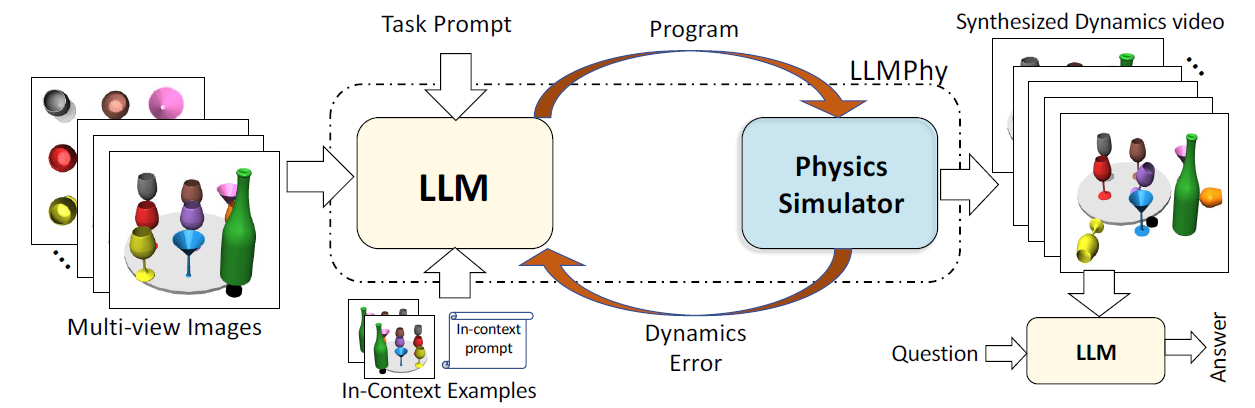

LLMPhy operated in two phases. In Phase 1, the LLM generated and refined programs to estimate key physical parameters (such as friction, mass, stiffness, and damping) by aligning simulated trajectories with observed object dynamics in a video. In Phase 2, the LLM used the inferred parameters to simulate scene dynamics, allowing it to predict outcomes in various setups. Throughout, the simulator provided iterative error feedback to adjust the LLM’s program and parameter estimates, forming an iterative black-box optimization approach.
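The Phase 1 feedback loop described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: `simulate` is a toy one-dimensional stand-in for a physics engine, `propose` is a hypothetical random-perturbation stand-in for the LLM's program synthesis step, and the parameter names, bounds, and squared-error measure are chosen for illustration.

```python
import random

def simulate(params, steps=200, dt=0.01):
    """Toy stand-in for a physics engine: an object sliding to rest."""
    v, x, traj = 1.0, 0.0, []
    for _ in range(steps):
        # Constant friction-like deceleration plus velocity-proportional damping.
        a = -params["friction"] * 9.81 - params["damping"] * v
        v = max(0.0, v + a * dt)
        x += v * dt
        traj.append(x)
    return traj

def trajectory_error(simulated, observed):
    """Mean squared difference between two trajectories of equal length."""
    return sum((s - o) ** 2 for s, o in zip(simulated, observed)) / len(observed)

def propose(history, rng):
    """Hypothetical stand-in for the LLM: perturb the best guess seen so far."""
    if not history:
        return {"friction": 0.5, "damping": 0.5}
    best_params, _ = min(history, key=lambda h: h[1])
    return {k: min(1.0, max(0.0, v + rng.gauss(0, 0.1)))
            for k, v in best_params.items()}

def estimate_parameters(observed, iterations=30, seed=0):
    """Closed loop: propose parameters, simulate, feed the error back."""
    rng = random.Random(seed)
    history = []  # (params, error) pairs shown to the proposer each round
    for _ in range(iterations):
        params = propose(history, rng)
        err = trajectory_error(simulate(params), observed)
        history.append((params, err))
    return min(history, key=lambda h: h[1])

# Usage: recover parameters from a trajectory generated with known values.
observed = simulate({"friction": 0.05, "damping": 0.2})
best_params, best_err = estimate_parameters(observed)
```

The simulator is treated as a black box here: only the scalar error flows back to the proposer, so no gradients through the physics engine are needed.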

LLMPhy bridged the gap between linguistic and physical reasoning capabilities by combining LLMs with simulation engines, enabling models to solve highly intricate physics-based tasks that mimic real-world scenarios. This approach not only reduced dependency on labeled data but also paved the way for more computationally efficient and physics-informed artificial intelligence.

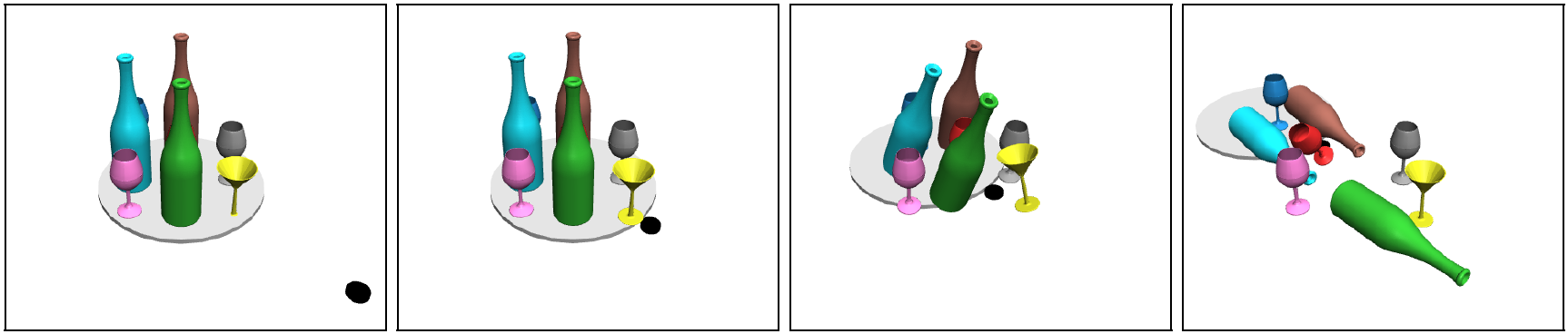

Frames from an example dynamical sequence in our TraySim dataset. The left-most frame shows the first frame of the scene with many objects on the tray, which will be impacted by a black pusher (bottom right). The subsequent frames show the state of the system at the 25th, 50th, and 200th time steps (each step is 0.01s). Our task is for a model to reason through the system's dynamics and predict the stability of each object on the tray at the end of the episode in a zero-shot manner when given only the first frame of the sequence as input.

Performance Evaluation and Optimization

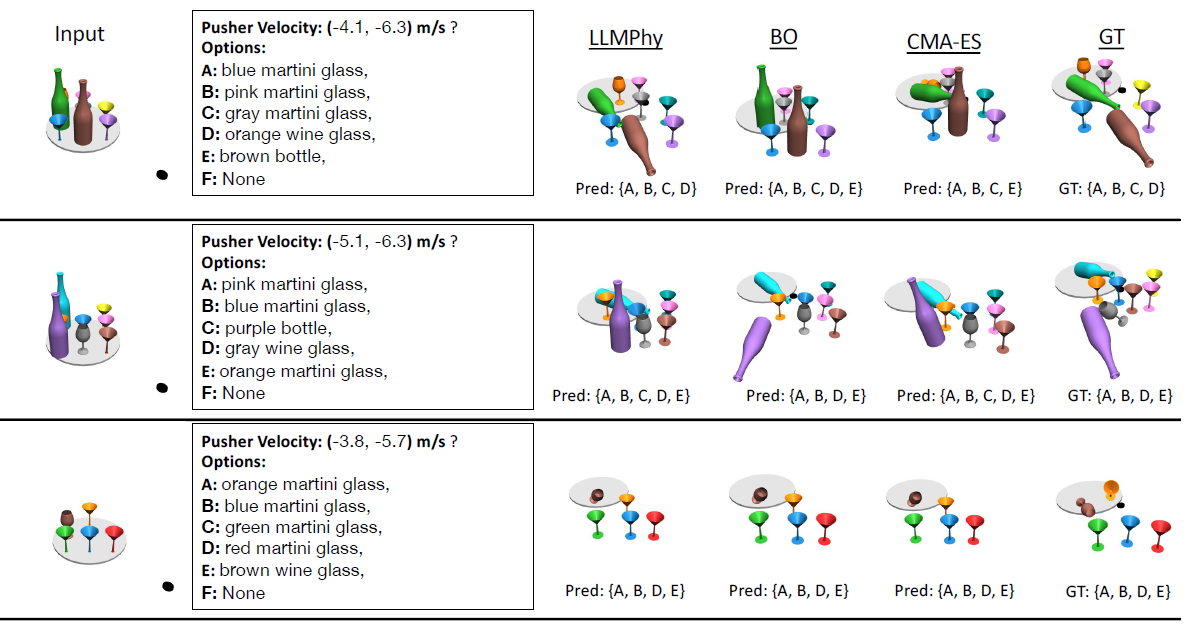

The LLMPhy framework was evaluated on the TraySim dataset, created with the MuJoCo physics engine, which simulates object interactions based on Newtonian physics. In this environment, objects like wine glasses and bottles were placed on a tray with randomized attributes, and their trajectories, stability, and forces were recorded from multiple camera views.

The dataset comprised 100 unique sequences, each testing object stability and containing objects identified by color, type, and location. Physical parameters such as friction and damping varied within preset ranges, and each simulation ran for 200 time steps to capture object movement under different impact conditions.
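As a rough illustration of how such an episode could be configured, the sketch below samples physical parameters uniformly within ranges and computes the episode length from the 200 time steps and the 0.01 s step size given in the figure caption. The range values and the choice of uniform sampling are placeholder assumptions, since the article does not state the actual ranges or sampling scheme.

```python
import random

TIME_STEPS = 200       # steps per simulation, as stated in the article
STEP_SECONDS = 0.01    # step size, from the figure caption

# Placeholder ranges for illustration only; the real preset ranges are not given.
PARAM_RANGES = {
    "friction": (0.0, 1.0),
    "damping": (0.0, 1.0),
}

def sample_parameters(rng):
    """Draw each physical parameter uniformly from its (assumed) range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}

def episode_duration():
    """Simulated time covered by one episode, in seconds."""
    return TIME_STEPS * STEP_SECONDS

rng = random.Random(0)
params = sample_parameters(rng)
duration = episode_duration()  # roughly two seconds of simulated time
```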

Illustration of the key components of LLMPhy and the control flow between LLM, physics simulator, and the varied input modalities and examples.

LLMPhy used a different LLM in each of its two phases. In Phase 1, the OpenAI o1-mini model predicted physics parameters based on object trajectories and example contexts. In Phase 2, GPT-4o, a vision-and-language model, determined object positioning from multiview images, generating code to recreate the scene layout and iteratively adjusting it based on feedback. The framework converged quickly, running up to 30 iterations in Phase 1 and five in Phase 2 to optimize trajectory and visual accuracy.

Evaluated against Bayesian optimization (BO) and covariance matrix adaptation evolution strategy (CMA-ES), LLMPhy achieved 65.1% accuracy with ground truth data, outperforming prior methods. Object localization accuracy reached 68.7% for color and location matches, with feedback improving overall accuracy. The results showed that LLMPhy converged significantly faster than BO and CMA-ES and adapted well to physical and layout adjustments, demonstrating its strength in complex physical prediction tasks through continuous and discrete optimization approaches.
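To make the reported accuracy figures concrete, the following sketch computes accuracy as the fraction of objects whose predicted stable/unstable label matches the ground truth. This definition is an assumption made for illustration; the article does not spell out how the paper computes its metric, and the object names used here are hypothetical.

```python
def stability_accuracy(predicted, actual):
    """Fraction of objects whose predicted stability label matches the ground truth.

    Both arguments map an object identifier to True (stable) or False (toppled).
    """
    if predicted.keys() != actual.keys():
        raise ValueError("predicted and actual must cover the same objects")
    if not actual:
        raise ValueError("at least one object is required")
    correct = sum(predicted[name] == actual[name] for name in actual)
    return correct / len(actual)

# Usage with hypothetical object names: three of four labels match.
predicted = {"red_bottle": True, "blue_glass": False, "green_glass": True, "pink_bottle": True}
actual = {"red_bottle": True, "blue_glass": False, "green_glass": False, "pink_bottle": True}
score = stability_accuracy(predicted, actual)
```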

Conclusion

In conclusion, the authors introduced LLMPhy, a zero-shot optimization framework combining LLMs with physics engines for predicting object dynamics. Using the TraySim dataset, LLMPhy estimated physical parameters and predicted object stability post-impact on a tray. It leveraged the capabilities of LLMs in program synthesis and physics understanding, with iterative optimization loops to improve predictions.

The framework outperformed existing methods, achieving higher accuracy and faster convergence in physical reasoning tasks. LLMPhy demonstrated the potential of LLMs in solving real-world complex physics-based problems without the need for extensive model training, suggesting a new approach to leverage implicit knowledge in LLMs for optimization tasks.

Journal reference:

- Preliminary scientific report.

Cherian, A., Corcodel, R., Jain, S., & Romeres, D. (2024). LLMPhy: Complex Physical Reasoning Using Large Language Models and World Models. arXiv. DOI: 10.48550/arXiv.2411.08027, https://arxiv.org/abs/2411.08027