The International Scientific Report on the Safety of Advanced AI is the result of a collective effort by 96 international AI experts. Chaired by Professor Yoshua Bengio from the Université de Montréal and the Mila - Quebec AI Institute, the report was commissioned following agreements at global summits on AI safety. It provides a scientific synthesis of the potential risks and capabilities of general-purpose AI (GPAI), focusing on assessing emerging challenges and mitigation strategies. Contributors represent institutions from 30 countries, alongside representatives from the UN, the EU, and the OECD. The report follows the Bletchley Park AI Safety Summit (2023) and builds on the interim version presented at the AI Seoul Summit (May 2024), incorporating feedback from scientists, industry, and civil society organizations to improve scientific rigor and relevance for policymakers.

The rapid evolution of AI capabilities has made this report essential for shaping global policies. Governments, researchers, and industries must collectively work to mitigate AI-related risks while maximizing its benefits. The report does not prescribe specific policies but offers a structured analysis of AI capabilities, associated risks, and possible risk management approaches. It acknowledges that AI policy decisions must balance potential risks with the economic and social benefits AI can provide, a dilemma policymakers continue to grapple with.

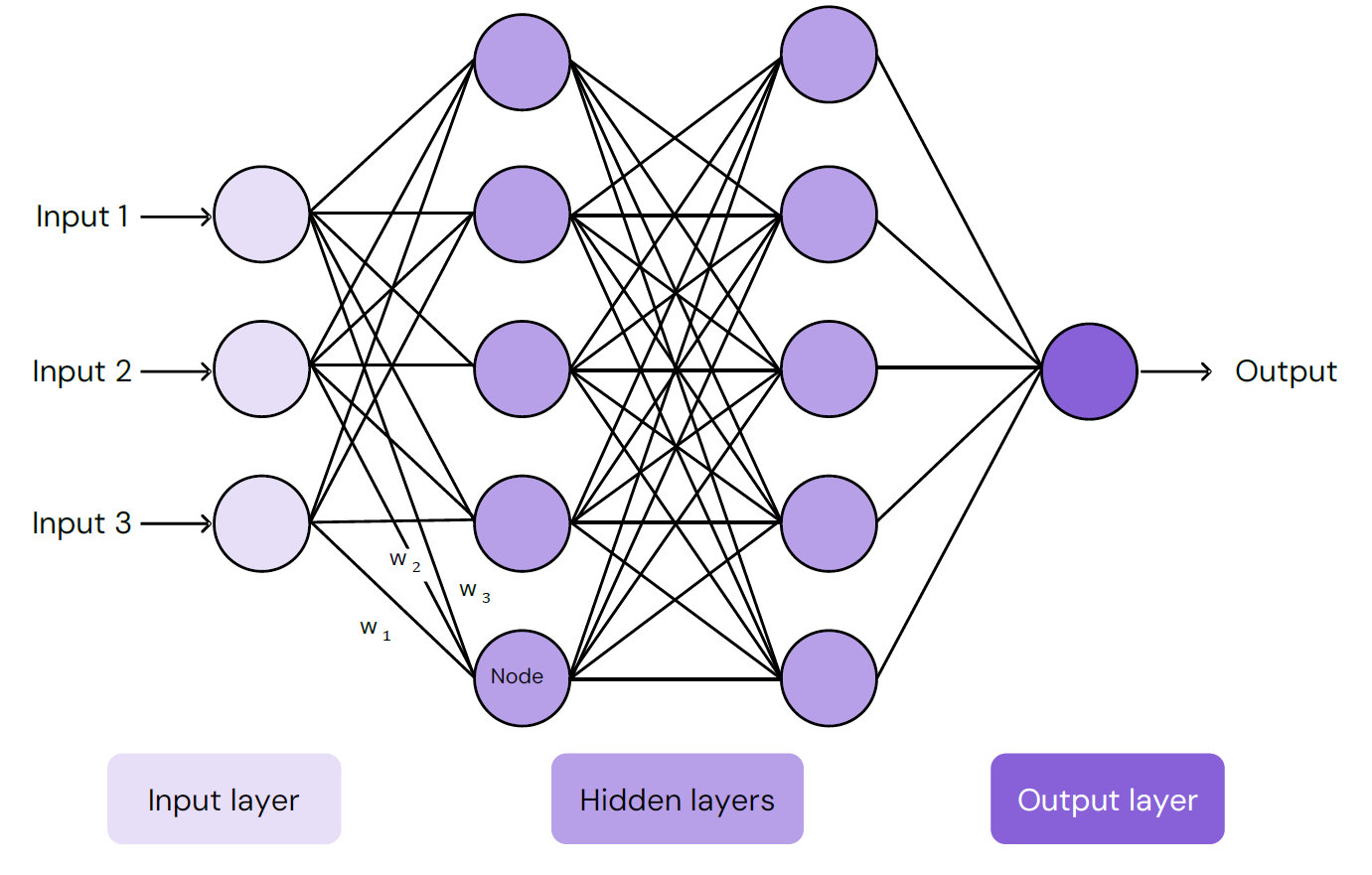

Today's general-purpose AI models are neural networks, which are inspired by the animal brain. These networks are composed of connected nodes, and the strengths of the connections between nodes are called 'weights'. Weights are updated through iterative training on large quantities of data. Source: International AI Safety Report.
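As a rough illustration of how a weight is updated through iterative training, the minimal Python sketch below fits a single weight by gradient descent. It is a toy under stated assumptions, not a description of how the models covered by the report are trained: the function name `train_single_weight`, the three data points, the learning rate, and the squared-error objective are all chosen here purely for illustration.

```python
def train_single_weight(data, lr=0.1, epochs=50):
    """Fit y ≈ w * x by gradient descent on the mean squared error.

    Hypothetical toy example: one weight, one input, no bias term.
    """
    w = 0.0  # initial connection strength (the 'weight')
    for _ in range(epochs):
        # Gradient of mean((w * x - y) ** 2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        # Nudge the weight in the direction that reduces the error.
        w -= lr * grad
    return w


# Illustrative data generated by the rule y = 2 * x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w = train_single_weight(data)
print(f"learned weight: {w:.4f}")
```

Each pass over the data moves the weight a little closer to the value that best explains the examples. Real general-purpose models apply the same basic idea to very large numbers of weights and far larger datasets.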

The Growing Capabilities of General-Purpose AI

The report, posted to the arXiv preprint* server, highlights the remarkable acceleration in AI capabilities, particularly in natural language processing, computer programming, and scientific reasoning. AI models such as GPT-4, Gemini, and Claude 3.5 Sonnet have achieved performance levels comparable to human experts in many tasks. AI agents capable of planning and executing tasks autonomously are being developed, further expanding the scope of AI applications.

Recent advancements indicate that further improvements in AI capabilities could be extremely rapid. Developers have focused on scaling AI models, using increased computational resources and improved training methodologies to enhance performance. A key innovation known as inference scaling lets a model spend more computation at run time on harder problems, for example by working through a longer chain of reasoning before it answers. If current trends continue, general-purpose AI models could surpass human-level performance in multiple cognitive domains within a few years.
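One simple way to see why extra computation at answer time can help is majority voting over repeated attempts. The sketch below is a swapped-in toy, not the method used by any model named in this article: it assumes each attempt is independently right with probability `p`, that answers are simply right or wrong, and that the final answer is whatever a strict majority of `n` attempts agree on. The function name `majority_vote_accuracy` and the value `p = 0.6` are hypothetical.

```python
from math import comb


def majority_vote_accuracy(p, n):
    """Probability that a strict majority of n independent attempts is correct.

    Assumes each attempt is correct with probability p and that n is odd,
    so ties cannot occur.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# More attempts per question means more computation spent at answer time.
for n in (1, 3, 5, 15):
    print(f"{n:>2} attempts -> accuracy {majority_vote_accuracy(0.6, n):.3f}")
```

Under these assumptions, accuracy climbs as more attempts are sampled, which is the basic trade that inference scaling makes: better answers in exchange for more computation per question. Harder problems would justify a larger `n`.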

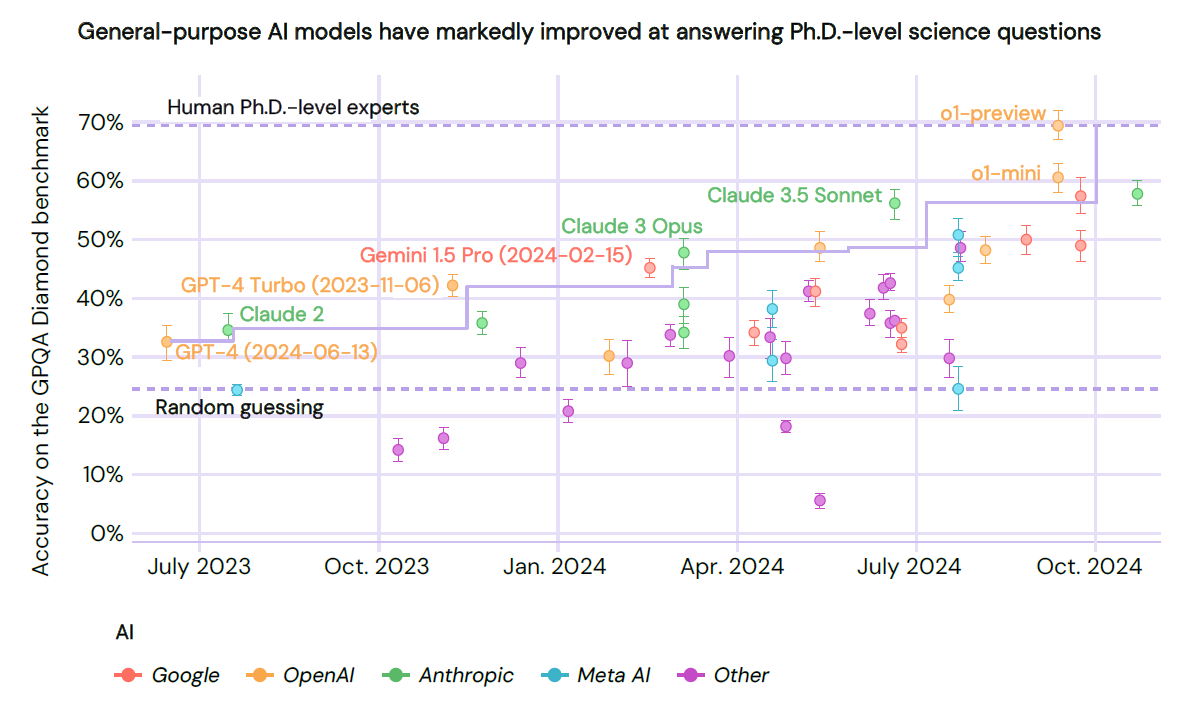

Since the publication of the Interim Report (May 2024), general-purpose AI models have seen rapid performance increases in answering PhD-level science questions. Researchers have been testing models on GPQA Diamond, a collection of challenging multiple-choice questions about biology, chemistry, and physics, which people without PhD-level expertise in each area are unable to correctly answer even with access to the internet. On these tests, accuracy rose from 33% with GPT-4 in June 2023 (slightly above random guessing) to 49% with GPT-4o in May 2024, reaching 70% (matching experts with a PhD in the area of each question) with o1-preview in September 2024. This increase is partly due to o1-preview writing a long ‘chain of thought’ in which it can break down the problem and try different approaches before producing its final answer.
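The size of these jumps is easier to judge with the arithmetic written out. The short Python sketch below uses only the three scores quoted above. The guessing baseline of 25% is an assumption that each question has four answer options, which the article does not state; the variable names are illustrative.

```python
# Scores quoted in the caption above (percent correct on GPQA Diamond).
scores = [
    ("GPT-4", "June 2023", 33),
    ("GPT-4o", "May 2024", 49),
    ("o1-preview", "September 2024", 70),
]

# Assumption (not stated in the article): four answer options per question,
# so blind guessing would score 25%.
CHANCE = 100 / 4

# Percentage-point gain from each model to the next.
gains = [later[2] - earlier[2] for earlier, later in zip(scores, scores[1:])]

# How far each score sits above the guessing baseline.
above_chance = {name: pct - CHANCE for name, _, pct in scores}

for name, date, pct in scores:
    print(f"{name} ({date}): {pct}% correct, "
          f"{above_chance[name]:.0f} points above chance")
print("Gains between successive models:", gains)
```

On that assumption, the gap above chance grew from 8 points to 45 points in roughly fifteen months, with the larger of the two jumps coming in the four months between GPT-4o and o1-preview.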

Risks Associated with AI: Malicious Use, Malfunctions, and Systemic Threats

While AI offers significant benefits, the report identifies three primary categories of risks: malicious use, malfunctions, and systemic risks.

Malicious Use

AI can be leveraged for criminal activities, including scams, deepfake content, and cyberattacks. Advanced AI-generated fake media poses risks to personal privacy, democracy, and social stability. AI-powered cyber offense has also advanced, with AI systems capable of discovering and exploiting software vulnerabilities. Recent research shows that AI can autonomously find and exploit security flaws, with one study demonstrating an AI-assisted attack that identified a previously unknown software vulnerability.

Furthermore, AI-assisted biological and chemical research raises concerns about potential misuse in biosecurity threats. A leading AI company recently upgraded its biological risk assessment from "low" to "medium" due to AI's growing ability to assist in hazardous domains. Tests conducted by researchers found that AI systems could provide instructions and troubleshooting guidance for synthesizing harmful biological agents, in some cases outperforming human experts with internet access. However, the real-world feasibility of such misuse still requires significant additional resources and expertise.

Malfunctions and Unintended Consequences

AI systems can malfunction, producing unreliable outputs, biased responses, or unsafe recommendations. Issues of AI bias remain pervasive, influencing decisions in healthcare, hiring, and law enforcement. Recent studies have identified new, more subtle forms of bias in AI outputs, including disparities in medical decision-making tools and hiring algorithms that disproportionately favor certain demographics.

Loss-of-control scenarios, in which AI systems act unpredictably or autonomously against human interests, remain a topic of active debate. While current AI lacks the autonomy needed to pose an existential risk, future advancements could introduce more complex control challenges. Some researchers argue that AI's increasing ability to autonomously use digital systems and evade oversight could lead to control-related risks emerging sooner than previously anticipated.

Systemic Risks and Broader Implications

The widespread deployment of AI could have profound societal consequences. Labor market disruptions are expected as automation displaces jobs, though new opportunities may emerge. Recent labor market data indicate that AI adoption is happening at an unprecedented rate, with some sectors integrating AI more rapidly than others. While this could lead to productivity gains, it also raises concerns about uneven economic impacts.

The global AI R&D divide is growing, with most cutting-edge AI research concentrated in a few high-income nations, raising concerns about global inequality. Market concentration among a few major AI firms introduces economic risks, including monopolistic control over AI advancements. Additionally, AI development is increasingly energy-intensive, raising environmental concerns. The report warns that AI's computational demands are escalating rapidly, with some forecasts predicting a 100-fold increase in required computing power by 2026, exacerbating energy consumption and environmental costs.

Open-Weight Models: Balancing Transparency and Risk

One critical factor in AI risk evaluation is whether models are released as open-weight (publicly accessible) or closed-weight (restricted access). Open-weight models promote innovation and transparency but also increase the risk of misuse, as they can be modified for harmful purposes. The report introduces the concept of marginal risk—assessing whether releasing an open-weight model would increase risks beyond those already posed by closed models and existing technologies.

Strategies for Risk Management

Risk management strategies include technical safeguards, regulatory frameworks, and improved oversight.

AI Risk Assessments and Safety Measures

- Pre-release testing: AI models undergo rigorous testing, but current evaluations have limitations in predicting real-world behavior.

- Interpretability research: Efforts to understand AI decision-making processes are ongoing, though progress remains slow. The report notes that while some advancements have been made in explaining AI outputs, existing techniques still fail to reliably interpret AI decision-making in many cases.

- Adversarial training: AI models are trained against potential threats, but adversaries can still circumvent safeguards.

- Monitoring and intervention: AI-generated content detection tools exist but remain imperfect. While monitoring techniques are improving, skilled users can often bypass content restrictions, highlighting the need for more robust detection methods.

Governments and companies are exploring early warning systems and risk management frameworks to balance the potential benefits and dangers of AI advancements. Some proposals require evidence of safety before AI deployment, while others focus on real-time risk assessments.

The Role of Policymakers: Addressing the AI "Evidence Dilemma"

AI’s rapid progression creates an "evidence dilemma" for policymakers. Decision-makers must anticipate risks based on limited scientific evidence while avoiding premature regulations that could stifle innovation. The report stresses that AI advancements can occur unpredictably, making it difficult for policymakers to establish clear safety thresholds. To address this, some governments are developing real-time AI monitoring frameworks to detect emerging risks as they unfold.

Conclusion: Shaping AI’s Future Responsibly

The International AI Safety Report underscores the uncertain yet transformative trajectory of AI. While general-purpose AI holds immense promise, its development must be guided by responsible decision-making, transparent governance, and proactive risk management. The future of AI is not predetermined—it depends on the choices made by societies, governments, and researchers today.

With an evolving understanding of AI risks and benefits, this report serves as a foundation for further research and ongoing global discourse on AI safety. As we stand on the brink of unprecedented AI capabilities, ensuring that AI development aligns with human interests will be critical for a sustainable and equitable future.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Bengio, Y., Mindermann, S., Privitera, D., Besiroglu, T., Bommasani, R., Casper, S., Choi, Y., Fox, P., Garfinkel, B., Goldfarb, D., Heidari, H., Ho, A., Kapoor, S., Khalatbari, L., Longpre, S., Manning, S., Mavroudis, V., Mazeika, M., Michael, J., . . . Zeng, Y. (2025). International AI Safety Report. arXiv. https://arxiv.org/abs/2501.17805