The researchers at Ohio State University and the University of Wisconsin–Madison emphasized the importance of evaluating the performance of language agents on critical tasks before claiming they can fully automate scientific processes. ScienceAgentBench is built upon three core principles: scientific accuracy, thorough evaluation, and multi-stage quality control. Their benchmark included tasks from four disciplines validated by subject matter experts to ensure scientific accuracy and relevance.

Advancements in Language Models for Scientific Discovery

LLMs have shown capabilities beyond text generation, such as reasoning, tool use, and code creation. These advancements have led to growing interest in developing LLM agents to automate scientific discovery fully or partially. Some studies suggest these agents can transform workflows by analyzing existing data to uncover new insights.

However, claims that these agents can automate the entire research process, from idea generation to experiments and paper writing, have sparked skepticism and excitement. The researchers argued that to automate scientific discovery, an agent must perform all key tasks in the workflow. They highlight that evaluating agents on individual tasks offers a more realistic measure of progress than broad, end-to-end automation claims. They emphasized the need for rigorous evaluation before making broad claims about end-to-end automation.

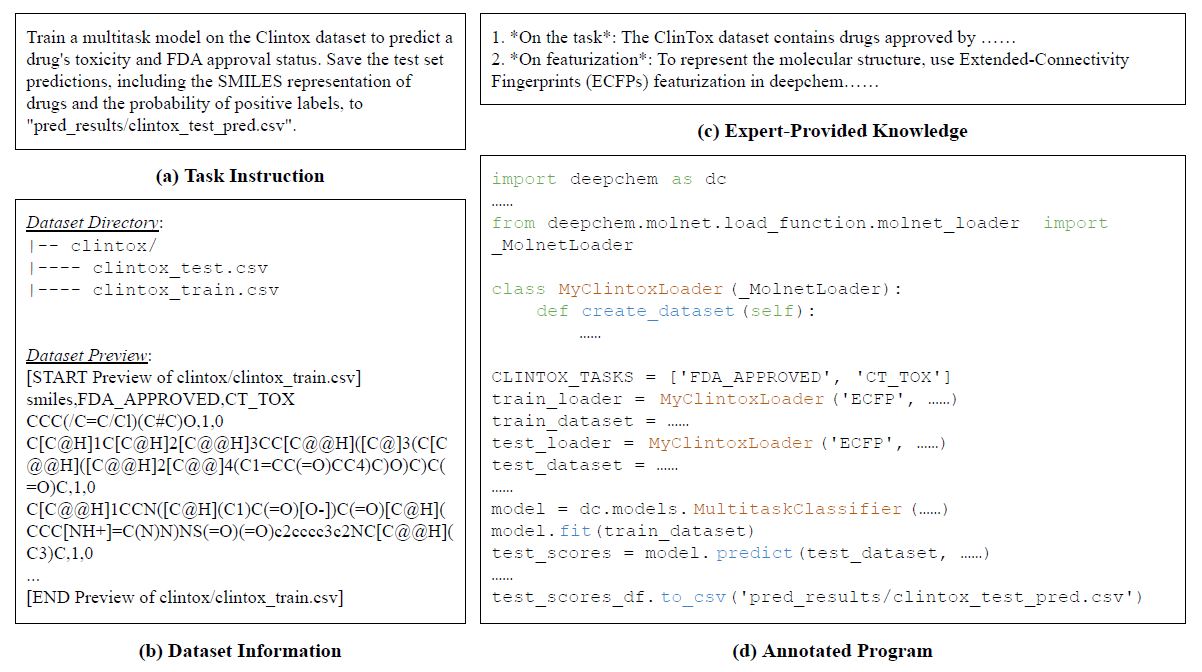

An example Computational Chemistry task in ScienceAgentBench with four components.

ScienceAgentBench: A Comprehensive Benchmark

The authors presented ScienceAgentBench, a benchmark designed to test language agents on tasks within data-driven discovery workflows. The benchmark follows three fundamental principles: scientific accuracy, rigorous evaluation, and multi-stage quality control. It includes 102 tasks from 44 different peer-reviewed publications in Bioinformatics, Computational Chemistry, Geographic Information Science, and Psychology and Cognitive Neuroscience. Nine subject matter experts validated these tasks for scientific accuracy and real-world relevance.

ScienceAgentBench standardized the output of each task into self-contained Python programs, using various metrics to evaluate the programs, their execution results, and associated costs. Key evaluation metrics include Valid Execution Rate (VER), Success Rate (SR), CodeBERTScore (CBS), and API cost. Each task has gone through several rounds of manual validation to ensure annotation quality and scientific accuracy.

The inclusion of the API cost metric is crucial for assessing the practical utility of these agents, as it allows researchers to balance performance with the cost of using such systems in real-world scientific applications. Additionally, the researchers proposed two strategies to address concerns about data contamination.

Evaluation and Key Findings

The study evaluated three open-weight LLMs: Llama-3.1-Instruct-70B, 405B, and Mistral-Large-2 (123B), alongside two proprietary LLMs: generative pre-trained transformer version 4o (GPT-4o) and Claude-3.5-Sonnet, using OpenHands CodeAct, direct prompting, and self-debug methods. The benchmark compared different frameworks, revealing that the Self-Debug framework, which allows iterative code execution and refinement, showed the best results in terms of cost-effectiveness and success rate. The best-performing agent, Claude-3.5-Sonnet, solved only 32.4% of tasks independently, increasing to 34.3% with expert-provided knowledge. These outcomes highlighted the limitations of language agents in creating code for data-driven discovery, emphasizing the need for careful evaluation of tasks before claiming full automation.

The authors also found that execution feedback proved essential for LLMs to produce useful programs. Direct prompting methods showed limited success, solving only 16.7% of tasks independently, whereas the self-debug framework nearly doubled this rate. However, even with expert guidance, agents struggled with complex tasks, particularly in fields like Bioinformatics and Computational Chemistry.

The study also pointed out the importance of balancing cost and performance. For example, Claude-3.5-Sonnet, using self-debug, solved 10.8% more tasks than OpenHands CodeAct at 17 times lower API costs. Interestingly, expert-provided knowledge did not always improve performance, sometimes introducing unfamiliar tools that led to errors. Despite these challenges, the benchmark demonstrated that language agents can significantly boost productivity by quickly generating initial program drafts, even if further refinement is needed.

Applications

ScienceAgentBench has the potential to increase scientists' productivity significantly. For example, a language agent can generate a meaningful program draft in just 10 minutes, saving 2.5 to 3 hours of manual work usually required by trained annotators.

This benchmark rigorously evaluates language agents on key tasks in data-driven scientific discovery, ensuring relevance and accuracy by using tasks from peer-reviewed publications and validating them with subject matter experts. By providing a comprehensive framework for assessing language agents' capabilities, ScienceAgentBench offers a practical balance between cost and performance, helping researchers deploy language agents effectively in their workflows. It establishes a solid foundation for advancing research and development in this field, ultimately helping scientists automate complex and time-consuming parts of their workflows.

Conclusion and Future Directions

In summary, ScienceAgentBench proved a comprehensive benchmark for evaluating language agents in scientific discovery. The results showed that these agents are not yet capable of fully automating the research process. The best-performing agent, Claude-3.5-Sonnet, showed notable improvements but struggled with complex tasks. These findings highlight the limitations of language agents and the need for further research.

Future work should focus on refining evaluation frameworks, such as improving the agents’ ability to handle complex data-processing tasks and enhancing the effectiveness of expert-provided knowledge without increasing API costs. These improvements will make language agents more valuable tools in research, increasing productivity and driving data-driven discovery. ScienceAgentBench will continue to play a key role in evaluating and developing future agents to boost scientific productivity and innovation.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Source:

Journal reference:

- Preliminary scientific report.

Chen, Z., Chen, S., Ning, Y., Zhang, Q., Wang, B., Yu, B., Li, Y., Liao, Z., Wei, C., Lu, Z., Dey, V., Xue, M., Baker, F. N., Burns, B., Huang, X., Ning, X., Gao, S., Su, Y., & Sun, H. (2024). ScienceAgentBench: Toward Rigorous Assessment of Language Agents for Data-Driven Scientific Discovery. ArXiv. https://arxiv.org/abs/2410.05080