With a dynamic system that refines object counts during image generation, this breakthrough method solves a persistent challenge in AI, offering faster and more accurate results in generating images with the correct number of objects.

Research: Iterative Object Count Optimization for Text-to-image Diffusion Models

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

In an article recently submitted to the arXiv preprint* server, researchers proposed a novel solution to address the challenge of accurately generating a specified number of objects in text-to-image models. They introduced an optimization technique that uses a counting loss derived from a counting model. This approach enhanced accuracy by dynamically adjusting hyperparameters and modifying the text conditioning embedding, offering a plug-and-play solution to improve object counting in generated images with minimal additional training.

Background

Image generation models have significantly advanced, producing high-quality images through conditioning mechanisms. These models have wide applications, such as three-dimensional (3D) modeling, image editing, and improving classifiers. However, accurately generating a specific number of objects based on textual input remains a challenge.

When asked to depict a precise number, current models often generate more or fewer objects than requested. Previous efforts in visual question answering and text-image matching also faced this limitation, requiring specialized mechanisms to address counting challenges. While methods like classifier guidance and diffusion processes have been explored, they often rely on curated datasets, which limits their scalability and adaptability.

This paper introduced a novel solution that iteratively optimizes image generation with a counting loss based on object potentials. They addressed gaps by allowing for a dynamic adjustment of counting parameters during the image generation process. The proposed method leveraged pre-trained models and incrementally refined the counting accuracy, enabling precise object generation.

Additionally, the approach provided a zero-shot, plug-and-play solution that integrated seamlessly into existing generation models, filling the gaps left by previous work. Importantly, the method demonstrated significant improvements in counting accuracy without the need for additional fine-tuning or custom datasets.

We propose a plug-and-play optimization of object counting accuracy of a text-to-image model based on detection models.

Optimizing Object Count in Text-to-Image Models

The method outlined a strategy to steer text-to-image models by adjusting embeddings of a newly introduced "counting token." The process involved optimizing the counting token through an iterative diffusion model to control the number of objects generated in an image. The key aspect of the model involved generating images from random noise using a denoising diffusion process and fine-tuning them based on a target number of objects.

A differentiable counting function, such as contrastive language–image pre-training (CLIP)-count, was employed to achieve accuracy. This function estimated object counts within generated images. The counting loss was minimized by comparing the estimated and desired object counts. A detection model, such as you-only-look-once (YOLO), was used to dynamically adjust the scaling factors of the potential maps to improve accuracy.

To ensure the overall image quality, a semantic loss using CLIP matching was introduced to ensure that the generated objects remained true to their intended class. The optimization process was completed by updating the embeddings of the counting token until the required accuracy was reached, with recent fast diffusion models allowing optimization in fewer steps, resulting in efficient outcomes.

Results and Benchmark Evaluation

The authors introduced a benchmark for evaluating object counting models, utilizing the few-shot object counting task (FSC)-147 dataset containing 147 categories. The team generated 3,674 samples, with 25 examples for each object class, ranging from one to 25 objects. Two detection methods, CLIP-count and YOLO, were employed to assess accuracy.

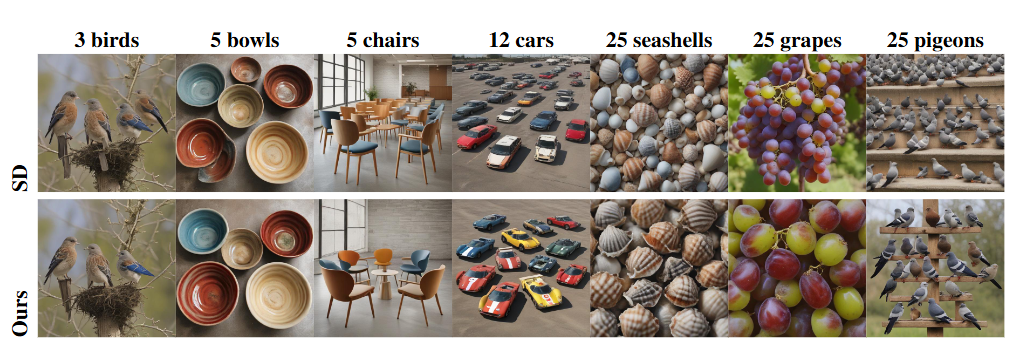

In comparison, the proposed method, which used static and dynamic scaling, outperformed baseline models like ControlNet and stable diffusion (SD). With a mean absolute error (MAE) of 4.13 using CLIP-count, the static scaling method achieved a significantly lower error than the 15.6 error of SD. For YOLO-based detection, dynamic scaling reduced errors, improving accuracy in counting generated objects.

Human annotators confirmed the method’s superior performance in depicting the correct object count and naturalness of images. The model was chosen 88% of the time for object count accuracy and 79.7% for image naturalness. Furthermore, the study highlighted the flexibility of the method, with trained tokens demonstrating robust performance across different object categories. Notably, in-domain token reuse performed slightly better than out-of-domain. The authors highlighted the novel ability to fine-tune image generation models for precise object counting, addressing a key challenge in generative artificial intelligence (AI).

Conclusion

In conclusion, the researchers developed a novel approach to enhance object count accuracy in text-to-image models using a counting loss mechanism derived from a counting model. Their method dynamically adjusted hyperparameters and modified text conditioning embeddings, optimizing object counting during image generation.

By refining object count estimates iteratively, this approach improved accuracy without needing additional optimization, showing clear improvements over previous methods. It also enabled the reuse of counting tokens across different classes, offering flexibility. This method addressed common issues in generative models, such as spatial relationships and compositional challenges, advancing plug-and-play techniques for object counting in AI-generated images.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Source:

Journal reference:

- Preliminary scientific report.

Zafar, O., Wolf, L., & Schwartz, I. (2024). Iterative Object Count Optimization for Text-to-image Diffusion Models. ArXiv. DOI: abs/2408.11721, https://arxiv.org/abs/2408.11721