In a paper published in the journal Electronics, researchers developed a smart pricing policies (SPP) module for individual users and mobile network operators (MNOs) within a multi-MNO blockchain radio access network (B-RAN). The module aimed to determine resource-sharing prices efficiently by employing deep reinforcement learning (DRL) in a reverse auction model. MNOs acted as agents competing to offer the most competitive prices based on user demands.

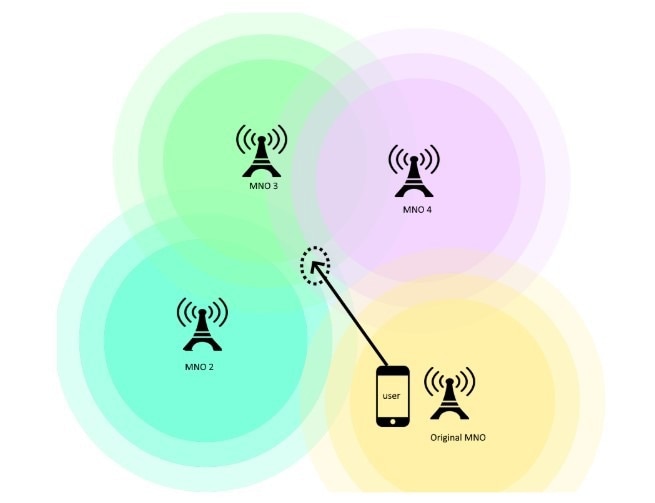

Indicative state where a user is moving towards a temporary MNO. Image Credit: https://www.mdpi.com/2079-9292/13/13/2488

Results indicated that combining DRL with reverse auction theory effectively addressed pricing and bidding challenges, enabling administrators to dynamically adjust strategies based on network conditions and requirements, enhancing overall system optimization and financial incentives for MNOs.

Background

Prior work has explored the key technologies and theoretical foundations underlying this research. These include blockchain and its applications in B-RAN, which enhance reliability and security in mobile networks. Additionally, game theory techniques such as Stackelberg games, reverse auction theory, Cournot oligopoly, and the Bertrand model have been pivotal in analyzing strategic interactions among telecommunication providers and data vendors.

Optimizing Mobile Network Coverage

In developing the SPP module for 5G and beyond networks, a significant challenge arises when users move into areas lacking coverage from their primary MNO. To address this, the solution employs flexible network sharing and roaming agreements among MNOs, allowing alternative providers with coverage in a given location to take over temporarily so that users receive uninterrupted service.

Moreover, the approach introduces the concept of user-provided networks, enabling individuals with adequate connectivity to share their network access instantly, especially where traditional infrastructure is impractical. Dynamic provider switching of this kind requires careful consideration of device interoperability, security, privacy, economic implications, and regulatory aspects.

The core functionality of the SPP module revolves around selecting a temporary MNO when a user's original MNO lacks coverage. This selection process involves an orchestrated mechanism that identifies all alternative MNOs capable of meeting the user's service level agreement (SLA) requirements. These MNOs submit bids within specified price ranges, creating a competitive and transparent environment. The SPP module then determines the winning MNO and negotiates a contractual agreement that satisfies both the original MNO and the temporary provider, ensuring seamless service continuity across different network infrastructures.
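The selection step described above can be illustrated with a minimal Python sketch. It is not the paper's implementation: the `Bid` and `Sla` fields, the two SLA metrics (bandwidth and latency), and the rule that the lowest eligible price wins are all assumptions made for illustration, chosen because a reverse auction conventionally awards the contract to the cheapest qualifying seller.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sla:
    """Hypothetical SLA: the article does not list the actual SLA metrics."""
    min_bandwidth_mbps: float
    max_latency_ms: float


@dataclass(frozen=True)
class Bid:
    """Hypothetical bid submitted by a candidate temporary MNO."""
    mno: str
    price: float
    bandwidth_mbps: float
    latency_ms: float


def meets_sla(bid: Bid, sla: Sla) -> bool:
    # A bid is eligible only if it satisfies every SLA constraint.
    return (bid.bandwidth_mbps >= sla.min_bandwidth_mbps
            and bid.latency_ms <= sla.max_latency_ms)


def select_winner(bids: List[Bid], sla: Sla) -> Optional[Bid]:
    """Return the cheapest SLA-compliant bid, or None if no MNO qualifies.

    Assumption: lowest price wins; ties go to the earliest bid in the list.
    """
    eligible = [b for b in bids if meets_sla(b, sla)]
    if not eligible:
        return None
    return min(eligible, key=lambda b: b.price)


if __name__ == "__main__":
    sla = Sla(min_bandwidth_mbps=50.0, max_latency_ms=30.0)
    bids = [
        Bid("MNO-A", price=4.0, bandwidth_mbps=20.0, latency_ms=25.0),  # too slow
        Bid("MNO-B", price=6.0, bandwidth_mbps=80.0, latency_ms=20.0),
        Bid("MNO-C", price=7.5, bandwidth_mbps=100.0, latency_ms=10.0),
    ]
    print(select_winner(bids, sla))
```

Filtering on the SLA before comparing prices keeps the cheapest bid from winning when it cannot actually serve the user, which is why MNO-A is excluded here despite its lower price.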

Efficient Bidding Mechanism

The experimental setup entailed careful parameter optimization for a scalable SPP model, specifically examining four agents with predetermined minimum and maximum prices while fine-tuning the Q-Learning factors and the number of rounds. Four agents were used to keep the demonstration simple, so that the outcomes remained clear without the complexity of numerous variables.

Results showed low execution time even with 1000 agents, highlighting the model's efficiency. Players generally reached their optimal strategies after a few rounds, informing the system administrator when to end the bidding process for maximum efficiency and participation.
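To make the multi-round setup more concrete, the following Python sketch runs a simplified bidding loop. It uses plain tabular Q-learning rather than a deep network, and every detail is an assumption for illustration: the discretized price levels, the reward (the winning agent earns its bid, all others earn zero), the epsilon-greedy exploration, and the parameter values are hypothetical and are not taken from the paper.

```python
import random
from typing import Dict, List


class BiddingAgent:
    """Hypothetical MNO agent that learns which price level to bid."""

    def __init__(self, name: str, min_price: float, max_price: float,
                 levels: int = 5, alpha: float = 0.1, gamma: float = 0.9,
                 epsilon: float = 0.1, seed: int = 0) -> None:
        self.name = name
        step = (max_price - min_price) / (levels - 1)
        # Discretize the allowed price range into evenly spaced bid levels.
        self.prices = [min_price + i * step for i in range(levels)]
        self.q = [0.0] * levels  # one Q-value per price level (single state)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def choose(self) -> int:
        # Epsilon-greedy: explore occasionally, otherwise pick a best-valued level.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.prices))
        best = max(self.q)
        return self.rng.choice([i for i, v in enumerate(self.q) if v == best])

    def learn(self, action: int, reward: float) -> None:
        # Standard Q-learning update with learning rate alpha and discount gamma.
        target = reward + self.gamma * max(self.q)
        self.q[action] += self.alpha * (target - self.q[action])


def run_auction(agents: List[BiddingAgent], rounds: int) -> Dict[str, int]:
    """Run the reverse auction for a fixed number of rounds; return win counts."""
    wins = {a.name: 0 for a in agents}
    for _ in range(rounds):
        actions = [a.choose() for a in agents]
        bids = [a.prices[i] for a, i in zip(agents, actions)]
        winner = bids.index(min(bids))  # lowest bid wins; ties go to the first agent
        for idx, (agent, action) in enumerate(zip(agents, actions)):
            reward = bids[idx] if idx == winner else 0.0
            agent.learn(action, reward)
        wins[agents[winner].name] += 1
    return wins


if __name__ == "__main__":
    agents = [
        BiddingAgent("agent1", 10.0, 20.0, seed=1),
        BiddingAgent("agent2", 9.0, 19.0, seed=2),
        BiddingAgent("agent3", 8.0, 18.0, seed=3),
        BiddingAgent("agent4", 7.0, 17.0, seed=4),
    ]
    print(run_auction(agents, rounds=200))
```

In this toy version the learning rate, discount factor, exploration rate, and number of rounds play the role of the tunable factors mentioned above, and the per-agent price bounds are the knobs an administrator would adjust.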

The multi-round bidding mechanism showed that agent four frequently won due to initial price settings, underscoring the importance of these parameters in maintaining fairness. By analyzing the impact of price limitations on success rates, administrators can adjust configurations for fair competition.

Unlike Stackelberg games, where bids vary significantly, this method promotes consistent and equitable bidding. The resulting balance matters for both large and small MNOs: it encourages widespread network participation while maintaining robust competition and coverage.

Consistent with reverse auction theory, the model prioritizes fairness and network expansion, making it suitable for diverse participants, including small and individual MNOs. This approach contrasts with the oligopolistic nature of Stackelberg games, which focus on cost minimization. Customizable discount factors and termination criteria allow network administrators to fine-tune operations as needs evolve. This flexibility supports long-term viability, upholding principles of democracy and equality while optimizing network performance and sustainability.

A DRL approach optimized bidding strategies for network provisioning, surpassing traditional game theory methods like Stackelberg or Bertrand models in scalability and adaptability. Agents representing MNOs competed in a reverse auction, iteratively adjusting their bids based on feedback to maximize their competitive position and financial gain. The DRL algorithm underwent a training phase to fine-tune parameters, enabling agents to learn optimal strategies through trial and error. Empirical tests showed that bidding outcomes reached a saturation point after several rounds, indicating optimal pricing strategies. This iterative process ensured continual adaptation to changing network conditions, benefiting both MNOs and end-users in service quality and cost efficiency.
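The saturation behavior suggests a simple termination criterion, sketched below in Python. The function name, the sliding-window test, and the default `window` and `tolerance` values are hypothetical; the article does not specify how the authors detected saturation or decided when to stop the bidding.

```python
from typing import Optional, Sequence


def saturation_round(winning_prices: Sequence[float],
                     window: int = 5,
                     tolerance: float = 0.01) -> Optional[int]:
    """Return the first 1-based round at which the last `window` winning
    prices differ by at most `tolerance`, or None if that never happens.

    Assumption: "saturation" means the winning price has stopped moving.
    """
    for end in range(window, len(winning_prices) + 1):
        recent = winning_prices[end - window:end]
        if max(recent) - min(recent) <= tolerance:
            return end
    return None


if __name__ == "__main__":
    history = [20.0, 18.0, 16.0, 15.0, 15.0, 15.0, 15.0, 15.0, 15.0]
    print(saturation_round(history))
```

An administrator could call such a check after each round and end the auction once it returns a value, trading a slightly longer window for more confidence that prices have settled.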

Conclusion

To sum up, the SPP module incentivized users to act as temporary MNOs, enhancing communication in areas with poor coverage. The research demonstrated that DRL was more scalable and adaptable than classic game theory. Incorporating dynamic programming into a DRL-based reverse auction allowed customizable settings for various contexts and seamless integration as a smart contract within blockchain technology, which in turn supported the system's adaptability and resilience. Ongoing work included adding variables such as MNO resources, evaluating execution duration, and ensuring equitable resource distribution to maintain fairness and efficiency.

Journal reference:

- Kaltakis, K., et al. (2024). A Reinforcement Learning-Based Reverse Auction Enforcing Smart Pricing Policies towards B5G Offloading Strategies. Electronics, 13:13, 2488. DOI: 10.3390/electronics13132488, https://www.mdpi.com/2079-9292/13/13/2488