In an article published in the journal Computer Speech and Language, researchers introduced three deep learning models for classifying English accents: multi-task pyramid split attention-densely connected convolutional network (MPSA-DenseNet), pyramid split attention (PSA)-DenseNet, and multi-task (Multi)-DenseNet.

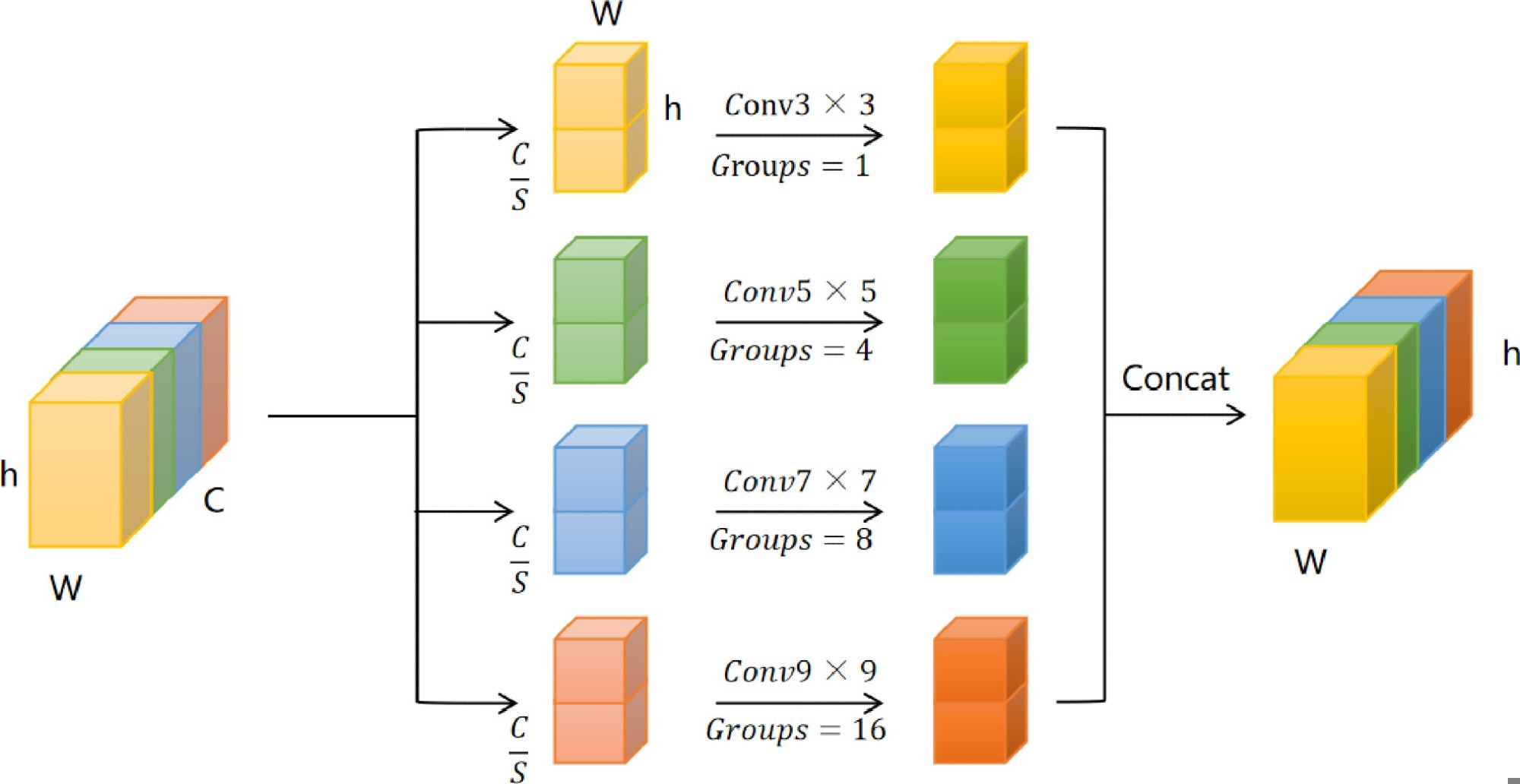

Structure of the SPC module, following EPSANet. Image Credit: https://www.sciencedirect.com/science/article/pii/S0885230824000597

These models integrated multi-task learning and/or the PSA module attention mechanism with DenseNet. Applied to accents from both native and non-native English-speaking regions, the models, especially MPSA-DenseNet, significantly improved classification accuracy, outperforming previous models like DenseNet and efficient pyramid squeeze attention (EPSA).

Background

Accent recognition has become a significant focus in speech applications, particularly for enhancing automatic speech recognition (ASR) systems. Accents, shaped by upbringing and social background, complicate speech recognition due to regional variations in English. Traditional machine learning methods like support vector machines (SVM) and hidden Markov models (HMM) have been used for accent classification, with mixed results. Feature extraction methods such as Mel-frequency cepstral coefficients (MFCC) have shown good performance, but recent studies suggest that learning directly from waveforms can sometimes yield better results.

Deep learning models, including convolutional neural networks (CNN) and recurrent neural networks (RNN), have gradually replaced traditional methods, offering improved accuracy in accent classification. For example, combining deep neural networks (DNN) with CNNs has shown marked improvements. Additionally, techniques like transfer learning and multi-task learning have enhanced the ability to handle complex scenarios and overlapping sounds. Attention mechanisms have further refined classification accuracy by focusing on relevant features in the speech signals.

However, most research has focused on translating accented speech into correct content rather than classifying the accent itself. This paper introduced three innovative deep learning models—Multi-DenseNet, PSA-DenseNet, and MPSA-DenseNet—that integrated DenseNet, multi-task learning, and attention mechanisms to address this gap. These models aimed to improve accent classification accuracy, with significant implications for communication, language learning, and ASR technologies.

Experimental Setup and Methodology

In this study, the researchers developed and evaluated three deep learning models for English accent classification using the Common Voice Dataset.

- Data Collection and Preprocessing: The authors utilized a diverse dataset comprising five English dialects from England, the United States, Germany, Hong Kong, and India, totaling 26,955 samples. The samples also carried age and gender labels, which served as auxiliary classification tasks to improve model robustness. Audio files were standardized to a four-second duration and converted to spectrograms using MFCCs.

- Model Architectures: Multi-DenseNet combined DenseNet with multi-task learning, sharing parameters across tasks (age and gender) to enhance generalization and reduce overfitting. PSA-DenseNet integrated DenseNet with the PSA module to prioritize important input data regions, improving feature extraction and long-term dependency modeling. MPSA-DenseNet combined both multi-task learning and the PSA module, aiming to leverage their combined strengths for superior classification performance.

- Evaluation and Environment: Models were trained using compute unified device architecture (CUDA) 11.0 and the MXNet framework, with the softmax cross-entropy loss function. Hyperparameters were set identically across models: 50 epochs, a batch size of 32, and a learning rate of 0.0001. The researchers split the dataset into training, validation, and test sets (8:1:1 ratio) and used the F1 score to evaluate performance, which addressed the challenge of imbalanced data.
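
The fixed-length clips and the 8:1:1 split described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' pipeline: the article does not say how clips were shortened or lengthened, whether the split was shuffled or stratified, or what sample rate was used, so zero-padding, the seeded shuffle, the 16 kHz rate, and the function names `fix_duration` and `split_8_1_1` are all assumptions.

```python
import random

SAMPLE_RATE = 16_000  # assumed; the article does not state the sample rate
CLIP_SECONDS = 4      # from the article: clips standardized to four seconds


def fix_duration(samples, sample_rate=SAMPLE_RATE, seconds=CLIP_SECONDS):
    """Truncate or zero-pad a list of audio samples to a fixed length."""
    target = sample_rate * seconds
    if len(samples) >= target:
        return samples[:target]
    return samples + [0.0] * (target - len(samples))


def split_8_1_1(items, seed=0):
    """Shuffle items and split them into train/validation/test at 8:1:1."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10  # integer arithmetic avoids float rounding
    n_val = len(items) // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Sample indices stand in for the 26,955 clips mentioned in the article.
train, val, test = split_8_1_1(range(26_955))
print(len(train), len(val), len(test))  # 21564 2695 2696
```

With imbalanced accent classes, a stratified split would keep class proportions equal across the three sets; the plain shuffle here is only the simplest option.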

The results demonstrated that integrating multi-task learning and attention mechanisms with DenseNet enhanced the model's ability to classify English accents accurately while maintaining computational efficiency.
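
To make the multi-task idea concrete, the sketch below combines a softmax cross-entropy loss for the main accent task with losses for the auxiliary gender and age tasks. The article names the loss function but gives neither the task weighting nor the number of gender and age classes, so the weights, the class counts, the logits, and the function names here are illustrative assumptions rather than the paper's settings.

```python
import math


def softmax_cross_entropy(logits, label):
    """Softmax cross-entropy for one example, computed via log-sum-exp."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def multi_task_loss(outputs, labels, weights):
    """Weighted sum of the per-task softmax cross-entropy losses."""
    return sum(
        weights[task] * softmax_cross_entropy(outputs[task], labels[task])
        for task in outputs
    )


# Hypothetical logits from three output heads on one shared backbone.
outputs = {
    "accent": [2.0, 0.1, -1.0, 0.3, 0.0],  # five accent classes (from the article)
    "gender": [0.5, -0.5],                 # class count assumed
    "age": [0.2, 0.1, -0.3],               # class count assumed
}
labels = {"accent": 0, "gender": 0, "age": 1}
weights = {"accent": 1.0, "gender": 0.5, "age": 0.5}  # assumed, not from the paper

loss = multi_task_loss(outputs, labels, weights)
print(f"combined loss: {loss:.4f}")
```

Because all three heads backpropagate through the same backbone, the auxiliary terms act as a regularizer on the shared features, which is the generalization benefit the article attributes to multi-task learning.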

Research Outcomes and Model Performance Analysis

MPSA-DenseNet outperformed all other models, with accuracy rising and training loss falling as the number of epochs increased. Its multi-task learning approach also boosted gender and age classification accuracy. The normalized confusion matrix for MPSA-DenseNet showed strong performance, especially in recognizing the German accent with 99.0% accuracy. Misclassification was low, with the highest rate being 6.9% for England accents misclassified as American.
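
For readers unfamiliar with normalized confusion matrices, the sketch below shows one common convention: each row (true accent) is divided by its total, so the diagonal holds per-class recall and each off-diagonal cell is the share of that accent mistaken for another. The article does not state which normalization the paper used, so row normalization is an assumption, and the toy labels are invented for illustration.

```python
def normalized_confusion_matrix(y_true, y_pred, labels):
    """Return a row-normalized confusion matrix as a list of lists."""
    index = {label: i for i, label in enumerate(labels)}
    counts = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        counts[index[t]][index[p]] += 1  # row = true class, column = prediction
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


# Toy example: one of four England clips is misclassified as US.
labels = ["england", "us"]
y_true = ["england"] * 4 + ["us"] * 4
y_pred = ["england", "england", "england", "us"] + ["us"] * 4

matrix = normalized_confusion_matrix(y_true, y_pred, labels)
print(matrix)  # [[0.75, 0.25], [0.0, 1.0]]
```

Read this way, a figure such as the 6.9% quoted above would be the England row's entry in the American column.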

MPSA-DenseNet, PSA-DenseNet, and Multi-DenseNet were compared to six existing models: EPSA network (EPSANet), DenseNet, convolutional block attention module (CBAM)-CNN, long short-term memory (LSTM), residual neural network (ResNet), and MobileNetV2. MPSA-DenseNet led in all evaluation metrics (micro and macro scores and accuracy), while the other two proposed models still performed competitively.
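
The difference between micro and macro averaging matters on imbalanced data, and a small sketch makes it visible. It assumes the micro and macro scores are F1 averages, which the article implies but does not spell out; the toy labels and the function name `f1_scores` are invented for illustration.

```python
def f1_scores(y_true, y_pred, labels):
    """Return (micro_f1, macro_f1) for single-label multiclass predictions."""
    tp = {label: 0 for label in labels}
    fp = {label: 0 for label in labels}
    fn = {label: 0 for label in labels}
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Macro: average the per-class F1 scores, so every class counts equally.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    # Micro: pool the counts first, so every sample counts equally.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return micro, macro


# Toy imbalanced set: eight "us" clips, two "india" clips, one mistake.
y_true = ["us"] * 8 + ["india"] * 2
y_pred = ["us"] * 8 + ["us", "india"]

micro, macro = f1_scores(y_true, y_pred, ["us", "india"])
print(f"micro={micro:.3f} macro={macro:.3f}")  # micro=0.900 macro=0.804
```

In single-label classification, micro F1 equals plain accuracy, while macro F1 drops when a minority class is handled poorly, which is why reporting both is informative for uneven accent groups.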

Training peak memory usage revealed that attention-based models (PSA-DenseNet, EPSANet) consumed more memory due to additional computations. Multi-DenseNet showed significantly lower memory usage, benefiting from parameter sharing in multi-task learning. MPSA-DenseNet demonstrated efficient memory management with lower usage compared to other attention-based models. MPSA-DenseNet's stability was confirmed as it showed increasing micro and macro scores with larger training sets, indicating reliable performance.

In essence, MPSA-DenseNet's superior performance was attributed to its effective integration of DenseNet, multi-task learning, and attention mechanisms, which together enhanced feature extraction, generalization, and focus on relevant features.

Conclusion

The researchers introduced three models designed for English accent classification: MPSA-DenseNet, PSA-DenseNet, and Multi-DenseNet. By integrating DenseNet, multi-task learning, and the PSA module, these models, particularly MPSA-DenseNet, demonstrated superior accuracy compared to existing methods.

The results highlighted the effectiveness of combining these components for robust feature extraction and generalization. Because attention-based models carry high memory demands, future research should explore more efficient architectures such as CondenseNetV2. Overall, the proposed models showed great potential for accent recognition and could be adapted for various audio classification tasks.