In an article recently submitted to the ArXiv* server, researchers demonstrated the feasibility of fast text-to-three-dimensional (3D) object generation using a one-pass feedforward network.

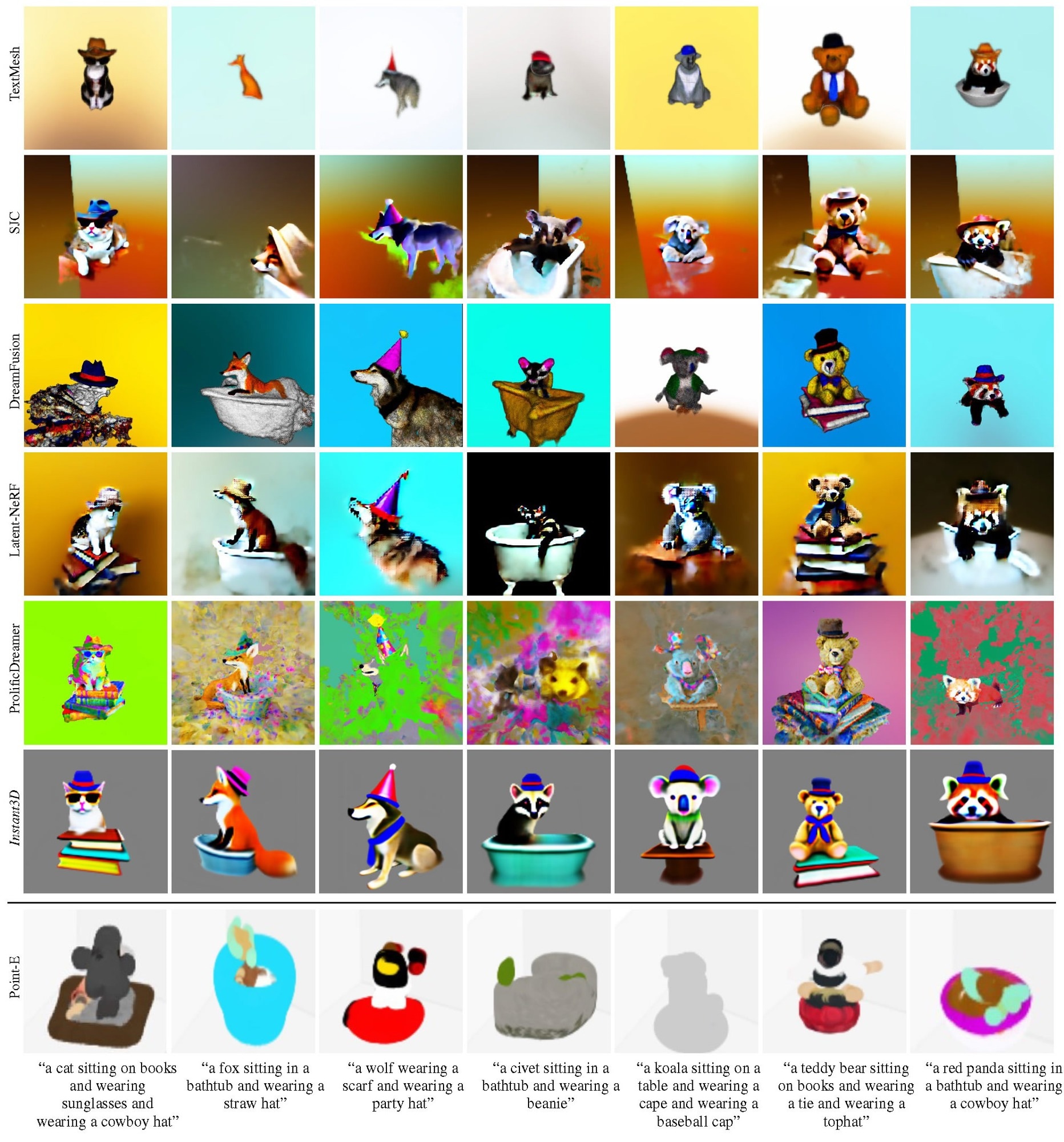

Study: Instant3D: Accelerating Text-to-3D Generation with Efficient Neural Networks. Qualitative comparison with the state-of-the-art methods including TextMesh, SJC, DreamFusion, Latent-NeRF, ProlificDreamer, and Point-E on the Animals dataset. The proposed Instant3D achieves higher quality results while being much more efficient than the baselines. Image credit: arxiv.org

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Background

Text-guided 3D content generation has significant potential in applications such as virtual reality, animation, and film production. Rapid advances in recent years have produced several effective text-to-3D generation methods, including ProlificDreamer, SJC, Latent-NeRF, and DreamFusion. These methods typically represent the target object with a randomly initialized neural radiance field (NeRF) and optimize it under the guidance of two-dimensional (2D) diffusion priors from a large-scale pre-trained text-to-image diffusion model.

Although these methods deliver impressive results, their reliance on an optimization-based learning paradigm is a major limitation. Optimizing a NeRF takes thousands of iterations for each new text prompt, which leads to significant computational costs and slow response speeds in real-world text-to-3D systems. These methods take approximately one hour or more to generate a single object, and this repetitive, heavy training cost impedes their practical deployment. Moreover, because a separate NeRF is learned for each text input, these methods cannot leverage the general 3D priors shared across objects.

Although these issues can be addressed by training a diffusion model directly for 3D point cloud generation, such models still need multiple iterative diffusion steps, which results in low efficiency.

The Instant3D framework

In this study, researchers proposed Instant3D, a novel framework for fast text-to-3D generation. The framework can generate a realistic 3D object that accurately aligns with an unseen text prompt in less than one second, using a single forward pass of a feedforward network.

Researchers achieved this exceptional speed by developing a new network that constructs a 3D triplane directly from a text prompt. The core innovation of Instant3D was the identification of strategies to effectively inject text conditions into the network.

The feedforward neural network was conditioned on the text prompt to produce a triplane representation that encapsulated the desired 3D object. Additionally, the score distillation sampling (SDS) loss was utilized to train the network.
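To make the triplane idea concrete, the sketch below shows one common way such a representation is queried: a 3D point is projected onto three axis-aligned feature planes, each plane is sampled bilinearly, and the three feature vectors are summed. This is only an illustration under stated assumptions. The function names `sample_plane` and `query_triplane`, the plane layout, and the choice of summation are hypothetical and are not taken from the Instant3D paper.

```python
import numpy as np

def sample_plane(plane, u, v):
    """Bilinearly sample an (H, W, C) feature plane at coords u, v in [-1, 1].

    Assumes H >= 2 and W >= 2. u indexes columns, v indexes rows.
    """
    h, w, _ = plane.shape
    # Map [-1, 1] to continuous pixel coordinates [0, W-1] and [0, H-1].
    x = (u + 1.0) * 0.5 * (w - 1)
    y = (v + 1.0) * 0.5 * (h - 1)
    # Top-left corner of the cell containing (x, y), clipped to stay in bounds.
    x0 = int(np.clip(np.floor(x), 0, w - 2))
    y0 = int(np.clip(np.floor(y), 0, h - 2))
    tx, ty = x - x0, y - y0
    top = (1 - tx) * plane[y0, x0] + tx * plane[y0, x0 + 1]
    bottom = (1 - tx) * plane[y0 + 1, x0] + tx * plane[y0 + 1, x0 + 1]
    return (1 - ty) * top + ty * bottom

def query_triplane(planes, point):
    """Return the feature of a 3D point from three axis-aligned planes.

    planes: dict with keys 'xy', 'xz', 'yz', each an (H, W, C) array
            (hypothetical layout chosen for this sketch).
    point:  (x, y, z) with every coordinate in [-1, 1].
    """
    x, y, z = point
    # Project onto each plane and sum the sampled features (an assumption;
    # concatenation is another common aggregation choice).
    return (sample_plane(planes["xy"], x, y)
            + sample_plane(planes["xz"], x, z)
            + sample_plane(planes["yz"], y, z))
```

In a full pipeline, the returned feature would typically be decoded into density and color for volume rendering; that decoder is omitted here.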

The proposed Instant3D also incorporated a contrastive language-image pre-training (CLIP) loss. Researchers employed the CLIP image encoder to extract image features from the rendered image and used the class embeddings of the CLIP text encoder to represent the text prompt. They defined the CLIP loss as the cosine distance between the text and image features, which aligns the rendered image more closely with the input prompt.
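A cosine-distance loss of this kind can be sketched in a few lines. The example below assumes the image and text features have already been extracted and are passed in as plain vectors; no CLIP model is loaded, and the function name `cosine_distance` and the `eps` stabilizer are choices made for this illustration. It uses the usual definition of cosine distance, one minus cosine similarity, which may differ in scaling from the paper's exact loss.

```python
import numpy as np

def cosine_distance(image_feat, text_feat, eps=1e-8):
    """Cosine distance (1 - cosine similarity) between two feature vectors.

    image_feat, text_feat: 1D arrays standing in for encoder outputs
    (placeholders for this sketch, not real CLIP features).
    eps: small constant that avoids division by zero.
    """
    image_feat = np.asarray(image_feat, dtype=np.float64)
    text_feat = np.asarray(text_feat, dtype=np.float64)
    norm_product = np.linalg.norm(image_feat) * np.linalg.norm(text_feat)
    cos_sim = np.dot(image_feat, text_feat) / (norm_product + eps)
    return 1.0 - cos_sim
```

The distance is close to 0 when the two features point in the same direction and grows toward 2 as they point in opposite directions, so minimizing it pulls the rendered image toward the prompt in feature space.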

Although the network was conceptually simple, designing it was challenging because the SDS loss provides only weak supervision signals, which makes it difficult for a common conditional model to learn a precise connection between the text condition and the 3D output. A more effective conditional model was therefore needed, one that can better inject text information into the network and ease the learning of the relationship between two different modalities, namely text and 3D.

To overcome this challenge, researchers proposed an integrated solution combining three conditioning mechanisms: cross-attention, style injection, and token-to-plane transformation. This integrated solution, the core innovation of Instant3D, ensured accurate alignment of the generated 3D output with the conditioning text prompt.
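Of the three mechanisms, cross-attention is the most widely used, and a generic version can be sketched as follows. Spatial features act as queries and text token embeddings act as keys and values, so each spatial location gathers information from the prompt. This is standard scaled dot-product attention with a residual connection, not the specific block used in Instant3D; the projection matrices `w_q`, `w_k`, and `w_v` and all shapes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def cross_attention(features, text_tokens, w_q, w_k, w_v):
    """Inject text information into spatial features.

    features:    (N, d_feat) spatial features, used as queries.
    text_tokens: (T, d_text) text token embeddings, used as keys and values.
    w_q: (d_feat, d_k), w_k: (d_text, d_k), w_v: (d_text, d_feat)
         hypothetical projection matrices for this sketch.
    Returns the updated features (N, d_feat) and attention weights (N, T).
    """
    q = features @ w_q
    k = text_tokens @ w_k
    v = text_tokens @ w_v
    # Scaled dot-product scores between every location and every token.
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)
    # Residual connection keeps the original features and adds text context.
    return features + weights @ v, weights
```

Each row of the returned weights sums to one and indicates how strongly a given spatial location attends to each prompt token.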

Researchers also proposed a simple and effective activation function, designated as scaled-sigmoid, to replace the original sigmoid function in NeRF, which accelerated training convergence by more than ten times. Finally, they proposed an adaptive Perp-Neg algorithm that dynamically adjusts its concept negation scales according to the severity of the Janus problem during training, which effectively reduces the multi-head effect in 3D generation.
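The article does not give the formula for scaled-sigmoid. One plausible reading, shown below purely as an assumption, is a sigmoid whose input is multiplied by a scale factor, which widens the region where the gradient is not vanishingly small. The parameter name `alpha` and its default value are hypothetical, and the paper's definition may differ.

```python
import numpy as np

def sigmoid(x):
    """Standard logistic sigmoid."""
    return 1.0 / (1.0 + np.exp(-x))

def scaled_sigmoid(x, alpha=0.1):
    """Sigmoid applied to a scaled input (assumed form, not from the paper).

    With alpha < 1 the curve is stretched horizontally, so large inputs
    saturate more slowly than with the plain sigmoid.
    """
    return sigmoid(alpha * x)

def scaled_sigmoid_grad(x, alpha=0.1):
    """Derivative of scaled_sigmoid with respect to x."""
    s = scaled_sigmoid(x, alpha)
    return alpha * s * (1.0 - s)
```

Under this assumed form, an input of 10 lies deep in the saturated region of the plain sigmoid (alpha = 1) but still receives a usable gradient when alpha = 0.1, which gives some intuition for why changing the activation could affect convergence speed.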

Experimental evaluation and findings

Researchers devised three benchmark prompt sets, namely Animals, Portraits, and Daily Life, to comprehensively assess the proposed Instant3D framework. They qualitatively compared the framework with state-of-the-art text-to-3D methods, including ProlificDreamer, Latent-NeRF, DreamFusion, SJC, and TextMesh.

Results demonstrated that Instant3D generated 3D objects of much higher quality than those generated by the baselines. Additionally, the proposed framework effectively captured all elements in the prompts and the relationships between them.

In contrast, objects generated by the baseline approaches frequently missed crucial elements and had disorganized inter-item relationships. Researchers also compared Instant3D with Point-E, which directly trains a 3D diffusion model. Point-E generated only simple objects and performed poorly on complex text prompts, as the method requires millions of 3D-text pairs that are not publicly available.

Visual examples from the Portraits, Daily Life, and Animals datasets showed that Instant3D could consistently produce high-quality text-to-3D results with favorable multi-view consistency. Specifically, the superior performance of Instant3D on the challenging Daily Life dataset indicated its capability to effectively handle complex real-world text prompts.

Journal reference:

- Preliminary scientific report.

Li, M., Zhou, P., Liu, J., Keppo, J., Lin, M., Yan, S., Xu, X. (2023). Instant3D: Instant Text-to-3D Generation. ArXiv. https://doi.org/10.48550/arXiv.2311.08403, https://arxiv.org/abs/2311.08403