Scientists have developed an AI model that can decode animal emotions with near-human precision, unlocking new ways to improve welfare, detect distress, and enhance conservation efforts. This innovation could forever change how we understand and care for animals.

Research: Machine learning algorithms can predict emotional valence across ungulate vocalizations. Image Credit: Dusan Petkovic / Shutterstock

"This breakthrough provides solid evidence that AI can decode emotions across multiple species based on vocal patterns. It has the potential to revolutionise animal welfare, livestock management, and conservation, allowing us to monitor animals' emotions in real-time," says Élodie F. Briefer, Associate Professor at the Department of Biology and last study author.

AI as a Universal Animal Emotion Translator

The researchers identified key acoustic indicators of emotional valence by analyzing thousands of vocalizations from ungulates in different emotional states. Changes in duration, energy distribution, fundamental frequency, and amplitude modulation were the most important predictors of whether an emotion was positive or negative. Remarkably, these patterns were somewhat consistent across species, suggesting that fundamental vocal expressions of emotions are evolutionarily conserved.
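To make these acoustic indicators concrete, here is a minimal Python sketch of how three of them (duration, energy distribution, and fundamental frequency) could be measured from a single recorded call. It is an illustration only, not the study's pipeline: the function name `acoustic_features`, the 50–1000 Hz pitch search range, the use of a spectral centroid as a stand-in for "energy distribution", and the synthetic test tone are all assumptions made for this example. Amplitude modulation is left out to keep the sketch short.

```python
import numpy as np


def acoustic_features(signal, sample_rate, f0_min=50.0, f0_max=1000.0):
    """Compute a few simple acoustic descriptors of one call.

    Hypothetical helper for illustration; the pitch search range
    (f0_min, f0_max) is an assumed default, not a value from the study.
    """
    signal = np.asarray(signal, dtype=float)

    # Duration of the call in seconds.
    duration = signal.size / sample_rate

    # Overall energy, summarised as root-mean-square amplitude.
    rms = float(np.sqrt(np.mean(signal ** 2)))

    # Energy distribution, summarised here as the spectral centroid:
    # the power-weighted mean frequency of the spectrum.
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / sample_rate)
    centroid = float(np.sum(freqs * power) / np.sum(power))

    # Fundamental frequency, estimated from the strongest autocorrelation
    # peak inside the allowed range of pitch periods (lags).
    centered = signal - signal.mean()
    autocorr = np.correlate(centered, centered, mode="full")[signal.size - 1:]
    lag_min = int(sample_rate / f0_max)
    lag_max = int(sample_rate / f0_min)
    best_lag = lag_min + int(np.argmax(autocorr[lag_min:lag_max + 1]))
    f0 = sample_rate / best_lag

    return {
        "duration_s": duration,
        "rms": rms,
        "spectral_centroid_hz": centroid,
        "f0_hz": f0,
    }


# Synthetic stand-in for a recording: a 0.5 s pure tone at 200 Hz.
sample_rate = 8000
t = np.arange(int(0.5 * sample_rate)) / sample_rate
tone = np.sin(2 * np.pi * 200.0 * t)

features = acoustic_features(tone, sample_rate)
print(features)
```

In practice, a table of such features, one row per labeled call, is the kind of input a machine learning classifier would use to separate positive from negative calls. Real vocalizations are far noisier than a pure tone, so a production tool would need more robust pitch tracking than this autocorrelation estimate.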

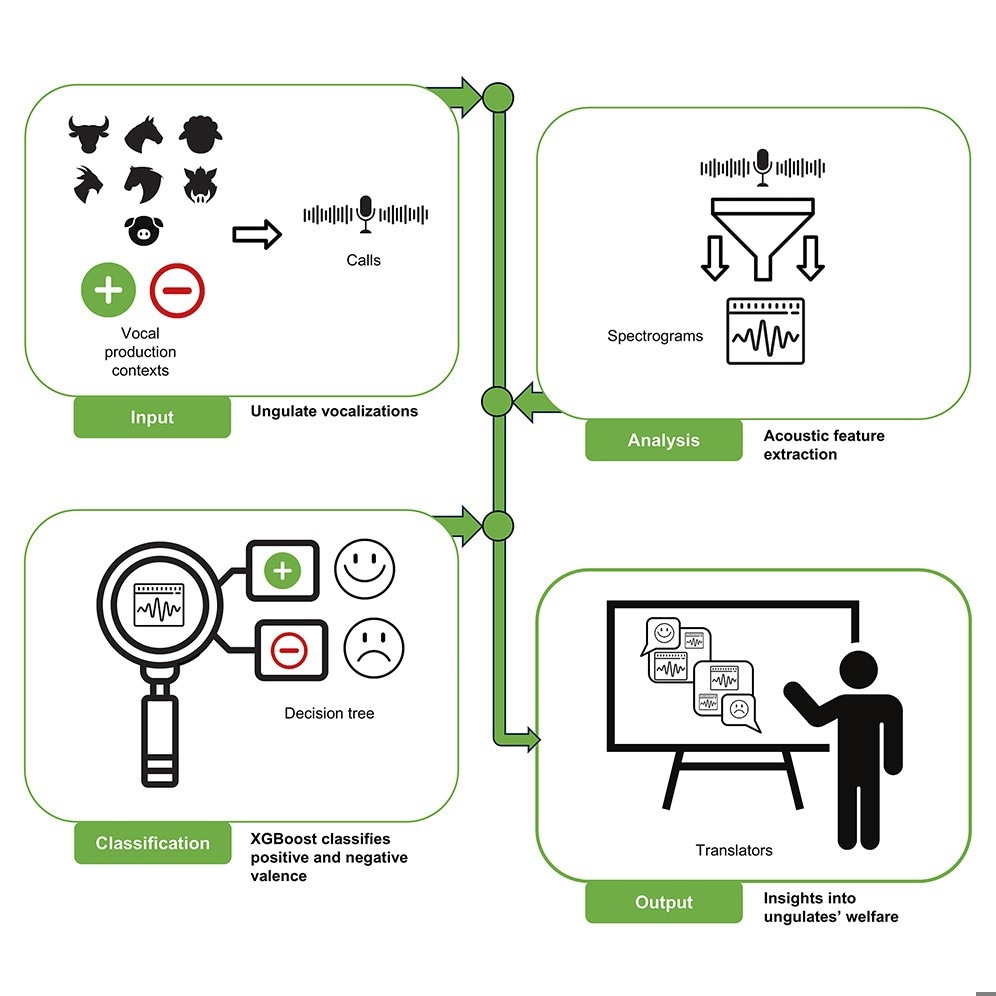

Graphical abstract

A Game-Changer for Animal Welfare and Conservation

The study's findings have far-reaching implications. The AI-powered classification model could be used to develop automated tools for real-time animal emotion monitoring, transforming how we approach livestock management, veterinary care, and conservation efforts. Élodie F. Briefer explains:

"Understanding how animals express emotions can help us improve their well-being. If we can detect stress or discomfort early, we can intervene before it escalates. Equally important, we could also promote positive emotions. This would be a game-changer for animal welfare."

Key Scientific Findings

- High accuracy – The AI model classified emotional valence with an overall accuracy of 89.49%, demonstrating its ability to distinguish between positive and negative states.

- Universal acoustic patterns – Key predictors of emotional valence were consistent across species, indicating an evolutionarily conserved emotional expression system.

- New perspectives on emotional communication – This research offers insights into the evolutionary origins of human language and could reshape our understanding of animal emotions.

Next Steps: Expanding Research and Sharing the Data

The researchers have made their database of labeled emotional calls from seven ungulate species publicly available to support further studies.

"We want this to be a resource for other scientists. By making the data open access, we hope to accelerate research into how AI can help us better understand animals and improve their welfare," Briefer concludes.

This study brings us one step closer to a future where technology allows us to understand and respond to animal emotions, offering exciting new possibilities for science, animal welfare, and conservation.
