Harnessing advanced AI, EasyDAM_V4 overcomes fruit shape and texture challenges, enabling faster, more accurate labeling for smart agriculture and boosting efficiency in orchard management.

Study: EasyDAM_V4: Guided-GAN-based cross-species data labeling for fruit detection with significant shape difference. Image Credit: Olga Mukashev / Shutterstock


Traditional fruit detection models rely on extensive manually labeled datasets. Producing them is labor-intensive and time-consuming, and the task becomes particularly difficult for fruits with diverse shapes, sizes, and textures. Real-world orchard environments add further complexity, as fruits are often densely packed, occluded by leaves, or captured under varying lighting conditions. These challenges underscore the need for an automated, intelligent labeling system that can handle substantial shape variations while improving detection generalization.

In response to these challenges, a research team from Beijing University of Technology and The University of Tokyo has developed EasyDAM_V4, a pioneering AI-driven labeling approach described in a study published in the journal Horticulture Research. Leveraging a Guided-GAN model, the method enables cross-species fruit image translation, significantly improving labeling accuracy even for fruits with large phenotypic variations. The study's results show substantial improvements in automated labeling, offering a powerful new tool for agricultural image processing.

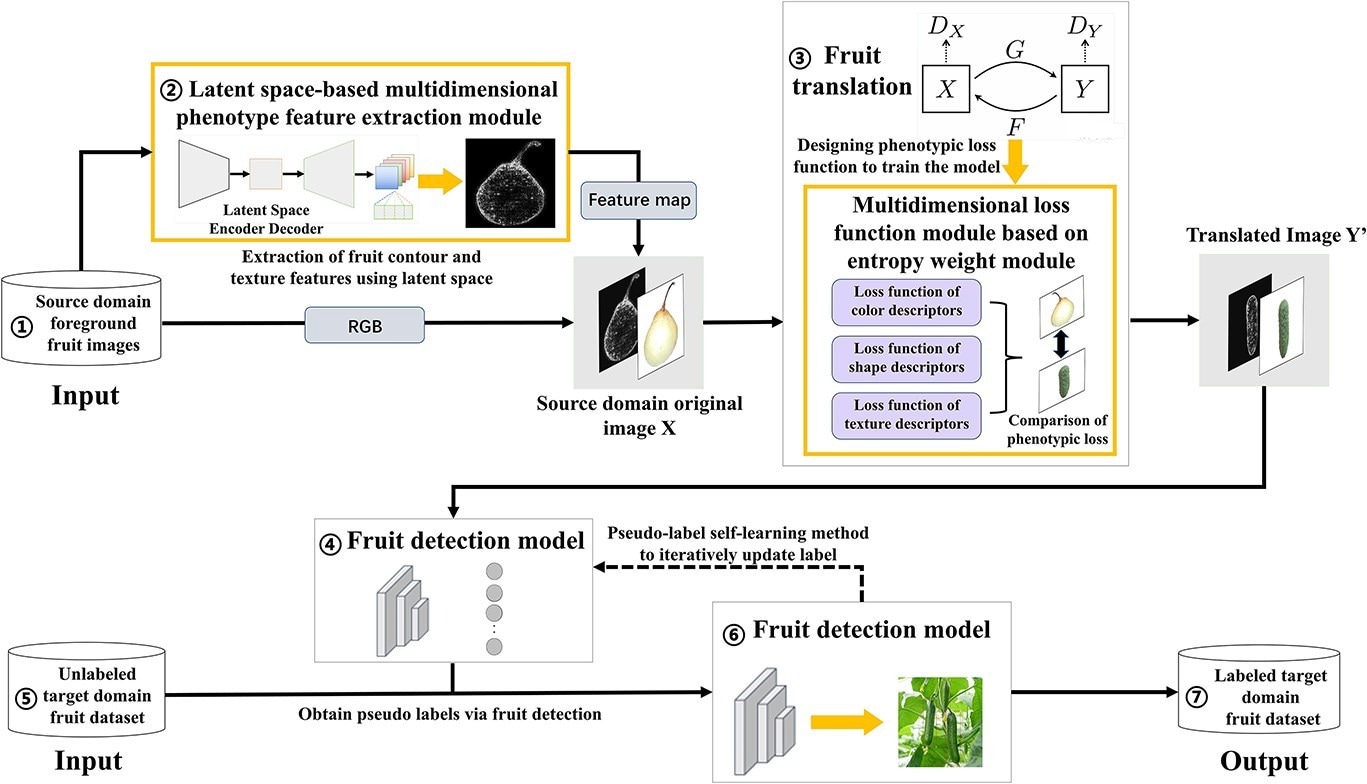

At the core of EasyDAM_V4 lies a multi-dimensional phenotypic feature extraction technique, which integrates deep learning and latent space modeling to enhance fruit image translation. The method employs a pre-trained VGG16 network to extract shape and texture features from fruit images; these feature maps are then fused with the original RGB images to enrich the input to the GAN model. A novel multi-dimensional loss function is also introduced, with separate loss components for shape, texture, and color features, weighted using an entropy-based adjustment strategy. This approach enables precise control over the generation of fruit features, overcoming previous limitations in handling large shape and texture variations across fruit species.

Overall architecture of the EasyDAM_V4 method. The method comprises seven main parts. The source-domain foreground fruit images (1) and the labeled target-domain fruit dataset (7) are the input and output of the method, respectively. Boxes (2) and (3) illustrate the paper's main innovations. (2) Shape and texture feature maps obtained with the latent-space-based multi-dimensional phenotypic feature extraction method; these are concatenated with the original RGB image, and the fruit translation model is trained on the combined input. (3) The multi-dimensional loss function module based on the entropy weight method, which is used to accurately describe the phenotypic features of fruits during training. Rectangles of different colors indicate fruit image translation results controlled by different categories of loss functions.
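The article does not say how the entropy weights for the shape, texture, and color losses are derived. The sketch below assumes the classic entropy weight method, with a window of recent loss values for each component serving as the "samples"; the window idea and the names `entropy_weights` and `combined_loss` are illustrative assumptions, not details taken from the EasyDAM_V4 paper.

```python
import math
from typing import Dict, Sequence


def entropy_weights(history: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Classic entropy weight method applied to loss components.

    Hypothetical setup (not from the paper): `history` maps each loss
    component, e.g. "shape", "texture", "color", to its last m recorded
    loss values, and these windows act as the m samples of the method.
    Components whose values vary more across the window have lower
    entropy and therefore receive larger weights.
    """
    if not history:
        raise ValueError("history must contain at least one component")
    names = list(history)
    m = len(history[names[0]])
    if m < 2 or any(len(history[n]) != m for n in names):
        raise ValueError("need at least 2 samples per component, all equal length")
    k = 1.0 / math.log(m)  # scales each entropy into [0, 1]

    divergence: Dict[str, float] = {}
    for name in names:
        values = history[name]
        if any(v < 0 for v in values):
            raise ValueError("loss values must be non-negative")
        total = sum(values)
        if total == 0:
            divergence[name] = 0.0  # an all-zero window carries no information
            continue
        entropy = 0.0
        for v in values:
            p = v / total
            if p > 0:  # 0 * log(0) is taken as 0
                entropy -= p * math.log(p)
        # Degree of divergence; clamp tiny negative round-off to zero.
        divergence[name] = max(0.0, 1.0 - k * entropy)

    total_div = sum(divergence.values())
    if total_div <= 1e-12:
        # Every window is (numerically) uniform: fall back to equal weights.
        return {name: 1.0 / len(names) for name in names}
    return {name: d / total_div for name, d in divergence.items()}


def combined_loss(current: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the current per-component losses."""
    return sum(weights[name] * current[name] for name in current)
```

In this hypothetical setup, a component whose loss is still changing noticeably across the window (low entropy) gets a large weight, while one that has plateaued gets almost none; the paper's actual sampling scheme and update schedule may differ.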

The effectiveness of EasyDAM_V4 was demonstrated using pear images as the source domain and pitaya, eggplant, and cucumber images as target domains. The model achieved labeling accuracies of 87.8% for pitaya, 87.0% for eggplant, and 80.7% for cucumber, significantly outperforming the existing methods it was compared against. The Guided-GAN model's ability to generate realistic fruit images with large phenotypic differences represents a major leap forward in automated dataset generation for agricultural AI.

Dr. Wenli Zhang of Beijing University of Technology, one of the study's lead researchers, emphasized the impact of this breakthrough: "EasyDAM_V4 represents a significant step toward full automation in fruit detection. By effectively addressing the challenges of shape variance and domain adaptation, this technology not only enhances labeling accuracy but also lays the groundwork for the next generation of agricultural AI and smart orchards."

The potential applications of EasyDAM_V4 extend far beyond fruit labeling. By streamlining dataset preparation, this innovation could accelerate the development of smart agriculture, enabling more accurate yield predictions, efficient robotic harvesting, and advanced phenotypic studies. Additionally, high-quality labeled datasets will support plant phenomics and breeding strategies, ultimately contributing to more sustainable and resilient agricultural systems.

As AI continues to reshape modern farming, EasyDAM_V4 stands at the forefront, changing how fruit detection models are built, trained, and deployed, one pixel at a time.

Journal reference:

- Zhang, W., Liu, Y., Wang, C., Zheng, C., Cui, G., & Guo, W. (2024). EasyDAM_V4: Guided-GAN-based cross-species data labeling for fruit detection with significant shape difference. Horticulture Research, 11(3). DOI: 10.1093/hr/uhae007, https://academic.oup.com/hr/article/11/3/uhae007/7515263