Researchers at FutureHouse Inc. (San Francisco), the University of Rochester (New York), and the Francis Crick Institute (London), working as a multidisciplinary team, highlighted the potential of language agents to automate complex tasks across diverse scientific fields.

Advancements in Language Agent Technology

The development of language agents represents a significant step in the field of artificial intelligence (AI). By leveraging large language models (LLMs), these agents can interpret natural language inputs and perform complex reasoning tasks, including multi-step reasoning and tool usage, which are essential in scientific applications.

Traditional AI systems built on predefined rules or reinforcement learning often struggle to generalize to new environments. LLMs, by contrast, generalize well zero-shot, adapting to unfamiliar tasks without task-specific training. However, because LLM outputs are sampled stochastically, an LLM used in isolation can produce flawed reasoning. The study argues that grounding LLMs in environments that return observational feedback mitigates this problem.

Aviary: A Framework for Training Language Agents on Scientific Tasks

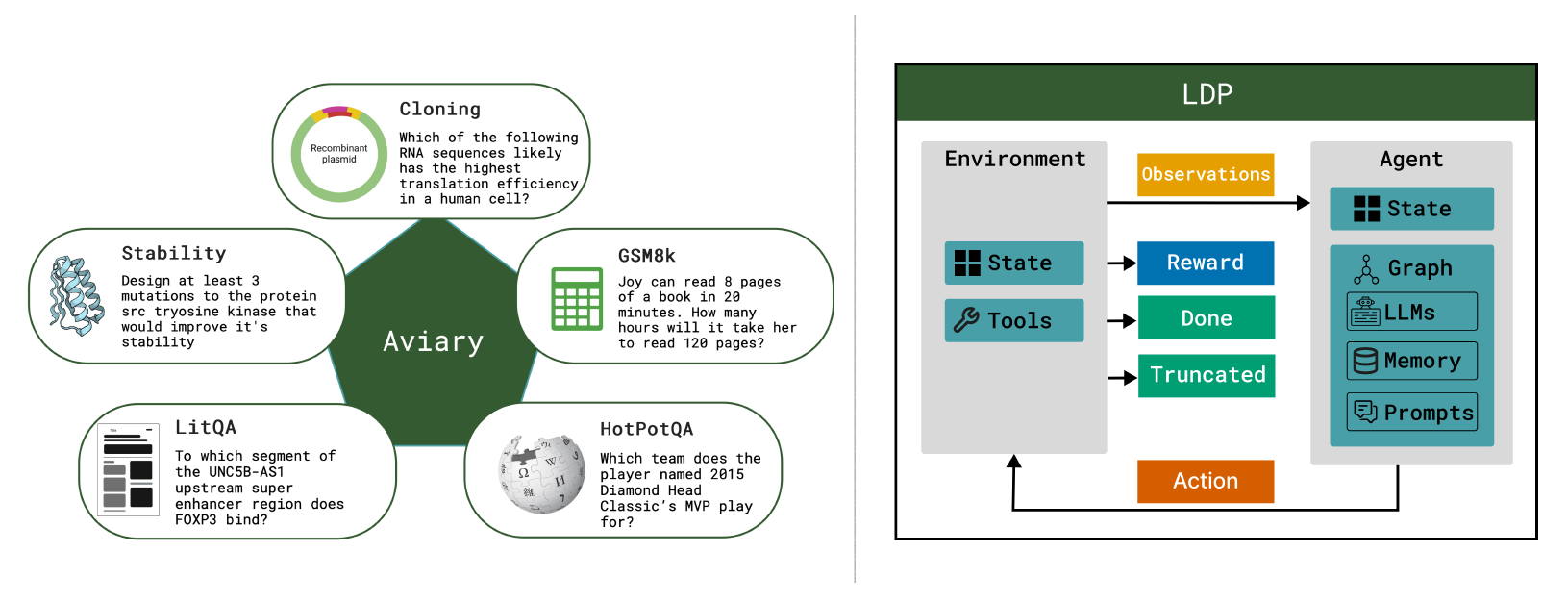

In this paper, the authors highlighted the importance of grounding LLMs in environments that provide observational feedback to address flawed reasoning. They introduced the Aviary framework to train and optimize language agents by formalizing them as policies to solve language-grounded partially observable Markov decision processes (POMDPs).
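To make the POMDP framing concrete, here is a minimal sketch of the agent-environment loop. Everything in it is a hypothetical toy: `GuessNumberEnv`, the `guess` tool, and `BisectionPolicy` are illustrative names, not Aviary's API, and a hand-written bisection rule stands in for an LLM policy. What carries over is the structure: the agent never observes the hidden state directly, acts only through tool calls, and receives text observations and a reward from the environment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolCall:
    """An action in a language decision process: a tool name plus its argument."""
    tool: str
    arg: str


@dataclass
class GuessNumberEnv:
    """Hypothetical toy environment with a hidden integer `target`.

    The agent never sees `target` (partial observability); it only receives
    the text observations returned by its tool calls.
    """
    target: int
    max_steps: int = 10
    steps: int = 0

    def reset(self) -> str:
        self.steps = 0
        return "Find the hidden integer in [0, 100]. Tool: guess(n)."

    def step(self, action: ToolCall) -> tuple:
        """Return (observation, reward, done) for one agent action."""
        self.steps += 1
        out_of_steps = self.steps >= self.max_steps
        if action.tool != "guess":
            return f"Unknown tool {action.tool!r}.", 0.0, out_of_steps
        guess = int(action.arg)
        if guess == self.target:
            return "Correct.", 1.0, True
        hint = "higher" if guess < self.target else "lower"
        return f"Try {hint}.", 0.0, out_of_steps


class BisectionPolicy:
    """Stand-in for an LLM policy: maps the latest text observation to a tool call."""

    def __init__(self) -> None:
        self.lo, self.hi = 0, 100
        self.last_guess: Optional[int] = None

    def act(self, observation: str) -> ToolCall:
        # Update the belief state from the feedback on the previous guess.
        if self.last_guess is not None:
            if "higher" in observation:
                self.lo = self.last_guess + 1
            elif "lower" in observation:
                self.hi = self.last_guess - 1
        self.last_guess = (self.lo + self.hi) // 2
        return ToolCall("guess", str(self.last_guess))


def rollout(env: GuessNumberEnv, policy: BisectionPolicy):
    """Run one episode; return the trajectory and the total reward."""
    observation = env.reset()
    trajectory, total_reward, done = [], 0.0, False
    while not done:
        action = policy.act(observation)
        observation, reward, done = env.step(action)
        trajectory.append((action, observation, reward))
        total_reward += reward
    return trajectory, total_reward


trajectory, ret = rollout(GuessNumberEnv(target=37), BisectionPolicy())
print(len(trajectory), ret)  # 3 1.0
```

Swapping `BisectionPolicy` for an LLM-backed policy would leave the environment untouched; that separation between agent and environment is the kind of structure a framework like Aviary is meant to formalize.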

Aviary includes five environments, three of which target challenging scientific tasks: molecular cloning, answering research questions from the scientific literature, and protein stability engineering. These three tasks were chosen because they require multi-step reasoning and are highly relevant to modern biological research.

An overview of the five implemented Aviary environments and the language decision process (LDP) framework. The term language decision process here jointly refers to our theoretical description of the class of problems solved by language agents, as well as a software framework for implementing language agents based on a stochastic computation graph that enables training of language agent components such as LLM weights, prompts, memories, or LLM sampling parameters like temperature.

The framework uses language decision processes (LDPs) as the foundational abstraction for these environments. The study also emphasizes its use of stochastic computation graphs, which allow components such as LLM weights, prompts, and sampling parameters to be optimized independently, enabling modular experimentation.

The study employed several methods to train and evaluate language agents within the Aviary framework. Expert iteration, which combines behavior cloning with iterative refinement through rejection sampling, played a central role in this process.
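Expert iteration can be illustrated with a toy, tabular stand-in for an LLM policy. This is a minimal sketch under stated assumptions: the three-step task, the hidden `SOLUTION`, the per-step categorical policy, and the smoothing constant are all hypothetical. In the study the policy is an LLM, and behavior cloning means fine-tuning its weights on the accepted trajectories rather than counting actions.

```python
import random
from collections import Counter

ACTIONS = ["a", "b", "c", "d"]
SOLUTION = ["c", "a", "d"]  # hypothetical correct action at each step


def sample_trajectory(policy, rng):
    # policy[t] maps each action to its probability at step t.
    return [rng.choices(ACTIONS, weights=[policy[t][a] for a in ACTIONS])[0]
            for t in range(len(SOLUTION))]


def reward(traj):
    # Sparse, binary reward: 1 only if every step matches the solution.
    return 1.0 if traj == SOLUTION else 0.0


def behavior_clone(accepted, smoothing=0.1):
    # Maximum-likelihood fit of a per-step categorical policy to the accepted
    # trajectories, with additive smoothing so no action gets probability zero.
    policy = []
    for t in range(len(SOLUTION)):
        counts = Counter(traj[t] for traj in accepted)
        total = sum(counts.values()) + smoothing * len(ACTIONS)
        policy.append({a: (counts[a] + smoothing) / total for a in ACTIONS})
    return policy


def expert_iteration(rounds=4, samples_per_round=500, seed=0):
    rng = random.Random(seed)
    policy = [{a: 1 / len(ACTIONS) for a in ACTIONS} for _ in SOLUTION]
    success_rates = []
    for _ in range(rounds):
        trajs = [sample_trajectory(policy, rng) for _ in range(samples_per_round)]
        # Rejection sampling: keep only trajectories that earned reward.
        accepted = [t for t in trajs if reward(t) > 0]
        success_rates.append(len(accepted) / samples_per_round)
        if accepted:
            # Behavior cloning on the filtered "expert" data.
            policy = behavior_clone(accepted)
    return policy, success_rates


policy, rates = expert_iteration()
print([round(r, 3) for r in rates])
```

Because the reward is sparse, the first round accepts only a handful of lucky trajectories, but cloning them shifts probability toward the successful actions, so later rounds accept far more samples. That bootstrapping effect is what expert iteration relies on.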

Additionally, the researchers explored scaling inference-time compute through majority voting, in which multiple trajectories are sampled and the most common answer is taken as the task's solution. They also incorporated tools and techniques such as retrieval-augmented generation (RAG), rejection sampling, and the ReAct framework to further optimize performance.
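Majority voting itself is simple. The sketch below shows it with a hypothetical stochastic agent (`noisy_agent`, correct 60% of the time) in place of sampled LLM trajectories; only each trajectory's final answer enters the vote. The names, answer labels, and probabilities are assumptions for illustration, not values from the study.

```python
import random
from collections import Counter
from typing import Callable, Hashable, List


def majority_vote(answers: List[Hashable]) -> Hashable:
    # Most common final answer; Counter.most_common breaks ties by first occurrence.
    return Counter(answers).most_common(1)[0][0]


def solve_with_voting(sample_answer: Callable[[random.Random], Hashable],
                      k: int, rng: random.Random) -> Hashable:
    # Inference-time scaling: draw k independent trajectories and keep only
    # each trajectory's final answer for the vote.
    return majority_vote([sample_answer(rng) for _ in range(k)])


def noisy_agent(rng: random.Random) -> str:
    # Hypothetical stochastic agent: answers "B" (correct) 60% of the time,
    # otherwise one of three distractors chosen uniformly.
    if rng.random() < 0.6:
        return "B"
    return rng.choice(["A", "C", "D"])


def estimate_accuracy(k: int, trials: int = 1000, seed: int = 0) -> float:
    rng = random.Random(seed)
    correct = sum(solve_with_voting(noisy_agent, k, rng) == "B" for _ in range(trials))
    return correct / trials


for k in (1, 5, 9):
    print(f"k={k}: accuracy ~ {estimate_accuracy(k):.3f}")
```

The cost of this gain is linear in k: each extra vote is another full trajectory, which is why it counts as scaling inference-time compute.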

Findings of Implementing Aviary Framework

The outcomes showed that language agents trained within the Aviary framework matched or sometimes exceeded the performance of human experts and frontier LLM agents on several tasks. For example, agents manipulated deoxyribonucleic acid (DNA) constructs in the molecular cloning environment, answered complex molecular biology questions, and solved tasks that required iterative, multi-step reasoning.

These achievements were evaluated on benchmarks such as SeqQA and LitQA2, where agents reached up to 72% accuracy. The agents achieved expert-level performance with far fewer computational resources, at up to 100 times lower inference cost.

These results highlight the efficiency and effectiveness of the Aviary framework in advancing language agents' capabilities in scientific research.

The authors also stressed the value of combining training methods to enhance agent performance. Expert iteration plays a key role: by repeatedly training on its own successful trajectories, an agent refines its decision-making over time, an approach that is critical for tasks requiring deep understanding and multi-step problem-solving.

Majority voting, which aggregates the answers from multiple sampled agent trajectories, improved accuracy across tasks by up to 20 percentage points on certain benchmarks, demonstrating the value of ensembling at inference time.
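Why aggregation helps can be seen in an idealized model. Assuming each sampled trajectory is independently correct with probability p and outcomes are binary (so a majority is either right or wrong), the accuracy of a k-sample vote is a binomial tail: P(majority correct) = sum over j > k/2 of C(k, j) p^j (1 - p)^(k - j). This is a textbook simplification, not the paper's analysis; real trajectories are correlated and can produce many distinct answers, which typically shrinks the gain.

```python
from math import comb


def majority_accuracy(p: float, k: int) -> float:
    """Probability that a strict majority of k independent samples is correct.

    Idealized binary model: each sample is correct with probability p,
    independently of the others. k must be odd so ties cannot occur.
    """
    if k % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    return sum(comb(k, j) * p**j * (1 - p) ** (k - j) for j in range(k // 2 + 1, k + 1))


# Voting amplifies a per-sample accuracy above 0.5 ...
print(round(majority_accuracy(0.6, 1), 4))  # 0.6
print(round(majority_accuracy(0.6, 5), 4))  # 0.6826
# ... but amplifies errors when per-sample accuracy is below 0.5.
print(round(majority_accuracy(0.4, 5), 4))  # 0.3174
```

The last line shows the flip side of the technique: voting only helps when individual samples are more likely right than wrong.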

Applications of Newly Developed System

This research has potential applications across diverse scientific domains, including genomics, drug discovery, and environmental science. Language agents trained with the Aviary framework could substantially improve the efficiency of tasks like molecular cloning, where precise DNA manipulation is essential.

Automating the design and execution of cloning experiments can streamline research timelines and minimize human error. By combining tools like annotation systems, primer design utilities, and DNA sequence databases, the agents effectively replicate expert workflows.
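To make the idea of agent-callable tools concrete, here is a minimal sketch of the kind of sequence utilities a cloning agent might call. The function names, the `TOOLS` registry, and the string-in, string-out convention are illustrative assumptions, not Aviary's actual tool set. The Wallace rule used for melting temperature is a rough textbook approximation for short oligonucleotides, not a production primer-design method.

```python
COMPLEMENT = str.maketrans("ACGTacgt", "TGCAtgca")


def reverse_complement(seq: str) -> str:
    # Complement each base, then reverse, giving the opposite strand 5'->3'.
    return seq.translate(COMPLEMENT)[::-1]


def gc_content(seq: str) -> float:
    # Fraction of G and C bases in the sequence.
    if not seq:
        raise ValueError("empty sequence")
    seq = seq.upper()
    return (seq.count("G") + seq.count("C")) / len(seq)


def wallace_tm(primer: str) -> int:
    # Wallace rule: Tm ~ 2*(A+T) + 4*(G+C) degrees C; a rough estimate
    # only meaningful for short oligonucleotides.
    p = primer.upper()
    return 2 * (p.count("A") + p.count("T")) + 4 * (p.count("G") + p.count("C"))


# Hypothetical tool registry: the agent emits a tool name plus arguments,
# and the environment dispatches the call and returns text as the observation.
TOOLS = {
    "reverse_complement": reverse_complement,
    "gc_content": gc_content,
    "wallace_tm": wallace_tm,
}


def call_tool(name: str, *args) -> str:
    if name not in TOOLS:
        return f"Error: unknown tool {name!r}"
    return str(TOOLS[name](*args))


print(call_tool("reverse_complement", "ATGCGT"))  # ACGCAT
print(call_tool("wallace_tm", "ATGCGTACGT"))      # 30
print(call_tool("gc_content", "ATGCGTACGT"))      # 0.5
```

Returning plain text from every tool keeps the interface uniform, so the agent can treat each result as just another observation.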

The framework's use in scientific literature question-answering could enable faster and more effective literature reviews and data synthesis. This allows scientists to quickly access relevant information and insights, which is especially valuable in fast-paced research settings where timely access to knowledge can shape the direction of investigations.

Furthermore, the study has implications for protein engineering, where language agents can help design mutations that improve protein stability. By combining biophysical modeling tools like Rosetta with language decision processes, such agents could contribute to advances in enzyme engineering and therapeutic design.
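A stability-engineering agent must at minimum propose mutations in a form that a scoring tool can consume. The sketch below assumes the common `A4G` notation (wild-type residue, 1-based position, mutant residue); the function names and the example sequence are hypothetical, and the stability scoring itself, which would be delegated to a biophysical tool, is not shown.

```python
import re

AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")
MUTATION = re.compile(r"^([A-Z])(\d+)([A-Z])$")


def apply_mutation(sequence: str, mutation: str) -> str:
    """Apply a point mutation written as <wild-type><1-based position><mutant>."""
    m = MUTATION.match(mutation)
    if m is None:
        raise ValueError(f"malformed mutation {mutation!r}")
    wt, pos, mut = m.group(1), int(m.group(2)), m.group(3)
    if wt not in AMINO_ACIDS or mut not in AMINO_ACIDS:
        raise ValueError(f"non-standard residue in {mutation!r}")
    if not 1 <= pos <= len(sequence):
        raise ValueError(f"position {pos} outside sequence of length {len(sequence)}")
    # Reject proposals whose stated wild-type residue does not match the sequence.
    if sequence[pos - 1] != wt:
        raise ValueError(f"expected {wt} at position {pos}, found {sequence[pos - 1]}")
    return sequence[: pos - 1] + mut + sequence[pos:]


def apply_mutations(sequence: str, mutations: list) -> str:
    # Substitutions do not shift positions, so numbering stays relative to the input.
    for mutation in mutations:
        sequence = apply_mutation(sequence, mutation)
    return sequence


print(apply_mutation("MKTAYIAK", "A4G"))            # MKTGYIAK
print(apply_mutations("MKTAYIAK", ["A4G", "K8R"]))  # MKTGYIAR
```

Validating the wild-type residue catches a common failure mode of generated proposals (an off-by-one position or a hallucinated residue) before any expensive scoring is run.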

Conclusion and Future Directions

In summary, the new platform represents a significant step in developing language agents for scientific applications. It provides a structured environment for training agents and shows that such agents can match or exceed frontier LLM agents and human experts on several complex tasks.

The findings emphasize the importance of integrating observational feedback and iterative training to enhance performance. The research also underscores the efficiency of smaller models, such as Llama-3.1-8B-Instruct, which matched or exceeded human-level performance at far lower costs.

The framework is open source: the authors released GitHub repositories for Aviary and LDP to encourage broader experimentation and application, which could foster collaboration and innovation across diverse scientific fields.

Future work should focus on expanding Aviary’s capabilities by incorporating more complex environments and additional tools. Continued progress in language agent development could transform scientific research across many domains.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report. Narayanan, S., et al. Aviary: training language agents on challenging scientific tasks. arXiv, 2024. arXiv:2412.21154. DOI: 10.48550/arXiv.2412.21154, https://arxiv.org/abs/2412.21154