Advancements in Video Generation Technology

Diffusion models have emerged as a leading technique in generative modeling. They outperform traditional methods, such as variational autoencoders (VAEs) and generative adversarial networks (GANs) in image and video synthesis. These models work by gradually denoising random noise into coherent outputs, enabling the generation of complex data distributions. However, recent advancements in DiTs have introduced significant computational challenges, resulting in slower inference speeds due to their large architectures and complex attention mechanisms.

AdaCache was introduced to address these challenges by recognizing that not all videos require the same computational resources. Using a pre-defined "codebook of basis cache-rates," AdaCache optimizes denoising by tailoring computations to each video's unique content complexity. This approach improves speed and quality without requiring extensive retraining of the underlying models.

AdaCache-Based Novel Methodology

In this paper, the authors developed a training-free method to speed up video generation using DiTs. The proposed AdaCache technique is based on the idea that certain videos can achieve satisfactory quality with fewer denoising steps. A key innovation is the use of a distance metric (e.g., L1 or L2) to measure the rate of change between previously computed and current representations, determining when to cache or recompute. This is accomplished through a caching mechanism that stores computations from previous diffusion steps, allowing them to be reused in subsequent steps. The study designed a content-dependent caching schedule that adapts to the specific characteristics of each video being generated.

To implement this framework, the researchers introduced a motion regularization (MoReg) scheme that allocates computational resources based on the motion content within the video. This approach optimizes compute allocation, ensuring that sequences with higher motion complexity receive the necessary processing while more straightforward sequences benefit from reduced computational overhead. MoReg achieves this by estimating a "motion score" and "motion gradient," which are derived from latent frame differences during the diffusion process. These metrics allow real-time adjustments to caching schedules, even in the early stages of video generation.

Not all videos are created equal: We show frames from 720p - 2s video generations based on Open-Sora (Zheng et al., 2024). We try to break each generation by reducing the number of diffusion steps. Interestingly, not all videos have the same break point. Some sequences are extremely robust (e.g. first-two columns), while others break easily. (Right) When we plot the difference between computed representations in subsequent diffusion steps, we see unique variations (Feature distance vs. #steps). If we are to reuse similar representations, it needs to be tailored to each video. Both these observations suggest the need for a content-dependent denoising process, which is the founding motivation of Adaptive Caching.

Impact of Using AdaCache Technique

The outcomes showed that AdaCache significantly boosted inference speeds, with improvements of up to 4.7× in Open-Sora 720p video generation, while maintaining quality comparable to existing methods. This performance gain was achieved without retraining, making AdaCache an attractive solution for practitioners in generative modeling.

The authors conducted extensive experiments across multiple video DiT baselines, including Open-Sora, to validate the effectiveness of AdaCache. Inference speedups and quality preservation were measured using various metrics, including video benchmark (VBench), peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM), and learned perceptual image patch similarity (LPIPS). AdaCache consistently outperformed competing methods like PAB and ∆-DiT, achieving the best speedup-quality balance. These metrics provided a comprehensive evaluation of the generated videos' speed and quality.

The study demonstrated that the caching schedule effectively reduces the required computations based on each video's unique characteristics. For example, videos containing static or straightforward content require fewer denoising steps, whereas those with high-frequency textures or significant motion benefit from more intensive processing. The MoReg scheme further optimized this by adjusting computational allocation according to the observed motion, thereby improving the quality-latency trade-off.

Additionally, user studies indicated a strong preference for AdaCache-generated videos over those produced by traditional methods, highlighting the framework's practical relevance and acceptance. In randomized A/B tests, 70% of participants favored AdaCache over PAB for perceived quality, further validating its human-aligned performance. The results emphasized AdaCache's potential as a plug-and-play solution that can be easily integrated into existing video generation pipelines.

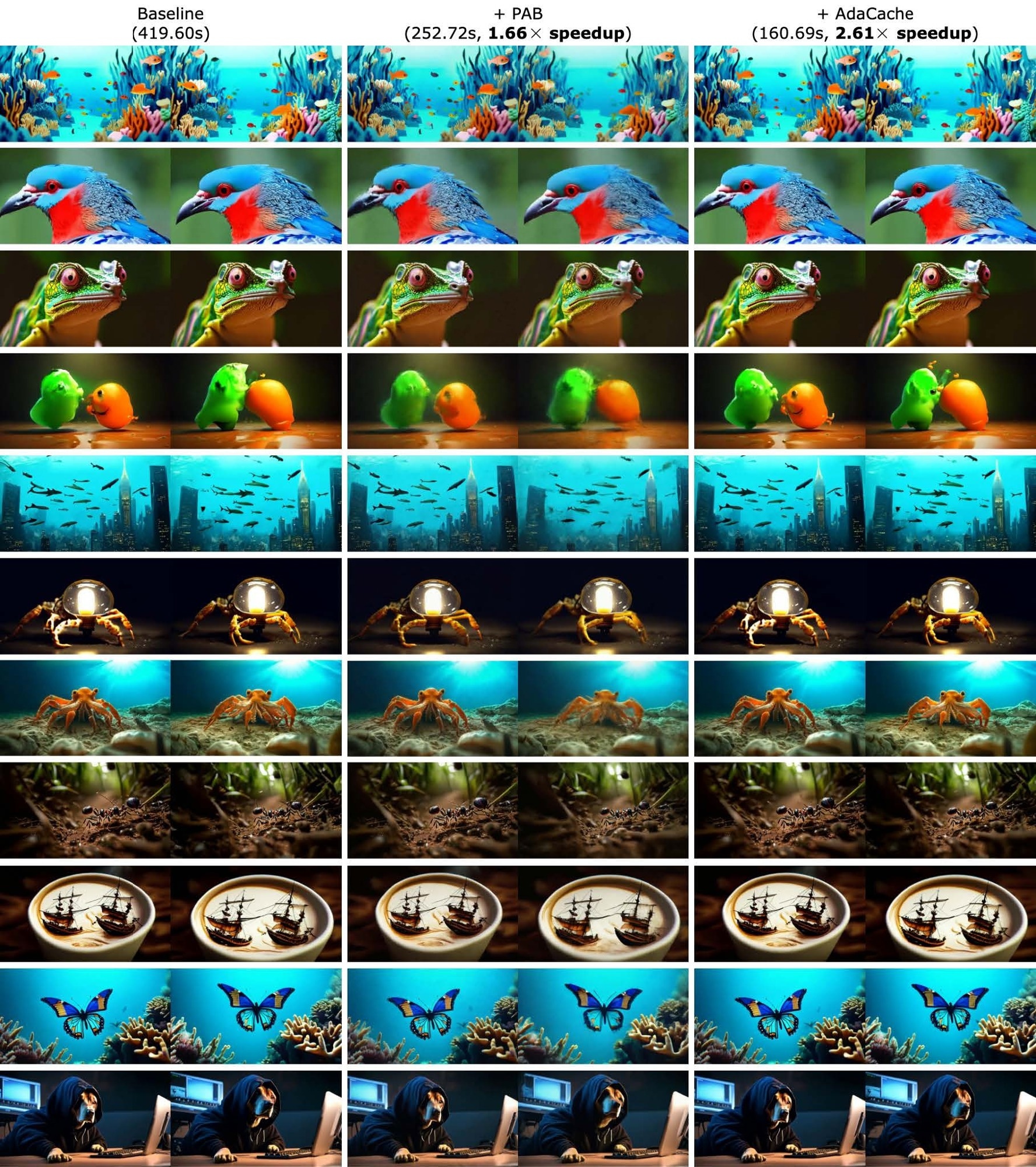

Additional qualitative comparisons with prior-art: We show qualitative comparisons with prior-art on baseline Open-Sora (Zheng et al., 2024) (720p - 2s at 100-steps). Here, we evaluate against prior training-free inference acceleration method PAB (Zhao et al., 2024c) at a comparable speedup. AdaCache consistently shows a better generation quality.

Practical Applications of Adaptive Caching

This research has significant implications for various industries. In sectors like entertainment, gaming, and virtual reality, the ability to generate high-quality videos quickly can enhance user experiences and streamline production workflows. For example, faster video generation can improve the production of animated films, video games, and virtual reality experiences, where high-quality content is essential. Companies can use this technology in advertising and marketing to create dynamic promotional videos that engage audiences in real-time.

AdaCache's training-free design makes it suitable for resource-constrained environments, benefiting smaller studios and independent creators. Furthermore, efficiently generating high-quality videos could support sectors like education and training, where engaging visual content is crucial for effective learning. The ability to tailor computational resources dynamically also makes it versatile across varying content types and resolutions. By reducing computational demands, AdaCache could make advanced video creation tools more accessible.

Conclusion

In summary, the AdaCache-based technique represents a significant advancement in video generation. Its ability to optimize computational resources while maintaining high-quality outputs marks a crucial step forward in addressing the challenges posed by traditional diffusion models. The integration of MoReg enhances its scalability, ensuring consistent quality across dynamic content types. The researchers highlighted AdaCache's potential to democratize access to high-quality video generation for various applications.

As the demand for efficient video generation grows, future work could explore AdaCache's scalability across different video resolutions and content types. Additionally, investigating its integration with emerging AI and machine learning technologies could lead to even more innovative solutions for video generation. Overall, this research not only contributes to academic discourse but also has the potential to revolutionize video content creation.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Kahatapitiya, K., Liu, H., He, S., Liu, D., Jia, M., Zhang, C., Ryoo, M. S., & Xie, T. (2024). Adaptive Caching for Faster Video Generation with Diffusion Transformers. ArXiv. https://arxiv.org/abs/2411.02397