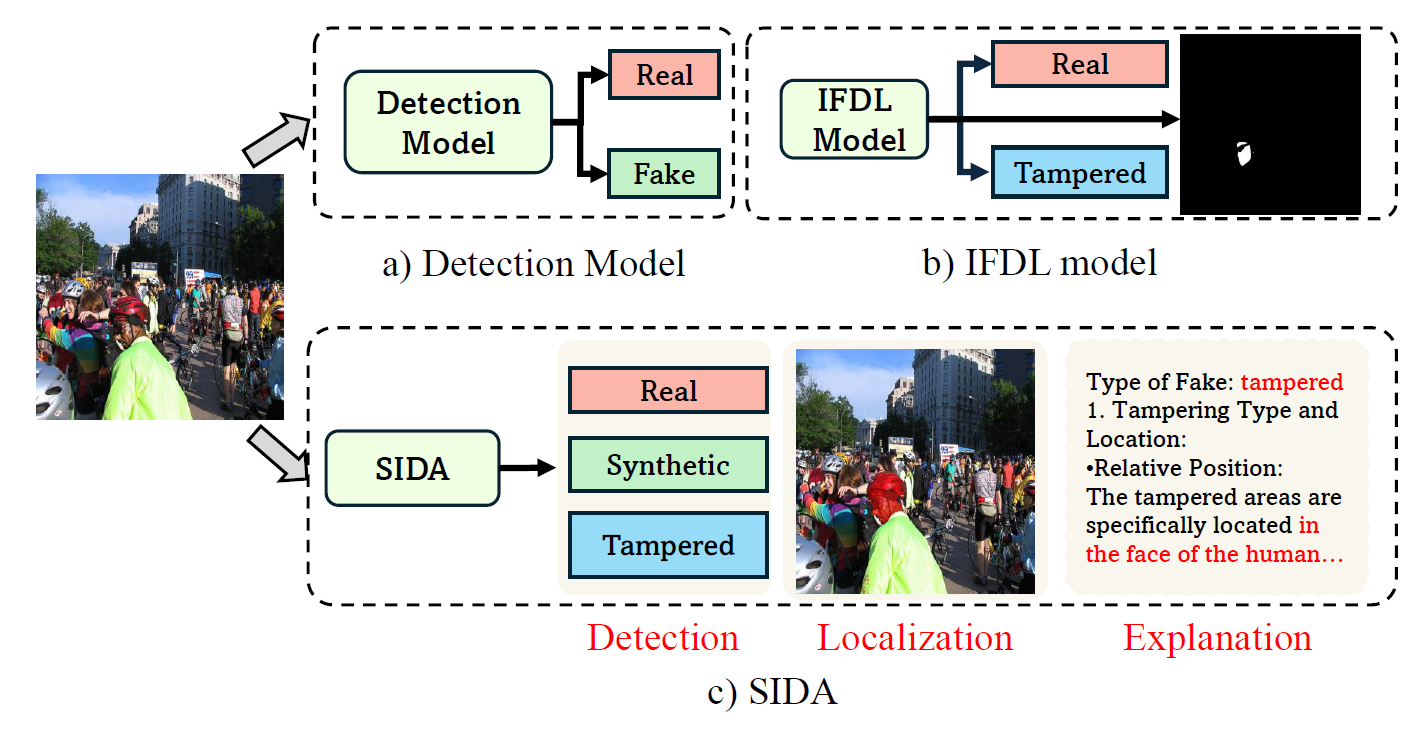

Framework comparison. Existing deepfake methods (a-b) are limited to detection, localization, or both. In contrast, SIDA (c) handles all three tasks: detection, localization, and explanation.

Advancements in Generative Technology

The rise of generative artificial intelligence (AI) has revolutionized image creation, enabling the production of highly realistic synthetic images that can easily mislead viewers. Recent advances in deep learning, particularly in generative adversarial networks (GANs) and diffusion models, have made these images nearly indistinguishable from real photographs. While these innovations unlock creative possibilities, they also bring significant risks, such as misuse to produce misleading content that can distort public perception and undermine trust in digital media. This concern has highlighted the need for reliable detection methods to combat the spread of deepfake content.

SID-SET and SIDA: Introducing Novel Dataset and Framework

In the paper, the authors present SID-Set, a dataset of 300,000 images divided equally into 100,000 real images, 100,000 fully synthetic images, and 100,000 tampered images. The tampered images include both object-level and part-level manipulations. The dataset is annotated in detail, which makes it a rich resource for training deepfake detection models. The goal was to overcome the limitations of existing datasets, which often lack diversity and comprehensive annotations, by creating a dataset that reflects the complexities of real-world social media content.

The study also proposed SIDA, a novel framework leveraging large vision-language models (VLMs) to perform three critical tasks: detecting the authenticity of images, localizing tampered regions, and providing textual explanations for their judgments. The framework incorporates an attention module, which enhances its ability to detect and pinpoint tampered areas more accurately. SIDA extends the capabilities of traditional detection models by incorporating advanced multimodal techniques that allow for a more nuanced understanding of image content and manipulation.

The methodological approach involves several stages. Firstly, the dataset was generated using state-of-the-art generative models such as FLUX, ensuring high-quality synthetic images. Secondly, the image tampering process was carefully designed to create realistic alterations, allowing the model to learn from both fully synthetic and manipulated images. The architecture of SIDA integrates new tokens specifically for detection and segmentation tasks, enhancing the model's ability to discern and explain manipulations effectively.
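The three tasks described above (authenticity detection, tampered-region localization, and textual explanation) can be pictured as one combined result per image. The following is a minimal sketch of such a result container, not the authors' implementation: the class name `SidaStyleResult`, the helper `build_result`, and the label strings are hypothetical names chosen for illustration, and the rule that only tampered images carry a mask is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical label names mirroring the three image classes in SID-Set.
LABELS = ("real", "synthetic", "tampered")


@dataclass
class SidaStyleResult:
    """Illustrative container for a detect/localize/explain output."""
    label: str                          # one of LABELS
    mask: Optional[List[List[int]]]     # binary mask, 1 = tampered pixel
    explanation: str                    # free-text justification


def build_result(label: str,
                 mask: Optional[List[List[int]]],
                 explanation: str) -> SidaStyleResult:
    """Validate the pieces and assemble a result.

    Assumption of this sketch: a localization mask is only meaningful
    for tampered images, so it is dropped for the other two classes.
    """
    if label not in LABELS:
        raise ValueError(f"unknown label: {label!r}")
    if label != "tampered":
        mask = None
    elif mask is None:
        raise ValueError("a tampered result needs a localization mask")
    return SidaStyleResult(label=label, mask=mask, explanation=explanation)


if __name__ == "__main__":
    result = build_result(
        "tampered",
        [[0, 1], [0, 1]],
        "The right half of the image shows inconsistent lighting.",
    )
    print(result.label, result.mask)
```

Keeping the label, mask, and explanation in one object makes it straightforward for downstream code to check that a localization is present only when the detection verdict calls for one.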

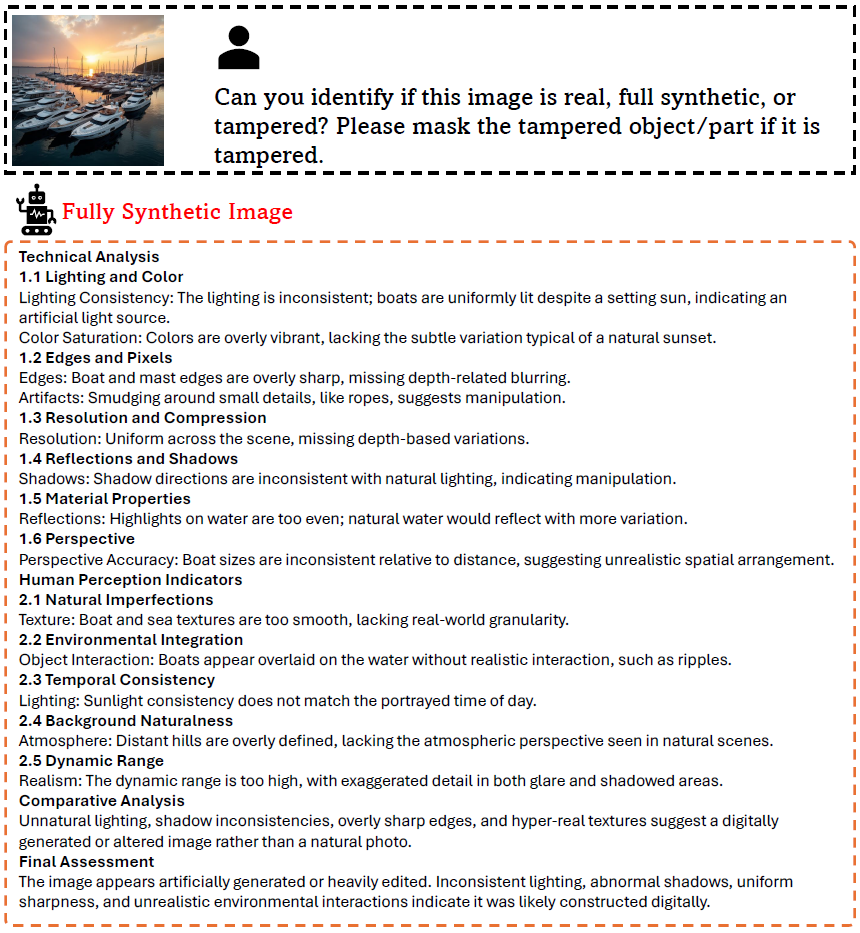

Example output from SIDA when analyzing a fully synthetic image.

Findings of Using Presented Dataset and Framework

The outcomes demonstrated that SIDA outperformed existing state-of-the-art deepfake detection models across various benchmarks. Specifically, it achieved an accuracy of 97.3% in detecting tampered images. This level of accuracy highlights the framework’s robustness in identifying subtle manipulations that other models might overlook. Additionally, it provides transparency in its decision-making process by generating detailed explanations for its outputs, enhancing interpretability, an important feature for users and stakeholders concerned about the implications of deepfake technology.

The experiments conducted showed that SIDA is not only effective in detecting deepfakes but also excels in localizing tampered regions within images. This is crucial for applications in law enforcement, media verification, and digital forensics, where understanding the extent and nature of manipulations can inform appropriate responses and actions.
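This article does not say which metric was used to score localization. A common choice for comparing a predicted tampered-region mask with a ground-truth mask is intersection over union (IoU), so the sketch below assumes that metric purely for illustration. The function name `mask_iou` and the nested-list mask format are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = tampered pixel, 0 = untouched


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two binary masks of equal shape."""
    if len(pred) != len(truth) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(pred, truth)
    ):
        raise ValueError("masks must have the same shape")

    intersection = 0
    union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1

    # Two empty masks agree perfectly; treat that case as IoU = 1.0.
    return intersection / union if union else 1.0


if __name__ == "__main__":
    predicted = [[0, 1, 1],
                 [0, 1, 0]]
    ground_truth = [[0, 1, 0],
                    [0, 1, 1]]
    # 2 pixels overlap, 4 pixels are marked in at least one mask.
    print(mask_iou(predicted, ground_truth))  # 0.5
```

An IoU of 1.0 means the predicted region matches the annotated region exactly, while values near 0 mean the model flagged a largely different area.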

Furthermore, the study highlighted the importance of dataset diversity in training robust detection models. By incorporating a wide range of image types and manipulation techniques, SID-Set enables SIDA to generalize better across different scenarios, making it a valuable tool for addressing the growing challenges posed by deepfake technology.

Applications

This research has significant real-world implications beyond academia. The SID-Set dataset and SIDA framework can be a critical resource for researchers and developers working on deepfake detection technologies. By providing a comprehensive and diverse dataset for training and evaluating detection models, SID-Set has the potential to enhance the reliability of systems designed to combat misinformation on social media platforms.

With its ability to detect and localize tampered regions, SIDA can be deployed across various sectors, including social media platforms, news organizations, and law enforcement agencies, to counter misinformation and preserve the integrity of digital content. The framework’s capacity to offer real-time detection and detailed explanations of deepfakes can help stakeholders address the growing challenges of deceptive media.

Conclusion and Future Directions

In summary, SID-Set and SIDA significantly advance deepfake detection, localization, and explanation. They can potentially address challenges posed by synthetic media that can mislead audiences. As the digital landscape evolves, these insights will be crucial for safeguarding the integrity of visual content across platforms.

Future work should focus on expanding the SID-Set dataset to include even more diverse scenarios and image types, further improving the robustness of detection models. Integrating advanced detection methods into practical applications will be crucial for maintaining trust in digital content and mitigating the potential harms of misinformation. Additionally, exploring the integration of SIDA with existing content moderation systems could enhance the efficacy of misinformation detection on social media platforms.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.



Journal reference:

- Preliminary scientific report.

Huang, Z., et al. SIDA: Social Media Image Deepfake Detection, Localization, and Explanation with Large Multimodal Model. arXiv, 2024, 2412.04292. DOI: 10.48550/arXiv.2412.04292, https://arxiv.org/abs/2412.04292