Discover how Genie 2 turns simple images into immersive, dynamic virtual worlds, revolutionizing AI training and interactive environment creation.

Image Credit: Google DeepMind

In an article published on the Google DeepMind blog, researchers introduced Genie 2, a foundation world model that generates diverse three-dimensional (3D) training environments for artificial intelligence (AI) agents from a single prompt image. These environments are action-controllable and support training with measurable progress, addressing the lack of rich, diverse environments for general agent development. Because the generated scenarios stay consistent and realistic, Genie 2 can supply an effectively unlimited curriculum for AI training and opens new workflows for prototyping interactive experiences, advancing embodied AI capabilities.

Background

Games have long been integral to advancing AI, offering controlled environments in which to train and test agents. However, the lack of rich, diverse, and scalable training environments has limited the development of general embodied agents. To address this, researchers introduced Genie 2, a foundation world model capable of generating action-controllable 3D environments from a single image prompt. It builds on its predecessor, Genie 1, by extending capabilities from 2D to richly detailed 3D environments, supporting both open-ended curriculum creation for AI training and creative workflows for prototyping interactive experiences.

Capabilities of Genie 2

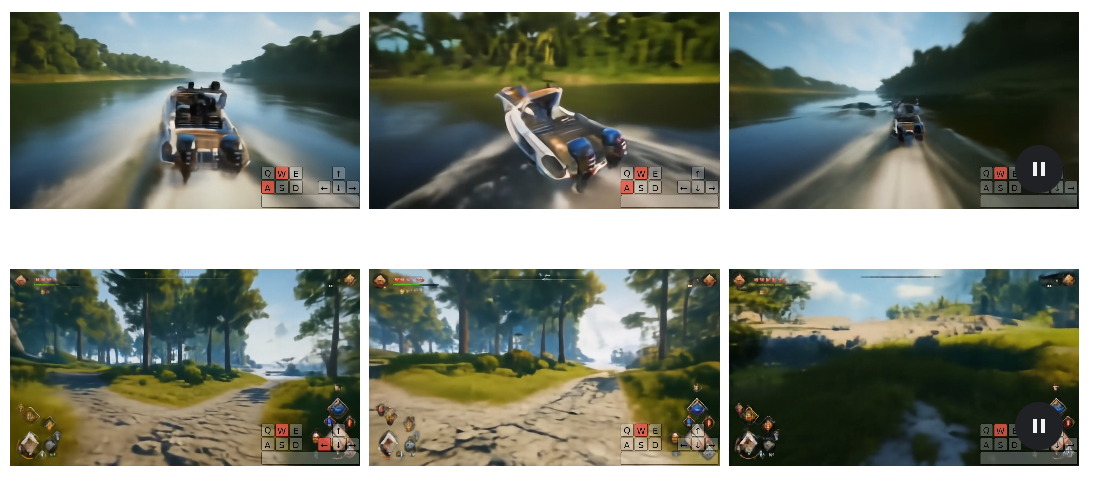

Genie 2 is a world model that generates diverse, interactive 3D environments from single image prompts, turning a static image into an immersive space that responds to user actions. Trained on a large-scale video dataset, it demonstrates emergent capabilities, including realistic physics, character animation, object affordances, and interactions with non-player characters (NPCs). Genie 2 supports counterfactual simulations, long-horizon memory, and consistent world generation for up to a minute, allowing users to explore unique scenarios through keyboard and mouse inputs. Because different action sequences can be played out from the same starting frame, a single prompt yields many distinct trajectories, which makes the model useful for counterfactual training.

Its ability to create diverse perspectives, such as first-person or isometric views, and simulate elements like gravity, lighting, reflections, and fluid dynamics enhances its realism. Genie 2 also enables rapid prototyping of interactive environments, turning concept art and real-world images into playable, functional worlds. For instance, concept art featuring fantastical environments can now be directly converted into dynamic scenarios for training. By fostering creativity and enabling extensive training scenarios, Genie 2 accelerates AI development, providing a powerful tool for researchers, artists, and designers in environment creation and embodied AI training.

Deploying Agents in the World Model

Genie 2 enables the creation of rich, diverse environments for testing and training AI agents. Using a single image prompt, researchers generate novel 3D worlds, allowing agents such as the Scalable Instructable Multiworld Agent (SIMA) to perform tasks in previously unseen scenarios. SIMA, developed in collaboration with game developers, follows natural-language instructions, such as opening a door or exploring a specific area, and acts on them through keyboard and mouse inputs, while Genie 2 generates the resulting frames.

For instance, Genie 2 generated a 3D scene with two houses, where SIMA successfully executed commands to open the red and blue doors. Another test involved exploring consistency by directing SIMA to navigate behind the house, confirming Genie 2's ability to maintain coherent environments. These interactions illustrate the potential of Genie 2 to serve as a testing ground for diverse and generalizable AI tasks.
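The interaction described above, in which an agent receives an instruction and the current frame, emits an input, and the world model produces the next frame, can be illustrated with a minimal sketch. Everything here is a hypothetical stand-in: `ToyWorldModel`, `toy_agent`, and the action names are invented for illustration and are not part of Genie 2 or SIMA, whose interfaces the article does not describe.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

Frame = Dict[str, Any]


def _initial_frame() -> Frame:
    # A toy "frame": a symbolic state rather than rendered pixels (assumption).
    return {"agent_x": 0, "red_door_x": 3, "red_door_open": False}


@dataclass
class ToyWorldModel:
    """Hypothetical stand-in for a world model: maps (frame, action) to the next frame."""
    frame: Frame = field(default_factory=_initial_frame)

    def step(self, action: str) -> Frame:
        frame = dict(self.frame)
        if action == "move_right":
            frame["agent_x"] += 1
        elif action == "interact" and frame["agent_x"] == frame["red_door_x"]:
            frame["red_door_open"] = True
        self.frame = frame
        return frame


def toy_agent(instruction: str, frame: Frame) -> str:
    """Hypothetical policy: turns an instruction plus the current frame into one action."""
    if instruction == "open the red door":
        if frame["agent_x"] < frame["red_door_x"]:
            return "move_right"
        return "interact"
    return "noop"


def run_episode(instruction: str, max_steps: int = 10) -> Tuple[List[str], Frame]:
    """Alternate between agent and world model until the task is done or steps run out."""
    world = ToyWorldModel()
    frame = world.frame
    actions: List[str] = []
    for _ in range(max_steps):
        if frame["red_door_open"]:
            break
        action = toy_agent(instruction, frame)
        actions.append(action)
        frame = world.step(action)
    return actions, frame


if __name__ == "__main__":
    actions, final_frame = run_episode("open the red door")
    print(actions, final_frame["red_door_open"])
```

The point of the sketch is only the control flow: the agent never touches the world state directly, and the world model never sees the instruction, so either side can be swapped out independently.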

This approach addresses the challenge of training embodied agents safely while broadening the range of situations they can handle. Although still evolving, Genie 2's ability to synthesize interactive environments is a step toward more general AI capabilities and, ultimately, artificial general intelligence (AGI). The research team envisions ongoing refinements to improve both world consistency and long-term interactions.

Model Architecture and Development

Genie 2 is an autoregressive latent diffusion model trained on a large video dataset. Video frames are first compressed into latents by an autoencoder and then passed to a transformer dynamics model similar to those used in large language models. At inference time, the model generates the environment frame by frame, conditioning each new frame on past latent frames and using classifier-free guidance to improve action control. While the undistilled base model showcases the approach's full potential, a distilled version supports real-time interaction at reduced output quality. This trade-off between output quality and interactivity highlights the practical considerations for deploying the model in live applications.
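To make the two mechanisms named above concrete, the following is a minimal sketch of classifier-free guidance inside an autoregressive rollout. It is a toy under stated assumptions, not DeepMind's implementation: `toy_denoiser` is a hypothetical stand-in for the learned dynamics model, latents are short lists of floats, and guidance is applied once per frame, whereas a real latent diffusion model would apply it at every denoising step. The standard guidance rule combines an action-free prediction $u$ and an action-conditioned prediction $c$ as $u + w\,(c - u)$, where $w$ is the guidance scale.

```python
from typing import List, Optional, Sequence

Vector = List[float]


def cfg_combine(uncond: Sequence[float], cond: Sequence[float],
                guidance_scale: float) -> Vector:
    """Classifier-free guidance: move from the unconditional prediction
    toward (and, for scale > 1, past) the conditional one."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


def toy_denoiser(history: Sequence[Vector],
                 action: Optional[Sequence[float]]) -> Vector:
    """Hypothetical stand-in for a learned dynamics model.

    Predicts the next latent from the most recent one; passing
    action=None mimics dropping the action conditioning."""
    drift = [0.9 * x for x in history[-1]]
    if action is None:
        return drift
    return [d + a for d, a in zip(drift, action)]


def rollout(first_latent: Sequence[float],
            actions: Sequence[Sequence[float]],
            guidance_scale: float,
            context: int = 4) -> List[Vector]:
    """Autoregressive generation: each new latent is predicted from a
    window of past latents plus the current action, then appended."""
    latents: List[Vector] = [list(first_latent)]
    for action in actions:
        history = latents[-context:]
        uncond = toy_denoiser(history, None)
        cond = toy_denoiser(history, action)
        latents.append(cfg_combine(uncond, cond, guidance_scale))
    return latents


if __name__ == "__main__":
    start = [1.0, 0.0]
    moves = [[1.0, 0.0], [0.0, 1.0]]
    for scale in (1.0, 2.0):
        print(scale, rollout(start, moves, scale)[-1])
```

With a guidance scale of 1 the combined prediction equals the conditional one; larger scales exaggerate the difference the action makes, which is the sense in which guidance can strengthen action control.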

Genie 2 highlights the promise of foundation world models for creating diverse, controllable environments and advancing AI research. Its applications, including training agents like SIMA, aim to develop general AI systems capable of safely performing diverse tasks in virtual and real-world contexts. Although the work is in its early stages, Genie 2 represents a step toward more general, responsible AI development, with ongoing improvements focused on consistency and generality.

Conclusion

Genie 2 represents a significant step in AI research, providing a foundation world model capable of generating diverse, action-controllable 3D environments from single image prompts. It addresses the longstanding bottleneck of limited and static training environments, enabling a practically unlimited supply of dynamic scenarios for training and testing embodied AI agents. Its ability to simulate physics and interactions consistently over short horizons makes it a valuable tool for AI experimentation.

Additionally, Genie 2 accelerates the prototyping of creative, interactive experiences by transforming concept art and real-world images into functional environments. It supports applications such as agent training, where systems like SIMA can perform complex tasks and adapt to unseen scenarios. Built as an autoregressive latent diffusion model, Genie 2 pairs this architecture with practical utility, paving the way for safer, more general AI development. As researchers continue to refine its capabilities, Genie 2 holds promise for both AI training and interactive experience design.