SAMURAI incorporated temporal motion cues and a motion-aware memory selection mechanism to improve tracking in crowded and dynamic scenes. It demonstrated robust zero-shot performance, with significant gains in tracking precision and success rate over existing methods.

The model achieved a 7.1% improvement in AUC on LaSOText and a 3.5% AO gain on GOT-10k, showcasing its strong generalization ability. Evaluations showed competitive results compared to fully supervised trackers, highlighting SAMURAI's effectiveness in complex tracking scenarios.

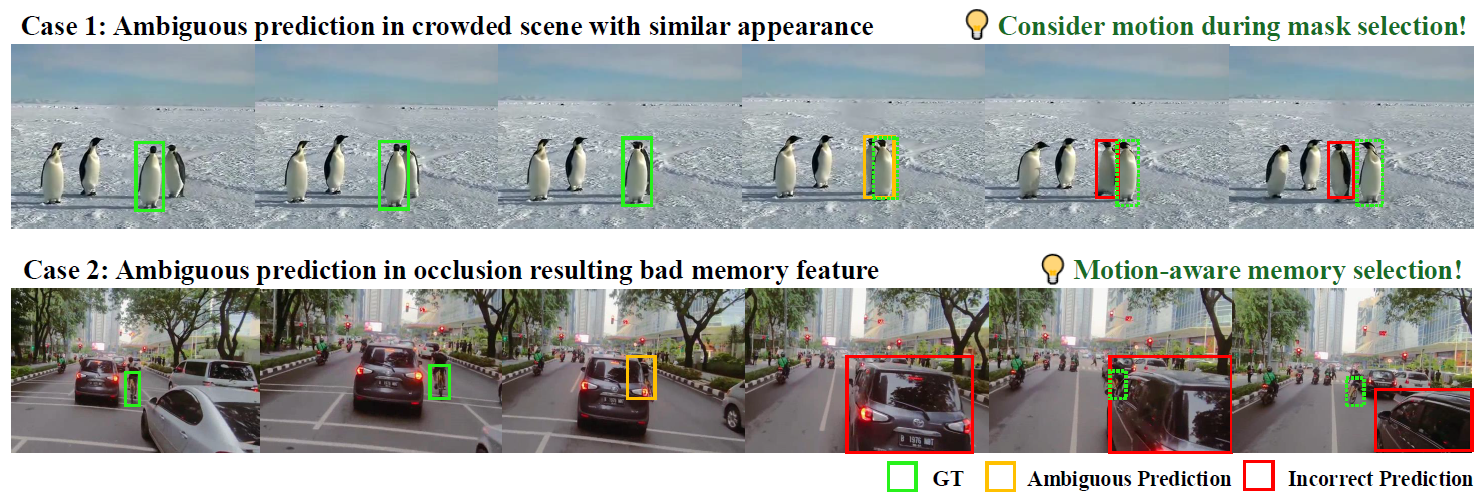

Illustration of two common failure cases in visual object tracking using SAM 2: (1) In a crowded scene where the target and background objects look similar, SAM 2 tends to ignore the motion cue and predicts the mask with the higher IoU score. (2) The original memory bank simply stores the previous n frames, which introduces poor-quality features during occlusion.


Related Work

Earlier work in visual object tracking includes Siamese and transformer-based trackers, which tend to drift over long sequences. SAM introduced prompt-based segmentation, and SAM 2 extended it to video with a memory mechanism; SAMURAI builds on these models to improve tracking over long video sequences.

Key advancements include integrating motion modeling with memory mechanisms that prioritize relevant features during tracking, which reduces error propagation. Motion modeling has evolved from heuristic methods such as Kalman filters to learnable, data-driven models such as Tracktor and MambaTrack; SAMURAI draws on this line of work while using a linear Kalman filter as its motion model.

Enhanced Object Tracking

SAM 2 has shown strong performance in visual object tracking and video object segmentation. However, it can encode incorrect or low-confidence objects into its memory, and these errors accumulate in long-sequence tracking. To address these issues, the authors propose a Kalman filter (KF)-based motion modeling approach on top of SAM 2, along with an enhanced memory selection technique based on a hybrid scoring system.

This system combines affinity and motion scores to improve mask selection accuracy. Specifically, it ensures that selected masks are highly reliable by combining object quality scores with motion-based predictions. Notably, the proposed method does not require any fine-tuning or additional training. It can be integrated directly into the existing SAM 2 model, offering a reliable and real-time solution for online visual object tracking.

For motion modeling, a linear Kalman filter serves as the baseline for estimating the position and dimensions of the target's bounding box. The filter predicts the tracked object's state in the next frame, and the Intersection over Union (IoU) between this predicted box and the box of each candidate mask indicates how well each mask agrees with the expected motion.
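To make the motion-modeling step concrete, here is a minimal NumPy sketch of a linear, constant-velocity Kalman filter over a single bounding box. It assumes an eight-dimensional state (box position and size plus their velocities), a time step of one frame, and illustrative noise values; the class name `BoxKalmanFilter` and its parameters are hypothetical and not taken from the SAMURAI code.

```python
import numpy as np


class BoxKalmanFilter:
    """Linear constant-velocity Kalman filter for one tracked box.

    Hypothetical sketch: state = [x, y, w, h, vx, vy, vw, vh], one frame per
    step, and illustrative noise levels (not SAMURAI's actual settings).
    """

    def __init__(self, box, process_noise=1e-2, measurement_noise=1e-1):
        self.x = np.zeros(8)
        self.x[:4] = box                 # start at the first observed box, zero velocity
        self.P = np.eye(8)               # initial state uncertainty
        self.F = np.eye(8)               # transition: each box term += its velocity
        self.F[:4, 4:] = np.eye(4)
        self.H = np.eye(4, 8)            # only [x, y, w, h] is observed
        self.Q = process_noise * np.eye(8)
        self.R = measurement_noise * np.eye(4)

    def predict(self):
        """Propagate the state one frame ahead and return the predicted box."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:4].copy()

    def update(self, box):
        """Correct the state with an observed box (e.g. from the selected mask)."""
        z = np.asarray(box, dtype=float)
        innovation = z - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)   # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(8) - K @ self.H) @ self.P


# Usage: a box drifting right by 2 px per frame.
kf = BoxKalmanFilter([10.0, 10.0, 20.0, 20.0])
for t in range(1, 11):
    kf.predict()
    kf.update([10.0 + 2 * t, 10.0, 20.0, 20.0])
next_box = kf.predict()                  # x is expected to land close to 32
```

In this sketch, `predict()` supplies the motion prior for the next frame, and `update()` corrects it with the box of whichever mask is finally selected.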

The mask that maximizes a weighted sum of this KF-IoU score and SAM 2's original affinity score is selected. This weighting helps SAMURAI follow objects through abrupt motion without adding computational overhead, making it suitable for real-time applications.
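The selection rule can be sketched as follows. This assumes boxes in `(x, y, w, h)` form with `(x, y)` the top-left corner, a KF-predicted box supplied by a motion model such as the one described above, and a weight `alpha_kf` on the motion term. The functions `mask_to_box`, `box_iou`, and `select_mask` are illustrative names, and the default weight is an assumption rather than a value reported by the authors.

```python
import numpy as np


def mask_to_box(mask):
    """Tight (x, y, w, h) box around a binary mask; (x, y) is the top-left corner."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return (0.0, 0.0, 0.0, 0.0)      # empty mask: degenerate box
    x0, y0 = xs.min(), ys.min()
    return (float(x0), float(y0), float(xs.max() - x0 + 1), float(ys.max() - y0 + 1))


def box_iou(a, b):
    """Intersection over Union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def select_mask(masks, affinity_scores, kf_box, alpha_kf=0.25):
    """Pick the candidate maximizing alpha_kf * s_kf + (1 - alpha_kf) * s_mask.

    s_kf is the IoU between the mask's box and the KF-predicted box;
    s_mask is the affinity score from SAM 2. alpha_kf is a hypothetical default.
    """
    scores = [
        alpha_kf * box_iou(mask_to_box(m), kf_box) + (1.0 - alpha_kf) * s_mask
        for m, s_mask in zip(masks, affinity_scores)
    ]
    return int(np.argmax(scores)), scores


# Usage: a look-alike distractor (mask_a) scores slightly higher on affinity,
# but the true target (mask_b) sits where the Kalman filter expects it.
mask_a = np.zeros((20, 20), dtype=bool)
mask_a[2:6, 2:6] = True
mask_b = np.zeros((20, 20), dtype=bool)
mask_b[12:16, 12:16] = True
best, scores = select_mask([mask_a, mask_b], [0.80, 0.78], (12.0, 12.0, 4.0, 4.0))
```

With `alpha_kf=0`, the rule reduces to picking the highest affinity score alone, which is the crowded-scene failure mode shown in the figure caption.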

Motion-aware memory selection is also introduced to improve SAM 2's memory bank. SAM 2 fills its memory with the most recent frames regardless of their quality, which does not handle long occlusions or deformations well. SAMURAI's motion-aware selection instead prioritizes frames with high-quality masks by combining three scores: mask affinity, object occurrence, and motion. A frame is admitted to memory only when all three scores meet their respective thresholds, so the memory bank holds the most relevant frames and tracking stays accurate through challenging scenarios such as occlusion or deformation.
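A minimal sketch of threshold-based memory selection follows. It assumes the three per-frame scores are already computed, that history is ordered oldest to newest, and that the most recent qualifying frames are kept. The `FrameRecord` type, the threshold values, and the memory size `n_mem` are hypothetical placeholders, not settings from the paper.

```python
from dataclasses import dataclass


@dataclass
class FrameRecord:
    index: int
    mask_affinity: float   # s_mask: SAM 2's mask affinity score
    object_score: float    # s_obj: object-occurrence score
    motion_score: float    # s_kf: KF-IoU motion score


def select_memory(history, n_mem=7, tau_mask=0.5, tau_obj=0.0, tau_kf=0.5):
    """Return indices of up to n_mem most recent frames passing all three thresholds.

    Thresholds and n_mem are illustrative assumptions; history is oldest-first.
    """
    if n_mem <= 0:
        return []
    eligible = [
        f for f in history
        if f.mask_affinity > tau_mask
        and f.object_score > tau_obj
        and f.motion_score > tau_kf
    ]
    return [f.index for f in eligible[-n_mem:]]


# Usage: frame 3 is occluded (low scores), so it is kept out of memory.
history = [FrameRecord(i, 0.9, 2.0, 0.8) for i in range(6)]
history[3] = FrameRecord(3, 0.2, -1.0, 0.1)
memory = select_memory(history, n_mem=3)
```

Here the occluded frame is skipped and the memory reaches back to frame 2, whereas a plain "previous n frames" rule would have stored frames 3, 4, and 5.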

Integrating motion modeling and memory selection into SAM 2 enhances visual object tracking without requiring retraining. The approach is model-agnostic, meaning it can be applied to tracking frameworks beyond SAM 2. By combining effective motion modeling with intelligent memory selection, SAMURAI significantly improves tracking performance across varied and complex environments.

Evaluation of SAMURAI Performance

The experiments section evaluates SAMURAI's zero-shot performance across multiple visual object tracking benchmarks. SAMURAI generalizes well, outperforming prior zero-shot methods and remaining competitive with supervised ones. For instance, it achieves significant improvements over state-of-the-art methods on Large-scale Single Object Tracking (LaSOT) and its extension set (LaSOText), with notable gains in area under the curve (AUC), normalized precision (Pnorm), and precision (P). In addition, SAMURAI's zero-shot performance on TrackingNet, Need for Speed (NFS), and Object Tracking Benchmark 100 (OTB100) is comparable to or better than that of many supervised models.

Quantitative results from tables and figures indicate that SAMURAI models consistently surpass current methods on LaSOT, LaSOText, and GOT-10k, showcasing the effectiveness of its design. For instance, SAMURAI-L reached 74.23% AUC and 82.69% Pnorm on LaSOT, outperforming other state-of-the-art zero-shot models. The results highlight SAMURAI's strong ability to generalize and track objects in real-world scenarios.

Ablation studies confirm the contribution of each proposed module, motion modeling and motion-aware memory selection, and examine how the motion weight affects performance. Tracking performance is best when both modules are used together with a properly tuned motion weight.

SAMURAI shows robust, competitive performance across various tracking datasets when compared with both zero-shot and supervised trackers. It outperforms existing zero-shot methods, especially in challenging scenarios, and its results point to strong generalization ability.

Conclusion

In summary, SAMURAI, built on Segment Anything Model 2 (SAM 2), introduced motion-based scoring and memory selection to address self-occlusion and abrupt motion in crowded scenes. Combining motion-aware memory with enhanced mask selection offers a novel approach to real-time tracking that narrows the gap between supervised and zero-shot models.

The proposed modules consistently improved performance across multiple visual object tracking benchmarks without re-training or fine-tuning. SAMURAI demonstrated robust performance on all metrics. The capability for real-time online inference was also highlighted.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.


Source:

Journal reference:

- Preliminary scientific report.

Yang, C., et al. (2024). SAMURAI: Adapting Segment Anything Model for Zero-Shot Visual Tracking with Motion-Aware Memory. ArXiv. DOI:10.48550/arXiv.2411.11922, https://arxiv.org/abs/2411.11922