Additional Logic Training (ALT) significantly enhanced the performance of models such as Meta's Large Language Model Meta AI 3.1 with 70 billion parameters (LLaMA-3.1-70B), yielding improvements of up to 30 points on logical reasoning benchmarks and notable gains in math, coding, and other tasks. The approach combines principles grounded in symbolic logic theory with empirical insights to create high-quality, systematically designed training samples.

Related Work

Past work has highlighted LLMs' limitations in reasoning and attributed them to the scarcity of structured, high-quality reasoning samples in pre-training data. The reasoning samples that do appear in these corpora are often riddled with biases and fallacies, and they tend to reflect intuitive human thinking rather than rigorous, formal logic. This hinders models from handling novel problems that require structured, multi-step reasoning.

Optimizing Logical Reasoning

Designing effective synthetic logic samples for large language models involves systematically addressing key reasoning challenges. This study emphasizes the inclusion of samples that incorporate reasoning with unknown facts, ensuring models generalize deduction rules without reliance on specific premises or conclusions.

Assigning arbitrary, diverse content to logical variables forces models to deduce conclusions from the logical form of the premises rather than from their content. In addition, adversarial distractors are included to train models to avoid drawing conclusions when the premises are insufficient. Together, these principles aim to teach logical reasoning comprehensively.
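To make the two principles concrete, the sketch below instantiates one abstract schema (modus ponens: from F and "if F then G", conclude G) with randomly chosen, often nonsensical content, so world knowledge cannot help. Its adversarial variant swaps the needed fact for a look-alike one, making the correct answer UNKNOWN. The word pools, the function name `make_modus_ponens_sample`, and the label strings are hypothetical illustrations, not the authors' generator.

```python
import random

# Hypothetical word pools; a real generator would draw from a far larger vocabulary.
ADJECTIVES = ["crimson", "hollow", "brisk", "lunar", "velvet", "ancient"]
ENTITIES = ["the walrus", "a teapot", "the senator", "this comet"]


def make_modus_ponens_sample(rng: random.Random, adversarial: bool) -> dict:
    """Instantiate the schema  F(x), F(x) -> G(x)  |-  G(x)  with arbitrary content."""
    f, g, h = rng.sample(ADJECTIVES, 3)  # three distinct adjectives
    x = rng.choice(ENTITIES)
    F, G, H = f"{x} is {f}", f"{x} is {g}", f"{x} is {h}"
    rules = [(F, G)]  # read as: if F then G
    # Adversarial variant: the needed fact F is replaced by a look-alike fact H,
    # so the rule can no longer fire and G does not follow from the facts.
    facts = [H] if adversarial else [F]
    provable = any(premise in facts and conclusion == G for premise, conclusion in rules)
    return {
        "facts": facts,
        "rules": rules,
        "hypothesis": G,
        "label": "PROVED" if provable else "UNKNOWN",
    }
```

Because the content is random, a statement like "the walrus is lunar" carries no real-world signal; only the logical form decides the label. For example, `make_modus_ponens_sample(random.Random(0), adversarial=False)["label"]` is `"PROVED"`, while the adversarial variant always yields `"UNKNOWN"`.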

To cover diverse reasoning capabilities, samples should involve multi-step deduction processes built on axiomatic foundations and representative theorems, such as syllogism and contraposition. This approach helps models handle complex reasoning structures and generalize to broader scenarios.
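As a rough illustration of how representative theorems compose into multi-step deductions, the sketch below saturates a set of propositional implications under two derived rules, hypothetical syllogism and contraposition. The function names and the "¬" string encoding of negation are assumptions made for this example; the study's generator works over its own formal representation.

```python
def neg(p: str) -> str:
    """Negate an atom; double negation collapses so contraposition can be reapplied."""
    return p[1:] if p.startswith("¬") else "¬" + p


def close_under_rules(implications):
    """Saturate implications (a, b), read as a -> b, under two derived rules:
      hypothetical syllogism: a -> b and b -> c give a -> c
      contraposition:         a -> b gives ¬b -> ¬a
    Returns the derived set and a trace of (rule_name, new_implication) steps."""
    known = set(implications)
    trace = []
    changed = True
    while changed:
        changed = False
        new = []
        for a, b in known:
            new.append(("contraposition", (neg(b), neg(a))))
            for c, d in known:
                if b == c:
                    new.append(("syllogism", (a, d)))
        # Add new implications only after scanning, so `known` is not mutated mid-iteration.
        for rule, imp in new:
            if imp not in known:
                known.add(imp)
                trace.append((rule, imp))
                changed = True
    return known, trace


derived, steps = close_under_rules({("A", "B"), ("B", "C")})
print(("¬C", "¬A") in derived)  # True: needs two rule applications
```

Deriving "¬C -> ¬A" from "A -> B" and "B -> C" takes two rule applications, which is the kind of multi-step chain such samples are meant to exercise.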

Moreover, including various linguistic expressions, such as "If F then G" or "F leads to G," helps prevent models from overfitting to specific patterns and enhances their ability to interpret natural language. The resulting corpus, Formal Logic Deduction Diverse (FLD×2), incorporates these principles and outperforms earlier datasets by offering more extensive reasoning scenarios, adversarial examples, and a richer vocabulary.
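The idea of varying surface forms can be sketched as a set of paraphrase templates for one logical form, F -> G. The first two templates echo the examples above; the others, the helper names, and the choice to fill F and G with noun phrases (so that every template stays grammatical) are assumptions for illustration.

```python
import random

# Hypothetical paraphrase templates for the single logical form "F -> G".
# F and G are noun phrases here so that each template reads grammatically.
IMPLICATION_TEMPLATES = [
    "If there is {F}, then there is {G}.",
    "{F} leads to {G}.",
    "{G} follows from {F}.",
    "{F} results in {G}.",
]


def _capitalize(sentence: str) -> str:
    return sentence[:1].upper() + sentence[1:]


def all_renderings(F: str, G: str) -> list:
    """Every surface form of F -> G under the template set."""
    return [_capitalize(t.format(F=F, G=G)) for t in IMPLICATION_TEMPLATES]


def render_implication(F: str, G: str, rng: random.Random) -> str:
    """Pick one surface form at random, so the same rule appears in varied wording."""
    return rng.choice(all_renderings(F, G))
```

For example, `all_renderings("a power outage", "a server restart")` includes both "If there is a power outage, then there is a server restart." and "A power outage leads to a server restart." A model trained on all of them sees one underlying rule behind several phrasings.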

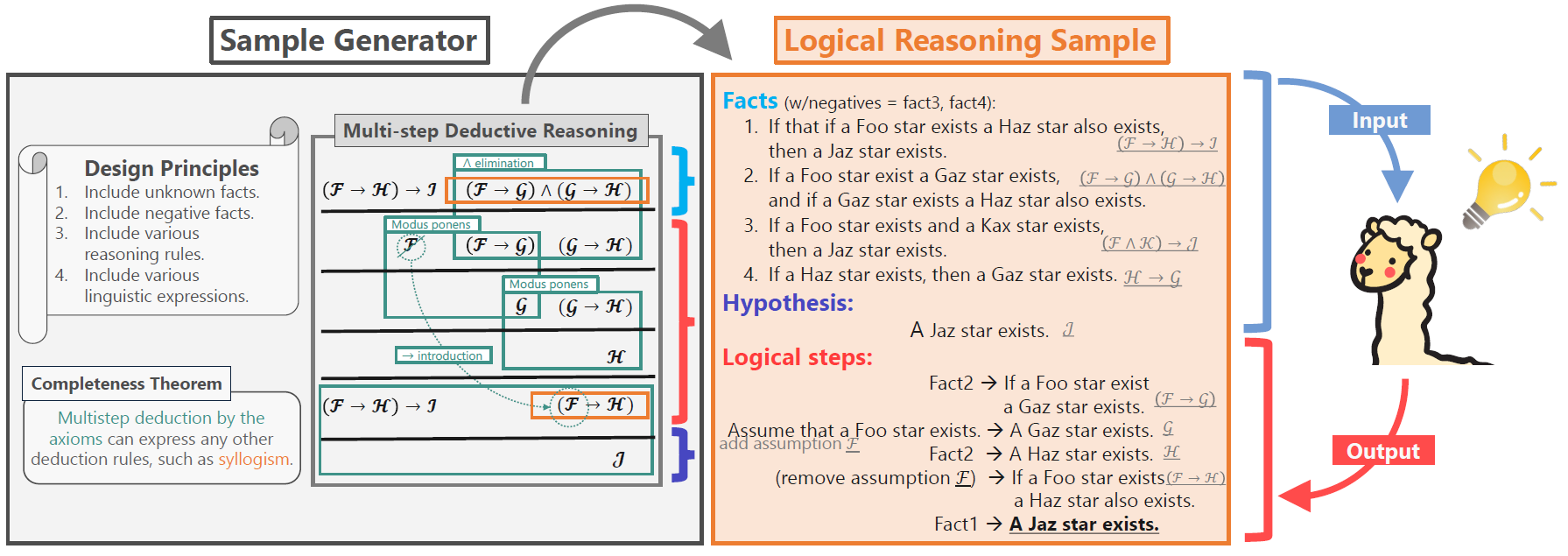

Our proposed Additional Logic Training (ALT) aims to enhance LLMs' reasoning capabilities through training on many synthetically generated logical reasoning samples. Our sample generator (left) first generates a sample of multi-step deductive reasoning and then converts it into a deduction sample written in English (right). LLMs must generate the logical steps that derive a given hypothesis from the provided facts. The sample generator adheres to theoretically and empirically grounded design principles.


Advanced Deductive Reasoning Dataset

To create the FLD×2 corpus, a novel generator was developed. The generator creates multi-step deductive reasoning samples, integrating adversarial distractors to emphasize cases where premises do not logically lead to conclusions. Logical formulas are translated into English using diverse templates and a large vocabulary of around 100,000 words derived from WordNet.
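A heavily simplified picture of such a generator is sketched below: it builds a chain of modus ponens steps of a chosen depth over randomly sampled words, adds distractor rules that appear relevant (they conclude the hypothesis) but rest on premises that are never given, shuffles everything into one context, and records the gold proof. The tiny `VOCAB` list stands in for the WordNet-derived vocabulary, and the function name and output fields are hypothetical; the actual FLD×2 generator supports far more rule types and templates.

```python
import random

# Tiny stand-in for the roughly 100,000-word WordNet-derived vocabulary.
VOCAB = ["amber", "brittle", "coastal", "dusky", "eager", "fertile",
         "gaunt", "humid", "icy", "jagged", "keen", "lavish"]


def generate_chain_sample(depth: int, n_distractors: int, rng: random.Random) -> dict:
    """Hypothetical generator: a `depth`-step chain P0 -> P1 -> ... -> P_depth,
    plus distractor rules that conclude the hypothesis from unsupported premises."""
    words = rng.sample(VOCAB, depth + 1 + n_distractors)  # all distinct
    chain, extra = words[: depth + 1], words[depth + 1:]

    def atom(word: str) -> str:
        return f"the specimen is {word}"

    facts = [atom(chain[0])]
    rules = [f"If {atom(a)}, then {atom(b)}." for a, b in zip(chain, chain[1:])]
    # Distractors look relevant but can never fire, because their premises are not facts.
    rules += [f"If {atom(w)}, then {atom(chain[-1])}." for w in extra]
    proof = [f"{atom(b)} (modus ponens from {atom(a)})" for a, b in zip(chain, chain[1:])]
    context = facts + rules
    rng.shuffle(context)  # the model must find the relevant premises itself
    return {"context": context, "hypothesis": atom(chain[-1]), "proof": proof}
```

Shuffling the context means the model cannot rely on premise order, and the distractors reward it only for following premises that are actually supported.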

This approach ensures that the samples are highly diverse, enabling models to generalize their reasoning to handle unfamiliar or previously unseen facts. Compared to earlier datasets, FLD×2 includes a greater variety of deduction rules, more complex reasoning steps, and enhanced linguistic diversity for robust training.

The FLD×2 corpus was tested by applying supervised fine-tuning to LLaMA-3.1 models (8B and 70B) on 100,000 samples, using the Recall Adam (RecAdam) optimizer to prevent the loss of pre-trained knowledge. The 31 evaluation benchmarks excluded synthetic logic corpora, so the gains would not simply reflect fitting to the training distribution. FLD×2 enhanced performance, which was attributed to its adversarial distractors, broader logical scope, and varied linguistic templates. These features enabled the trained LLMs to perform complex reasoning tasks more effectively and reliably.
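The sketch below illustrates the objective RecAdam is generally described as optimizing: a sigmoid-annealed mix of the task loss and a quadratic penalty that pulls parameters toward their pre-trained values, shifting gradually from "recall" to "learn". It assumes the formulation of the original RecAdam work (Chen et al., 2020) and covers only the loss, not the optimizer's update rule; the default values of `gamma`, `k`, and `t0` are placeholders, not settings reported for ALT.

```python
import math


def annealing_coefficient(step: int, k: float = 0.1, t0: int = 250) -> float:
    """Sigmoid schedule lambda(t) = 1 / (1 + exp(-k * (t - t0))).
    Near 0 early in training (penalty dominates), near 1 late (task loss dominates)."""
    return 1.0 / (1.0 + math.exp(-k * (step - t0)))


def recadam_objective(task_loss, params, pretrained_params, step,
                      gamma: float = 1.0, k: float = 0.1, t0: int = 250) -> float:
    """lambda(t) * task_loss + (1 - lambda(t)) * (gamma / 2) * sum((theta - theta*)^2)."""
    penalty = 0.5 * gamma * sum((p - q) ** 2 for p, q in zip(params, pretrained_params))
    lam = annealing_coefficient(step, k, t0)
    return lam * task_loss + (1.0 - lam) * penalty
```

At `step == t0` the two terms are weighted equally; well before `t0`, drifting away from the pre-trained weights is penalized most, which is how the method limits forgetting of pre-trained knowledge.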

Boosted Reasoning and Inference

The benchmark results indicate that ALT significantly improved the performance of LLaMA-3.1-70B, particularly on logical reasoning tasks, where gains reached up to 30 points across various benchmarks.

Interestingly, these improvements extended to abductive reasoning tasks, which involve inferring missing premises rather than deriving direct conclusions, showcasing the model's adaptability to different logical paradigms.
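To clarify the contrast between deduction and abduction, the toy sketch below searches for a single missing premise that would make an observation derivable from a set of if-then rules. It is only an illustration of the concept; the function names and the example rules are hypothetical and do not describe how the benchmarks or the trained model work.

```python
def derivable(facts, rules):
    """Forward chaining: every atom reachable from `facts` via (premise, conclusion) rules."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def abduce_single_premise(facts, rules, observation):
    """Return atoms that, added as one extra fact, would make `observation` derivable."""
    if observation in derivable(facts, rules):
        return []  # deduction already suffices; nothing is missing
    candidates = {premise for premise, _ in rules}
    return sorted(c for c in candidates
                  if observation in derivable(set(facts) | {c}, rules))


rules = [("it rained", "the grass is wet"),
         ("the sprinkler ran", "the grass is wet"),
         ("the grass is wet", "the path is slippery")]
print(abduce_single_premise([], rules, "the path is slippery"))
# ['it rained', 'the grass is wet', 'the sprinkler ran']
```

Deduction runs the rules forward from given facts; abduction asks which unstated premise would explain an observation, and usually has several candidate answers.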

In a detailed case analysis, the improvements brought by ALT were linked to the model's ability to handle unknown facts, recognize insufficient information, apply diverse deduction rules, and interpret diverse linguistic expressions. For example, one test showed that ALT-trained LLaMA-3.1-70B could correctly solve problems involving unknown facts, whereas the model without ALT failed to do so. Other problems showed that ALT helped the model recognize when a neutral, "insufficient information" answer is required and perform syllogistic reasoning effectively.

ALT improved the model's performance by 7 points on math tasks and 10 points on coding tasks, suggesting that logical reasoning plays an important role in domains like mathematics and programming. These results highlight the broad relevance of logical reasoning skills across diverse applications. Finally, ALT showed its utility in natural language inference (NLI) tasks, with a performance boost of up to 6 points across key benchmarks.

This enhancement suggests that, with ALT, LLaMA-3.1-70B became more adept at combining natural language understanding with rigorous logical reasoning, broadening the range of tasks the model handles well. Thus, ALT appears to be an effective tool for enhancing a model's reasoning and problem-solving capabilities across multiple domains.

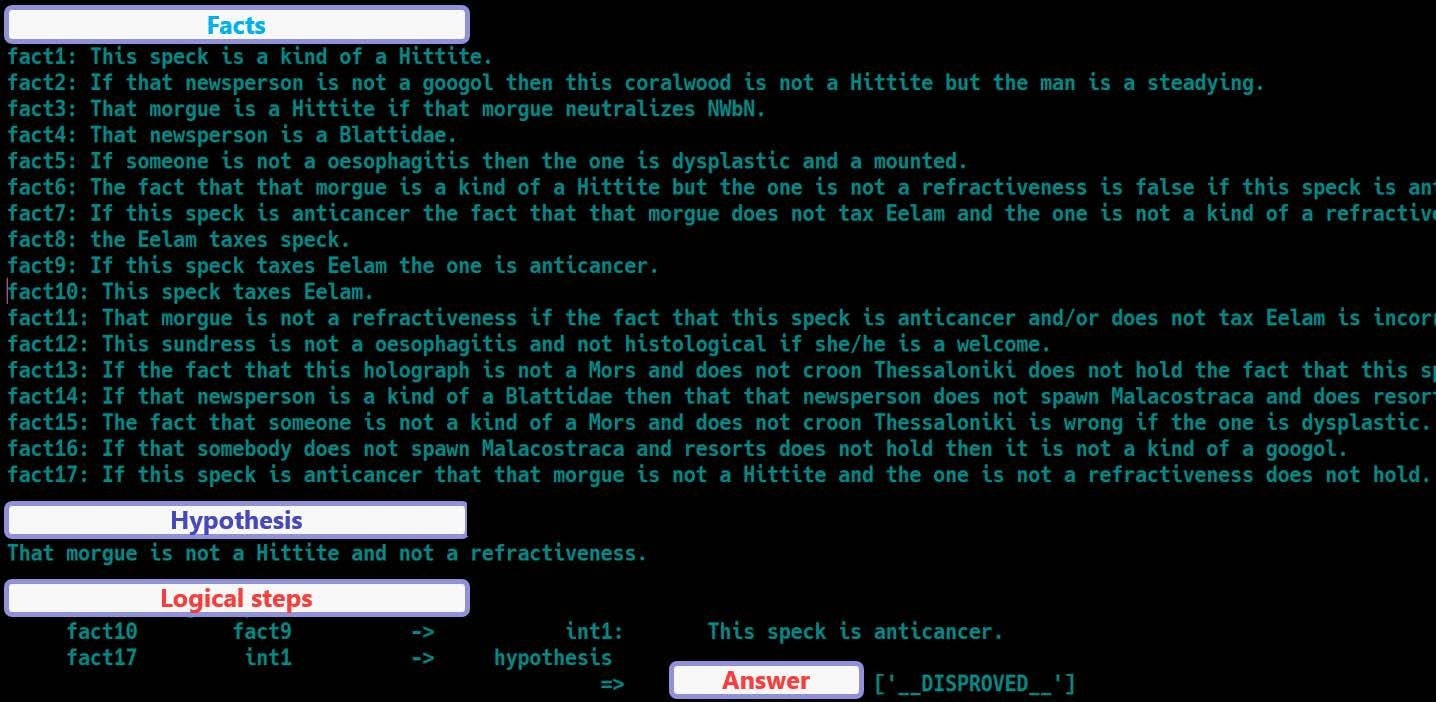

A real deduction sample included in Formal Logic Deduction Diverse. Facts and a hypothesis are given to the LLM, which must generate logical steps that prove or disprove the hypothesis based on the facts, followed by an answer label.
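For supervised fine-tuning, each such sample has to be split into an input (facts and hypothesis) and a target (proof steps and answer label). The sketch below shows one plausible serialization; the `DeductionSample` fields, the "factN:" line layout, and the label set PROVED / DISPROVED / UNKNOWN are assumptions for illustration rather than the corpus's exact format.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed label set: proved, disproved, or not determinable from the facts.
LABELS = ("PROVED", "DISPROVED", "UNKNOWN")


@dataclass
class DeductionSample:
    facts: List[str]
    hypothesis: str
    proof: List[str]  # each step cites fact ids, e.g. "fact1 & fact2 -> hypothesis"
    label: str


def to_prompt_and_target(sample: DeductionSample) -> Tuple[str, str]:
    """Serialize a sample into (model input, training target) strings."""
    if sample.label not in LABELS:
        raise ValueError(f"unexpected label: {sample.label}")
    fact_lines = [f"fact{i}: {f}" for i, f in enumerate(sample.facts, start=1)]
    prompt = "\n".join(["facts:"] + fact_lines
                       + [f"hypothesis: {sample.hypothesis}", "proof:"])
    target = "\n".join(sample.proof + [f"answer: {sample.label}"])
    return prompt, target
```

Keeping the proof before the label in the target means the model is trained to produce its reasoning steps first and commit to an answer only afterwards; for an UNKNOWN sample, the proof list may simply be empty.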


Conclusion

To sum up, ALT on synthetic logic samples was proposed to enhance the reasoning capabilities of LLMs. Grounded in a robust framework of symbolic logic theory and empirical findings, the researchers established systematic design principles for developing high-quality reasoning samples. Based on these principles, a corpus called FLD×2 was constructed, and the approach was empirically tested to validate its effectiveness in improving logical reasoning.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.


Journal reference:

- Preliminary scientific report.

Morishita, T., Morio, G., Yamaguchi, A., & Sogawa, Y. (2024). Enhancing Reasoning Capabilities of LLMs via Principled Synthetic Logic Corpus. ArXiv. https://arxiv.org/abs/2411.12498