Advances in large language models (LLMs) have significantly transformed the field of artificial intelligence (AI), especially natural language processing (NLP). Models such as GPT-4 and Claude show impressive abilities to generate human-like text, understand context, and handle tasks across various domains. However, applying these models in real-world scenarios presents challenges, such as unpredictable outputs, errors in task handling, and difficulties in managing complex interactions with users and tools.

MARCO: An Innovative Framework

In the paper, the authors present MARCO, a framework designed to leverage LLMs while addressing limitations in existing systems. MARCO uses a structured, multi-agent approach in which agents interact cohesively with users and tools. Because each agent handles a specialized task, the system stays flexible and scalable for real-time automation and can manage multi-turn interactions effectively.

The framework is designed to enhance real-time conversational interfaces. It includes key components such as an Intent Classifier, Retrieval Augmented Generation (RAG), a Multi-Agent Reasoner and Orchestrator (MARS), and sophisticated guardrails to ensure reliable interactions.

The Intent Classifier identifies user intent by categorizing messages as informational, action-oriented, or out-of-domain, guiding the system in selecting the appropriate response channel. RAG enhances responses by adding relevant information, while MARS coordinates task execution across agents, each following distinct task execution procedures (TEPs). MARCO’s shared hybrid memory stores context across agents, and its multi-step functions adhere to specific business logic. In addition to handling errors with reflection prompts, MARCO’s guardrails prevent function hallucinations, incorrect output formatting, and misinterpretation of user parameters.
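The routing step described above can be illustrated with a minimal sketch. It is not MARCO's implementation: the keyword sets, the `classify_intent` and `route` functions, and the channel names `"rag"`, `"mars"`, and `"fallback"` are all hypothetical, and a simple keyword lookup stands in for the model-based classifier. The mapping of informational messages to RAG and action-oriented messages to MARS is an assumption drawn from the description, not a confirmed detail.

```python
import string
from enum import Enum


class Intent(Enum):
    INFORMATIONAL = "informational"
    ACTION = "action"
    OUT_OF_DOMAIN = "out_of_domain"


# Hypothetical keyword sets; a real classifier would be model-based.
ACTION_WORDS = {"update", "cancel", "create", "refund", "change"}
DOMAIN_WORDS = {"order", "menu", "sales", "store", "delivery"}

_STRIP_PUNCT = str.maketrans("", "", string.punctuation)


def classify_intent(message: str) -> Intent:
    """Toy stand-in for the Intent Classifier: keyword lookup only."""
    words = set(message.lower().translate(_STRIP_PUNCT).split())
    if not words & DOMAIN_WORDS:
        return Intent.OUT_OF_DOMAIN
    if words & ACTION_WORDS:
        return Intent.ACTION
    return Intent.INFORMATIONAL


def route(message: str) -> str:
    """Pick a response channel from the intent (assumed mapping)."""
    intent = classify_intent(message)
    if intent is Intent.INFORMATIONAL:
        return "rag"       # answer from retrieved documents
    if intent is Intent.ACTION:
        return "mars"      # hand off to the multi-agent orchestrator
    return "fallback"      # politely decline out-of-domain requests
```

In this sketch, a question about declining sales would go to the retrieval channel, while a request to cancel an order would be handed to the orchestrator.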

This design allows each component to work independently while sharing context, which maximizes efficiency and enables quick error recovery through structured error-handling techniques. The researchers validated MARCO by extensively testing it on two datasets: Digital Restaurant Service Platform and Retail Conversations. These tests demonstrated MARCO’s effectiveness in handling multi-turn tasks and highlighted its capabilities in real-world scenarios.

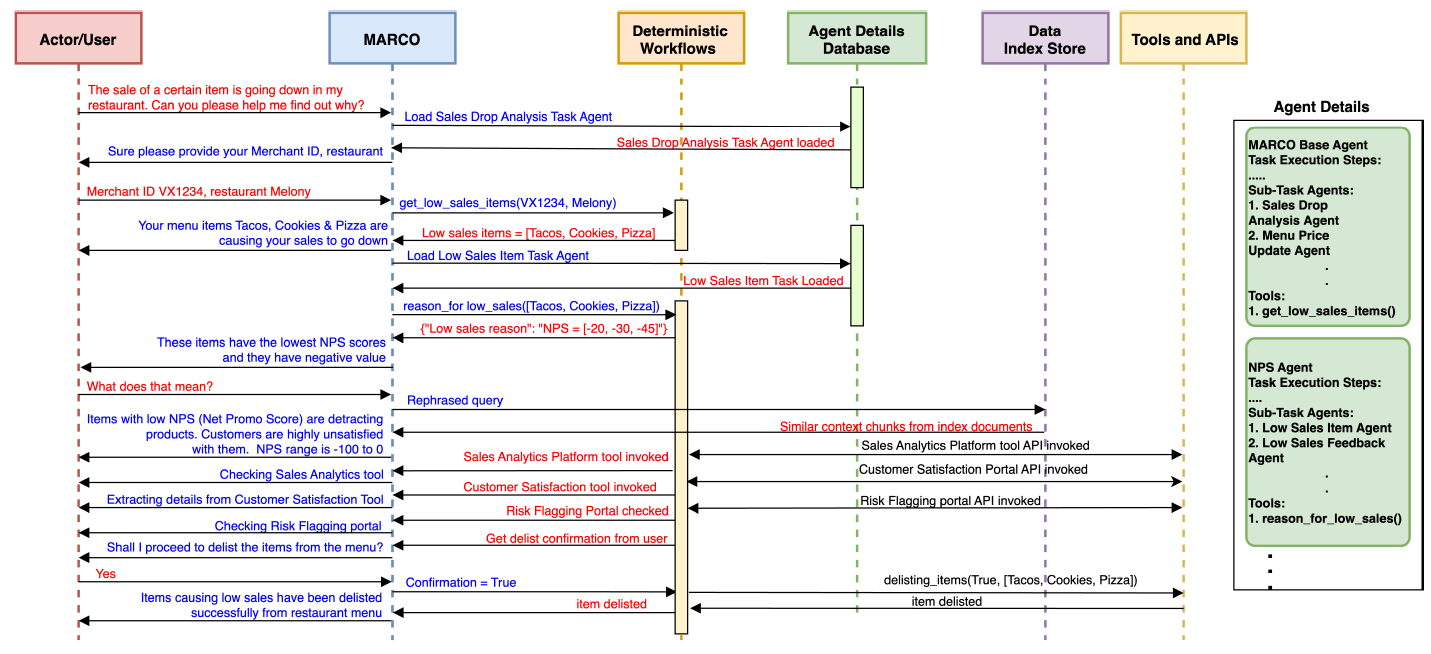

Multi-Agent Conversation Flow in MARCO Framework. This diagram illustrates the complex interactions within the MARCO system as it addresses a user’s query about declining sales. It showcases MARCO’s orchestration of multiple components, including the MARCO Base agent, specialized task agents, deterministic multi-step workflows, data stores, and external tools/APIs. The figure demonstrates MARCO’s capability to manage multi-turn communications with both the user and various system components, highlighting its process of task decomposition, information gathering, analysis, and action execution in response to real-world business scenarios.

Key Findings and Insights

The results showed that the MARCO framework significantly improved the accuracy and efficiency of task execution compared to traditional single-agent systems. MARCO achieved 94.48% accuracy on the Digital Restaurant Service Platform dataset and 92.74% on Retail Conversations, demonstrating its effectiveness in handling complex tasks. Additionally, the framework reduced latency by 44.91% and cut operational costs by 33.71%.

A key contribution of this research is the implementation of modular guardrails to address common LLM issues, such as incorrect output formatting and hallucinations. Integrating reflection prompts within the agent's workflow allows real-time corrections, enhancing the system’s overall reliability. These guardrails improved accuracy and contributed to a smoother user experience by reducing errors across multiple interactions.
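A guardrail with a reflection prompt can be sketched as a validate-and-retry loop. This is an illustrative sketch under stated assumptions, not the authors' code: the tool registry `ALLOWED_FUNCTIONS`, the JSON output format, the function names, and the wording of the reflection message are all hypothetical, and `llm` is any callable that maps a prompt string to a response string.

```python
import json
from typing import Callable, Optional

# Hypothetical tool registry: function name -> required parameter names.
ALLOWED_FUNCTIONS = {
    "get_sales_report": {"store_id"},
    "update_menu_item": {"item_id", "price"},
}


def check_output(raw: str) -> Optional[str]:
    """Return an error description, or None if the output passes every check."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return "Output was not valid JSON."          # formatting guardrail
    if not isinstance(call, dict):
        return "Output must be a JSON object."
    name = call.get("function")
    if not isinstance(name, str) or name not in ALLOWED_FUNCTIONS:
        return f"Function {name!r} does not exist."  # hallucinated function
    params = call.get("params")
    if not isinstance(params, dict):
        return "Field 'params' must be a JSON object."
    missing = ALLOWED_FUNCTIONS[name] - set(params)
    if missing:
        return f"Missing parameters: {sorted(missing)}."
    return None


def call_with_reflection(
    llm: Callable[[str], str], prompt: str, max_retries: int = 2
) -> dict:
    """Call the model, feeding each guardrail failure back as a reflection prompt."""
    for _ in range(max_retries + 1):
        raw = llm(prompt)
        error = check_output(raw)
        if error is None:
            return json.loads(raw)
        # Reflection prompt: tell the model what was wrong and ask for a fix.
        prompt = (
            f"{prompt}\n\nYour previous response was rejected: {error} "
            "Please correct it."
        )
    raise ValueError("Output failed guardrails after all retries.")
```

The design choice here is that validation is deterministic and sits outside the model, so a malformed or hallucinated call never reaches a tool; only the correction itself is delegated back to the LLM.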

The authors also emphasized MARCO's modular design, which enables scalability and adaptability in various domains. Each module can be tailored to support unique, domain-specific task execution procedures, making MARCO adaptable for diverse applications beyond the tested environments. Detailed comparisons across foundational LLMs highlighted MARCO's effectiveness in specific applications, including Digital Restaurant Service and Retail scenarios, supporting its potential for broad adoption in industries requiring automated task management.

As part of the guardrail mechanisms, reflection prompts effectively minimized error recurrence during task execution. By enabling agents to review and correct their outputs, MARCO increased the reliability of its interactions.

Applications

The MARCO framework has potential in sectors that rely on task automation and user interaction management. For example, in the restaurant industry, MARCO can improve customer service by automating order processing and answering inquiries. In retail, it can provide real-time support, help customers navigate product information, and resolve issues.

Additionally, the framework’s adaptable design makes it suitable for healthcare, finance, and education, where multi-turn interactions and complex decision-making are critical. By integrating MARCO into existing systems, organizations can use the power of LLMs to streamline operations, reduce costs, and improve customer satisfaction.

Conclusion and Future Directions

In summary, the MARCO framework proved effective for task automation using LLMs. By addressing challenges such as output inconsistencies and the need for real-time interaction management, it offers a reliable approach to automating complex tasks across various domains. The findings show improvements in accuracy, latency, and cost, highlighting its relevance in today's fast-evolving technological landscape.

Future work should focus on refining the guardrail mechanisms and exploring integration with emerging technologies, such as reinforcement learning and real-time data analytics. As demand for intelligent automation grows, insights from this study will be important in shaping the future of multi-agent systems and their applications in real-world scenarios.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Shrimal, A., Kanagaraj, S., Biswas, K., Raghuraman, S., Nediyanchath, A., Zhang, Y., & Yenigalla, P. (2024). MARCO: Multi-Agent Real-time Chat Orchestration. arXiv. https://arxiv.org/abs/2410.21784