Aiming to improve AI-powered user interactions, OMNIPARSER uses pure vision to decode UI screenshots, enabling GPT-4V to better understand and respond to diverse interfaces without additional HTML or DOM data.

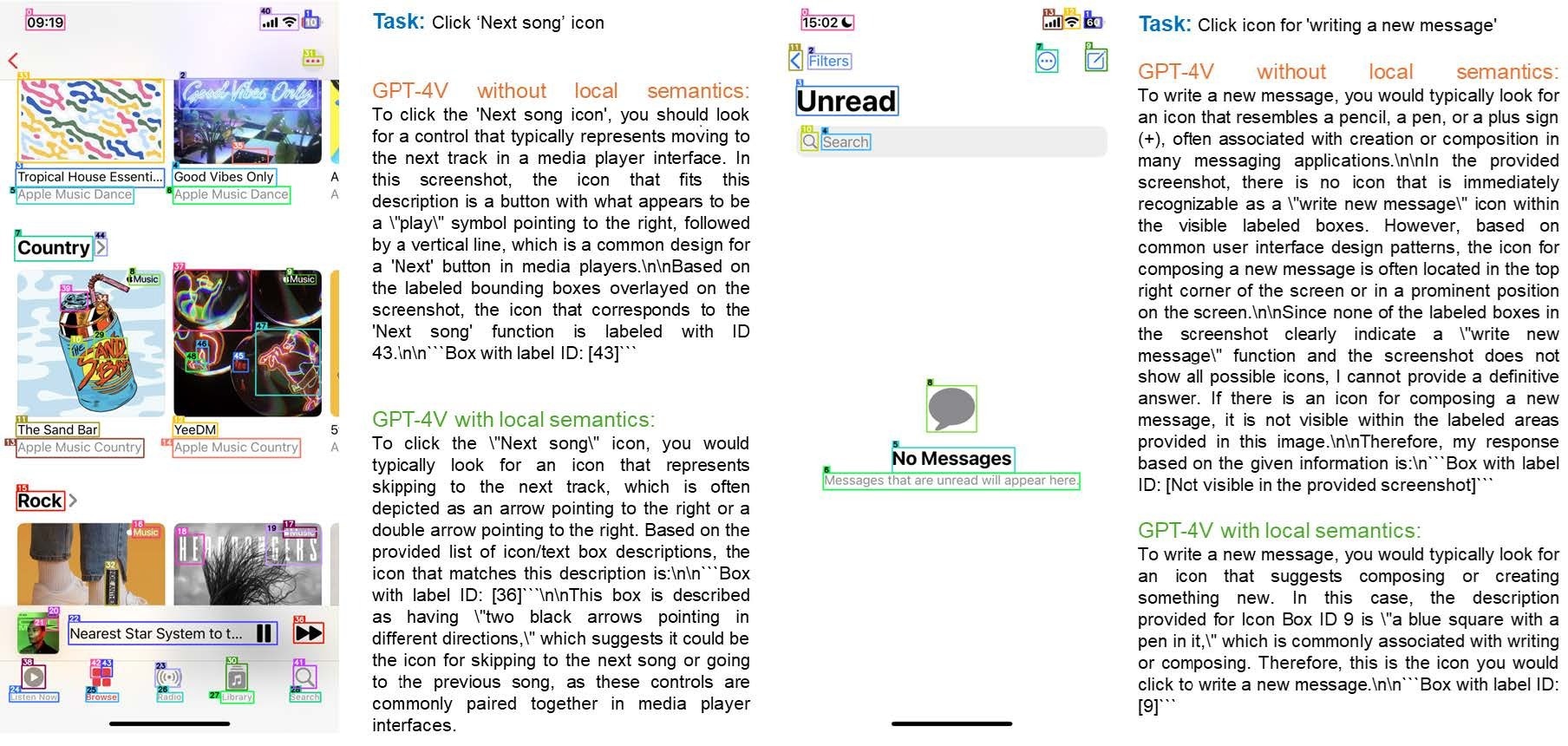

Examples from the SeeAssign evaluation. We can see that fine-grain local semantics improves the GPT-4V’s ability to assign correct labels to the referred icon. Research: OmniParser for Pure Vision Based GUI Agent

Examples from the SeeAssign evaluation. We can see that fine-grain local semantics improves the GPT-4V’s ability to assign correct labels to the referred icon. Research: OmniParser for Pure Vision Based GUI Agent

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

In an article recently submitted to the arXiv preprint* server, researchers introduced OMNIPARSER, an innovative method for parsing user interface screenshots into structured elements to enhance the generative pre-trained transformer 4 vision (GPT-4V’s) ability to generate actions accurately grounded in the interface. They curated datasets for interactable icon detection and description, which were used to fine-tune an icon detection model and a caption model, significantly improving GPT-4V's performance.

OMNIPARSER boosted GPT-4V’s performance on the widely-used ScreenSpot benchmark and outperformed baselines on Mind2Web and artificial intelligence task on the web (AITW) benchmarks using only screenshot input. This work addressed gaps in screen parsing techniques for multimodal models and showcased how vision-only approaches could achieve state-of-the-art results in interface interaction.

Background

Past work on user interface (UI) screen understanding, such as Screen2Words, UI bidirectional encoder representations from transformers (UI-BERT), and WidgetCaptioning, focused on extracting screen semantics but relied on additional information like view hierarchies and HTML elements.

Public datasets like Rico and PixelHelp were key for UI element recognition but lacked sufficient coverage of real-world websites. Autonomous graphical UI (GUI) agents, like Pixel2Act and web-based graphical user model (WebGUM), aimed to predict actions in web and mobile domains, yet these models relied on data sources like the document object model (DOM) to identify interactable elements.

OMNIPARSER: Enhancing UI Interaction

A complex task can be broken down into steps that require a model like GPT-4V to analyze the UI screen and predict the following actions. To streamline this process, OMNIPARSER leverages fine-tuned models and optical character recognition (OCR) to create a structured, DOM-like representation of the UI with overlaid bounding boxes for interactable elements.

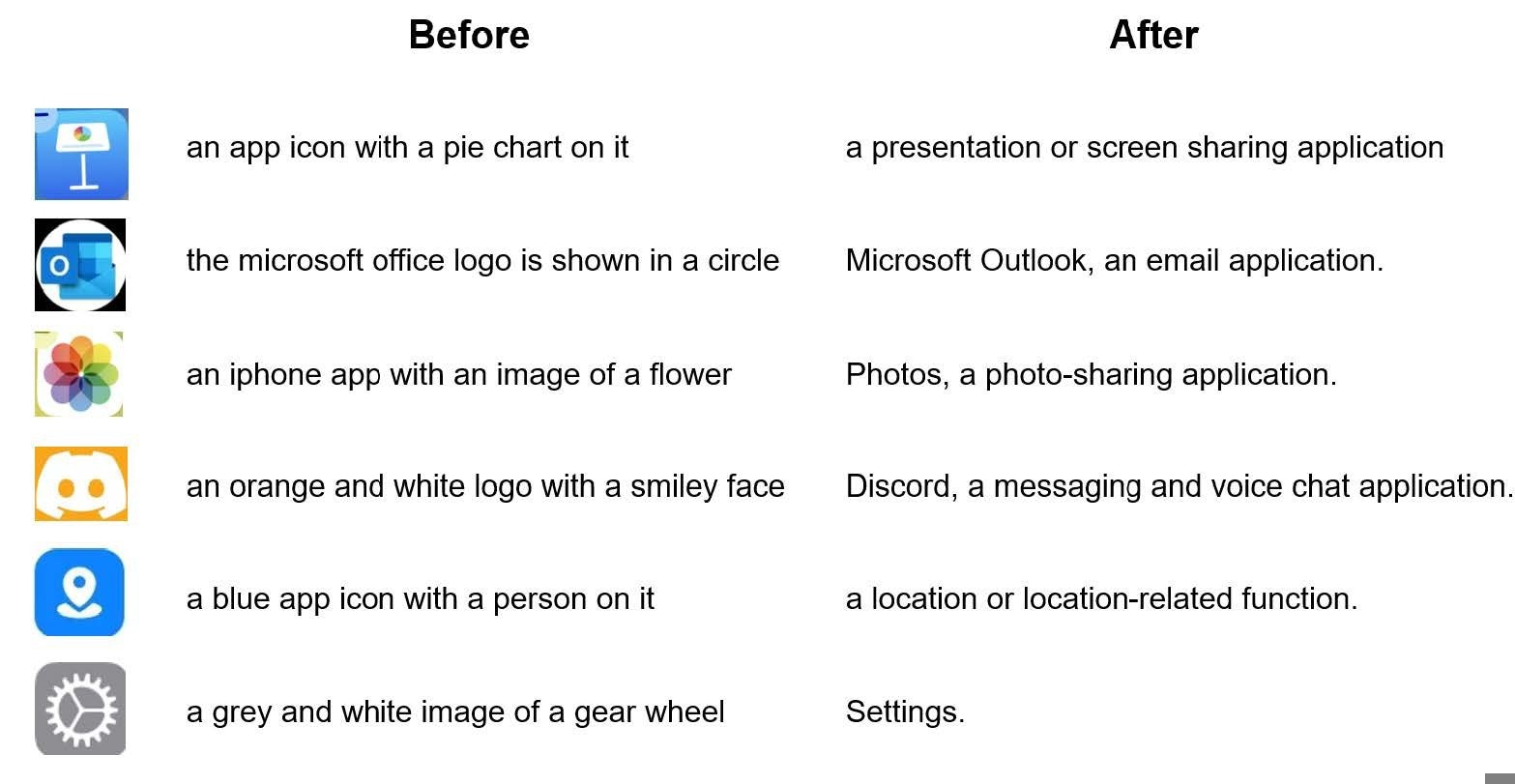

Example comparisons of icon description model using BLIP-2 (Left) and its fine-tuned version (Right). Original BLIP-2 model tend to focus on describing shapes and colors of app icons. After finetuning on the functionality semantics dataset, the model is able to show understanding of semantics of some common app icons.

Example comparisons of icon description model using BLIP-2 (Left) and its fine-tuned version (Right). Original BLIP-2 model tend to focus on describing shapes and colors of app icons. After finetuning on the functionality semantics dataset, the model is able to show understanding of semantics of some common app icons.

For interactable region detection, the researchers curated a dataset of 67,000 unique screenshots, labeling bounding boxes and incorporating functionality descriptions for common app icons and texts. This dataset includes 7,000 icon-description pairs to fine-tune the model, providing more reliable recognition of icon functionality in modern app environments.

Improving GPT-4V with OMNIPARSER To demonstrate the effectiveness of OMNIPARSER, experiments were conducted across several benchmarks, starting with an initial evaluation of GPT-4V's performance with 'set-of-mark' prompting, which overlays numeric IDs over bounding boxes for targeted identification. The results revealed that the model was prone to incorrect label assignment without sufficient semantic context.

Subsequent evaluations were performed on the ScreenSpot and Mind2Web benchmarks to illustrate how incorporating local semantics through OMNIPARSER could enhance GPT-4V's performance across platforms and applications.

The SeeAssign task evaluation created a custom dataset of 112 tasks across mobile, desktop, and web platforms, with challenging scenarios involving complex UI layouts. The addition of local semantics improved accuracy from 0.705 to 0.938, enhancing the model's ability to link task descriptions with correct icons.

The ScreenSpot dataset evaluation further confirmed OMNIPARSER's value, with significant performance improvements observed across mobile, desktop, and web platforms. OMNIPARSER’s results even exceeded specialized models fine-tuned for GUI data, highlighting the critical role of accurate interactable element detection. Additionally, incorporating local semantics, such as OCR text and detailed bounding box descriptions, enhanced GPT-4V’s ability to identify correct UI elements, thus improving overall task performance.

Adaptable Across Platforms

The comprehensive evaluation across multiple platforms underscores OMNIPARSER’s adaptability and robustness in handling diverse UI environments. These findings suggest that adding local semantics optimizes model performance and opens up opportunities for future advancements in intelligent UI interaction systems.

The Mind2Web benchmark further demonstrated OMNIPARSER's superior capabilities in tasks involving web navigation, such as cross-domain and cross-website challenges. Results indicated that OMNIPARSER, equipped with local semantics and the fine-tuned detection model, significantly outperformed both GPT-4V and other current methods, highlighting OMNIPARSER’s unique advantage in screen parsing to enhance action prediction accuracy.

Conclusion

In summary, OMNIPARSER presents a vision-only solution for parsing UI screenshots into structured elements using two specialized models: one for icon detection and another for generating functional descriptions. The authors curated datasets for interactable region detection and icon semantics, demonstrating significant improvements in GPT-4V’s ability to interpret and act upon complex UI elements.

OMNIPARSER outperformed GPT-4V models that utilize HTML data on the Mind2Web benchmark and surpassed specialized Android models on the AITW benchmark. With its user-friendly design, OMNIPARSER can parse screens across PC and mobile platforms without reliance on HTML or view hierarchy data, marking a step forward in generalizable UI interaction tools.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Lu, Y., Yang, J., Shen, Y., & Awadallah, A. (2024). OmniParser for Pure Vision Based GUI Agent. ArXiv. https://arxiv.org/abs/2408.00203