HART overcame the limitations of previous autoregressive (AR) models by using a hybrid tokenizer that combined discrete and continuous tokens. This approach improved image reconstruction quality and reduced training costs, leading to a model that rivaled diffusion models in image generation quality while being more efficient.

Background

AR models have emerged as a powerful tool for visual generation, offering versatility across various tasks. However, they often lag behind diffusion models regarding image quality and computational efficiency. The primary challenges lie in the poor reconstruction capabilities of discrete tokenizers and the high computational cost associated with generating high-resolution images.

Previous research has focused on improving AR models by enhancing tokenization techniques and exploring more efficient training methods. However, these efforts have not fully addressed the gap in image quality and computational efficiency compared to diffusion models.

This paper aimed to bridge this gap by introducing HART, a novel AR model that combined discrete and continuous tokens for improved image reconstruction and generation. HART leveraged the strengths of both AR and diffusion models, aiming to achieve comparable image quality while maintaining computational efficiency. By addressing the limitations of existing AR models, HART offered a promising approach for advancing the field of visual generation.

Efficient High-Resolution Image Generation

The HART introduced a novel approach to image generation by integrating both discrete and continuous latent spaces. Unlike traditional models limited to discrete or continuous tokens, HART combines both through a hybrid tokenizer and residual diffusion. This allowed HART to maintain high reconstruction quality, leveraging both discrete and continuous visual features for better image representation. The hybrid tokenizer alternated between these two token types during training, which improves the upper bound of generation compared to purely discrete models.

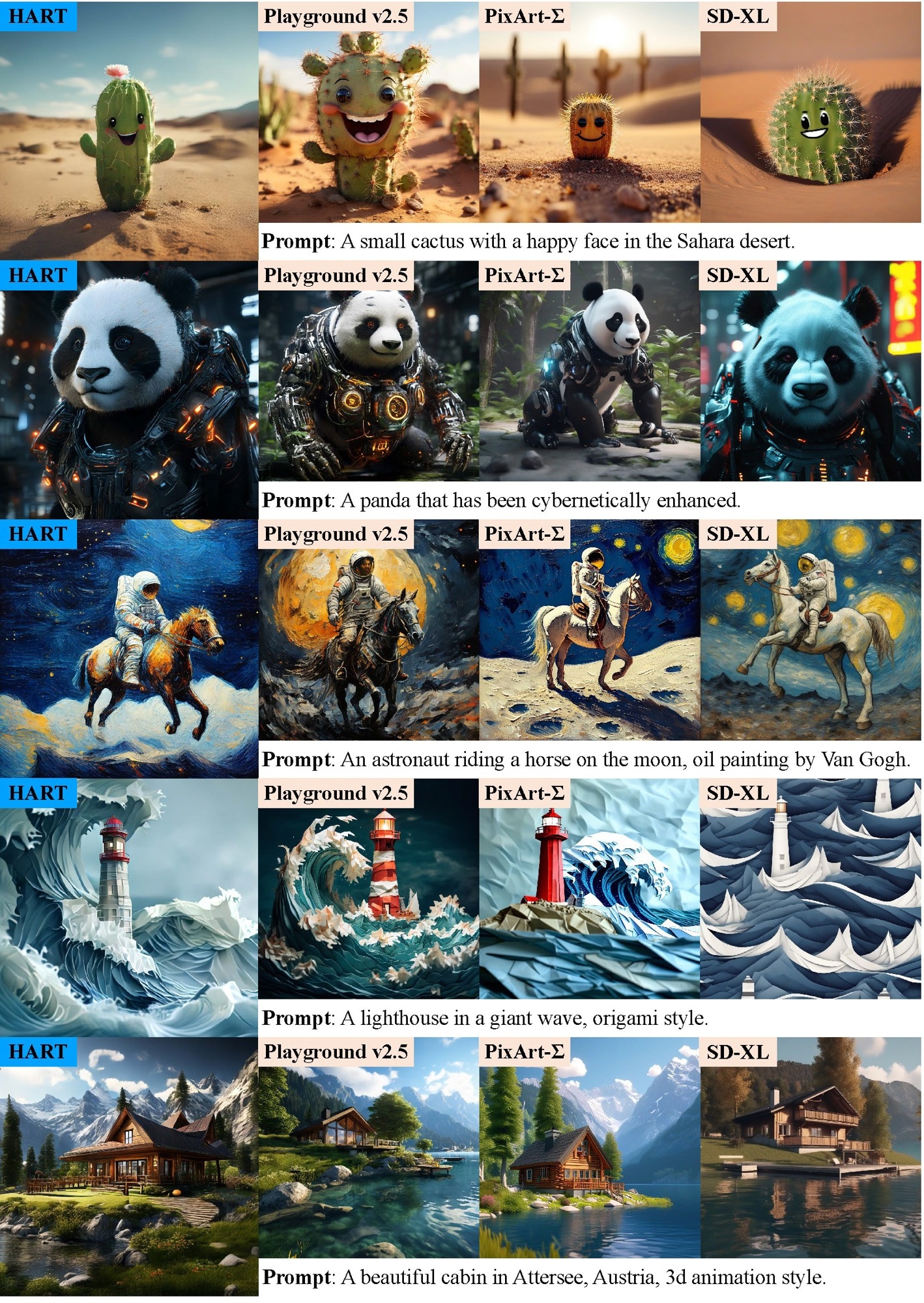

HART generates 1024px images with quality comparable to state-of-the-art diffusion models such as Playground v2.5 (Li et al., 2024a), PixArt-Σ (Chen et al., 2024a), and SDXL (Podell et al., 2023) while being 4.6-5.6× faster.

HART generates 1024px images with quality comparable to state-of-the-art diffusion models such as Playground v2.5 (Li et al., 2024a), PixArt-Σ (Chen et al., 2024a), and SDXL (Podell et al., 2023) while being 4.6-5.6× faster.

HART utilized a scalable-resolution AR transformer to model discrete tokens and incorporated a lightweight residual diffusion module to handle residual continuous tokens. This dual approach enabled efficient high-resolution image generation. By combining discrete tokens with residual diffusion, HART outperforms models that rely solely on one method, producing higher-quality images with fewer computational resources. Additionally, HART’s use of relative position embeddings accelerated convergence at higher resolutions, while optimizations during training and inference further reduced overhead.

HART’s innovations, such as token subsampling and the fusion of graphic processing unit (GPU) kernels for embeddings and normalization, enhanced both training speed and inference efficiency. This made HART an efficient solution for generating detailed, high-resolution images with fewer parameters and computational steps, offering significant improvements over existing autoregressive and diffusion-based methods.

Experimental Evaluation and Performance Analysis

The experiments conducted to evaluate the HART's performance focused on tokenization and generation, including text-to-image and class-conditioned image generation. HART used varying parameter sizes for the class-conditioned generation models, with an additional diffusion multilayer perceptron (MLP) containing 37 million (M) parameters. The model was reduced by 30% in parameters in text-conditioned generation, using Qwen2-1.5B as the text encoder.

HART was evaluated against state-of-the-art diffusion models on MJHQ-30K, ImageNet, GenEval, and DPG-Bench datasets. In terms of quality and efficiency, HART achieved comparable or superior performance with fewer parameters. For instance, at 512x512 resolution, HART achieved a 9.3x higher throughput and 4-7x lower latency than models like SD3-medium, showcasing its efficiency. Moreover, at 1024x1024 resolution, it outperformed PixArt-Σ and SDXL in both latency and throughput while demonstrating superior image quality.

Additionally, HART’s hybrid tokenization approach significantly reduced the reconstruction error at higher resolutions, improving Fréchet inception distance (FID) scores by 31% at 1024px compared to traditional VAR models. HART also benefits from an alternating training strategy for its hybrid tokenizer, which uses scalable-resolution transformers for efficient training. These results demonstrated that HART excelled in both quality and computational efficiency across various benchmarks.

Conclusion

In conclusion, the HART represented a significant advancement in visual generation models, effectively combining discrete and continuous tokens to enhance image quality and efficiency. By integrating a hybrid tokenizer and a lightweight residual diffusion module, HART not only achieved superior image reconstruction but also reduced computational costs.

Experimental evaluations demonstrated that HART delivered comparable or superior performance to state-of-the-art diffusion models, achieving faster throughput and lower latency. With its innovative approach, HART paves the way for future research in sequence-based visual generation, marking a promising development in the field.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Tang, H., Wu, Y., Yang, S., Xie, E., Chen, J., Chen, J., Zhang, Z., Cai, H., Lu, Y., & Han, S. (2024). HART: Efficient Visual Generation with Hybrid Autoregressive Transformer. ArXiv.org. DOI: 10.48550/arXiv.2410.10812, https://arxiv.org/abs/2410.10812