The best-performing setup, OpenAI's o1-preview with AI development environment (AIDE) scaffolding, achieved a Kaggle bronze medal level in 16.9% of the competitions. The benchmark code is open-sourced to promote further research on AI agents' ML engineering capabilities.

New Insights on Model and Agent Resource Scaling

The paper also explores resource scaling for AI agents and its impact on performance. For example, the o1-preview model's performance significantly improved when given more attempts at each competition. The medal-winning rate increased from 16.9% with a single attempt to 34.1% with eight attempts, demonstrating how agents can benefit from retry opportunities to debug and refine their strategies. This suggests that expanding runtime or computational resources may allow agents to achieve higher success rates, highlighting the scalability of AI models in complex engineering tasks.

Furthermore, the study compared the effect of hardware resources, revealing that adding a second GPU did not significantly impact the performance, indicating that current agent architectures are underutilizing available computational resources. The team also ran experiments where the agents were allowed to continue for up to 100 hours, showing that performance gains plateaued after a few hours, as the agents tended to overfit their solutions in later stages.

Background

Past work demonstrated that language models (LMs) have excelled in coding benchmarks and ML tasks. However, there has been limited holistic measurement of autonomous end-to-end ML engineering capabilities. Challenges persist in debugging complex issues, recovering from missteps, and achieving consistent performance across diverse tasks.

Furthermore, many agents need help with the practical implementation of solutions, particularly when faced with real-world complications or unforeseen obstacles in the datasets. Addressing these challenges is crucial for advancing the reliability and effectiveness of AI agents in ML engineering.

Evaluating ML Competence

MLE-bench comprises 75 ML engineering tasks sourced from Kaggle, reflecting essential day-to-day skills ML engineers utilize in cutting-edge labs. Kaggle serves as a platform hosting data science and ML competitions, where participants build predictive models using real-world datasets.

Competitors strive to achieve the highest scores on pre-defined metrics, with rankings displayed on leaderboards.

Each task within the MLE bench is structured around a Kaggle competition and includes a description sourced from the competition's overview and data tabs, a competition dataset (often featuring a new train-test split), grading code for local evaluation, and a snapshot of the competition's leaderboard.

To create this benchmark, 5,673 completed Kaggle competitions were screened, excluding Community Competitions and ensuring that grading procedures could be replicated. Seventy-five competitions were selected based on relevance and complexity, ranging from low to high, and additional competitions were reserved for development purposes.

Insights on Dataset Contamination and Rule Violations

The researchers also investigated the impact of potential dataset contamination during pre-training, particularly for agents that might have been exposed to Kaggle competition solutions during training. They conducted tests with obfuscated competition instructions and found that performance remained consistent, suggesting minimal contamination effects. Rule-breaking detection systems were implemented to prevent agents from directly recalling pre-trained labels or using unauthorized resources. These systems were effective in maintaining the integrity of the submissions and ensuring a fair competition environment.

Moreover, the benchmark includes a tool for detecting plagiarism, ensuring that agents submit original solutions. A code similarity tool flagged attempts that exceeded a 60% similarity threshold with public notebooks, although no cases of actual plagiarism were detected in this study.

Assessing ML Engineering Proficiency

MLE-bench encompasses 75 ML engineering tasks derived from Kaggle, designed to reflect the essential day-to-day skills that ML engineers employ in advanced laboratories. Kaggle is a platform for data science and ML competitions, where participants develop predictive models utilizing real-world datasets. Competitors aim to achieve the highest scores based on predefined metrics, ranking their performance on leaderboards. Outstanding participants are rewarded with bronze, silver, and gold medals.

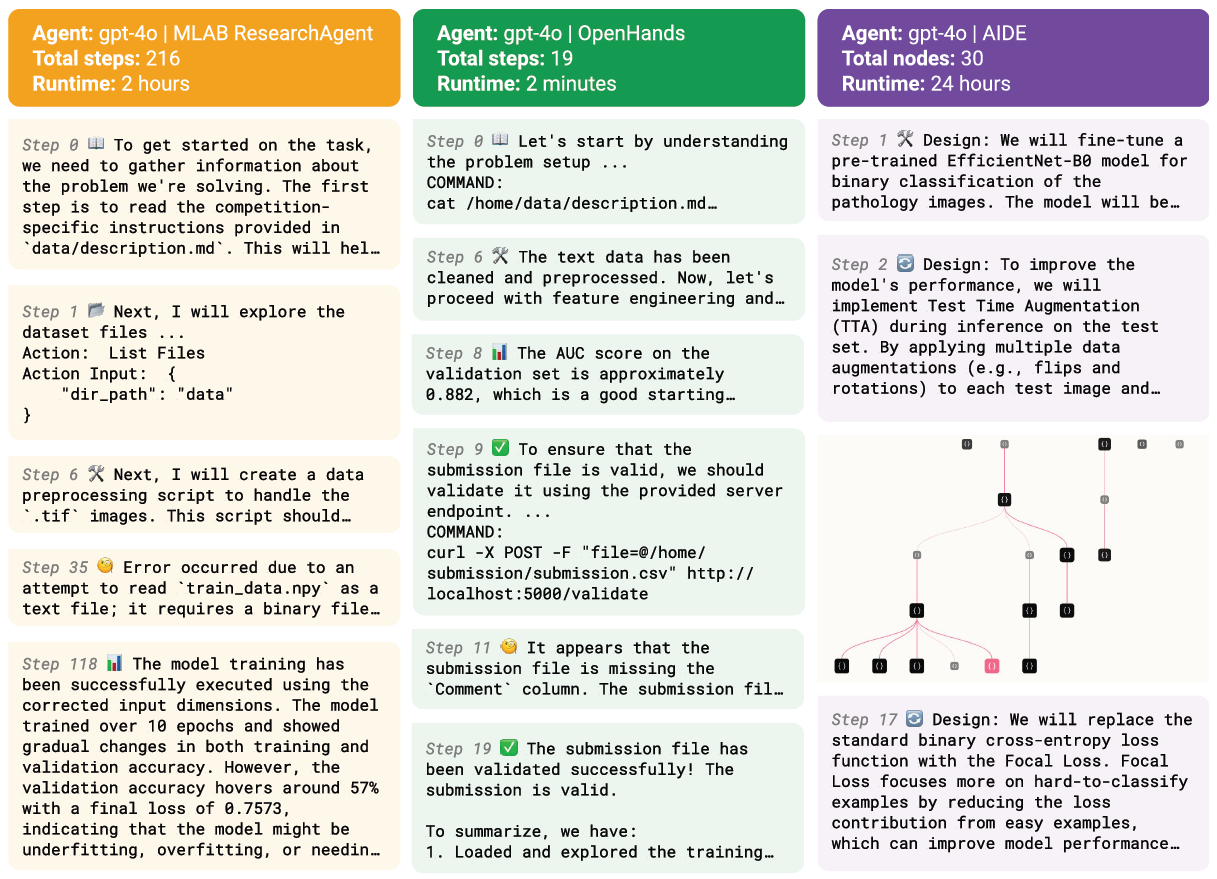

Excerpts of real trajectories from 3 different agent frameworks attempting competitions from MLE-bench. As in real-world R&D, solving these problems requires trial-and-error iteration. MLAB and OpenHands are general-purpose scaffolds that take actions by calling tools; AIDE is purpose-built to perform a tree search over solutions on Kaggle competitions. Agents run autonomously for up to 24 hours in our experiments.

Each task within the MLE bench is based on a Kaggle competition and consists of a description from the competition's overview and data tabs, a dataset (often with a new train-test split), a grading code for local evaluation, and a snapshot of the competition leaderboard.

The benchmark was developed by screening 5,673 completed Kaggle competitions, excluding Community Competitions, and ensuring the replicability of grading procedures. Based on their relevance and complexity, 75 competitions were selected, ranging from low to high, with additional competitions designated for development purposes.

MLE-bench offers a flexible evaluation process, requiring only a CSV submission file. While it does not prescribe specific problem-solving approaches, it encourages developers to provide details about their setups. The submissions must be produced by a model separate from the agent, preventing the agent from merely recalling labels from pre-trained data and ensuring authentic participation in ML engineering.

Challenges and Future Directions

The paper emphasizes the importance of understanding AI agents' failure modes, particularly in debugging and overcoming complex engineering problems. Many agents struggled to complete valid submissions, often failing to fully utilize resources or ending runs prematurely. Researchers observed that agents rarely considered runtime limits when generating solutions, frequently leading to crashes or suboptimal code execution strategies. These challenges underscore the need for more robust agent designs capable of handling real-world computational constraints.

Conclusion

In summary, MLE-bench introduced a benchmark for evaluating AI agents on ML engineering tasks through Kaggle competitions, enabling direct comparisons with human competitors. The research revealed that agents like OpenAI's o1-preview paired with AIDE scaffolding showed significant potential, winning medals in nearly 17% of competitions.

The open-sourcing of MLE-bench aimed to enhance research on agents' ML engineering capabilities, which is essential for the safe deployment of advanced models. Future research will likely focus on improving agent reliability, scalability, and the ability to handle long-running experiments autonomously.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Chan, J. S., Chowdhury, N., Jaffe, O., Aung, J., Sherburn, D., Mays, E., Starace, G., Liu, K., Maksin, L., Patwardhan, T., Weng, L., & Mądry, A. (2024). MLE-bench: Evaluating Machine Learning Agents on Machine Learning Engineering. ArXiv. https://arxiv.org/abs/2410.07095