They proposed two methods: ComfyGen-IC, which selects workflows based on historical performance, and ComfyGen-FT, which fine-tunes predictions using a broader dataset, including both high- and low-scoring workflows. Both methods enhanced image quality compared to traditional models, highlighting a novel direction for improving text-to-image generation by adapting workflows based on the input prompt.

Background

Text-to-image generation has evolved from simple models to complex, multi-component workflows aimed at improving image quality. Previous work in the field primarily focused on enhancing individual components, such as refining the generative model or using large language models (LLMs) for better prompt interpretation. However, crafting workflows remains a manual, expertise-heavy task. Many existing methods struggle to generalize or are computationally expensive, requiring extensive fine-tuning or reinforcement learning.

This paper addressed these gaps by proposing a novel approach, namely, prompt-adaptive workflow generation. Instead of relying on static models, it leveraged LLMs to automatically tailor workflows to specific user prompts.

Two approaches were introduced: ComfyGen-IC, which selects workflows based on prompt similarity without additional fine-tuning, and ComfyGen-FT, which fine-tunes predictions using target scores and leverages negative examples to improve prediction accuracy. These methods optimized the entire image generation pipeline, aligning it with human preferences, and demonstrated superior results compared to traditional models and fixed workflows.

This research filled a critical gap by automating workflow design, making text-to-image generation more efficient and accessible without sacrificing quality.

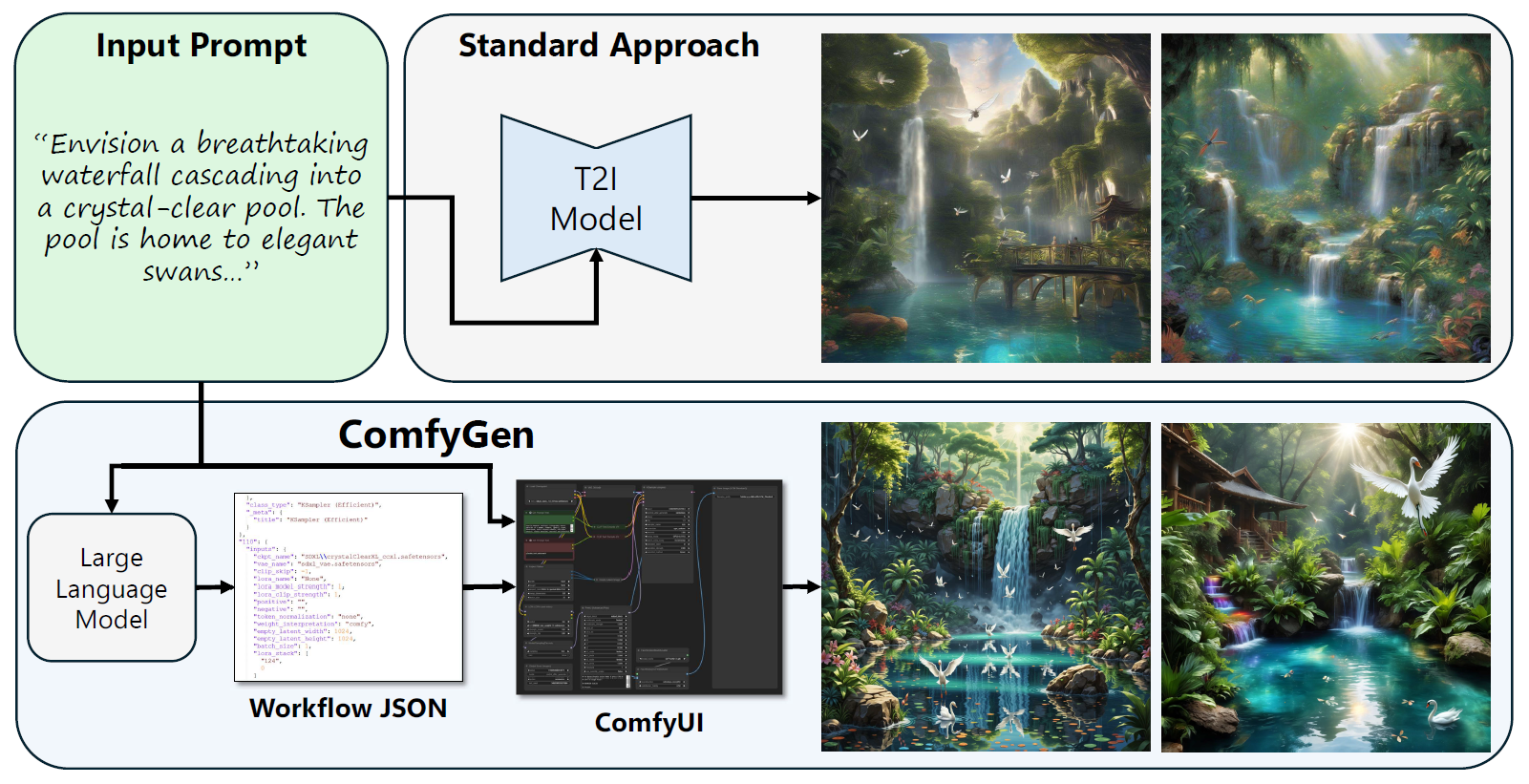

The standard text-to-image generation flow (top) employs a single monolithic model to transform a prompt into an image. However, the user community often relies on complex multimodel workflows, hand-crafted by expert users for different scenarios. We leverage an LLM to automatically synthesize such workflows, conditioned on the user’s prompt (bottom). By choosing components that better match the prompt, the LLM improves the quality of the generated image.

Prompt-Adaptive Workflow Selection Methods

The proposed method aimed to improve text-to-image generation by selecting workflows tailored to specific prompts rather than using generic or monolithic models. The researchers hypothesized that effective workflows depended on the topics and themes in the prompt, which is why they leveraged LLMs to predict the best workflow for each prompt.

Their work built on ComfyUI, an open-source tool that lets users design generative pipelines by combining blocks such as models, samplers, and upscaling modules. Starting from an initial collection of 500 workflows sourced from generative-resource-sharing websites, the researchers filtered out complex workflows and augmented the remaining ones with random parameter changes, producing 310 distinct workflows for their experiments.
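The augmentation step can be illustrated with a minimal sketch. It assumes a workflow is stored as a ComfyUI API-style dictionary mapping node ids to a class type and its inputs. The base workflow, the checkpoint file name, and the parameter choices below are all hypothetical, since the article does not say which parameters the researchers varied.

```python
import copy
import random

# Hypothetical workflow in ComfyUI API-style JSON: node id -> class_type + inputs.
BASE_WORKFLOW = {
    "3": {"class_type": "KSampler",
          "inputs": {"steps": 20, "cfg": 7.0, "sampler_name": "euler"}},
    "4": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "example_model.safetensors"}},
}

# Assumed value ranges, chosen only for illustration.
PARAM_CHOICES = {
    "steps": [15, 20, 30, 40],
    "cfg": [4.0, 6.0, 7.0, 9.0],
    "sampler_name": ["euler", "dpmpp_2m", "ddim"],
}


def augment(workflow, rng):
    """Return a copy of the workflow with known parameters re-drawn at random."""
    new = copy.deepcopy(workflow)
    for node in new.values():
        for name in node["inputs"]:
            if name in PARAM_CHOICES:
                node["inputs"][name] = rng.choice(PARAM_CHOICES[name])
    return new


def make_variants(workflow, n, seed=0):
    """Generate up to n distinct variants; duplicates are dropped."""
    rng = random.Random(seed)
    seen, variants = set(), []
    attempts = 0
    while len(variants) < n and attempts < 100 * n:
        attempts += 1
        candidate = augment(workflow, rng)
        key = repr(candidate)  # stable because dict insertion order is preserved
        if key not in seen:
            seen.add(key)
            variants.append(candidate)
    return variants


variants = make_variants(BASE_WORKFLOW, n=5)
```

Deduplicating on the serialized workflow keeps the resulting set distinct, which matches the article's description of 310 distinct workflows without claiming to reproduce how the authors achieved it.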

To evaluate workflow performance, they used a set of 500 prompts and synthesized images, which were scored by quality prediction models. The scores formed a dataset of prompt, workflow, and score triplets.
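A rough sketch of how such triplets might be assembled follows. It assumes every prompt is paired with every workflow, which the article does not specify. The functions `generate_image` and `score_image` are hypothetical placeholders for running a workflow and for the quality prediction models, and the scores they produce are toy values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triplet:
    prompt: str
    workflow_id: str
    score: float


def generate_image(prompt, workflow_id):
    """Hypothetical stand-in for running a workflow; returns a fake image handle."""
    return f"{workflow_id}::{prompt}"


def score_image(prompt, image):
    """Hypothetical stand-in for a quality prediction model; toy value in [0, 1)."""
    return (sum(map(ord, image)) % 100) / 100.0


def build_dataset(prompts, workflow_ids):
    """Pair each prompt with each workflow and record the resulting score."""
    dataset = []
    for prompt in prompts:
        for workflow_id in workflow_ids:
            image = generate_image(prompt, workflow_id)
            dataset.append(Triplet(prompt, workflow_id, score_image(prompt, image)))
    return dataset


dataset = build_dataset(["a red fox", "a city at night"], ["wf_a", "wf_b", "wf_c"])
```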

Two approaches were explored: ComfyGen-IC, which used a pre-trained LLM to classify new prompts and select the best workflow based on historical performance, and ComfyGen-FT, which fine-tuned an LLM to predict workflows given a prompt and a target score. The latter allowed for more extensive training, including learning from both high- and low-scoring workflows, resulting in improved predictions.

This broader dataset allowed ComfyGen-FT to handle more diverse prompts and predict more effective workflows. Both methods demonstrated superior image generation quality compared to standard models and fixed workflows.
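The two approaches can be contrasted in a small sketch under loud assumptions. For ComfyGen-IC, it assumes selection amounts to picking the workflow with the highest mean historical score within the prompt's categories, with a keyword lookup standing in for the LLM's classification. For ComfyGen-FT, it shows one plausible layout for a training record that pairs a prompt and target score with a workflow. The category names, history values, and record format are illustrative and not taken from the paper.

```python
from collections import defaultdict

# Toy history of (category, workflow_id, score); all values are made up.
HISTORY = [
    ("people", "wf_portrait", 0.9),
    ("people", "wf_anime", 0.4),
    ("anime", "wf_anime", 0.8),
    ("anime", "wf_portrait", 0.3),
    ("people", "wf_portrait", 0.7),
]

# Hypothetical keyword lists standing in for LLM-based prompt classification.
KEYWORDS = {"people": {"woman", "man", "portrait"}, "anime": {"anime", "manga"}}


def classify(prompt):
    """Return the categories whose keywords appear as whole words in the prompt."""
    words = set(prompt.lower().split())
    return [cat for cat, keys in KEYWORDS.items() if words & keys]


def select_workflow(prompt, history):
    """IC-style sketch: pick the best mean score within the prompt's categories."""
    categories = classify(prompt)
    totals = defaultdict(lambda: [0.0, 0])  # workflow_id -> [score sum, count]
    for cat, workflow_id, score in history:
        # With no matched category, fall back to the full history.
        if not categories or cat in categories:
            totals[workflow_id][0] += score
            totals[workflow_id][1] += 1
    return max(totals, key=lambda wf: totals[wf][0] / totals[wf][1])


def make_ft_example(prompt, score, workflow_id):
    """FT-style sketch: a record mapping (prompt, target score) to a workflow."""
    return {
        "input": f"Prompt: {prompt}\nTarget score: {score:.2f}",
        "output": workflow_id,
    }


choice_people = select_workflow("portrait of an old man", HISTORY)
choice_anime = select_workflow("anime girl with a sword", HISTORY)
# Low-scoring pairs are kept too, so the record set includes negative examples.
ft_records = [make_ft_example(f"a {cat} prompt", s, wf) for cat, wf, s in HISTORY]
```

Because the fine-tuning records carry the score as part of the input, low-scoring workflows remain usable training data, which is the property the article attributes to ComfyGen-FT.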

Experiments, Analysis, and Limitations

ComfyGen was evaluated against two alternative strategies: fixed models conditioned on prompts and generic workflows used across all prompts. The baseline models included Stable Diffusion XL (SDXL), its fine-tuned variations (JuggernautXL, DreamshaperXL, and direct preference optimization (DPO)-SDXL), and popular workflows like Segmind Stable Diffusion (SSD-1B) and Pixart-Σ.

The authors benchmarked their approach using the GenEval framework, which tested prompt alignment in tasks such as object counting, attribute binding, and position accuracy. Their fine-tuned (ComfyGen-FT) and in-context (ComfyGen-IC) models outperformed all baselines, demonstrating superior prompt alignment and visual quality.

However, ComfyGen-IC showed reduced performance on short, simplistic prompts used in GenEval, suggesting that brief prompts posed challenges for LLMs in workflow classification.

Further evaluation was conducted using 500 CivitAI prompts, filtered for quality and content appropriateness, and both ComfyGen methods consistently outscored baselines in both automated assessments (human preference score (HPS) v2.0) and a user preference study. ComfyGen-FT demonstrated higher flow diversity, utilizing 79 unique workflows, compared to 41 for ComfyGen-IC, indicating better adaptation to prompt variations.

An analysis of workflow selection revealed intuitive patterns in flow component selection, such as the frequent use of the GFPGAN model for 'People' prompts and anime-specific models for 'Anime' prompts. Despite the successes, limitations persisted, such as the models' inability to address complex tasks or adapt quickly to new components without retraining.

The researchers suggested future directions, including using reinforcement learning to explore flow parameters more efficiently and incorporating retrieval-based methods to improve scalability.

Conclusion

In conclusion, the researchers introduced ComfyGen, a novel approach to text-to-image generation using prompt-adaptive workflows. By tailoring workflows based on specific user prompts, the study demonstrated improvements in image quality compared to fixed, monolithic models. Two methods were proposed, namely, ComfyGen-IC, which selected workflows based on prompt similarity, and ComfyGen-FT, which fine-tuned predictions using target scores.

Both approaches significantly outperformed traditional methods. The authors highlighted a new direction for workflow optimization in generative models, making the process more efficient and accessible. Future work will focus on expanding the scope of prompt-adaptive workflows to tasks such as image-to-image generation and more complex control-based editing tasks.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Gal, R., Haviv, A., Alaluf, Y., Bermano, A. H., & Chechik, G. (2024). ComfyGen: Prompt-Adaptive Workflows for Text-to-Image Generation. ArXiv. abs/2410.01731, https://arxiv.org/abs/2410.01731