The system not only generated novel research ideas but also wrote code, executed experiments, visualized results, and wrote full scientific papers. It even incorporated a simulated review process to develop and improve ideas iteratively. This framework, tested across subfields like diffusion modeling, language modeling, and learning dynamics, demonstrated potential for accelerating scientific discovery at a remarkably low cost of about $15 per paper.

Related Work

Previous approaches introduced frameworks where AI models assisted in specific aspects of scientific research, such as brainstorming ideas, writing code, and prediction tasks. These early systems played a supportive role rather than autonomously conducting most of the research process.

AI models were used primarily to automate routine tasks and aid human researchers. Still, they did not generate or develop research ideas independently, nor did they manage tasks like running experiments or writing full scientific papers.

Automated Scientific Research Framework

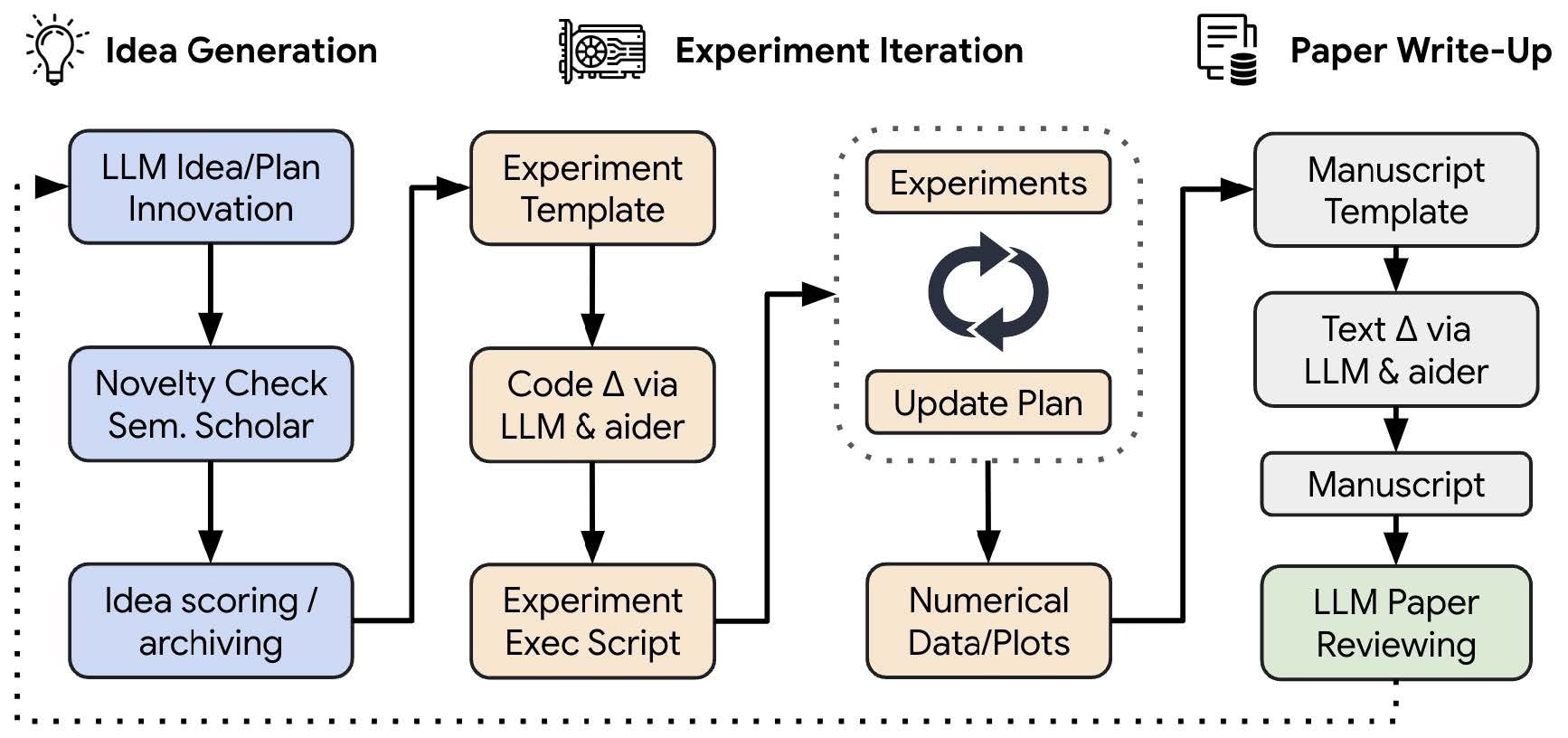

The AI Scientist framework operates in three phases: (1) Idea Generation, (2) Experiment Iteration, and (3) Paper Write-up. Starting with a code template, it generates novel research ideas, executes experiments, visualizes results, and writes a full scientific paper.

It uses multiple iterations of refinement and web searches to check novelty and accuracy, while the final write-up is compiled using LaTeX, a typesetting system widely used for scientific writing. Afterward, the system conducts an automated review to assess the quality of the generated paper, then refines the paper based on that feedback to improve its overall presentation.

Conceptual illustration of The AI Scientist, an end-to-end LLM-driven scientific discovery process. The AI Scientist first invents and assesses the novelty of a set of ideas. It then determines how to test the hypotheses, including writing the necessary code by editing a codebase powered by recent advances in automated code generation. Afterward, the experiments are automatically executed to collect a set of results consisting of both numerical scores and visual summaries (e.g. plots or tables). The results are motivated, explained, and summarized in a LaTeX report. Finally, The AI Scientist generates an automated review, according to current practice at standard machine learning conferences. The review can be used to either improve the project or as feedback to future generations for open-ended scientific discovery.
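The phases described above can be sketched as a simple driver loop. This is a minimal illustration only, not the authors' implementation: the `Idea` class, `run_pipeline`, and every callable passed into it are hypothetical names, and the LLM, search, and execution steps are replaced by stub functions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Idea:
    """Hypothetical container for one research idea and its artifacts."""
    title: str
    is_novel: bool = False
    results: List[str] = field(default_factory=list)
    paper: str = ""


def run_pipeline(
    seed_ideas: List[str],
    generate: Callable[[List[str]], List[Idea]],
    check_novelty: Callable[[Idea], bool],
    run_experiment: Callable[[Idea], List[str]],
    write_paper: Callable[[Idea], str],
    review: Callable[[str], float],
) -> List[Tuple[str, float]]:
    """Idea generation -> experiment iteration -> write-up -> automated review."""
    reviewed = []
    for idea in generate(seed_ideas):            # Phase 1: idea generation
        idea.is_novel = check_novelty(idea)      # e.g. backed by a web search
        if not idea.is_novel:
            continue
        idea.results = run_experiment(idea)      # Phase 2: experiment iteration
        if not idea.results:
            continue                             # drop ideas whose experiments failed
        idea.paper = write_paper(idea)           # Phase 3: paper write-up
        reviewed.append((idea.title, review(idea.paper)))  # automated review
    return reviewed


# Stubbed usage: idea "b" is judged not novel, idea "c" has a failed experiment.
demo_output = run_pipeline(
    ["seed idea"],
    generate=lambda seeds: [Idea("a"), Idea("b"), Idea("c")],
    check_novelty=lambda idea: idea.title != "b",
    run_experiment=lambda idea: [] if idea.title == "c" else ["loss=0.1"],
    write_paper=lambda idea: "\\documentclass{article} % " + idea.title,
    review=lambda paper: 5.0,
)
```

Passing each stage in as a callable keeps the sketch independent of any particular model or toolchain; in a real system each stub would wrap an LLM call, a code runner, or a LaTeX build.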


Automated Paper Review System

The paper outlines the design of an automated paper reviewing system built on GPT-4o. The system is applied specifically to scientific papers and follows the Neural Information Processing Systems (NeurIPS) conference review guidelines.

The system processes raw text from manuscripts and evaluates papers on multiple factors, including soundness, presentation, contribution, and overall quality. Alongside written feedback, it produces numerical scores and a binary decision (accept/reject). It uses techniques such as few-shot prompting, self-reflection, and review ensembling to improve decision-making.
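Review ensembling can be illustrated with a short sketch that averages several independent reviews and derives the binary decision from the averaged overall score. The function name, the dictionary layout, and the acceptance threshold of 6 are assumptions made for illustration; the article does not specify how the system combines reviews.

```python
from statistics import mean
from typing import Dict, List

# Score categories named in the text above.
SCORE_KEYS = ("soundness", "presentation", "contribution", "overall")


def ensemble_reviews(
    reviews: List[Dict[str, float]],
    accept_threshold: float = 6.0,  # assumed cutoff, not taken from the article
) -> Dict[str, object]:
    """Average each score across reviews and derive an accept/reject decision."""
    if not reviews:
        raise ValueError("at least one review is required")
    combined: Dict[str, object] = {
        key: mean(review[key] for review in reviews) for key in SCORE_KEYS
    }
    combined["decision"] = (
        "accept" if combined["overall"] >= accept_threshold else "reject"
    )
    return combined


# Three hypothetical reviews of the same paper.
sample_reviews = [
    {"soundness": 3.0, "presentation": 2.0, "contribution": 3.0, "overall": 5.0},
    {"soundness": 3.0, "presentation": 3.0, "contribution": 2.0, "overall": 6.0},
    {"soundness": 3.0, "presentation": 4.0, "contribution": 4.0, "overall": 7.0},
]
ensembled = ensemble_reviews(sample_reviews)
```

Averaging smooths out the variance of individual LLM reviews, which is the usual motivation for ensembling; other aggregation rules (median, majority vote on decisions) would fit the same interface.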

The performance of the GPT-4o-based reviewer is compared with human reviewers using a dataset of 500 International Conference on Learning Representations (ICLR) 2022 papers. The results indicate that the AI reviewer performs comparably to human reviewers, reaching human-level accuracy on balanced datasets and a higher F1 score.

The system was tested against other foundation models like Claude Sonnet 3.5 and GPT-4o-mini, which proved less efficient or accurate. The AI-based reviewer was also tested for consistency in scoring compared to human reviewers, and the correlation between AI-generated scores and human average scores was slightly higher than the correlation between two human reviewers.
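The comparison metrics mentioned above (balanced accuracy, F1 score, and score correlation) can be computed as in the following sketch. The function names and the toy labels are hypothetical and are not data from the paper; labels use 1 for accept and 0 for reject.

```python
from math import sqrt
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for binary labels, with 1 = accept and 0 = reject."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def balanced_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Mean of the true-positive and true-negative rates (both classes must occur)."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Harmonic mean of precision and recall for the accept class."""
    tp, fp, _, fn = confusion_counts(y_true, y_pred)
    return 2 * tp / (2 * tp + fp + fn)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length score lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Toy example: human decisions versus hypothetical AI reviewer decisions.
human = [1, 1, 0, 0, 0, 0]
ai = [1, 0, 0, 0, 0, 1]
toy_balanced_accuracy = balanced_accuracy(human, ai)
toy_f1 = f1_score(human, ai)
```

Balanced accuracy matters here because most submissions are rejected, so plain accuracy would reward a reviewer that rejects everything.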

Moreover, a case study on an AI-generated paper, "adaptive dual-scale denoising," reveals both the strengths and limitations of the AI Scientist, which autonomously generates research ideas, code, and experiments.

While the generated paper demonstrated impressive algorithmic insights, experimental details, and novel visualizations, it also exhibited notable errors, such as hallucinated details (e.g., incorrectly identifying the hardware and software used), positive bias in interpreting results, and minor experimental flaws.

Overall, the LLM-based review system shows promise as a tool for initial evaluations of papers. It offers valuable feedback, and its scores align more closely with the average human reviewer score than individual human reviewers' scores do. However, there is still room for improvement, especially in reducing false positives and enhancing the clarity of AI-generated papers.

AI Scientist Evaluation Insights

The AI Scientist was evaluated extensively using three templates across publicly available LLMs, including Claude Sonnet 3.5, DeepSeek Coder, and GPT-4o. Each run involved providing 1-2 seed ideas, leading to the generation of up to 50 new ideas. Performance was assessed on novelty, experimental success rate, and valid manuscript completion.

Results indicated that Claude Sonnet 3.5 produced the highest-quality papers, while GPT-4o struggled with LaTeX formatting, impacting its completion rate.

Despite these challenges, both models demonstrated significant potential for idea generation in scientific contexts, paving the way for future enhancements in the automation of research processes. The findings suggested that while LLMs can assist in the earlier stages of research, substantial ongoing improvements are essential for achieving higher standards in scientific writing.

The evaluations revealed that the AI-generated papers often exhibited both notable strengths and weaknesses, showing potential despite shortcomings in quality and detail. While some papers showcased innovative ideas, others needed more depth in scientific writing, highlighting the need for further model refinement.

Conclusion

In summary, the introduction of the AI Scientist represents a significant advancement in harnessing AI for scientific research, automating the discovery process while incorporating an AI-driven review system. The authors aimed to create an AI-driven scientific ecosystem, though they expected the role of human scientists to evolve rather than diminish.

The current version showed strong innovation capabilities but raised questions about its potential to propose truly paradigm-shifting ideas. Ultimately, the AI Scientist was positioned as a highly capable tool that could augment human scientists, though its ability to replicate the full extent of human creativity remains an open question.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.


Journal reference:

- Preliminary scientific report.

Lu, C., Lu, C., Lange, R. T., Foerster, J., Clune, J., & Ha, D. (2024). The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery. arXiv:2408.06292. https://arxiv.org/abs/2408.06292