By systematically categorizing uncertainties in 3D scene reconstruction, researchers offer new tools to enhance the accuracy and reliability of neural rendering methods, paving the way for more robust real-world applications.

Study: Sources of Uncertainty in 3D Scene Reconstruction

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

In an article recently posted on the arXiv* server, the researchers discussed how uncertainties such as noise and occlusions impact the accuracy and reliability of 3D scene reconstruction using neural radiance fields (NeRFs) and 3D Gaussian splatting (GS). They introduced a taxonomy to categorize these uncertainties into four distinct types: aleatoric, epistemic, confounding outliers, and input uncertainty, and extended NeRF/GS methods with uncertainty estimation techniques such as ensembles and Bayesian methods. Their empirical study highlighted the intricate ways these uncertainties interact and the critical importance of addressing them in the design of NeRF/GS-based 3D reconstruction methods.

Background

Past work has shown that 3D scene reconstruction methods like NeRFs and 3D GS can produce artifacts in uncertain regions. Despite their high-fidelity reconstruction capabilities, these methods struggle with sparse views, occlusions, and moving objects, and they lack mechanisms to identify and quantify uncertainties. Recent efforts have addressed epistemic uncertainty by recognizing unobserved parts of the scene and selecting the next-best view.

At the same time, other research has tackled aleatoric uncertainty by detecting moving objects or distractors. However, these approaches often target specific application tasks rather than providing a comprehensive uncertainty framework. Defining the types of uncertainty that NeRFs and GS need to quantify, and understanding how those types interact, is therefore crucial for robust 3D scene reconstruction.

Uncertainty in NeRF

The authors identified four types of uncertainty in neural scene reconstruction: aleatoric, epistemic, confounding outliers, and input uncertainty. They discussed how these uncertainties affect methods like NeRFs and 3D Gaussian Splatting. By extending these methods with uncertainty estimation techniques such as ensembles and Bayesian methods, they set out to analyze systematically how each source of uncertainty affects 3D reconstruction across different scenarios. Their study extended previous works by offering a more comprehensive evaluation of the influence of different uncertainty sources on reconstructed scenes.

Neural Reconstruction Uncertainties

The researchers explored the presence of uncertainty in neural scene reconstruction, focusing on NeRFs and 3D GS. They identified four main types of uncertainty: aleatoric, which arises from inherent noise in the observations; epistemic, which is due to insufficient information about parts of the scene; confounding outliers, caused by elements such as moving objects; and input uncertainty, which reflects the reconstruction's sensitivity to the input camera poses.

These uncertainties can significantly affect the performance of methods like NeRFs and GS, potentially leading to inaccuracies in the reconstructed scenes. Aleatoric uncertainty is irreducible because it stems from random effects in the observations, whereas epistemic uncertainty can be reduced by capturing data from new viewpoints. Confounding outliers introduce ambiguities, and input uncertainty concerns how changes in camera pose affect the reconstruction.
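
One common way to make the split between the first two types concrete is the law of total variance. This is an illustrative assumption here and not necessarily the formulation used in the paper. Writing θ for the model parameters (for example ensemble members or posterior samples), and assuming each model predicts a mean μ_θ(x) and a noise variance σ²_θ(x) for a pixel x, the predictive variance separates into two terms:

```latex
\operatorname{Var}[y \mid x]
  = \underbrace{\mathbb{E}_{\theta}\!\left[\sigma_{\theta}^{2}(x)\right]}_{\text{aleatoric}}
  + \underbrace{\operatorname{Var}_{\theta}\!\left[\mu_{\theta}(x)\right]}_{\text{epistemic}}
```

Under this reading, the first term reflects observation noise that more views do not remove, while the second term typically shrinks as additional viewpoints bring the models into agreement.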

To address these issues, the authors incorporated various uncertainty estimation techniques, including ensembles and Bayesian methods. Specifically, ensembles involve training multiple networks with different initializations, while Bayesian methods quantify epistemic uncertainty through posterior approximations. By applying these techniques, they aimed to capture and analyze the reconstruction's sensitivity to these uncertainties.
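
The ensemble idea can be sketched in a few lines. The example below is a minimal NumPy illustration, not the authors' implementation: the function name `ensemble_uncertainty` is hypothetical, and the "renders" are synthetic arrays standing in for images produced by independently initialized models. Per-pixel disagreement between members is used as the uncertainty estimate.

```python
import numpy as np


def ensemble_uncertainty(renders: np.ndarray):
    """Combine renders of the same view from M ensemble members.

    renders: array of shape (M, H, W, 3), one RGB image per member.
    Returns the mean image (H, W, 3) and a per-pixel uncertainty map (H, W).
    """
    if renders.ndim != 4 or renders.shape[0] < 2:
        raise ValueError("expected at least two renders of shape (M, H, W, 3)")
    mean_image = renders.mean(axis=0)
    # Variance across members, averaged over the colour channels.
    uncertainty = renders.var(axis=0).mean(axis=-1)
    return mean_image, uncertainty


# Synthetic stand-in for five members rendering a 4x4 view (assumption:
# real renders would come from separately trained models).
rng = np.random.default_rng(0)
M, H, W = 5, 4, 4
renders = np.full((M, H, W, 3), 0.5)
# Members disagree only in the right half, mimicking a poorly observed region.
renders[:, :, W // 2:, :] += rng.normal(0.0, 0.1, size=(M, H, W - W // 2, 3))

mean_image, uncertainty = ensemble_uncertainty(renders)
print(uncertainty.round(4))
```

In this toy setup the uncertainty map is zero where all members agree and positive where they diverge, which is the behaviour an ensemble relies on to flag under-constrained regions.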

Their study goes beyond prior research by conducting an in-depth analysis of how different sources of uncertainty influence 3D scene reconstruction. This deeper understanding is crucial for improving the robustness and reliability of NeRF and GS methods in real-world applications, where uncertainties are often intertwined and can affect the final output quality.

Uncertainty Evaluation

The researchers conducted experiments to evaluate different aspects of uncertainty in 3D scene reconstruction using various NeRF and GS methods. They implemented their strategies within the Nerfstudio framework and used Nvidia A100 GPUs for their experiments.

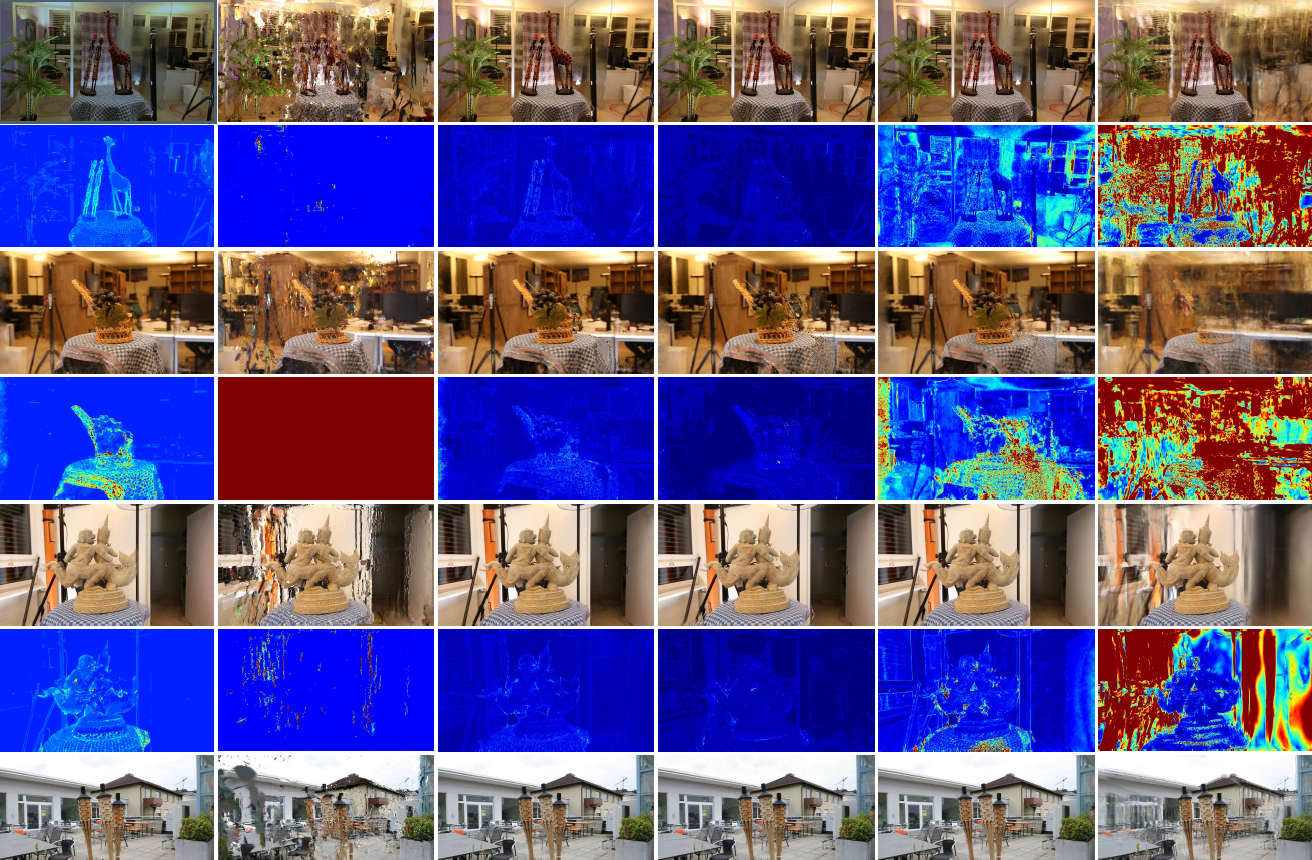

The methods examined were active-nerfacto, active-splatfacto, MC-dropout-nerfacto (Monte Carlo dropout), Laplace-nerfacto, ensemble-nerfacto, and ensemble-splatfacto, which estimate uncertainty with techniques such as dropout layers, Laplace approximations, and ensembles. The experiments used the mip-NeRF 360, Blender, and Light Field datasets and covered aleatoric and epistemic uncertainty as well as sensitivity to cluttered inputs and to input pose variations.
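
To illustrate what MC dropout does in general, the sketch below keeps dropout active at prediction time and summarizes several stochastic forward passes by their mean and variance. It is a toy two-layer network with random weights in NumPy, assumed purely for illustration; `mc_dropout_predict` and its parameters are hypothetical names and do not reflect how MC-dropout-nerfacto is built.

```python
import numpy as np


def mc_dropout_predict(x, w1, w2, drop_prob=0.2, num_samples=50, rng=None):
    """Run several stochastic forward passes with dropout left on.

    x: inputs (N, D); w1: (D, K) and w2: (K, O) are the layer weights.
    Returns the predictive mean and variance, each of shape (N, O).
    """
    rng = np.random.default_rng() if rng is None else rng
    keep = 1.0 - drop_prob
    outputs = []
    for _ in range(num_samples):
        hidden = np.maximum(x @ w1, 0.0)          # ReLU activation
        mask = rng.random(hidden.shape) < keep    # fresh dropout mask per pass
        hidden = hidden * mask / keep             # inverted dropout scaling
        outputs.append(hidden @ w2)
    outputs = np.stack(outputs)                   # (num_samples, N, O)
    return outputs.mean(axis=0), outputs.var(axis=0)


rng = np.random.default_rng(0)
w1 = rng.normal(size=(3, 16))
w2 = rng.normal(size=(16, 1))
x = rng.normal(size=(8, 3))

mean, var = mc_dropout_predict(x, w1, w2, rng=rng)
print(mean.shape, var.shape)
```

The variance across passes serves as the uncertainty signal. With `drop_prob=0.0` every pass is identical and the variance collapses to zero, which is a quick sanity check on the setup.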

The results showed how each method handled the different types of uncertainty. Active-nerfacto and the ensemble methods performed best on aleatoric uncertainty across the noise and blur scenarios. The same methods also led on epistemic uncertainty, with higher image quality and better calibration as the number of training views increased.

Ensemble-splatfacto produced the best image quality and effective uncertainty maps in cluttered scenarios, whereas ensemble-nerfacto was effective at detecting artifacts. For input pose perturbations, gradient norm maps showed the largest changes in high-contrast areas, illustrating how pose inaccuracies affect reconstruction sensitivity. Because each method showed strengths on particular uncertainty types, the findings suggest choosing the estimation method to match the kind of uncertainty present in the scene.
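
A gradient norm map of this kind can be approximated as follows. The sketch assumes a hypothetical toy renderer (`render`) in place of a NeRF or GS model and uses central finite differences instead of automatic differentiation, so it only illustrates the idea: perturb each pose parameter, measure how every pixel changes, and take the norm over the pose dimensions.

```python
import numpy as np


def render(pose, size=32):
    """Hypothetical toy renderer standing in for a NeRF/GS render.

    The image is a smooth vertical edge located at x = tx, plus a weak
    vertical shading term that depends on ty.
    """
    tx, ty = pose
    xs = np.linspace(-1.0, 1.0, size)
    xx, yy = np.meshgrid(xs, xs)
    return 1.0 / (1.0 + np.exp(-20.0 * (xx - tx))) + 0.05 * (yy - ty)


def pose_gradient_norm_map(render_fn, pose, eps=1e-4):
    """Per-pixel norm of the image gradient with respect to the pose."""
    pose = np.asarray(pose, dtype=float)
    grads = []
    for i in range(pose.size):
        step = np.zeros_like(pose)
        step[i] = eps
        # Central finite difference for pose parameter i.
        grads.append((render_fn(pose + step) - render_fn(pose - step)) / (2 * eps))
    return np.linalg.norm(np.stack(grads), axis=0)


sensitivity = pose_gradient_norm_map(render, [0.0, 0.0])
print(sensitivity[:, 16].mean() > sensitivity[:, 0].mean())
```

In this toy example the map peaks along the edge in the middle of the image and stays small in the flat regions, consistent with the observation that high-contrast areas react most strongly to pose errors.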

Conclusion

To sum up, the paper explored various sources of uncertainty in 3D reconstruction tasks using NeRF- and GS-based methods. It distinguished four types of uncertainty (aleatoric, epistemic, confounding outliers, and input uncertainty) and examined their interactions, particularly between aleatoric and epistemic uncertainties.

The authors proposed different experimental setups and conducted an empirical study of several methods equipped with common uncertainty estimation techniques. They conclude that future work should incorporate uncertainty estimation directly into the reconstruction pipeline to produce more robust, uncertainty-aware 3D models in diverse real-world scenarios.

Journal reference:

- Preliminary scientific report.

Klasson, M., et al. (2024). Sources of Uncertainty in 3D Scene Reconstruction. arXiv. DOI: 10.48550/arXiv.2409.06407, https://arxiv.org/abs/2409.06407v1