Discover how prompt engineering, retrieval-augmented generation, and AWS integration help Meta Llama 3 turn natural-language questions into accurate SQL queries.

AWS Machine Learning Blog: Best practices for prompt engineering with Meta Llama 3 for Text-to-SQL use cases. Image Credit: Tee11 / Shutterstock

In a recent article posted to the AWS Machine Learning Blog, engineers explored using Meta’s Llama 3, a publicly available foundation model (FM), to build Text-to-SQL applications on Amazon Web Services (AWS). The post showcases the effectiveness of a Retrieval Augmented Generation (RAG) approach that integrates Meta Llama 3 with ChromaDB, a vector database, and Amazon SageMaker JumpStart, a platform for deploying and testing pre-trained models.

Background

The rapid advances in generative artificial intelligence (AI) and large language models (LLMs) have produced powerful FMs such as Meta Llama 3, the generative pre-trained transformer (GPT) models, Claude 3 Haiku, and Gemini, which can perform tasks such as text generation, translation, and code writing. Trained on massive datasets, these models show impressive abilities in understanding and generating human-like text.

Meta Llama 3, the successor to Meta Llama 2, keeps the 70-billion-parameter capacity but achieves superior performance through enhanced training techniques and architectural refinements, such as a tokenizer with a 128,256-token vocabulary and grouped-query attention (GQA), which now extends to the 8-billion-parameter model. This approach reflects Meta's strategy of improving data use and training methodology, rather than simply increasing model size, to advance AI capabilities. The model is available in 8-billion- and 70-billion-parameter versions, each with base and instruct variants to suit different hardware and application requirements.
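To make the efficiency argument for GQA concrete, the sketch below computes which key/value (KV) head each query head shares and how large the per-token KV cache is. The shapes (32 layers, 32 query heads, 8 KV heads, head dimension 128, 16-bit values) are assumptions based on the publicly posted Llama 3 8B configuration and should be checked against the model card; the multi-head attention baseline is hypothetical and included only for comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionShape:
    num_layers: int
    num_query_heads: int
    num_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # assumes a 16-bit (fp16/bf16) KV cache

    def __post_init__(self) -> None:
        if self.num_query_heads % self.num_kv_heads != 0:
            raise ValueError("query heads must divide evenly into KV groups")


def kv_head_for_query_head(shape: AttentionShape, q_head: int) -> int:
    """In GQA, each run of consecutive query heads shares one key/value head."""
    group_size = shape.num_query_heads // shape.num_kv_heads
    return q_head // group_size


def kv_cache_bytes_per_token(shape: AttentionShape) -> int:
    """Bytes cached per token: keys and values (x2) for every layer and KV head."""
    return 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * shape.bytes_per_value


# Assumed Llama 3 8B shapes; verify against the published model configuration.
gqa = AttentionShape(num_layers=32, num_query_heads=32, num_kv_heads=8, head_dim=128)
# Hypothetical multi-head attention baseline: one KV head per query head.
mha = AttentionShape(num_layers=32, num_query_heads=32, num_kv_heads=32, head_dim=128)

print([kv_head_for_query_head(gqa, q) for q in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
print(kv_cache_bytes_per_token(gqa) // 1024, "KiB per token with GQA")  # 128
print(kv_cache_bytes_per_token(mha) // 1024, "KiB per token with MHA")  # 512
```

Under these assumed shapes, sharing each KV head across four query heads shrinks the cache from 512 KiB to 128 KiB per token, leaving more accelerator memory for longer contexts or larger batches.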

About the Product

The engineers developed a Text-to-SQL framework by combining techniques such as prompt engineering, RAG, and vector databases. They used Meta Llama 3 and ChromaDB on AWS to allow users to query databases with natural language, thus eliminating the need for structured query language (SQL) expertise.
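One concrete prompt-engineering practice for the instruct variants is to wrap each request in Meta Llama 3's chat template, which marks every turn with special header and end-of-turn tokens. The sketch below assembles such a prompt for a Text-to-SQL request. The system-prompt wording and the `build_text_to_sql_prompt` helper are hypothetical illustrations, not code from the AWS post.

```python
def build_llama3_prompt(system_prompt: str, user_message: str) -> str:
    """Format a single-turn conversation with the Llama 3 Instruct chat template."""
    return (
        "<|begin_of_text|>"
        "<|start_header_id|>system<|end_header_id|>\n\n"
        f"{system_prompt}<|eot_id|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_message}<|eot_id|>"
        # Leave the assistant header open so the model writes its answer next.
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


# Hypothetical instructions: restrict the model to the given schema and SQL-only output.
SYSTEM_PROMPT = (
    "You are an expert SQL developer. Write one SQLite SELECT query that answers "
    "the question, using only the tables and columns in the schema. "
    "Return only the SQL, without explanation."
)


def build_text_to_sql_prompt(schema: str, question: str) -> str:
    user_message = f"Schema:\n{schema}\n\nQuestion: {question}"
    return build_llama3_prompt(SYSTEM_PROMPT, user_message)


prompt = build_text_to_sql_prompt(
    "customers(customer_id, name, country)",
    "How many customers are in each country?",
)
print(prompt)
```

Hugging Face tokenizers can render the same template through `apply_chat_template`, which avoids hand-formatting mistakes when the conversation grows beyond one turn.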

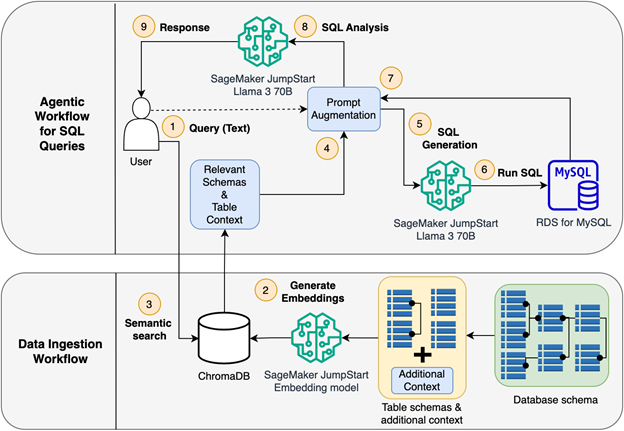

Solution architecture. Image Credit: AWS

A crucial aspect of this solution was applying prompt engineering best practices, which are key to getting the best performance from Meta Llama 3. The framework follows the RAG pattern: ChromaDB retrieves the parts of the database schema relevant to the user's question, and that context is added to the prompt before it is sent to Meta Llama 3 for SQL generation. The solution was implemented on Amazon SageMaker JumpStart, which offers access to pre-trained models and solutions.
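The retrieval step can be sketched without external services. In the version below, a bag-of-words cosine similarity stands in for ChromaDB's learned embeddings, and `SchemaIndex`, `augment_question`, and the table descriptions are hypothetical. With ChromaDB itself, the equivalent calls would be `collection.add(documents=..., ids=...)` to index schema descriptions and `collection.query(query_texts=[question], n_results=k)` to retrieve them.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SchemaIndex:
    """Minimal in-memory vector index over schema descriptions (stands in for a ChromaDB collection)."""

    def __init__(self) -> None:
        self._docs: dict[str, str] = {}

    def add(self, doc_id: str, text: str) -> None:
        self._docs[doc_id] = text

    def query(self, question: str, k: int = 2) -> list[str]:
        """Return the k descriptions most similar to the question."""
        q = _vectorize(question)
        ranked = sorted(
            self._docs.values(), key=lambda text: _cosine(q, _vectorize(text)), reverse=True
        )
        return ranked[:k]


def augment_question(index: SchemaIndex, question: str, k: int = 2) -> str:
    """Prepend the retrieved schema context to the user's question."""
    context = "\n".join(index.query(question, k))
    return f"Schema:\n{context}\n\nQuestion: {question}"


index = SchemaIndex()
index.add("orders", "orders(order_id, customer_id, order_date, total_amount): one row per customer order")
index.add("customers", "customers(customer_id, name, country): one row per customer")
index.add("products", "products(product_id, name, category, price): one row per product")

augmented = augment_question(index, "What is the total order amount per customer country?")
print(augmented)
```

Only the two relevant tables reach the prompt, which keeps it short and reduces the chance that the model references tables unrelated to the question.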

The post highlighted the framework's ability to generate accurate SQL queries from natural-language inputs. It also emphasized the solution's scalability and flexibility, noting that it can be integrated with various database management systems, including relational and NoSQL databases.
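For the relational case, the final step is to run the model's SQL against the target database. The sketch below uses Python's built-in `sqlite3` module as a stand-in for whichever engine is targeted; the tables, rows, and "generated" query are hypothetical. The textual read-only check is a minimal illustration rather than a complete safeguard; a read-only database role is the more robust control.

```python
import sqlite3


def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Run model-generated SQL only if it is a single SELECT statement.

    Minimal textual guard for illustration; it is not a full SQL parser.
    """
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        raise ValueError("multiple statements are not allowed")
    if not statement.lower().startswith("select"):
        raise ValueError("only SELECT queries are allowed")
    return conn.execute(statement).fetchall()


def make_demo_db() -> sqlite3.Connection:
    """Create a small in-memory database with hypothetical sample data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, country TEXT);
        CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total_amount REAL);
        INSERT INTO customers VALUES (1, 'Ana', 'PT'), (2, 'Ben', 'US'), (3, 'Chloe', 'US');
        INSERT INTO orders VALUES (10, 1, 20.0), (11, 2, 5.0), (12, 3, 7.5), (13, 2, 2.5);
        """
    )
    return conn


# Hypothetical model output for "What is the total order amount per country?"
generated_sql = """
SELECT c.country, SUM(o.total_amount) AS total
FROM orders AS o
JOIN customers AS c ON c.customer_id = o.customer_id
GROUP BY c.country
ORDER BY c.country;
"""

conn = make_demo_db()
rows = run_generated_sql(conn, generated_sql)
print(rows)  # [('PT', 20.0), ('US', 15.0)]
```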

Key Outcomes

The findings showed that the proposed solution generates accurate SQL queries from natural-language inputs. It handled complex queries involving multiple tables, filters, joins, and aggregations better than traditional Text-to-SQL systems. Using advanced prompt engineering techniques, together with ChromaDB to retrieve relevant schema information and augment user queries, significantly improved the accuracy and efficiency of the generated SQL. The solution also leveraged the scalability of LLMs, enabling it to adapt to new schemas and databases without extensive retraining.
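Because schema knowledge lives in the retrieved context rather than in the model weights, adapting to a new schema can be a matter of refreshing the indexed schema documents. The sketch below shows one way to do that, assuming a SQLite database whose `sqlite_master` catalog holds each table's `CREATE TABLE` statement (engines such as PostgreSQL and MySQL expose similar metadata through `information_schema`). The tables and the `schema_documents` helper are hypothetical.

```python
import sqlite3


def schema_documents(conn: sqlite3.Connection) -> dict[str, str]:
    """Read {table_name: CREATE TABLE statement} from SQLite's built-in catalog."""
    rows = conn.execute(
        "SELECT name, sql FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    return {name: sql for name, sql in rows}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
before = schema_documents(conn)

# Hypothetical schema change: a new table is added after deployment.
conn.execute("CREATE TABLE invoices (invoice_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
after = schema_documents(conn)

# Only new or changed documents need to be (re)indexed in the vector store;
# the language model's weights are untouched.
new_docs = {name: ddl for name, ddl in after.items() if before.get(name) != ddl}
print(sorted(before), sorted(after), sorted(new_docs))  # ['customers'] ['customers', 'invoices'] ['invoices']
```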

Applications

This solution has significant implications for democratizing data access and for empowering non-technical users to apply generative AI to data analysis. It can be applied in domains such as finance, healthcare, and e-commerce, where users can query databases in natural language to gain insights from their data. It can also be integrated into existing applications and workflows to extend their capabilities and improve the user experience. By focusing on prompt engineering best practices and removing the need for SQL expertise, the solution can help a wider range of users access and analyze data effectively.

Conclusion

In summary, integrating Meta Llama 3 and ChromaDB on AWS proved effective for building robust and efficient Text-to-SQL applications. This approach could change how technical users write SQL queries and let non-technical users with limited SQL knowledge generate queries quickly for data access and analysis. The post underscores the value of publicly available AI models for building practical solutions that can be further customized and optimized for specific business needs.

The authors see the next steps as using AWS services to build innovative and scalable AI solutions. For future work, they suggested further refining prompt engineering techniques, incorporating more detailed contextual information, and expanding integration with additional database management systems to improve the solution's accuracy and efficiency. They also encouraged readers to explore the provided code samples and customize the solution to fit their specific business needs.