In an article published in the journal Agronomy, researchers presented a method for non-destructive localization and weight estimation of banana bunches in commercial orchards using red-green-blue-depth (RGB-D) images. By combining the YOLO-Banana neural network, a you only look once (YOLO) detector, with depth information, the authors estimated the size and weight of banana bunches, achieving a coefficient of determination (R²) of 0.8947 and a root mean squared error (RMSE) of 1.4102 kilograms (kg) on validation data. The approach supports intelligent orchard management and banana-harvesting automation.

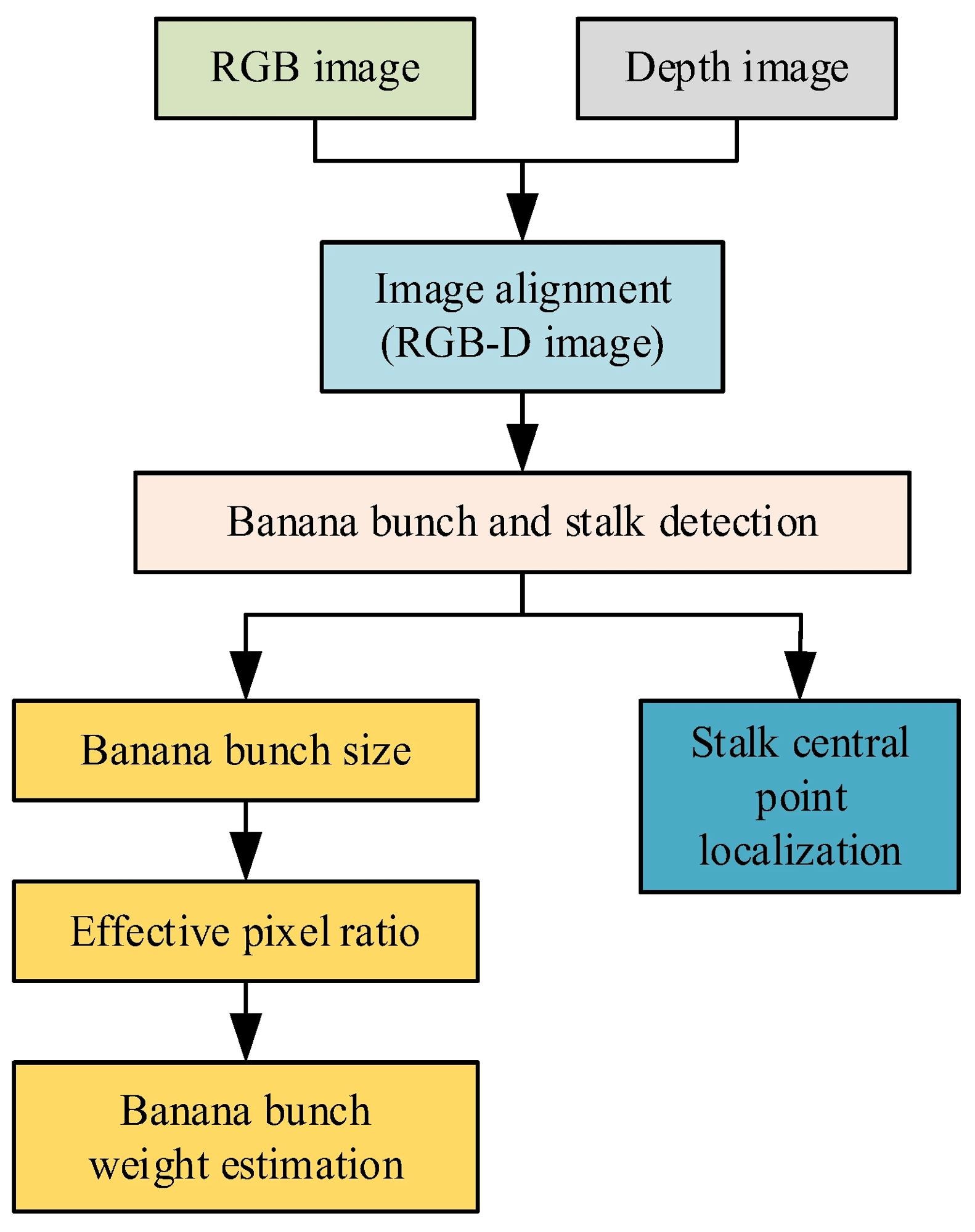

The flowchart of the algorithm. Image Credit: https://www.mdpi.com/2073-4395/14/6/1123

Background

The banana industry faces significant challenges, including labor shortages, rising labor costs, and worker safety concerns, which hinder effective orchard management. As precision agriculture technologies advance, integrating intelligent robots with fruit and vegetable operations has shown promise in improving task accuracy and resource efficiency. Despite these advancements, managing banana orchards remains difficult due to their unstructured environments, characterized by uneven lighting and random occlusion.

Researchers have used various visual sensors to overcome these challenges in unstructured orchard settings. Depth sensors, particularly those based on time of flight (TOF) and structured light, have been widely used in tasks such as fruit detection, localization, and evaluation of shape and volume. While TOF sensors are effective in low-light conditions, they have low resolution and high power consumption. In contrast, structured light technology, with its higher accuracy and lower power consumption, has gained attention in recent studies.

However, existing research on banana weight estimation primarily relies on manual data analysis, lacking automated solutions. Prior studies have focused on detecting banana stalks, inflorescence axes, and pseudo-stems, as well as correlating plant parameters with bunch weight. To address these gaps, this paper introduced a method for non-destructive localization and weight estimation of banana bunches using RGB-D images and structured light technology.

By employing the YOLO-Banana neural network, the study aimed to enhance intelligent orchard management, offering precise localization information for harvesting robots and reliable weight estimation models to assist farmers in making informed harvest decisions.

Sensor System Deployment and Algorithm Development

The sensor system deployed in this study was mounted on a remote-controlled vehicle within a banana orchard and used the Intel RealSense D435i depth camera to capture RGB-D images. This camera, chosen for its stability and affordability, provided both the color and the depth information needed to detect and localize banana bunches and stalks. The depth and RGB images were aligned through a four-step process to produce a single RGB-D image, improving the visual system's ability to distinguish banana structures.

Using the lightweight YOLO-Banana detection model, the algorithm identified banana bunches and stalks in the RGB-D image and produced bounding boxes for subsequent analysis. The central points of the banana stalks and bunches were then localized, which in turn allowed the size of each bunch to be estimated within the image plane.
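As a rough illustration of the localization step, the sketch below turns a bounding-box center and its aligned depth value into a 3D point in the camera frame. It assumes a standard pinhole camera model; the function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the example box are hypothetical placeholders rather than values from the paper.

```python
import numpy as np

def bbox_center(x_min, y_min, x_max, y_max):
    """Return the pixel coordinates (u, v) of a bounding-box center."""
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0

def deproject_pixel(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in mm to camera-frame XYZ in mm.

    Assumes an undistorted pinhole model with focal lengths (fx, fy)
    and principal point (cx, cy), all expressed in pixels.
    """
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return np.array([x, y, depth_mm], dtype=float)

# Hypothetical intrinsics and detection, for illustration only.
fx = fy = 600.0
cx, cy = 320.0, 240.0
u, v = bbox_center(300, 200, 340, 280)
point = deproject_pixel(u, v, 1000.0, fx, fy, cx, cy)
```

In practice the depth value would be read from the aligned depth image at (or around) the center pixel, and the intrinsics would come from the camera's calibration.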

The effective pixel ratio, defined as the ratio of banana pixels to the bounding box area, was used to refine the accuracy of the weight estimate.
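A minimal sketch of this ratio is shown below, assuming a binary mask that marks banana pixels is already available. How the paper separates banana pixels from background is not described here, so the mask and the function name `effective_pixel_ratio` are assumptions for illustration.

```python
import numpy as np

def effective_pixel_ratio(mask, bbox):
    """Fraction of a bounding box covered by banana pixels.

    mask: 2D boolean array, True where a pixel is assumed to be banana.
    bbox: (x_min, y_min, x_max, y_max) in pixels, max edges exclusive.
    """
    x_min, y_min, x_max, y_max = bbox
    crop = mask[y_min:y_max, x_min:x_max]
    if crop.size == 0:
        raise ValueError("bounding box has zero area")
    return float(np.count_nonzero(crop)) / crop.size

# Toy example: a 4x4 block of banana pixels inside an 8x8 box.
mask = np.zeros((10, 10), dtype=bool)
mask[2:6, 2:6] = True
ratio = effective_pixel_ratio(mask, (2, 2, 10, 10))
```

A ratio well below 1 indicates that much of the box is background, which is why scaling box-based area or volume by this factor can give a tighter size proxy.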

After analyzing and comparing various parameters, including surface areas and volumes, the researchers constructed a model for estimating banana bunch weight. The model was evaluated using the regression metrics R² and RMSE to confirm that its bunch weight estimates were reliable enough to inform orchard management decisions.

By combining depth sensing with a detection and regression pipeline, the researchers presented an end-to-end approach to non-destructive banana bunch weight estimation, laying the groundwork for precision agriculture practices in banana cultivation.

Experimental Results and Discussion

Experiments were conducted in two banana orchards under various lighting conditions to test localization and weight estimation. Out of 56 banana trees, data from 45 were used to develop the weight estimation model, while 11 trees were reserved for model validation. Localization accuracy of banana stalks was assessed using a laser rangefinder and a digital angle finder, with results showing an average localization error of 22.875 millimeters (mm), suitable for practical applications in banana labeling and harvesting.

For bunch size estimation, the detected sizes were compared to manual measurements. Height estimation achieved an R² of 0.946 and an RMSE of 28.91 mm, while width estimation had an R² of 0.9069 and an RMSE of 12.74 mm. Height predictions showed a positive bias, and width predictions a negative bias, likely due to structural irregularities and measurement inconsistencies.
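To make the size estimate concrete, the sketch below converts a bounding-box extent in pixels into a metric length using the depth at the bunch. It assumes a pinhole camera and a bunch roughly parallel to the image plane; the function name and all numbers are illustrative and are not taken from the paper.

```python
def pixel_extent_to_mm(extent_px, depth_mm, focal_px):
    """Convert an extent in pixels to millimeters at a given depth.

    Under a pinhole model, an object of size S at distance Z spans
    S * f / Z pixels, so S = extent_px * Z / f.
    """
    if focal_px <= 0:
        raise ValueError("focal length must be positive")
    return extent_px * depth_mm / focal_px

# Hypothetical detection: a box 300 px tall and 180 px wide at 1 m depth.
height_mm = pixel_extent_to_mm(300, 1000.0, 600.0)
width_mm = pixel_extent_to_mm(180, 1000.0, 600.0)
```

Because the conversion scales linearly with depth, any error in the depth reading carries over proportionally to the estimated height and width.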

Weight estimation involved regression analysis of univariate and bivariate parameters. The height parameter alone provided the highest R² of 0.7865. The bivariate model combining height and effective pixel volume improved accuracy, yielding an R² of 0.8143. Validation with the bivariate model resulted in an R² of 0.8947 and an RMSE of 1.4102 kg, outperforming the univariate height model.
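The sketch below shows one way such a model could be fitted and scored, assuming the bivariate model is linear in its two predictors (the exact functional form is not stated here). The helper names and the toy data are made up for illustration and do not reproduce the study's measurements.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares fit of y = b0 + b1*x1 + ... ; returns [b0, b1, ...]."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    A = np.column_stack([np.ones(len(X)), X])  # prepend intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict(coef, X):
    """Apply fitted coefficients to new predictor rows."""
    X = np.asarray(X, dtype=float)
    A = np.column_stack([np.ones(len(X)), X])
    return A @ coef

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as y."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Synthetic, noise-free toy data: columns stand in for height and
# effective pixel volume; y stands in for weight.
X_toy = np.array([[1, 2], [2, 1], [3, 5], [4, 3], [5, 4]], dtype=float)
y_toy = 1.0 + 2.0 * X_toy[:, 0] + 0.5 * X_toy[:, 1]
coef = fit_linear(X_toy, y_toy)
y_hat = predict(coef, X_toy)
```

Passing a single column to `fit_linear` gives the univariate case, so the same helpers can compare a height-only model against a two-predictor model on held-out trees.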

Conclusion

In conclusion, the researchers introduced a method for non-destructive localization and weight estimation of banana bunches using RGB-D images and the YOLO-Banana neural network. Tested in commercial orchards, the method achieved high accuracy, with an R² of 0.8947 and an RMSE of 1.4102 kg on validation data. By integrating depth cameras and machine vision techniques, the approach provided precise size and weight estimations, facilitating intelligent orchard management and automated harvesting. Future work will aim to enhance weight estimation accuracy and address challenges related to occlusion and varying illumination conditions.

Journal reference:

- Zhou, L., Yang, Z., Deng, F., Zhang, J., Xiao, Q., Fu, L., & Duan, J. (2024). Banana Bunch Weight Estimation and Stalk Central Point Localization in Banana Orchards Based on RGB-D Images. Agronomy, 14(6), 1123. https://doi.org/10.3390/agronomy14061123, https://www.mdpi.com/2073-4395/14/6/1123