In a paper published in the journal Nature, researchers presented a holographic augmented reality (AR) system that integrates digital content into the physical environment. By combining metasurface gratings, a compact waveguide geometry, and artificial intelligence (AI)-driven holography algorithms, they delivered full-color, 3D AR experiences within a compact device form factor.

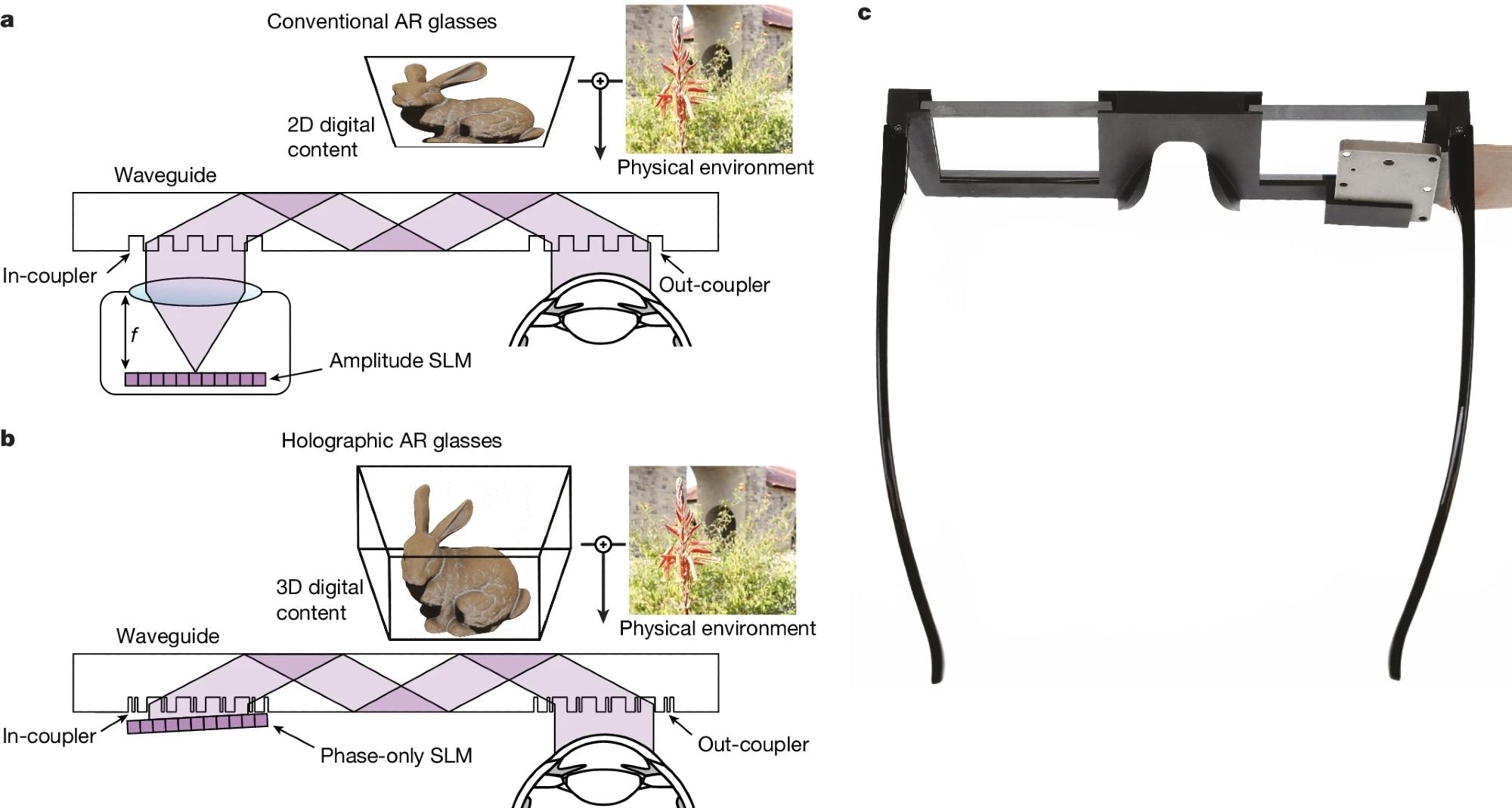

a, Conventional AR glasses use amplitude SLMs, such as organic light-emitting diodes or micro light-emitting diodes, which require a projector-based light engine that is typically at least as thick as the focal length f of the projection lens. b, The design of our holographic AR glasses uses a phase-only SLM that can be mounted very close to the in-coupling grating, thereby minimizing the device form factor. Additionally, unlike conventional AR glasses, our holographic design can provide full 3D depth cues for virtual content, as illustrated by the bunny (adapted from the Stanford Computer Graphics Laboratory). c, Compact 3D-printed prototype illustrating the components of our holographic AR glasses in a wearable form factor. Image Credit: https://www.nature.com/articles/s41586-024-07386-0

Their image formation model merges a physically accurate waveguide model with AI components calibrated using camera feedback, which improves the visual quality of the displayed holograms. This co-design of a nanophotonic metasurface waveguide and AI-driven holographic algorithms represents a significant milestone in developing wearable AR devices.

Background

Previous research explored optical designs for AR systems, seeking to enhance user experiences across various domains. Waveguide image combiners showed promise for compact AR glasses but faced challenges like optical bulk and limited 3D content display. Efforts to adapt digital holography for AR also encountered hurdles in achieving compactness and high-quality 3D imagery.

Metasurface Fabrication

The fabrication process for the metasurface begins with coating the substrate with a 30-nm-thick chromium (Cr) film using e-beam evaporation. Subsequently, e-beam lithography is employed to create metasurface patterns with specific dimensions for the in-coupler and out-coupler.

This technique involves spin-coating a positive-tone e-beam resist layer, post-baking the layer, and spin-coating a charge dissipation layer. The patterns are transferred onto the high-index glass substrate through multiple dry etching steps, utilizing various equipment and gas mixtures. Scanning electron microscope images of the fabricated all-glass metasurface couplers are obtained for analysis.

Metasurface sample images are captured using a scanning electron microscope. Representative samples are coated with a thin 3 nm film of gold/palladium to reduce charging. Images are acquired with specific parameters, including an accelerating voltage of 10 kV.

Two convolutional neural networks (CNNs), CNN for inverse computation (CNNIC) and CNN for target image prediction (CNNtarget), are employed, utilizing a modified UNet architecture. These networks efficiently learn the residual aberrations in a physical optical system. The input wavefront is augmented, and both CNNs use instance normalization, rectified linear unit activation, and skip connections. CNNIC outputs two-channel representations of real and imaginary values, while CNNtarget directly outputs a single-channel amplitude.

The neural network-parameterized wave propagation model is trained using a dataset comprising pairs of spatial light modulator (SLM) phase patterns and corresponding intensity images captured by a camera at the output of the holographic display prototype. The dataset encompasses images at different virtual distances through the waveguide, enabling training over multiple intensity planes.

Training is conducted on specialized hardware with specific parameters, emphasizing the importance of diversity in phase patterns for effective model training. After training, the learned waveguide propagation model synthesizes holograms for new 2D and 3D scenes, enabling operation without additional camera feedback.
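To make the idea of synthesizing a hologram from a propagation model more concrete, the following is a minimal toy sketch, not the authors' implementation. It assumes a one-dimensional, eight-pixel phase-only SLM and uses a plain discrete Fourier transform as a stand-in for the learned waveguide model; the function names (`propagate`, `loss`, `gradient`, `synthesize`), the target pattern, and the finite-difference optimizer are all hypothetical choices made for illustration. The point it shows is that once a forward model is fixed, the SLM phases can be optimized against a target image with no camera in the loop.

```python
import cmath
import math
import random


def propagate(phases):
    """Stand-in forward model (assumption): unitary 1D DFT of a phase-only field.

    In the article's system, the learned waveguide propagation model would
    take the place of this function.
    """
    n = len(phases)
    field = [cmath.exp(1j * p) for p in phases]
    return [
        sum(field[k] * cmath.exp(-2j * math.pi * j * k / n) for k in range(n))
        / math.sqrt(n)
        for j in range(n)
    ]


def loss(phases, target_amp):
    """Squared error between the simulated and target amplitudes."""
    return sum((abs(u) - a) ** 2 for u, a in zip(propagate(phases), target_amp))


def gradient(phases, target_amp, eps=1e-6):
    """Central finite-difference gradient of the loss w.r.t. each SLM phase."""
    grad = []
    for i in range(len(phases)):
        up = phases[:i] + [phases[i] + eps] + phases[i + 1:]
        down = phases[:i] + [phases[i] - eps] + phases[i + 1:]
        grad.append((loss(up, target_amp) - loss(down, target_amp)) / (2 * eps))
    return grad


def synthesize(target_amp, steps=200, lr=0.5, seed=0):
    """Gradient descent on the phases, halving the step until the loss drops."""
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in target_amp]
    history = [loss(phases, target_amp)]
    for _ in range(steps):
        grad = gradient(phases, target_amp)
        step = lr
        for _ in range(20):
            trial = [p - step * g for p, g in zip(phases, grad)]
            trial_loss = loss(trial, target_amp)
            if trial_loss < history[-1]:
                phases = trial
                history.append(trial_loss)
                break
            step /= 2.0
        else:
            break  # no improving step found; stop early
    return phases, history


# Hypothetical target: two bright pixels, scaled so its energy matches the
# energy of an 8-pixel phase-only field (sum of squared amplitudes = 8).
target = [0.0, 0.0, 2.0, 0.0, 0.0, 2.0, 0.0, 0.0]
slm_phases, loss_history = synthesize(target)
print(f"loss: {loss_history[0]:.4f} -> {loss_history[-1]:.4f}")
```

A real system would use automatic differentiation and a 2D, multi-plane model rather than finite differences; the toy keeps only the structure of the optimization.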

AR Prototype Configuration

The prototype AR display incorporates a metasurface waveguide and a HOLOEYE LETO-3 phase-only SLM, boasting a resolution of 1080 × 1920 pixels with a pitch of 6.4 μm. Illumination is provided by a FISBA READYBeam fiber-coupled module featuring red, green, and blue laser diodes with 638, 521, and 445 nm wavelengths, respectively.
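The quoted SLM and laser specifications allow a quick back-of-the-envelope check of two quantities the article does not state: the active area of the SLM and the largest angle at which a pixelated SLM can steer light in air. The sketch below assumes the standard grating relation sin(θ) = λ / (2p) for the finest grating a pixel pitch p can display (a period of two pixels); the function names are illustrative, and the result describes the bare SLM, not the field of view of the full waveguide system.

```python
import math

# Values quoted in the article
PIXEL_PITCH_M = 6.4e-6
RESOLUTION = (1080, 1920)
WAVELENGTHS_NM = {"red": 638, "green": 521, "blue": 445}


def active_area_mm(resolution, pitch_m):
    """Side lengths of the SLM active area in millimeters."""
    return tuple(n * pitch_m * 1e3 for n in resolution)


def max_diffraction_half_angle_deg(wavelength_m, pitch_m):
    """Half-angle (in air) of the finest displayable grating, period = 2 pixels."""
    return math.degrees(math.asin(wavelength_m / (2.0 * pitch_m)))


height_mm, width_mm = active_area_mm(RESOLUTION, PIXEL_PITCH_M)
print(f"active area: {width_mm:.3f} mm x {height_mm:.3f} mm")

half_angles = {
    color: max_diffraction_half_angle_deg(nm * 1e-9, PIXEL_PITCH_M)
    for color, nm in WAVELENGTHS_NM.items()
}
for color, angle in half_angles.items():
    print(f"{color}: +/- {angle:.2f} degrees")
```

Under these assumptions the active area is about 12.3 mm by 6.9 mm, and the half-angles come out at roughly 2.9, 2.3, and 2.0 degrees for red, green, and blue, which illustrates why the steering range of a pixelated SLM depends on wavelength.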

The SLM and illumination are slightly tilted, mitigating the interference of unwanted light coupled into the waveguide before reaching the SLM to ensure optimal clarity of digital content. Calibration data for the AI-based wave propagation model and the experimental results are captured with a FLIR Grasshopper3 12.3 MP color USB3 sensor through a Canon 35 mm lens, with lens focus controlled by an Arduino.

The evaluation involves qualitative and quantitative assessments of 2D image quality, comparing various propagation models. Notably, the AI-based wave propagation model consistently outperforms the baselines by a significant margin in peak signal-to-noise ratio (PSNR). The full-color 3D results demonstrate high image quality in both the in-focus and out-of-focus regions of the digital content.
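For readers unfamiliar with the metric, peak signal-to-noise ratio compares a captured image with its target on a logarithmic scale: PSNR = 10 · log10(peak² / MSE), where MSE is the mean squared error between the two images. The sketch below is a generic implementation of that formula, assuming images are given as nested lists of values in the range 0 to 1; the pixel values are made up for illustration and are not data from the paper.

```python
import math


def psnr(reference, captured, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    ref = [v for row in reference for v in row]
    cap = [v for row in captured for v in row]
    if not ref or len(ref) != len(cap):
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - c) ** 2 for r, c in zip(ref, cap)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Illustrative 2x2 images (hypothetical values, not measurements)
target_image = [[0.0, 1.0], [1.0, 0.0]]
close_capture = [[0.1, 0.9], [0.9, 0.1]]  # every pixel off by 0.1
poor_capture = [[0.3, 0.7], [0.7, 0.3]]   # every pixel off by 0.3

psnr_close = psnr(target_image, close_capture)
psnr_poor = psnr(target_image, poor_capture)
print(f"close: {psnr_close:.2f} dB, poor: {psnr_poor:.2f} dB")
```

A higher value means the capture is closer to the target; in this toy case an error of 0.1 per pixel gives about 20 dB, and tripling the error lowers the score by roughly 9.5 dB.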

This accurate portrayal of 3D defocus behavior has potential implications for mitigating discomfort associated with the vergence–accommodation conflict in users. Additionally, experimental full-color 3D results depict the optical combination of physical scenes with digitally overlaid content captured using different camera focus settings. The proposed approach exhibits superior performance compared to baseline models.

Conclusion

To sum up, the prototype AR display integrated advanced components, including a metasurface waveguide, a phase-only SLM, and a fiber-coupled laser module, to achieve impressive results. Through qualitative and quantitative evaluations, it was evident that the AI-based wave propagation model significantly enhanced image quality compared to traditional methods.

The high-quality full-color 3D results demonstrated the system's capabilities and hinted at potential user benefits, particularly in mitigating discomfort associated with visual conflicts. Overall, the experimental outcomes underscored the effectiveness of the proposed approach in advancing AR display technology.