In a recent study published in the journal Scientific Reports, researchers introduced several novel metrics designed to measure the prediction difficulty of individual cases within a dataset using neural networks (NN). These metrics were developed to effectively capture the diverse levels of difficulty present across datasets, thereby offering a fresh perspective for comprehending data complexity.

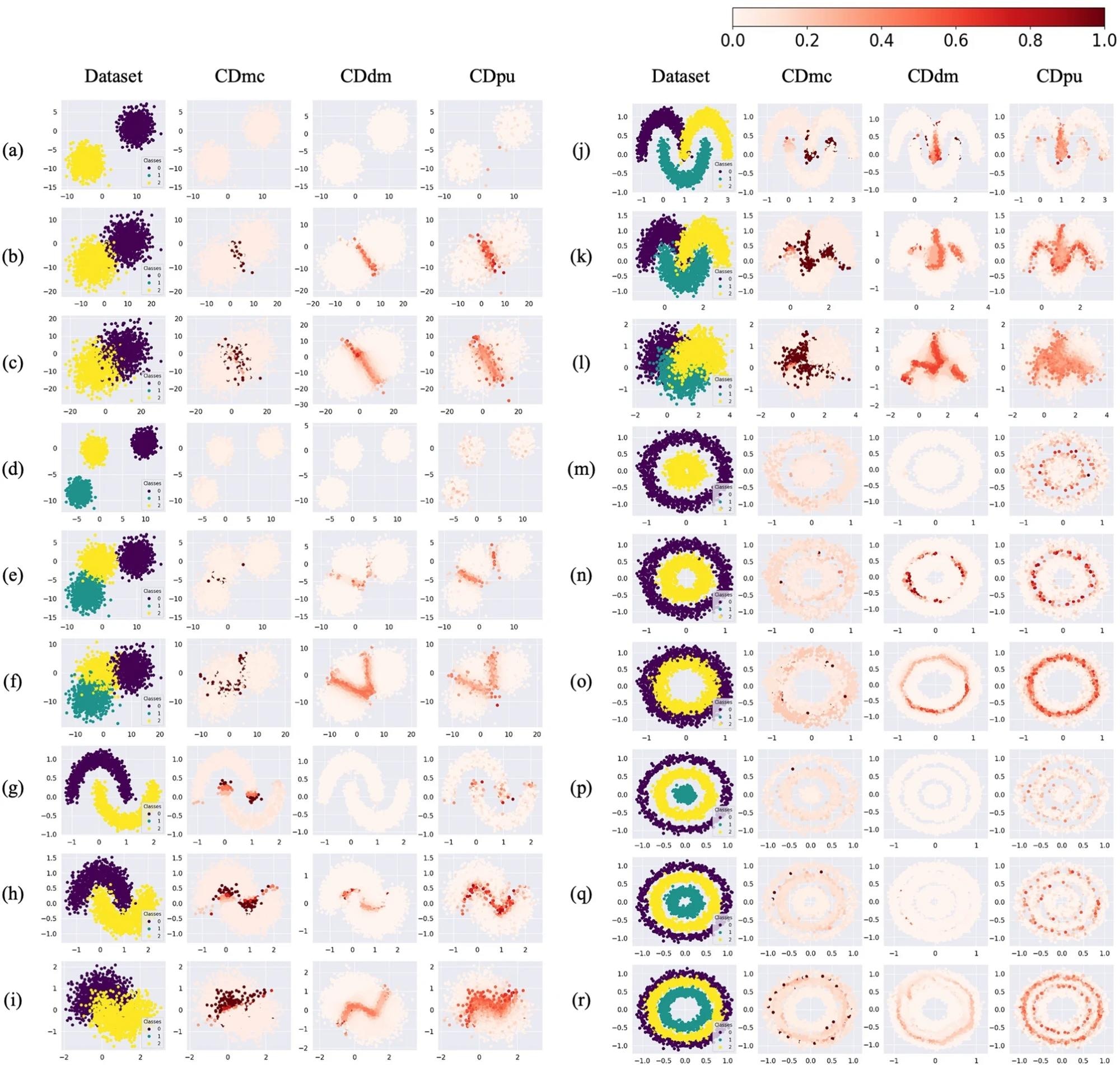

Case difficulty for the simulated datasets. The letters for the rows refer to various simulated datasets, while the columns represent the proposed metrics used to calculate the case difficulty (CDmc: Case difficulty model complexity, CDdm: Case difficulty double models, CDpu: Case difficulty predictive uncertainty). CDdm results were calculated using four times more samples than CDmc and CDpu because more training data were required to train two models. Case difficulty ranges from 0 to 1, with an easy case being colored light red and a hard case being colored dark red. Image Credit: https://www.nature.com/articles/s41598-024-61284-z

Background

Machine learning (ML) is a branch of artificial intelligence that enables computers to learn from data and make predictions or decisions. It is widely applied in fields such as healthcare, telecommunications, and marketing to solve real-world problems. However, applying ML to data remains challenging because many factors influence the performance and reliability of ML models.

One such factor is the prediction difficulty of individual cases within a dataset, that is, how likely an ML model is to misclassify a given case. Prediction difficulty is an inherent property of each case and varies with characteristics of the data such as class overlap, noise, or class imbalance. Understanding the prediction difficulty of individual cases can therefore help researchers analyze data complexity, identify sources of error, and improve ML models.

Previous studies have proposed several metrics to measure the prediction difficulty of individual cases. However, these metrics often come with limitations regarding their applicability to diverse dataset types. For example, some metrics require specific data preconditions, such as the presence of categorical or continuous features, the independence of features, or the imbalance of classes. Additionally, some metrics rely on simplistic ML models like decision trees or naive Bayes, which might struggle to capture the complex patterns present in the data.

About the Research

In this article, the authors proposed three novel metrics for quantifying the prediction difficulty of individual cases within a dataset, specifically for use with fully connected feedforward NNs. NNs are a powerful type of ML model capable of approximating complex, non-linear relationships within data. The new metrics leverage different concepts to assess prediction difficulty:

- Model complexity: This approach assumes that cases with higher difficulty require more complex models for accurate prediction. It calculates the number of neurons an NN needs within its hidden layers to correctly predict a specific case.

- Double model: This method operates under the assumption that the difficulty of a case can be predicted by another model. It utilizes a pair of NNs, one for making the actual prediction and another for estimating the probability of successful prediction for a given case.

- Predictive uncertainty: This metric defines difficulty based on the variability in the model's predictions for a particular case. It calculates the mean and standard deviation of the predicted probability distribution to assess the level of uncertainty and, consequently, the prediction difficulty.
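
The ideas behind the model complexity and predictive uncertainty metrics can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the scaling to the 0 to 1 range, and the use of `1 - mean` as a difficulty score are assumptions made for illustration only. The inputs are assumed to come from networks trained elsewhere (for example, one NN per hidden-layer size, or repeated stochastic predictions for one case).

```python
from statistics import mean, pstdev


def model_complexity_difficulty(correct_by_neurons, max_neurons):
    """Hypothetical score: how many hidden neurons were needed to get a case right.

    correct_by_neurons maps a hidden-neuron count to True/False, i.e. whether
    an NN of that size predicted the case correctly (assumed precomputed).
    Returns 0.0 if a 1-neuron network suffices and 1.0 if no tested size
    succeeds. This scaling is an assumption, not the paper's formula.
    """
    solved_sizes = [n for n, ok in correct_by_neurons.items() if ok]
    if not solved_sizes:
        return 1.0
    return (min(solved_sizes) - 1) / max_neurons


def predictive_uncertainty_summary(true_class_probs):
    """Summarize repeated predicted probabilities of the true class for one case.

    Returns the mean and (population) standard deviation described in the
    text, plus an assumed difficulty score of 1 - mean.
    """
    m = mean(true_class_probs)
    s = pstdev(true_class_probs)
    return {"mean": m, "std": s, "difficulty": 1.0 - m}


# Made-up inputs for two hypothetical cases.
easy = model_complexity_difficulty({1: True, 2: True, 4: True}, max_neurons=4)
hard = model_complexity_difficulty({1: False, 2: False, 4: True}, max_neurons=4)
summary = predictive_uncertainty_summary([0.9, 0.8, 1.0])
print(easy, hard, summary)
```

Under these assumptions, a case that only the largest tested network predicts correctly scores close to 1, while a case with a high and stable predicted probability for its true class scores close to 0.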

Moreover, the paper evaluated the proposed metrics using both simulated datasets and real-world datasets drawn from domains including healthcare, telecommunications, and marketing. This evaluation involved:

- Visualization: The researchers visualized the calculated difficulty values for individual cases within the datasets.

- Comparison with existing metrics: They compared their proposed metrics with 15 existing metrics from the literature to understand their relative effectiveness.

- Correlation analysis: Finally, they investigated potential relationships between their novel metrics and the existing ones by calculating correlation coefficients.
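
A correlation analysis of this kind can be sketched as follows. The article does not state which correlation coefficient the authors used, so the choice of Spearman rank correlation here is an assumption, and the difficulty values are made up purely for illustration.

```python
from math import sqrt


def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; assumes both inputs have non-zero variance."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical per-case difficulty scores from a new and an existing metric.
new_metric = [0.10, 0.40, 0.90, 0.35]
existing_metric = [0.20, 0.30, 0.80, 0.50]
print(spearman(new_metric, existing_metric))
```

A coefficient near 1 would indicate that the two metrics order cases by difficulty in much the same way, while a value near 0 would suggest they capture different aspects of difficulty.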

Research Findings

The results showed that the proposed metrics effectively captured the varying levels of difficulty across diverse datasets and provided a distinct perspective on data complexity. They differentiated the difficulty of individual cases consistently and robustly, whereas the performance of the existing metrics varied with the data conditions.

The metrics were particularly effective when there were no overlapping areas in the dataset or when the dataset contained both categorical and continuous features. Furthermore, they correlated highly with some existing metrics, suggesting that they measure case difficulty similarly to established metrics. The novel metrics hold promise for applications across fields that use ML. For example, researchers can use them to analyze data complexity and pinpoint sources of errors or outliers within datasets.

Additionally, they can aid in enhancing ML models by assisting in selecting appropriate model complexities, fine-tuning hyperparameters, or implementing instance weighting or sampling techniques. Moreover, these metrics can facilitate the communication of uncertainty and reliability associated with ML predictions to users or stakeholders, thereby furnishing more comprehensive explanations or feedback.

Conclusion

In summary, the novel metrics proved effective in measuring the prediction difficulty of individual cases within datasets. They captured the varying levels of difficulty across diverse datasets, offering a fresh perspective for understanding data complexity. Their potential applications include data analysis, model improvement, and uncertainty communication across the many fields that use ML.

Moving forward, the researchers suggested that their metrics can be further extended using other ML models, and used to develop new prediction performance evaluation metrics that are aware of the case difficulty. They expected that their metrics could provide a new tool for ML researchers in many fields and provide more detailed case-by-case explanations to users.