In an article recently published in the journal npj Digital Medicine, researchers proposed an artificial intelligence (AI) and video approach to substantially improve the accuracy of habitual fall risk assessment while ensuring privacy.

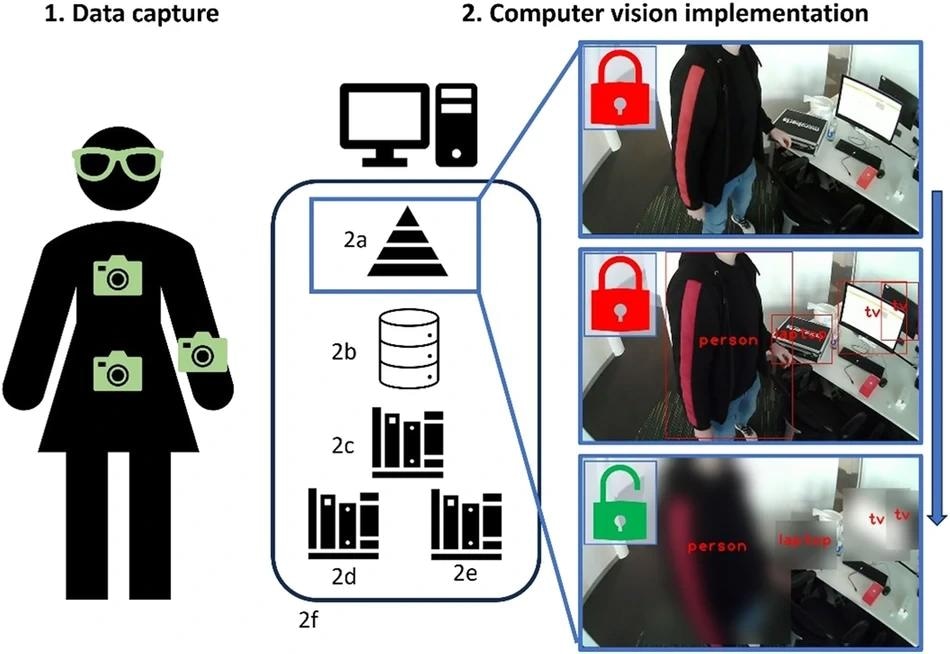

Typical wear locations for wearables include the chest or waist (1). However, alternative locations with more routinely worn wearables could include the wrist (watch) or the face/head via glasses (1). A computer vision (CV) model implements YOLOv8 (2a), drawing upon a well-characterized and comprehensive ground-truth training dataset or database (2b) and the necessary libraries (2c, 2d, and 2e) within a suitable analytical environment (2f). The images on the right show how the raw data (top) are anonymized so that only the anonymized image is available as output: red locks indicate data that are analyzed and then deleted, and the green lock indicates the remaining image available for viewing. (A wearable IMU to quantify gait is worn on the lower back; not shown.) https://www.nature.com/articles/s41746-024-01050-7

Background

Assessing free-living mobility through instrumented gait analysis with inertial measurement units (IMUs) can support personalized fall prevention by better targeting fall reduction strategies. For instance, during everyday walking, IMUs can quantify abnormal temporal and spatial gait characteristics that indicate a higher risk of falls, such as gait variability or asymmetry.
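To make "variability" and "asymmetry" concrete, the sketch below computes two temporal gait measures from a list of step times, such as those an IMU-based step-detection algorithm might produce. It assumes variability is the standard deviation of step times and asymmetry is the absolute difference between mean left and mean right step times; these are common definitions in the gait literature but not necessarily the ones used in this study. The function name, the alternating left/right ordering, and the example step times are all hypothetical.

```python
from statistics import mean, stdev


def step_time_metrics(step_times_s):
    """Return (variability, asymmetry) in seconds for a sequence of step times.

    Illustrative assumptions, not the study's method:
    - step_times_s alternates left, right, left, right, ...
    - variability = sample standard deviation of all step times
    - asymmetry = |mean(left step times) - mean(right step times)|
    """
    if len(step_times_s) < 4:
        raise ValueError("need at least two steps per side")
    left = step_times_s[0::2]   # even positions: assumed left steps
    right = step_times_s[1::2]  # odd positions: assumed right steps
    variability = stdev(step_times_s)
    asymmetry = abs(mean(left) - mean(right))
    return variability, asymmetry


# Hypothetical step times (seconds) from one short walking bout
times = [0.52, 0.58, 0.51, 0.59, 0.53, 0.57]
var_s, asym_s = step_time_metrics(times)
print(f"variability={var_s:.3f} s, asymmetry={asym_s:.3f} s")
```

Numbers like these say that a walk was irregular or lopsided, but not why, which is the gap in contextual information the next paragraph describes.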

Although wearable IMUs are already used to quantify gait characteristics associated with increased fall risk, a major limitation is the lack of absolute contextual information, such as environmental or other extrinsic information, that could clarify the IMU data. Without such information, fall risk may be misjudged and gait abnormalities misinterpreted.

Importance of privacy-preserving AI

Recent studies have investigated using wearable video cameras to augment IMU-based gait assessment, adding context that clarifies abnormal IMU data and so gives a more comprehensive picture of an individual's fall risk. However, using wearable cameras to capture real-world video in community and home settings remains unpopular because of privacy and ethical concerns, which have hindered their deployment.

Another major challenge is that captured video must be viewed and carefully labeled or categorized, and those labels must be synchronized with the IMU data, which substantially lengthens the time needed for a comprehensive risk assessment. An approach is therefore needed that provides rich contextual information about the environment for a complete patient assessment while routinely anonymizing sensitive information in the video to maintain privacy.
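Part of that labeling burden is aligning the two data streams in time. As a minimal sketch, assuming the camera records at a constant frame rate and the offset between the IMU clock and the first video frame is known (both assumptions, as is the hypothetical function name), an IMU event timestamp, such as an abnormal step, can be mapped to the nearest video frame:

```python
def imu_time_to_frame(t_imu_s, video_start_imu_s, fps, n_frames):
    """Map an IMU timestamp (seconds) to the nearest video frame index.

    Assumes a constant frame rate and that video_start_imu_s is the IMU-clock
    time at which video frame 0 was captured. Returns None when the timestamp
    falls outside the recorded video.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    t_video = t_imu_s - video_start_imu_s  # seconds since the first frame
    frame = round(t_video * fps)           # nearest frame index
    if frame < 0 or frame >= n_frames:
        return None
    return frame


# Hypothetical example: 30 fps video that started 2.0 s after the IMU recording
abnormal_steps_s = [5.51, 12.0, 40.2]
frames = [imu_time_to_frame(t, 2.0, 30, n_frames=900) for t in abnormal_steps_s]
```

This ignores clock drift between the two devices, which a real pipeline would also need to correct.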

The proposed AI-based approach

In this study, researchers used extrinsic video data from wearable glasses to better inform intrinsic digital biomarkers, namely IMU-derived gait characteristics. Off-the-shelf AI resources and methods were used to build contemporary deep learning models that obfuscate (shade or blur) sensitive information while preserving the contextual information needed to interpret habitual patient data. In short, AI was used to maintain privacy in video data so that abnormal gait indicative of higher fall risk could be better understood.

This perspective proposed that routine use of wearable cameras in digital medicine can be realized through AI-based computer vision models, in which AI first interprets the raw video to obfuscate sensitive objects and maintain privacy. This removes the need for humans to view raw video to understand context. Such models can be built routinely with contemporary resources, such as current computer vision approaches.

The You Only Look Once (YOLO) series of algorithms is widely used to detect objects in images and videos for scene understanding. YOLOv8, whose backbone derives from the Darknet-53 architecture and which follows a similar approach to earlier YOLO implementations, has improved classification and detection modules that yield higher accuracy. Its deep convolutional neural network can be trained on large, openly available datasets such as the Microsoft Common Objects in Context (COCO) dataset.

A "sensitive object" set, whose members must be obfuscated whenever they are detected during habitual video capture, was created from the preselected classes TV, laptop, book, and person. When the YOLOv8 model detected any of these objects in a video frame, a Gaussian blur filter from the OpenCV library was applied for anonymization. Specifically, the YOLOv8 model output the detected object's bounding box, and the Gaussian blur was then applied to that region of the frame. Finally, all processed frames were combined into a new anonymized video.
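The detect-then-blur loop described above can be sketched in plain Python so that it runs without dependencies. This is an illustration under stated assumptions, not the authors' code: detections are assumed to arrive as hypothetical `(class_name, confidence, (x1, y1, x2, y2))` tuples standing in for YOLOv8 output, frames are small grayscale lists of lists, a hand-written separable Gaussian blur stands in for OpenCV, and the lowercase class spellings, confidence threshold, kernel radius, and sigma are arbitrary choices.

```python
import math

# Sensitive classes from the study; the lowercase COCO-style spellings are an assumption.
SENSITIVE_CLASSES = {"tv", "laptop", "book", "person"}


def gaussian_kernel_1d(radius, sigma):
    """Normalized 1-D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_region(frame, box, radius=2, sigma=1.0):
    """Gaussian-blur the pixels inside box=(x1, y1, x2, y2) of a grayscale frame, in place.

    Stand-in for an OpenCV call: two separable 1-D passes (rows, then columns),
    with sample positions clamped to the box edges. Pixels outside the box are untouched.
    """
    height, width = len(frame), len(frame[0])
    x1, y1, x2, y2 = box
    x1, x2 = max(0, int(x1)), min(width, int(x2))
    y1, y2 = max(0, int(y1)), min(height, int(y2))
    if x1 >= x2 or y1 >= y2:
        return  # empty or fully out-of-frame box
    k = gaussian_kernel_1d(radius, sigma)
    region = [row[x1:x2] for row in frame[y1:y2]]
    h, w = y2 - y1, x2 - x1

    def clamp(i, n):
        return min(max(i, 0), n - 1)

    # Horizontal pass, then a vertical pass over the horizontal result.
    tmp = [[sum(k[j] * region[y][clamp(x + j - radius, w)] for j in range(len(k)))
            for x in range(w)] for y in range(h)]
    out = [[sum(k[j] * tmp[clamp(y + j - radius, h)][x] for j in range(len(k)))
            for x in range(w)] for y in range(h)]
    for y in range(h):
        frame[y1 + y][x1:x2] = [round(v) for v in out[y]]


def anonymize_frame(frame, detections, min_conf=0.25):
    """Blur every detection whose class is sensitive and whose confidence passes the threshold."""
    for name, conf, box in detections:
        if name in SENSITIVE_CLASSES and conf >= min_conf:
            blur_region(frame, box)
    return frame


def anonymize_video(frames, detections_per_frame):
    """Anonymize a copy of each frame and return the new sequence of frames."""
    return [anonymize_frame([row[:] for row in frame], dets)
            for frame, dets in zip(frames, detections_per_frame)]


# Tiny usage example: a 10x10 checkerboard "frame" with one person box and one chair box.
frame = [[255 if (x + y) % 2 else 0 for x in range(10)] for y in range(10)]
detections = [("person", 0.9, (2, 2, 6, 6)), ("chair", 0.9, (6, 6, 10, 10))]
anonymized = anonymize_video([frame], [detections])[0]
```

Only the person box is smoothed; the chair box and all other pixels are left as they were. With NumPy arrays and OpenCV, the body of `blur_region` would typically reduce to `frame[y1:y2, x1:x2] = cv2.GaussianBlur(frame[y1:y2, x1:x2], (k, k), 0)` with an odd kernel size `k`, and frames would be read and written with `cv2.VideoCapture` and `cv2.VideoWriter`.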

Evaluation and findings

Researchers recruited 10 participants (nine men and one woman) to assess the proposed model in a pilot study. The Northumbria University Research Ethics Committee granted ethical approval for the experiment. The model was implemented in a Python 3.8 environment on a desktop with an NVIDIA RTX 3070 Ti graphics card, 24 GB of RAM, and an AMD Ryzen 7 3800X CPU.

A sample of anonymized videos was reviewed manually to assess the model's effectiveness. The model effectively obscured sensitive items such as screens, letters, and faces. Compared with the nano, small, large, and extra-large YOLOv8 variants, the medium-sized model (YOLOv8m) offered the best balance between accuracy and speed for real-time video processing, making it well suited to anonymizing videos in practical applications.

Overall, manual review of the anonymized videos from the 10 participants showed that YOLOv8m detected and blurred sensitive objects with 88% accuracy. The pilot results also indicated that environmental factors can substantially affect gait characteristics, so clinicians should consider these factors when assessing fall risk, using video to supplement numerical IMU gait characteristics with environmental context.

To summarize, the findings of this study demonstrated the feasibility of using the proposed AI and video approach to comprehensively understand an individual's free-living fall risk without compromising privacy.

Journal reference:

- Moore, J., McMeekin, P., Parkes, T., Walker, R., Morris, R., Stuart, S., Hetherington, V., Godfrey, A. (2024). Contextualizing remote fall risk: Video data capture and implementing ethical AI. npj Digital Medicine, 7(1), 1-7. https://doi.org/10.1038/s41746-024-01050-7, https://www.nature.com/articles/s41746-024-01050-7