In an article published in the journal Scientific Reports, researchers from the USA explored the intersection of technology and psychology by investigating social perceptions of robots, focusing on how beliefs about robot minds shape those perceptions.

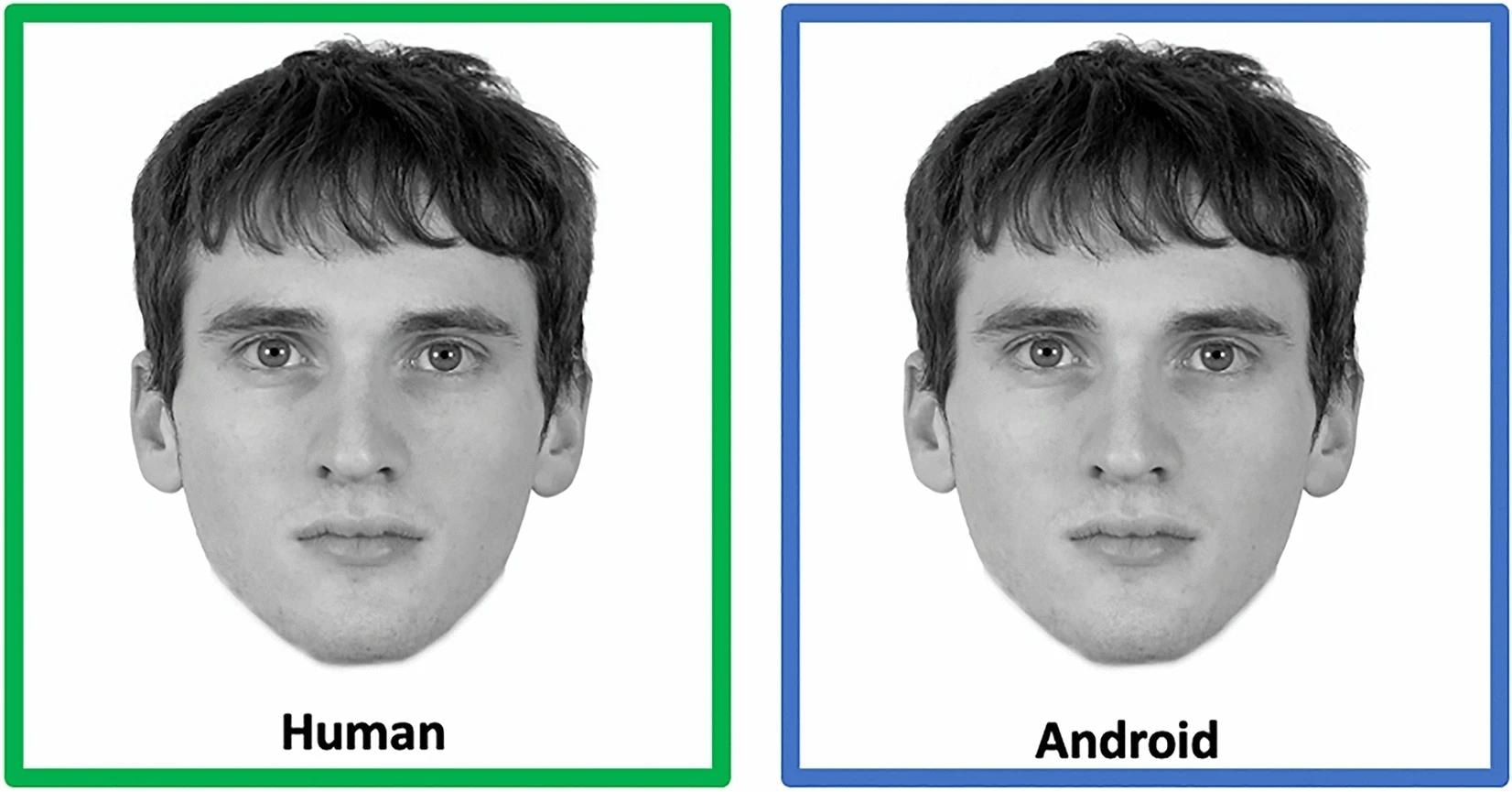

Example face stimuli. For each participant, faces were presented in the human or android condition in a randomized fashion. Each face was presented together with a label and a colored frame indicating the experimental condition: faces in the human condition were presented with the label “human” in a green frame; faces in the android condition were presented with the label “android” in a blue frame. https://www.nature.com/articles/s41598-024-53187-w

They examined whether labeling human faces as "android" disrupted typical face processing in an inversion task. The results suggest that even highly realistic robots may not be fully perceived as social beings because of pre-existing beliefs about their mechanical nature.

Background

Artificial intelligence (AI) refers to the simulation of human intelligence in machines programmed to think and learn like humans. These systems are designed to perform tasks that typically require human intelligence, such as understanding natural language, recognizing patterns, and making decisions. AI uses algorithms and data to analyze information, draw conclusions, and solve problems, with applications ranging from virtual assistants and autonomous vehicles to medical diagnosis tools.

Robots are increasingly present in fields such as healthcare, manufacturing, and entertainment. As these machines become more integrated into society, it is crucial to understand how humans perceive and interact with them. Human-robot interaction, the field that studies the dynamics between humans and robots, aims to improve the usability and acceptance of these machines. An important aspect of this interaction is the extent to which humans perceive robots as social entities, a perception shaped by their beliefs about machine minds.

Technological advances such as deepfakes and android robots, which can emulate human appearance and behavior, raise questions about the believability of perceptual information and the ethical implications of their use. Such technologies can produce hyper-photorealistic faces that make it difficult to distinguish real faces from synthetic ones, with implications for both robotics and the broader AI field.

About the Research

In the present paper, the authors investigated whether higher-order beliefs about faces, such as whether they belong to android robots or to actual people, modulate how much individuals engage face-typical processing for social stimuli. They presented participants with faces labeled either "human" or "android" and measured recognition performance using an inversion task, a well-established method in cognitive psychology for studying face processing. The task relies on the finding that people recognize upright faces much better than inverted ones, a phenomenon known as the face inversion effect.
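
As a rough illustration, assuming recognition performance is scored as accuracy $A$ (this article does not say which measure the paper used), the face inversion effect for a set of faces can be written as a simple difference:

```latex
\mathrm{IE} = A_{\text{upright}} - A_{\text{inverted}}
```

A positive value means upright faces were recognized better than inverted ones; a smaller value indicates a weaker upright advantage.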

Participants were recruited through Amazon's Mechanical Turk platform, and 233 individuals completed the study. Faces were drawn from the Chicago Face Database, providing a diverse set of stimuli. The study used a within-subjects design: each participant saw both human-labeled and android-labeled faces, with faces assigned to the two conditions at random.
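
The randomized within-subjects assignment described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the even split between conditions, the function name `assign_conditions`, and the face IDs are hypothetical; only the labels and frame colors come from the figure caption.

```python
import random
from collections import Counter

# Label and frame color for each condition, as described in the figure
# caption: "human" faces in a green frame, "android" faces in a blue frame.
CONDITIONS = {"human": "green", "android": "blue"}


def assign_conditions(face_ids, seed=None):
    """Randomly split one participant's faces between the two label conditions.

    Assumption: an even split (with an odd count, "android" gets the extra
    face). The study's actual proportions are not described in this article.
    Returns a dict mapping face ID -> (label, frame color).
    """
    rng = random.Random(seed)
    shuffled = list(face_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    assignment = {}
    for i, face in enumerate(shuffled):
        label = "human" if i < half else "android"
        assignment[face] = (label, CONDITIONS[label])
    return assignment


# Usage: hypothetical face IDs; each participant gets an independent shuffle.
faces = [f"face_{n:02d}" for n in range(1, 11)]
participant_1 = assign_conditions(faces, seed=1)
print(Counter(label for label, _ in participant_1.values()))
```

Because every participant sees faces in both conditions, any given face appears as "human" for some participants and "android" for others, so differences between conditions cannot be attributed to the faces themselves.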

The researchers examined the intersection of face perception, artificial intelligence, and social cognition to understand how humans process hyper-realistic synthetic faces. Because inversion is thought to disrupt holistic processing, they hypothesized that if faces believed to be androids are processed less holistically than faces believed to be human, the face inversion effect should be smaller for android-labeled faces.
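
The hypothesis amounts to comparing the inversion effect across the two label conditions. The sketch below assumes trial-level data scored as correct or incorrect and measures the effect as upright accuracy minus inverted accuracy; the function names and toy numbers are hypothetical and are not the study's data or analysis, which may use a different measure and statistical model.

```python
from collections import defaultdict


def inversion_effects(trials):
    """Face inversion effect per label condition.

    `trials` is an iterable of (label, orientation, correct) tuples, where
    label is "human" or "android", orientation is "upright" or "inverted",
    and correct is a bool. Every label/orientation cell needs at least one
    trial. Effect = accuracy(upright) - accuracy(inverted).
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for label, orientation, correct in trials:
        counts[(label, orientation)] += 1
        hits[(label, orientation)] += int(correct)

    def accuracy(label, orientation):
        return hits[(label, orientation)] / counts[(label, orientation)]

    return {
        label: accuracy(label, "upright") - accuracy(label, "inverted")
        for label in ("human", "android")
    }


def attenuation(trials):
    """Human minus android effect; > 0 means a smaller effect for android-labeled faces."""
    effects = inversion_effects(trials)
    return effects["human"] - effects["android"]


def make_cell(label, orientation, n_correct, n_total):
    """Build toy trials for one cell (hypothetical numbers, not study data)."""
    return [(label, orientation, i < n_correct) for i in range(n_total)]


toy_trials = (
    make_cell("human", "upright", 9, 10)
    + make_cell("human", "inverted", 6, 10)
    + make_cell("android", "upright", 8, 10)
    + make_cell("android", "inverted", 7, 10)
)
print(inversion_effects(toy_trials), attenuation(toy_trials))
```

In this toy example the upright advantage is larger for human-labeled faces, so `attenuation` is positive, which is the pattern the hypothesis predicts.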

Research Findings

The results showed that participants recognized faces labeled "human" better than those labeled "android." The data also replicated the classic face inversion effect, with better recognition of upright faces than inverted ones. However, this effect was significantly attenuated for android-labeled faces, indicating that beliefs about a face's nature influenced face-typical processing. This suggests that even highly human-like robots may not be fully perceived as social stimuli because of pre-existing beliefs about their mechanistic nature, which in turn affects how they are processed cognitively.

The results have significant implications for various domains, particularly in human-robot interaction and social cognition. Understanding how beliefs shape face perception could inform the design and development of synthetic agents, such as androids and robots, for more effective communication and interaction with humans. By considering the cognitive processes involved in perceiving synthetic faces, designers could create more socially engaging and intuitive interfaces.

Understanding how people's beliefs about robot minds influence social perception could help researchers and engineers build robots that are more socially acceptable and engaging. This knowledge could be particularly valuable in healthcare, where robots are increasingly used for patient care and companionship: robots that are perceived as socially capable could improve the well-being and quality of life of people who need assistance.

Conclusion

The paper concluded that the perception of robots and synthetic faces depends not only on their physical features but also, significantly, on the beliefs of the observer. This has implications for the social integration of humanoid robots and AI systems.

The authors highlighted the importance of considering people's beliefs about the minds of robots when designing and developing these machines. They also showed that understanding face perception requires accounting for both top-down beliefs and bottom-up visual features.

The researchers acknowledged limitations and challenges and suggested that future research could further explore the impact of different manipulations of perceived humanness on face processing and investigate the temporal dynamics of top-down effects on perceptual processing.