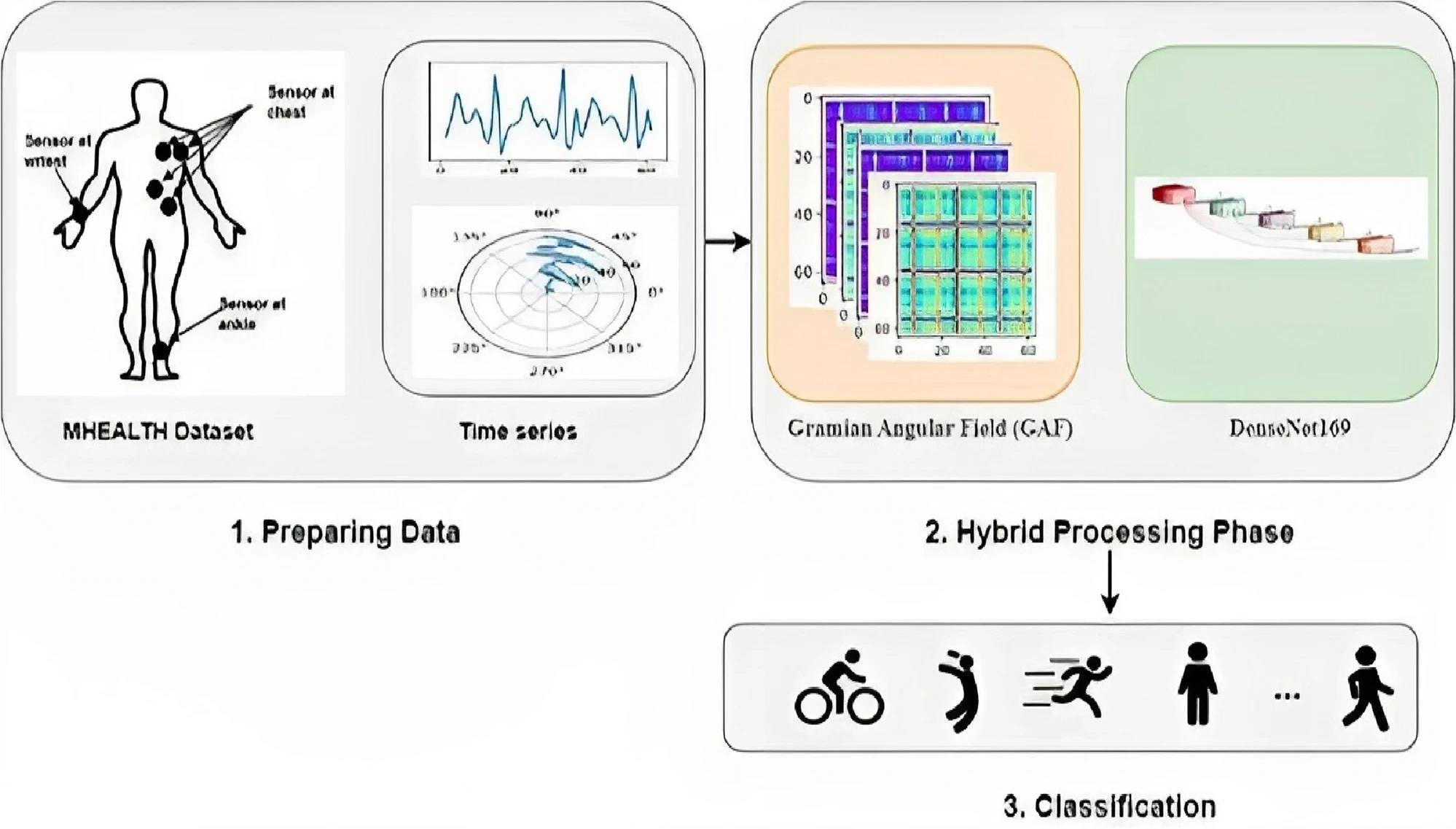

In an article published in the journal Scientific Reports, researchers from Egypt developed a new system for human activity recognition (HAR) using wireless body area sensor networks (WBANs) and deep learning. Their tool employs a feature extraction technique to transform the sensor data into two-dimensional images that can be processed by a convolutional neural network (CNN).

The proposed hybrid HAR system in WBAN architecture based on GAF algorithm and DenseNet169 model. Study: https://www.nature.com/articles/s41598-024-53069-1

Background

WBANs are networks of wearable or implantable sensors that can monitor and communicate various physiological and physical parameters of the human body, such as heart rate, blood pressure, temperature, motion, and posture. They can be used for remote patient monitoring, health tracking, physical activity analysis, and gesture recognition. However, they pose challenges, including limited battery life and constraints on data transmission and data processing.

Deep learning uses artificial neural networks to perform complex tasks such as image recognition and natural language processing. In HAR, deep learning automatically extracts relevant features from raw sensor data without the need for manual feature engineering, although it has drawbacks such as high computational cost and large memory requirements.

About the Research

In the present paper, the authors designed an innovative HAR system that integrates WBAN and deep learning technologies. HAR aims to identify and analyze different human activities using sensor data. It has applications in healthcare, such as health status and physical activity monitoring, detecting abnormal behaviors or falls, and providing feedback and guidance for rehabilitation. The proposed approach consisted of the following four steps:

- Data collection: The system utilized accelerometers, gyroscopes, and magnetometers attached to the chest, right wrist, and left ankle of the user. These sensors measured acceleration, angular velocity, and magnetic field along the x, y, and z axes. Additionally, an electrocardiogram (ECG) sensor was attached to the chest to measure the electrical activity of the heart. The sensor data was collected at a sampling rate of 50 Hz and transmitted wirelessly to a central unit.

- Data preprocessing: The sensor data underwent several preprocessing steps, including artifact removal, median filtering, normalization, and segmentation. Artifact removal eliminated noise and outliers, such as spikes, drifts, and baseline wander. Median filtering reduced high-frequency noise while preserving signal edges. Normalization scaled the data to a specific range, such as [-1, 1] or [0, 1], ensuring comparability and consistency. Segmentation divided the data into fixed-length windows, such as 5 s, with a certain overlap, such as 50%, capturing the temporal dynamics of the activities.

- Feature extraction: The system employed a feature extraction method that transformed sensor data into two-dimensional images suitable for a CNN model. This method was based on the Gramian angular field (GAF) algorithm, which rescaled each one-dimensional time series and encoded its values as angles in a polar coordinate system. It then computed the cosine of the sum of each pair of angles to generate the Gramian angular summation field (GASF) image and the sine of the difference of each pair of angles to generate the Gramian angular difference field (GADF) image. These images captured patterns and correlations of the time series data while preserving temporal information and interpretability.

- Activity recognition: The system employed a pre-trained DenseNet for this task. The DenseNet CNN model is a deep neural network architecture comprising densely connected layers. Each layer receives feature maps from all preceding layers and passes its feature maps to all subsequent layers. This dense connectivity pattern enhances feature propagation, reuse, and gradient flow while reducing parameters and overfitting risks. The DenseNet model, with 169 layers, was pre-trained on the ImageNet dataset and fine-tuned on sensor data images. The output comprised a vector of probabilities for each of the 12 activities recognized by the system.
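
The segmentation and GAF steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names, the synthetic sine-wave signal, and the choice of min-max scaling to [-1, 1] are assumptions, while the 50 Hz rate, 5 s window, and 50% overlap are the example values mentioned in the text.

```python
import numpy as np


def sliding_windows(signal, window_size, overlap=0.5):
    """Split a 1-D signal into fixed-length windows with fractional overlap."""
    step = max(1, int(window_size * (1 - overlap)))
    starts = range(0, len(signal) - window_size + 1, step)
    return np.array([signal[s:s + window_size] for s in starts])


def rescale(window):
    """Min-max scale a window to [-1, 1] so that arccos is defined."""
    lo, hi = window.min(), window.max()
    if hi == lo:  # constant window: map everything to 0
        return np.zeros_like(window, dtype=float)
    return 2 * (window - lo) / (hi - lo) - 1


def gramian_angular_fields(window):
    """Return the (GASF, GADF) images for one 1-D window."""
    x = np.clip(rescale(np.asarray(window, dtype=float)), -1, 1)
    phi = np.arccos(x)  # angular encoding of each sample
    gasf = np.cos(phi[:, None] + phi[None, :])  # cosine of pairwise sums
    gadf = np.sin(phi[:, None] - phi[None, :])  # sine of pairwise differences
    return gasf, gadf


# Synthetic stand-in for one sensor channel (assumption, not MHEALTH data).
fs = 50                                  # sampling rate in Hz
t = np.arange(1000) / fs                 # 20 s of samples
signal = np.sin(2 * np.pi * 1.5 * t)

windows = sliding_windows(signal, window_size=5 * fs, overlap=0.5)
gasf, gadf = gramian_angular_fields(windows[0])
print(windows.shape, gasf.shape, gadf.shape)
```

Each window yields one square image per channel whose side equals the window length. A real pipeline would typically resize these images and stack channels to match the input shape expected by the CNN; those details are not specified here.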

The study evaluated the performance of the presented system using a benchmark dataset known as MHEALTH. This dataset contained data from 10 subjects performing 12 activities, such as walking, jogging, cycling, lying down, sitting, standing, and knee bending. It included data collected from accelerometers, gyroscopes, magnetometers, and ECG sensors accompanied by activity labels. The authors divided the dataset into training and testing sets. Specifically, they allocated data from eight subjects to the training set and all data from the remaining two subjects to the testing set.
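
A subject-wise split like the one described above keeps every window from a given person on one side of the split, so the test set contains only unseen subjects. The sketch below illustrates the idea on placeholder records; the record layout, the `split_by_subject` helper, and the choice of subjects 9 and 10 as the held-out pair are assumptions, since the article does not say which two subjects were used for testing.

```python
def split_by_subject(samples, test_subjects):
    """Partition samples so that no subject appears in both sets."""
    train, test = [], []
    for sample in samples:
        target = test if sample["subject"] in test_subjects else train
        target.append(sample)
    return train, test


# Placeholder records: 10 subjects with 3 windows each (not real MHEALTH data).
samples = [
    {"subject": subject, "window": index, "label": index % 12}
    for subject in range(1, 11)
    for index in range(3)
]

# Hypothetical choice of held-out subjects.
train_set, test_set = split_by_subject(samples, test_subjects={9, 10})

train_ids = {s["subject"] for s in train_set}
test_ids = {s["subject"] for s in test_set}
print(len(train_set), len(test_set), sorted(test_ids))
```

Splitting by subject rather than by random window avoids leaking a person's movement patterns from training into testing, which matters even more when overlapping windows are used.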

Research Findings

The outcomes showed that the newly proposed method achieved an accuracy of 97.83%, an F1-score of 97.83%, and a Matthews correlation coefficient (MCC) of 97.64% on the test set. These metrics underscored the system's capability to accurately and consistently recognize various activities performed by subjects. Moreover, the approach surpassed several existing methods in HAR, including decision trees, random forests, support vector machines, CNNs, recurrent neural networks, and autoencoders.
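
For readers unfamiliar with these metrics, the sketch below computes accuracy, a macro-averaged F1-score, and the multiclass MCC from scratch. The toy labels are made up for illustration and are unrelated to the study's results. The macro averaging is an assumption, as the article does not state how the F1-score was averaged across the 12 classes.

```python
import math
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def multiclass_mcc(y_true, y_pred):
    """Multiclass Matthews correlation coefficient, in [-1, 1]."""
    s = len(y_true)                                   # total samples
    c = sum(t == p for t, p in zip(y_true, y_pred))   # correct predictions
    t_k = Counter(y_true)                             # true count per class
    p_k = Counter(y_pred)                             # predicted count per class
    cov_tp = c * s - sum(t_k[k] * p_k[k] for k in t_k)
    cov_tt = s * s - sum(v * v for v in t_k.values())
    cov_pp = s * s - sum(v * v for v in p_k.values())
    denom = math.sqrt(cov_tt * cov_pp)
    return cov_tp / denom if denom else 0.0


# Toy labels for three classes (illustrative only).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]

acc = accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
mcc = multiclass_mcc(y_true, y_pred)
print(f"accuracy={acc:.4f} macro_f1={f1:.4f} mcc={mcc:.4f}")
```

MCC ranges from -1 to 1, so a figure reported as a percentage, as in the article, corresponds to this value multiplied by 100.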

Furthermore, the authors demonstrated the robustness and generalization ability of their technique across different sensor modalities and subjects. The system holds promise for diverse applications in healthcare, sports, and elderly care. For example, it can monitor the health status and physical activity of patients with chronic illnesses like diabetes, hypertension, or cardiovascular diseases, offering feedback and guidance for lifestyle improvements and complication prevention.

Additionally, it can track the performance and movements of athletes such as runners, cyclists, or swimmers, providing tailored coaching and training programs to enhance their skills and fitness levels. It can also aid older adults by detecting falls, abnormal behaviors, or cognitive decline and promptly alerting caregivers or emergency services.

Conclusion

In summary, the novel HAR system efficiently identifies various human activities, including walking, jogging, cycling, and lying down, utilizing data from sensors attached to different body parts. It effectively uses the capabilities of WBAN and deep learning.

The researchers acknowledged limitations and suggested exploring alternative feature extraction methods, such as recurrence plots or Markov transition fields, to further enhance the system's efficiency. Additionally, they recommended extending the system's capabilities to recognize more intricate activities, such as gestures, emotions, or intentions, by integrating additional sensors like cameras, microphones, or electroencephalograms (EEGs).