In an article published in the journal Scientific Reports, researchers from Russia developed a novel methodology for predicting wildfire spread using multimodal data from remote sensing satellites and weather sources. Their technique uses deep neural networks to address the challenges of wildfire prediction, which stem from the complexity and uncertainty of fire dynamics and from the diversity and variability of environmental factors.

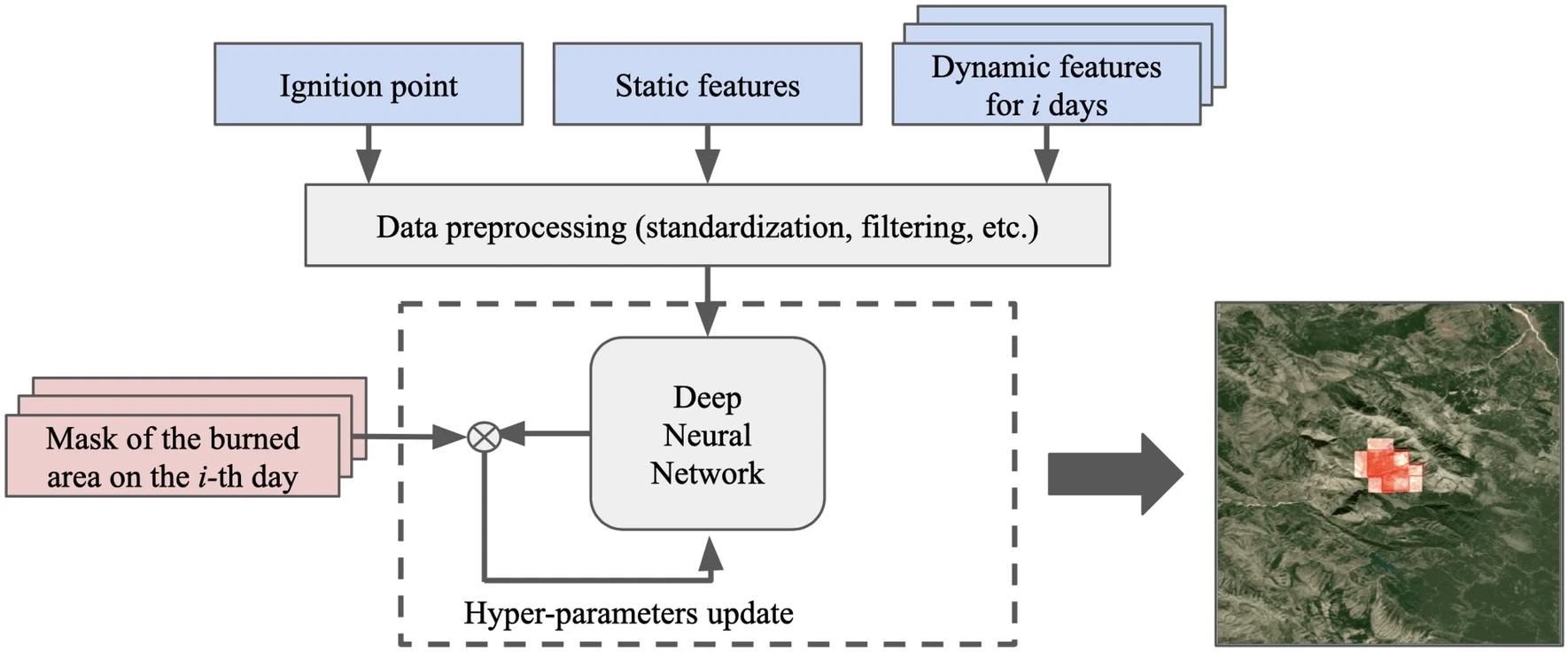

Study workflow. The map was generated with the QGIS v.3.14 software, fire spreading example, and RGB satellite composite from Google Maps layers available in QGIS. Study: https://www.nature.com/articles/s41598-024-52821-x

Background

Wildfires are a major environmental and socio-economic challenge that pose significant risks to human health, ecosystems, and infrastructure. Predicting wildfire spread behavior is crucial for effective fire management and mitigation. However, this task is complex and depends on various factors, such as weather conditions, land cover, topography, and fuel characteristics.

Remote sensing data, such as satellite imagery and weather measurements, can provide valuable information for monitoring and modeling wildfire dynamics. However, processing and analyzing such data requires advanced machine learning algorithms that can capture the spatial and temporal patterns of fire behavior and account for the uncertainty and variability of environmental factors.

Previous studies have used different types of neural networks, such as convolutional, recurrent, and attention-based models, to predict fire spread based on simulated or historical data. However, most of these studies focus on short-term predictions (up to a few hours) and small-scale areas (up to a few kilometers).

About the Research

In the present paper, the authors designed a machine learning methodology for predicting fire spread that can cover vast territories and consider the most important environmental parameters that influence the direction and speed of fire spread. The forecasting horizon was five days, and the prediction step was one day. The model estimated the fire spread on a grid with a resolution of 650 m. The forecast area was set to 21 x 21 km, and the ignition point was located in the center of this area.
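The stated geometry implies a fairly small raster. The article gives only the window size (21 km) and cell size (650 m). Rounding 21,000 m / 650 m ≈ 32.3 down to a 32 × 32 grid is our assumption rather than a figure from the paper. The sketch below works through that arithmetic, the central ignition cell, and the daily lead times within the five-day horizon:

```python
# Values stated in the article; the rounding to a whole number of cells is an assumption.
AREA_KM = 21.0
CELL_M = 650.0
HORIZON_DAYS = 5
STEP_DAYS = 1


def grid_size(area_km: float, cell_m: float) -> int:
    """Number of cells along one side of the square forecast window."""
    return round(area_km * 1000.0 / cell_m)


def ignition_cell(n: int) -> tuple:
    """Row/column index of the central cell (for even n, one of the four middle cells)."""
    return n // 2, n // 2


def forecast_days(horizon: int, step: int) -> list:
    """Lead times, in days, at which a fire mask is predicted."""
    return list(range(step, horizon + 1, step))


n = grid_size(AREA_KM, CELL_M)
print(n, ignition_cell(n), forecast_days(HORIZON_DAYS, STEP_DAYS))
```

Under these assumptions, the model outputs one 32 × 32 burned/not-burned map per day for days 1 through 5. That is about 1,000 cells covering roughly 20.8 × 20.8 km.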

The study used a dataset containing meteorological measurements and land-cover characteristics for several regions of the Russian Federation where wildfires are frequent and severe. It included verified fire data from multiple years. A training dataset was created by deriving additional variables from satellite monitoring data.
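The article does not list the exact input channels or derived variables. As an illustration only, the sketch below shows one common way to turn multimodal rasters into a single input tensor for a convolutional network. It uses a current fire mask, weather grids, wind, and categorical land cover. The channel set, the function names, and the wind decomposition are hypothetical choices, not the authors' pipeline. Wind is split into eastward/northward components so that directions such as 359° and 1° end up numerically close:

```python
import numpy as np


def wind_to_uv(speed, direction_deg):
    """Convert wind speed and meteorological direction (degrees the wind blows FROM,
    clockwise from north) into eastward (u) and northward (v) components."""
    theta = np.deg2rad(direction_deg)
    u = -speed * np.sin(theta)
    v = -speed * np.cos(theta)
    return u, v


def stack_inputs(fire_mask, temperature, humidity, wind_speed, wind_dir, land_cover, n_classes):
    """Stack per-cell rasters of shape (H, W) into a (C, H, W) channel tensor.

    Hypothetical channel layout: [fire, temperature, humidity, u, v, land-cover one-hot...].
    land_cover holds integer class ids in [0, n_classes).
    """
    u, v = wind_to_uv(wind_speed, wind_dir)
    one_hot = np.eye(n_classes, dtype=np.float32)[land_cover]  # (H, W, K)
    one_hot = np.moveaxis(one_hot, -1, 0)                      # (K, H, W)
    continuous = np.stack([fire_mask, temperature, humidity, u, v]).astype(np.float32)
    return np.concatenate([continuous, one_hot], axis=0)


# Example: a 4 x 4 patch with a steady 5 m/s northerly wind and 3 land-cover classes.
h = w = 4
zeros = np.zeros((h, w))
land = np.random.default_rng(0).integers(0, 3, size=(h, w))
x = stack_inputs(zeros, zeros, zeros, np.full((h, w), 5.0), zeros, land, n_classes=3)
print(x.shape)  # 5 continuous channels + 3 one-hot channels
```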

The researchers proposed an algorithm based on the multi-scale attention network (MA-Net) architecture. MA-Net is a U-Net-like convolutional neural network for image segmentation that incorporates attention mechanisms to capture spatial and channel dependencies. The model was trained and validated on the dataset, and its performance was evaluated using several metrics: the F1-score (the harmonic mean of precision and recall), intersection over union (IoU), mean absolute error (MAE), and mean absolute percentage error (MAPE).
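To show what "spatial and channel dependencies" means in practice, here is a minimal NumPy sketch of the two kinds of attention. The first is self-attention over grid positions, where every cell is re-expressed as a weighted mix of all other cells. The second is a squeeze-and-excitation style channel gate that rescales each input channel. These are generic textbook forms written for illustration. They are not the exact layers used in MA-Net or in the paper, and the weight shapes and names are assumptions:

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def position_attention(x, w_q, w_k, w_v, gamma):
    """Self-attention over spatial positions of a (C, H, W) feature map.

    w_q, w_k: (C', C) query/key projections; w_v: (C, C) value projection;
    gamma: scale of the attention output added back to the input (residual).
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)           # (C, N) with N = H * W positions
    q = w_q @ flat                       # (C', N)
    k = w_k @ flat                       # (C', N)
    v = w_v @ flat                       # (C, N)
    attn = softmax(q.T @ k, axis=-1)     # (N, N): row i = weights of position i over all j
    out = v @ attn.T                     # (C, N): out[:, i] = sum_j attn[i, j] * v[:, j]
    return gamma * out.reshape(c, h, w) + x


def channel_gate(x, w1, w2):
    """Squeeze-and-excitation style channel attention on a (C, H, W) map.

    w1: (C // r, C) and w2: (C, C // r) for some reduction ratio r.
    """
    squeeze = x.mean(axis=(1, 2))                     # (C,) global average pool
    hidden = np.maximum(w1 @ squeeze, 0.0)            # ReLU
    weights = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))    # sigmoid, one weight per channel
    return x * weights[:, None, None]
```

In a trained network, the projection matrices are learned. The position attention can, for example, let a cell downwind of the fire front draw on features from cells far upwind.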

Research Findings

The authors evaluated the model's performance using a test set of 947 fires obtained from Krasnoyarsk Territory, Irkutsk Region, and the Republic of Sakha. They compared the designed approach with several baseline architectures and loss functions and highlighted that the MA-Net model with a combination of Dice and Focal loss achieved the best results.
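Dice loss rewards overlap between the predicted and true burned areas. This matters because burned cells are usually a small fraction of the grid. Focal loss down-weights cells the model already classifies confidently. Below is a minimal NumPy sketch of the standard binary forms and a weighted sum of the two. The relative weights and the focal parameters (`gamma=2.0`, `alpha=0.25`) are common defaults assumed here, since the article does not report the values the authors used:

```python
import numpy as np


def dice_loss(prob, target, eps=1e-6):
    """Soft Dice loss: 1 - 2|P ∩ T| / (|P| + |T|), computed on probabilities."""
    inter = np.sum(prob * target)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(prob) + np.sum(target) + eps))


def focal_loss(prob, target, gamma=2.0, alpha=0.25, eps=1e-7):
    """Binary focal loss averaged over cells; target is a 0/1 burned mask."""
    p = np.clip(prob, eps, 1.0 - eps)
    p_t = np.where(target == 1, p, 1.0 - p)              # probability of the true class
    alpha_t = np.where(target == 1, alpha, 1.0 - alpha)  # class balancing weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))


def combined_loss(prob, target, w_dice=1.0, w_focal=1.0):
    """Weighted sum of Dice and focal loss (weights are assumptions)."""
    return w_dice * dice_loss(prob, target) + w_focal * focal_loss(prob, target)
```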

The outcomes showed that the MA-Net model can achieve high accuracy in predicting fire spread for up to 5 days. The model achieved an F1-score of 0.68 for the first day, 0.67 for the third day, and 0.65 for the fifth day. The MAE and MAPE for the burned area were also low, indicating that the model could estimate the size and shape of the fire perimeter well. The model could also estimate the direction and the speed of the fire front, although this was not the primary goal of the research.
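For readers unfamiliar with these scores, the sketch below computes F1 and IoU from binary burned-area masks, plus MAE and MAPE of the total burned area over several fires. Converting cell counts to km² with a 650 m × 650 m cell is an assumption made for illustration. The paper may report area errors in different units. MAPE also assumes every fire has a non-zero true burned area:

```python
import numpy as np

CELL_AREA_KM2 = 0.65 * 0.65  # one 650 m x 650 m cell = 0.4225 km² (assumed unit)


def f1_iou(pred, truth):
    """Cell-wise F1 and IoU for binary burned / not-burned masks."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    tp = np.sum(pred & truth)    # burned in both
    fp = np.sum(pred & ~truth)   # predicted burned, actually not
    fn = np.sum(~pred & truth)   # missed burned cells
    denom = tp + fp + fn
    f1 = 2 * tp / (2 * tp + fp + fn) if denom else 1.0
    iou = tp / denom if denom else 1.0
    return float(f1), float(iou)


def area_errors(pred_masks, true_masks):
    """MAE (km²) and MAPE (%) of burned area across a batch of fires."""
    pred_area = np.array([m.sum() for m in pred_masks]) * CELL_AREA_KM2
    true_area = np.array([m.sum() for m in true_masks]) * CELL_AREA_KM2
    abs_err = np.abs(pred_area - true_area)
    return float(np.mean(abs_err)), float(100.0 * np.mean(abs_err / true_area))


pred = np.array([[1, 1], [0, 0]])
truth = np.array([[1, 0], [1, 0]])
print(f1_iou(pred, truth))
```

In this toy case, one cell is correct, one is a false alarm, and one is missed. That gives F1 = 2·1 / (2·1 + 1 + 1) = 0.5 and IoU = 1/3, which shows that IoU is always the stricter of the two scores.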

The paper also analyzed the importance of the input features and found that meteorological measurements, especially wind direction and speed, are important factors for predicting fire spread. The model also learned how fire behaves near water bodies and avoided predicting spread across water surfaces.
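The article does not say which feature-importance method the authors used. One widely used, model-agnostic option is permutation importance. It shuffles one input channel (say, wind speed) across samples and measures how much the evaluation score drops. The sketch below is a generic illustration under that assumption. `predict` and `score` are hypothetical callables standing in for a trained model and a metric such as F1:

```python
import numpy as np


def permutation_importance(predict, x, y, score, rng, n_repeats=5):
    """Mean drop in score when one input channel is shuffled across samples.

    x: (N, C, H, W) inputs; y: matching targets;
    predict: callable x -> predictions; score: callable (pred, y) -> float, higher is better.
    """
    baseline = score(predict(x), y)
    drops = np.zeros(x.shape[1])
    for c in range(x.shape[1]):
        for _ in range(n_repeats):
            x_perm = x.copy()
            idx = rng.permutation(x.shape[0])
            x_perm[:, c] = x[idx, c]  # break the link between channel c and its targets
            drops[c] += baseline - score(predict(x_perm), y)
    return drops / n_repeats


# Toy check: a "model" that only looks at channel 0.
rng = np.random.default_rng(0)
x = rng.standard_normal((200, 2, 2, 2))
predict = lambda a: (a[:, 0] > 0).astype(float)
y = predict(x)
score = lambda p, t: float(np.mean(p == t))
print(permutation_importance(predict, x, y, score, rng, n_repeats=3))
```

Shuffling the channel the toy model relies on drops its accuracy sharply. Shuffling the unused channel changes nothing. This is the kind of contrast that would flag wind as a key driver in a real model.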

Applications

The new approach has several potential applications for supporting emergency systems and facilitating rapid decision-making to deal with fires. It can estimate the risk and severity of wildfires, as well as plan and optimize fire suppression and mitigation strategies. Additionally, it aids in assessing the environmental and socio-economic impacts of wildfires, including carbon emissions, air quality, soil erosion, and biodiversity loss. Moreover, the approach is adaptable and transferable to other regions and scenarios by expanding the training dataset with new environmental examples and adjusting the model parameters.

Conclusion

In summary, the novel model is an effective, adaptable, and scalable approach for predicting wildfire spread using multimodal data and deep neural networks. It achieved high accuracy and robustness in predicting fire spread over large areas and multi-day horizons. Moreover, it outperformed the other architectures it was compared with and provided insights into the importance of different features for the prediction task.

The authors carried out the research using a unique dataset of verified fire events and environmental parameters for several regions of the Russian Federation. Their technique uses attention mechanisms to capture the spatial and channel dependencies between fire behavior and environmental factors.

The researchers acknowledged limitations and challenges and suggested that the presented model can be further improved and extended by incorporating more data sources, such as unmanned aerial vehicles (UAVs), light detection and ranging (lidar), and radio detection and ranging (radar), as well as by integrating more advanced machine learning techniques, such as generative adversarial networks, reinforcement learning, and uncertainty quantification.