In a recent paper published in the journal Algorithms, researchers introduced an artificial intelligence (AI)-based solution for autonomous vehicles using supervised learning techniques. A robotic car equipped with a single camera was developed to compete in a robotics event, the RoboCup Portuguese Open. The study focuses on this car's development and its performance in the competition.

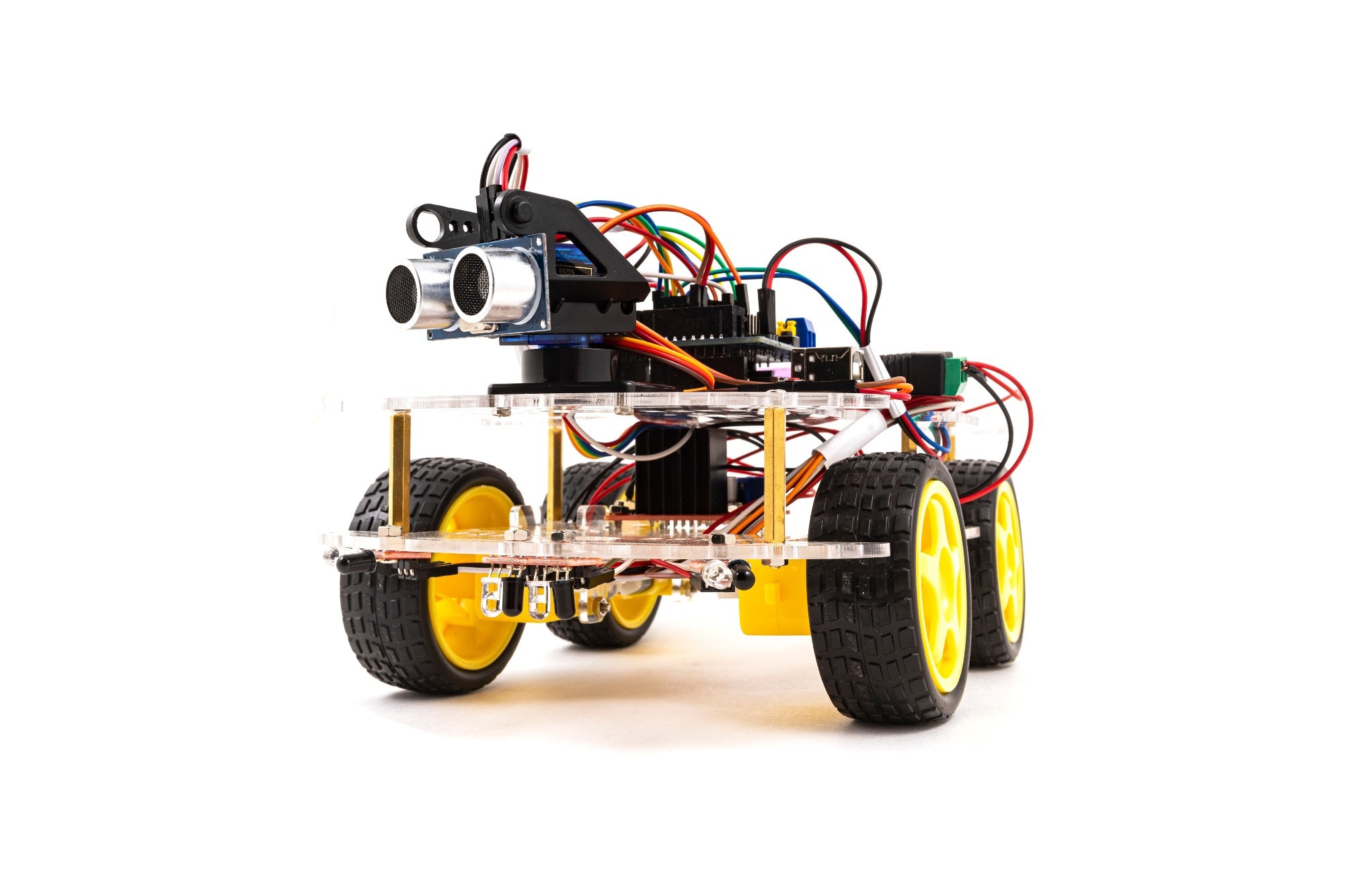

Study: Neural Networks Drive Robotic Cars to Victory in Autonomous Racing. Image credit: Gargantiopa/Shutterstock

Background

Efficient mobility for people and goods is vital in today's world. Current land transportation faces challenges such as limited access, pollution, and accidents. Autonomous vehicles could address these challenges by enhancing safety and accessibility.

Car manufacturers are investing in research related to self-driving technology. Wang et al. used convolutional neural networks (CNNs) to predict steering and speed, while Dutta and Chakraborty expanded this using 20 cameras and sensors, and T. Almeida et al. used combined techniques for road detection. Neural networks trained through simulation surpassed traditional methods in both simulation and real-life scenarios, demonstrating their potential for autonomous driving.

Simulation Environment

The current study presents a system capable of autonomously steering a vehicle on a road-like track in a simulated environment. The environment was built for testing purposes in the CoppeliaSim software and closely replicated the track layout of the RoboCup Portuguese Open. It incorporates the key elements of the competition: the car (agent), the track with continuous and dashed white lines, zebra crossings, parking zones, and traffic lights. Green cuboid obstacles were also placed at random positions on the track.

A front-facing vision sensor (camera) on the car was used to collect track data. To ensure real-time operation, the simulation environment and the control software were linked through the Robot Operating System (ROS). The setup comprised two ROS nodes: one for the simulator and another for the control script. The nodes communicated over a small set of ROS topics in both directions: most carried simulation data to the control software, while the rest carried information back to the simulator.

Algorithms for Training Robotic Agents

Machine learning techniques, built on a foundational computer vision algorithm, were used to improve the agent's performance. Initial tests took place in the simulation environment to develop and fine-tune the algorithm.

Computer Vision Control Algorithm: A classical computer vision algorithm was devised to translate input images into steering angles for the car. The track exhibited a black backdrop with continuous or dashed white lines. Classical segmentation techniques were employed to produce distinct images with track lines and obstacles.
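The segmentation step described above can be illustrated with a minimal sketch. It assumes simple per-pixel colour thresholds on an RGB image; the threshold values, the `segment` function name, and the nested-list image format are illustrative assumptions, not details reported in the study.

```python
# Illustrative thresholds only; the study does not report the values it used.
WHITE_MIN = 200     # every channel at least this bright -> treated as a track line
GREEN_MARGIN = 60   # green exceeds red and blue by this much -> treated as an obstacle

def segment(image):
    """Split an RGB image (rows of (r, g, b) tuples) into two binary masks.

    Returns (white_mask, green_mask), each a list of rows of 0/1 values.
    """
    white_mask, green_mask = [], []
    for row in image:
        white_mask.append([1 if min(r, g, b) >= WHITE_MIN else 0 for r, g, b in row])
        green_mask.append([1 if g - max(r, b) >= GREEN_MARGIN else 0 for r, g, b in row])
    return white_mask, green_mask

# Tiny hand-made frame: a white line in column 1 and one green obstacle pixel.
BLACK, WHITE, GREEN = (0, 0, 0), (255, 255, 255), (30, 200, 40)
frame = [
    [BLACK, WHITE, BLACK, GREEN],
    [BLACK, WHITE, BLACK, BLACK],
]
lines, obstacles = segment(frame)
```

On a real camera image, fixed thresholds like these would likely need tuning for lighting; the black track with white lines makes the line mask comparatively easy to obtain.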

The white-segmented image was used to locate the lines delimiting the lane and to determine their orientation. Scanning radially from the bottom center of the image at 2.5-degree intervals, a pixel-by-pixel search identified the first black-to-white transition along each ray. The resulting coordinates were stored in two arrays, one for the left side and one for the right. The random sample consensus (RANSAC) algorithm was then applied to each array, yielding the slope and intercept of the line on that side. If fewer than five points were found on a side, the algorithm assumed there was no line there. Together, these steps characterized the lane boundaries visible to the car.
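The radial scan and line fit can be sketched as follows. This is a simplified illustration, not the authors' implementation: the function names, the RANSAC iteration count and tolerance, and the choice to parameterize each line as `x = slope * y + intercept` (which keeps near-vertical lane lines well behaved) are all assumptions. Only the 2.5-degree step and the five-point minimum come from the description above.

```python
import math
import random

def radial_scan(mask, step_deg=2.5):
    """Cast rays from the bottom-center pixel of a binary mask and record
    the first 0 -> 1 (black-to-white) transition found along each ray."""
    height, width = len(mask), len(mask[0])
    cx, cy = width // 2, height - 1
    left, right = [], []
    for i in range(int(180 / step_deg) + 1):
        theta = math.radians(i * step_deg)  # 0 deg points right, 180 deg points left
        prev, r = None, 0
        while True:
            x = round(cx + r * math.cos(theta))
            y = round(cy - r * math.sin(theta))
            if not (0 <= x < width and 0 <= y < height):
                break  # ray left the image without finding a line
            cur = mask[y][x]
            if prev == 0 and cur == 1:
                (left if x < cx else right).append((x, y))
                break
            prev, r = cur, r + 1
    return left, right

def ransac_line(points, n_iter=200, tol=1.5, min_points=5, seed=0):
    """Fit x = slope * y + intercept with a basic RANSAC loop.

    Returns (slope, intercept), or None when fewer than `min_points`
    points are available (interpreted as "no line on this side").
    """
    if len(points) < min_points:
        return None
    rng = random.Random(seed)
    best, best_inliers = None, 0
    for _ in range(n_iter):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if y1 == y2:
            continue  # this pair cannot define x as a function of y
        slope = (x2 - x1) / (y2 - y1)
        intercept = x1 - slope * y1
        # Count points whose horizontal distance to the candidate line is small.
        inliers = sum(1 for x, y in points if abs(x - (slope * y + intercept)) <= tol)
        if inliers > best_inliers:
            best, best_inliers = (slope, intercept), inliers
    return best

# Demo on a synthetic 21x20 mask with vertical white lines at x = 3 and x = 17.
mask = [[1 if x in (3, 17) else 0 for x in range(21)] for _ in range(20)]
left_pts, right_pts = radial_scan(mask)
left_fit = ransac_line(left_pts)
right_fit = ransac_line(right_pts)
```

A production version would typically refit the final line to all inliers by least squares; the sketch simply returns the best two-point candidate.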

Neural Network Model (NNM): The NVIDIA Jetson Nano™ developer kit, which is designed for embedded systems and well suited to robotics and smart machines, served as the platform for the training process. According to the study, it provided enough processing power for efficient training. After training, the model was deployed on the same computer for simulation.

The main challenge was predicting angle and direction values, a task commonly tackled with regression models. This made it important to choose a metric that measures how close the predictions are to the expected values, and the mean squared error (MSE) was chosen for evaluation. The project also aimed to explore neural networks, how they work, and their potential for supervised learning. Transfer learning was used for efficiency, starting from a model pre-trained on a dataset for a similar task.
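For reference, the MSE is the average of the squared differences between predicted and expected values. A minimal sketch, using made-up steering angles purely for illustration (the function name and the sample values are not from the study):

```python
def mean_squared_error(predicted, expected):
    """Average of squared differences between predictions and targets."""
    if len(predicted) != len(expected) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - e) ** 2 for p, e in zip(predicted, expected)) / len(predicted)

# Hypothetical steering angles in degrees, for illustration only.
predicted_angles = [0.0, 5.0, -10.0]
expected_angles = [1.0, 5.0, -8.0]
mse = mean_squared_error(predicted_angles, expected_angles)  # (1 + 0 + 4) / 3
```

Because the errors are squared, a single large steering mistake is penalized far more heavily than several small ones, which suits a task where large deviations take the car off the track.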

The ResNet-18 network architecture was adopted due to its capacity to enhance image classification with limited computational resources. Furthermore, the random sample consensus (RANSAC) algorithm was utilized to estimate continuous lines on the track. By fusing these approaches, the study elevates the potential of autonomous driving in intricate scenarios.

Evaluation of Proposed Algorithms

Initial testing compared the outputs of the computer vision (CV) algorithm and the NNM on an assortment of images from the simulation camera. The CV and NNM outputs are plotted as dashed and continuous lines, respectively, showing how each responds. The CV algorithm succeeds when the road is well defined but runs into difficulties in special cases. In contrast, the NNM produces accurate and consistent paths. This consistency holds at higher speeds, with minor variations near zebra crossings and intersections. Adjustments were needed at faster velocities because the NNM was trained on still images.

Overall, the NNM outperforms the CV solution. The NNM uses the information in the entire image when making a prediction, and its ability to generalize from similar images lets it handle a wider range of situations. The CV approach, by comparison, is intricate and struggles in specific scenarios where the NNM behaves more smoothly. Because the NNM excelled in the simulation tests, the researchers carried out additional real-world trials with robotic cars. These trials yielded promising results despite external influences.

Conclusion

In summary, the current study introduces an autonomous driving system for a robotic car. This system employs a neural network trained on diverse road scenarios generated by a classical computer vision algorithm. The trained model demonstrated enhanced performance, successfully navigating the track across multiple laps at varying speeds. Further validation through real-world competition tests reaffirmed its effectiveness. Future work could extend the model's capabilities, such as avoiding obstacles and changing lanes.