In a paper published in the journal Computer Communications, researchers emphasized the importance of intelligent traffic monitoring systems powered by the Internet of Things (IoT) and artificial intelligence (AI) technologies. These advancements could significantly enhance urban traffic management in fast network environments like 5G, 5G+, and 6G.

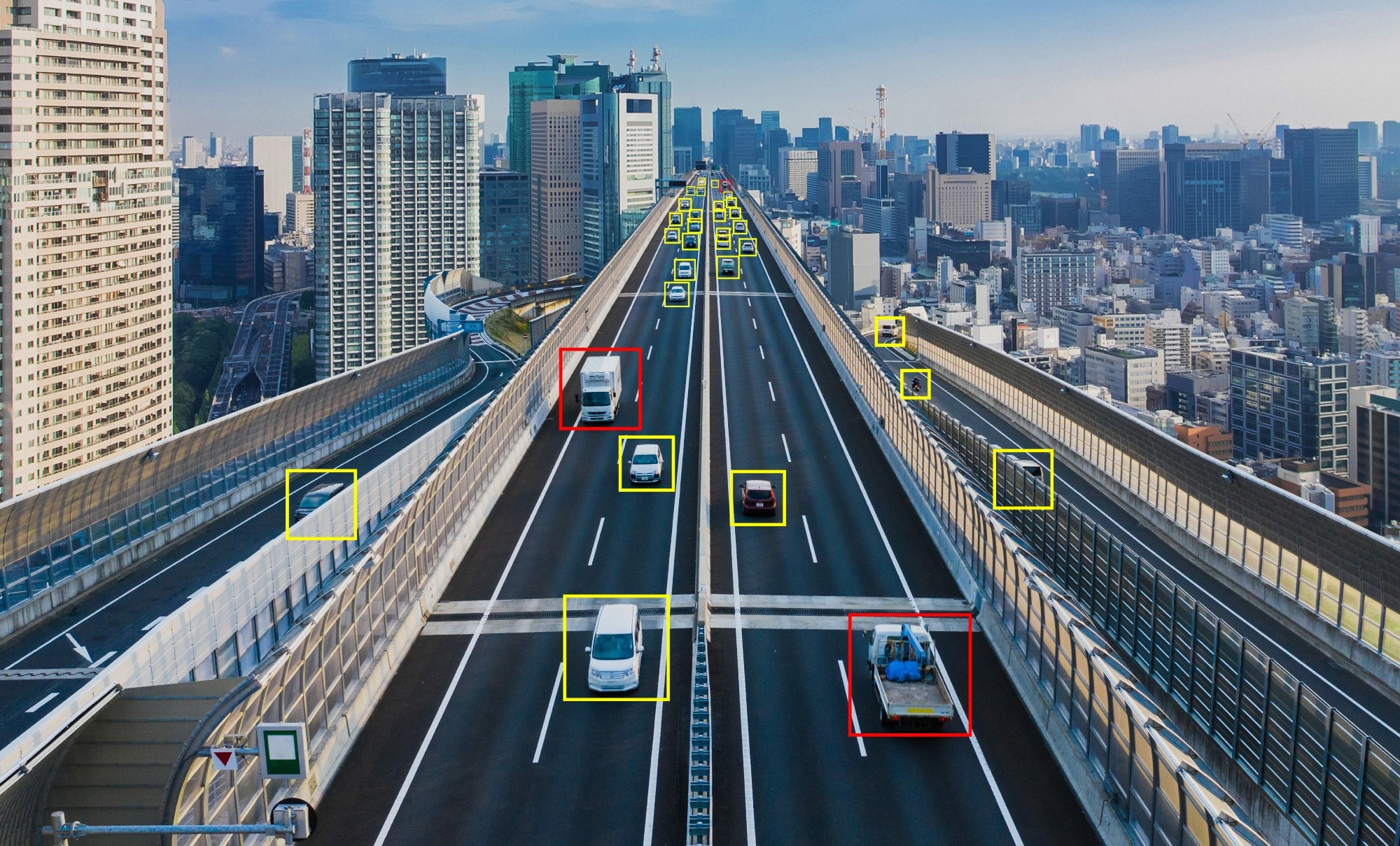

Study: LightSpaN: Advancing Traffic Monitoring Through IoT and AI. Image credit: metamorworks/Shutterstock

Unlike previous approaches, which were either complex or limited to accuracy below 98%, this research introduced LightSpaN, a streamlined Convolutional Neural Network (CNN)-based method for vehicle identification. The proposed solution produced fast and accurate results in both Simulation of Urban MObility (SUMO) simulations and real-world IoT-based traffic monitoring. LightSpaN outperformed existing methods in rapidly identifying vehicle types, with an average accuracy of 99.9% for emergency vehicles. It also reduced waiting and travel times, showing its potential for effective real-time traffic management.

Background

Traffic congestion has prompted studies of solutions driven by Deep Learning (DL) algorithms and IoT technologies. These methods aim to enhance urban traffic management, particularly in large cities, by improving vehicle flow and quality of life. DL algorithms have been used for traffic light detection, while more intricate tasks such as detecting traffic signs, pedestrians, and vehicles have employed advanced approaches like Region-based Convolutional Neural Networks (R-CNN), Faster Region-based Convolutional Neural Networks (Faster R-CNN), and You Only Look Once (YOLO).

Related work

In recent years, DL algorithms and IoT technologies have been explored to tackle traffic congestion. CNNs form the basis for several DL models, including R-CNN, Fast R-CNN, and Faster R-CNN, which detect objects in two stages. One-stage DL algorithms like the Single Shot MultiBox Detector (SSD) and YOLO have also been used for object detection, although achieving both accuracy and speed remains challenging.

DL algorithms have been combined with IoT for smart traffic light solutions. These approaches use fuzzy logic, sensors, and image processing to optimize traffic flow, reduce waiting times, and control traffic density. Lightweight models such as PVIDNet and YOLOv3 have been considered, and traffic light methods that prioritize rescue vehicles have been explored. The Brazilian Traffic Code emphasizes vehicle priorities, especially for emergency services, underlining the importance of audible alarms and visual warnings.

Proposed method

The paper describes a process for building a real-time traffic monitoring system that uses IoT devices together with the LightSpaN algorithm. The process has three stages, each contributing to the development and validation of the proposed solution.

Dataset Preparation: The initial step involves compiling a dataset consisting of uniform images intended for training the LightSpaN model. These images are sourced from the COCO API and VOC, providing diverse visuals from different urban settings. This expanded dataset incorporates images representative of various cities to ensure a robust and adaptable model.

Development and Validation of LightSpaN Algorithm: The second stage covers building and evaluating the LightSpaN algorithm, including both training and testing. During validation, real-world urban traffic scenarios are simulated. A practical implementation of the algorithm is also run, in which vehicles are identified and classified. The algorithm's performance is measured with accuracy, sensitivity, and F-measure, which together indicate how well it identifies and classifies vehicles.

Traffic Light Algorithm Implementation: In the final stage, a specialized Traffic Light Algorithm is introduced to evaluate the efficacy of the LightSpaN algorithm. This algorithm uses the results produced by LightSpaN to determine the priority of vehicle movement, and the traffic light controller adapts its behavior according to the vehicle priority strategies established in the study. To assess the impact of the LightSpaN algorithm on traffic flow, a simulation scenario is run in the SUMO tool. The proposed solution is also tested in real-world scenarios to validate its practical feasibility.
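The idea of a traffic light controller that reacts to classifier output can be illustrated with a minimal Python sketch. This is not the study's algorithm: the priority levels, the `Detection` type, the `choose_green` function, and the tie-breaking rule are all assumptions made for illustration only.

```python
from dataclasses import dataclass

# Hypothetical priority levels; the study's actual priority strategy
# is not reproduced here.
PRIORITY = {"emergency": 2, "priority": 1, "regular": 0}


@dataclass
class Detection:
    approach: str       # road approach the vehicle is on, e.g. "north"
    vehicle_type: str   # label assumed to come from the classifier


def choose_green(detections, current_green):
    """Return the approach that should receive the green light next."""
    # Highest priority level seen on each approach.
    best = {}
    for d in detections:
        level = PRIORITY.get(d.vehicle_type, 0)
        best[d.approach] = max(best.get(d.approach, 0), level)

    # No vehicles, or only regular ones: leave the normal cycle untouched.
    if not best or max(best.values()) == 0:
        return current_green

    top = max(best.values())
    # Avoid switching if the current green already serves a top-priority vehicle.
    if best.get(current_green, -1) == top:
        return current_green
    # Assumed tie-break: alphabetical order of approach names.
    return sorted(a for a, lvl in best.items() if lvl == top)[0]
```

For example, with a regular car detected on the north approach and an emergency vehicle on the east approach, `choose_green` moves the green light from north to east. A real controller would also need minimum green times and safe phase transitions, which this sketch leaves out.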

Experimental results

The experimental evaluation compares LightSpaN with YOLOv3 and Partial View Image Detection Network (PVIDNet) (Smart Road System (SRS)) in classifying vehicle images, with LightSpaN showing higher accuracy. Video stream simulations covering 150 urban traffic videos further confirm that LightSpaN achieves higher accuracy rates than YOLOv3 and PVIDNet (SRS).
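The validation metrics named in the study (accuracy, sensitivity, and F-measure) have standard definitions that can be computed from confusion-matrix counts. The sketch below uses those textbook definitions for a single class; the function name and the example counts are illustrative assumptions, not figures from the paper.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, sensitivity (recall), and F-measure for one class.

    tp, fp, fn, tn are true-positive, false-positive, false-negative,
    and true-negative counts.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + sensitivity
    # F-measure is the harmonic mean of precision and sensitivity.
    f_measure = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "f_measure": f_measure,
    }


# Illustrative counts only, not results from the study.
metrics = classification_metrics(tp=90, fp=10, fn=10, tn=90)
print(metrics)
```

For a multi-class task such as vehicle type classification, these values are typically computed per class (treating that class as positive) and then averaged.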

Additionally, simulation results in the SUMO tool show that the traffic control algorithm, combined with LightSpaN, significantly reduces waiting times for emergency and priority vehicles, by around 60% and 8%, respectively. Real-world implementation reinforces these findings, underlining LightSpaN's effectiveness in lowering both waiting and travel times for various vehicle types, particularly emergency vehicles.
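A reduction figure of this kind is normally the relative change from a baseline. As a small sketch of that arithmetic, the function below computes a percentage reduction; the waiting times in the example are invented for illustration and are not measurements from the study.

```python
def percent_reduction(baseline, improved):
    """Relative reduction from baseline to improved, as a percentage."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline * 100.0


# Hypothetical waiting times in seconds, chosen only to illustrate the formula.
print(percent_reduction(50.0, 20.0))  # a drop from 50 s to 20 s is a 60% reduction
```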

Conclusion

To sum up, this study introduced an enhanced sparse network for swift IoT device implementation in intelligent traffic systems. The LightSpaN model demonstrated superior accuracy versus YOLOv3 and PVIDNet (SRS), proving its efficiency for rapid vehicle image detection, particularly of emergency vehicles. Simulation results indicated a substantial reduction in waiting and travel times for emergency vehicles without affecting regular cars. The real-world implementation validated the approach using affordable sensors and yielded comparable outcomes. This approach holds promise for optimizing traffic flow through intelligent image-based monitoring systems. Future work could involve broader datasets and traffic scenarios for further validation and exploration.