In an article published in the journal PLoS ONE, researchers presented SliDL, a deep learning toolbox that aims to simplify and streamline whole-slide image (WSI) analysis, bridging the gap between conventional image analysis and the specific demands of analyzing WSIs.

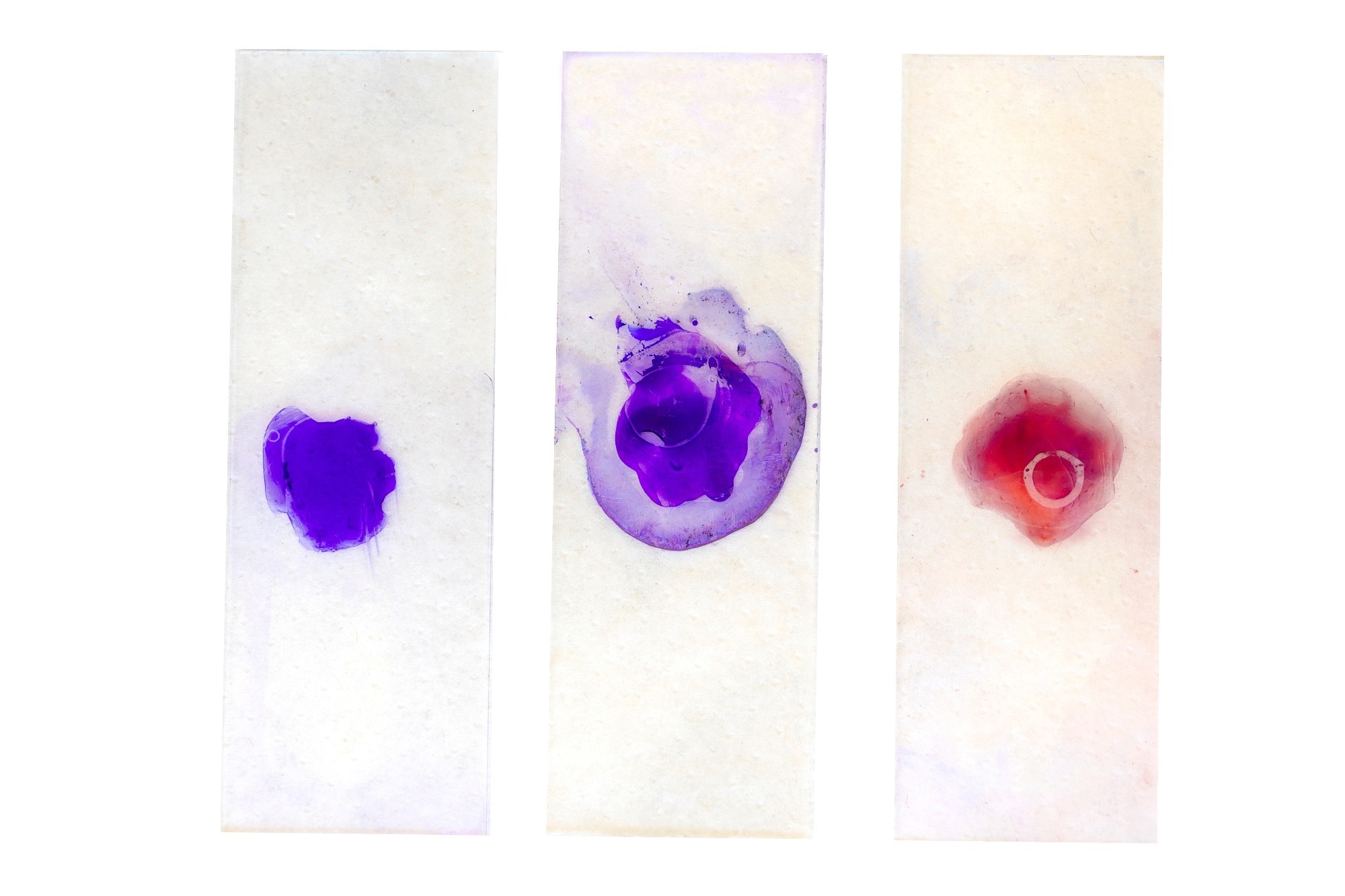

Study: Simplifying Whole-Slide Image Analysis with SliDL: A Deep Learning Toolbox. Image credit: eczserapyilmaz /Shutterstock

Histopathology has been transformed by digital pathology, which converts traditional glass slides into high-resolution WSIs. These digital images are well suited to automated analysis with deep learning, which could reshape how diseases are detected, diagnosed, and monitored. However, analyzing WSIs raises a distinct set of challenges, including managing image annotations, handling slide and image artifacts, and accurately evaluating model performance. To address these challenges, a Python library named SliDL (Slide Deep Learning) was developed.

WSIs in pathology

Traditionally, pathologists have relied on manual examination of stained tissue slides under microscopes to identify pathological features. While effective, this process is time-consuming and susceptible to inter-observer variability. With the advent of digital pathology, WSIs are generated by high-resolution scanning of glass slides, offering a plethora of data for automated analysis. Deep learning methods have demonstrated remarkable capabilities in analyzing WSIs, potentially reducing the workload of pathologists and enhancing diagnostic accuracy.

These challenges, from managing annotations and handling artifacts to assessing how well deep learning models perform, can slow the adoption of deep learning techniques in medical practice. SliDL is designed to simplify and streamline the entire WSI analysis workflow.

Introducing SliDL: A comprehensive toolbox

SliDL is a Python library built to prepare WSIs for deep learning analysis. It provides a broad set of functions for managing WSI data and addressing the challenges specific to these large, complex images.

Central to SliDL's capabilities is tissue detection. Using a deep learning tissue detector, the library automatically identifies tissue regions within WSIs. This classifier distinguishes between 'tissue,' 'background,' and 'artifact,' improving the quality of training data by excluding unwanted regions. Annotations play a critical role in labeling WSIs, and SliDL simplifies working with them by extracting tiles together with their corresponding segmentation masks, which streamlines data preparation for supervised learning.
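
The filtering step can be illustrated with a minimal pure-Python sketch. It assumes a tissue detector has already assigned each tile a probability for the three classes above; the function `select_tissue_tiles`, the dictionary layout and the 0.5 cut-off are hypothetical choices for illustration, not part of SliDL's API.

```python
from typing import Dict, List, Tuple

# (x, y) pixel offset of a tile's top-left corner in the slide (assumed layout)
Tile = Tuple[int, int]


def select_tissue_tiles(
    tile_probs: Dict[Tile, Dict[str, float]],
    threshold: float = 0.5,
) -> List[Tile]:
    """Keep tiles whose predicted 'tissue' probability reaches the threshold.

    tile_probs maps each tile to per-class probabilities for the three
    classes named in the article: 'tissue', 'background' and 'artifact'.
    With probabilities that sum to 1 and a threshold of at least 0.5, any
    tile whose top class is background or artifact is dropped.
    """
    return sorted(
        tile for tile, probs in tile_probs.items()
        if probs.get("tissue", 0.0) >= threshold
    )


# Toy output from a hypothetical tissue detector on a 2 x 2 grid of 512-px tiles
example = {
    (0, 0): {"tissue": 0.92, "background": 0.05, "artifact": 0.03},
    (512, 0): {"tissue": 0.10, "background": 0.85, "artifact": 0.05},
    (0, 512): {"tissue": 0.30, "background": 0.10, "artifact": 0.60},
    (512, 512): {"tissue": 0.55, "background": 0.40, "artifact": 0.05},
}
print(select_tissue_tiles(example))  # [(0, 0), (512, 512)]
```

A fixed probability cut-off keeps the rule transparent; in practice the threshold would be tuned on held-out slides.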

Data preparation for model training is a pivotal step in the deep learning workflow. SliDL's Slide.extractAnnotationTiles() function extracts tiles from annotated regions, so that each annotated class is represented by tiles drawn from its own regions. SliDL also supports extracting tiles from unannotated areas with Slide.extractRandomUnannotatedTiles(), which increases the diversity of the training data and helps it cover the full range of tissue in the dataset.
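
The sketch below shows, in pure Python, one way tiles can be split into annotated and unannotated sets, paired with a segmentation mask, and sampled at random. It is a hypothetical illustration, not SliDL's implementation: annotations are assumed to be polygons in slide pixel coordinates, a tile counts as annotated when its centre falls inside the polygon (real tools may instead require a minimum overlap fraction), and the helper names are invented for the example.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Tile = Tuple[int, int]


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Even-odd ray casting: toggle 'inside' each time a ray towards +x crosses an edge."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def split_tiles(width: int, height: int, size: int, polygon: Sequence[Point]):
    """Label each grid tile as annotated (centre inside the polygon) or unannotated."""
    annotated: List[Tile] = []
    unannotated: List[Tile] = []
    for ty in range(0, height - size + 1, size):
        for tx in range(0, width - size + 1, size):
            if point_in_polygon(tx + size / 2, ty + size / 2, polygon):
                annotated.append((tx, ty))
            else:
                unannotated.append((tx, ty))
    return annotated, unannotated


def tile_mask(tx: int, ty: int, size: int, polygon: Sequence[Point]) -> List[List[int]]:
    """Binary segmentation mask for one tile: 1 where a pixel centre lies inside the annotation."""
    return [
        [int(point_in_polygon(tx + c + 0.5, ty + r + 0.5, polygon)) for c in range(size)]
        for r in range(size)
    ]


def sample_unannotated(unannotated: List[Tile], k: int, seed: int = 0) -> List[Tile]:
    """Draw up to k distinct unannotated tiles with a seeded RNG for reproducibility."""
    rng = random.Random(seed)
    return rng.sample(unannotated, min(k, len(unannotated)))


# Toy 40 x 40 px "slide" with 10-px tiles and one square annotation in the top-left corner
square = [(0, 0), (20, 0), (20, 20), (0, 20)]
annotated, unannotated = split_tiles(40, 40, 10, square)
print(annotated)         # [(0, 0), (10, 0), (0, 10), (10, 10)]
print(len(unannotated))  # 12
# A tile offset to straddle the annotation's corner: 5 x 5 of its pixels are inside
print(sum(map(sum, tile_mask(15, 15, 10, square))))  # 25
print(sample_unannotated(unannotated, 3))
```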

Model training and inference

Effective model training requires careful choices of architecture and data. SliDL supports two primary types of models: Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs). CNNs are well established for image classification, while ViTs apply self-attention to sequences of image patches. After training, SliDL supports inference on WSIs through functions such as Slide.inferClassifier() and Slide.inferSegmenter(). These functions generate predictions for the tiles with a high probability of containing tissue, producing outputs that help users understand model behavior.
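
A simplified version of tissue-gated inference is sketched below. The helper `infer_tissue_tiles`, the stand-in `mean_intensity` model and the max-score slide aggregation are all assumptions made for illustration; they are not `Slide.inferClassifier()` and do not reproduce its signature.

```python
from typing import Callable, Dict, List, Tuple

Tile = Tuple[int, int]
Pixels = List[List[float]]  # toy single-channel tile


def infer_tissue_tiles(
    tiles: Dict[Tile, Pixels],
    tissue_prob: Dict[Tile, float],
    model: Callable[[Pixels], float],
    tissue_threshold: float = 0.5,
) -> Dict[Tile, float]:
    """Run the model only on tiles whose tissue probability reaches the threshold."""
    return {
        tile: model(pixels)
        for tile, pixels in tiles.items()
        if tissue_prob.get(tile, 0.0) >= tissue_threshold
    }


def slide_score(tile_scores: Dict[Tile, float]) -> float:
    """Assumed slide-level aggregation: the highest tile score (0.0 if nothing was scored)."""
    return max(tile_scores.values(), default=0.0)


def mean_intensity(pixels: Pixels) -> float:
    """Stand-in 'model' for illustration only: the tile's mean pixel value."""
    return sum(map(sum, pixels)) / (len(pixels) * len(pixels[0]))


tiles = {
    (0, 0): [[0.25, 0.75], [0.5, 0.5]],
    (2, 0): [[1.0, 1.0], [1.0, 1.0]],
    (0, 2): [[0.0, 0.0], [0.0, 0.0]],
}
tissue_prob = {(0, 0): 0.9, (2, 0): 0.2, (0, 2): 0.7}
scores = infer_tissue_tiles(tiles, tissue_prob, mean_intensity)
print(scores)               # {(0, 0): 0.5, (0, 2): 0.0}
print(slide_score(scores))  # 0.5
```

Skipping low-tissue tiles saves computation and avoids scoring background or artifacts; the max rule is only one of several possible ways to summarize tile scores.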

Numerical evaluation of model performance is a crucial part of the deep learning workflow. SliDL simplifies it with functions such as Slide.classifierMetricAtThreshold() and Slide.segmenterMetricAtThreshold(). These methods compute accuracy, balanced accuracy, F1-score, precision, and recall for classification tasks, and the Dice coefficient for segmentation tasks, across a range of probability thresholds. Comparing these metrics across classes and thresholds shows where a model performs well or poorly and guides further refinement.
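
To show what threshold-dependent metrics involve, here is a pure-Python sketch that computes the classification metrics listed above for a single class treated one-vs-rest, and the Dice coefficient for a binary mask. The function names and the zero-denominator conventions are hypothetical choices; they are not SliDL's `classifierMetricAtThreshold()` or `segmenterMetricAtThreshold()`.

```python
from typing import Dict, Sequence


def _div(a: float, b: float) -> float:
    """Safe division: report 0.0 when the denominator is zero."""
    return a / b if b else 0.0


def classifier_metrics_at_threshold(
    probs: Sequence[float], labels: Sequence[int], threshold: float
) -> Dict[str, float]:
    """One-vs-rest metrics for a single class: predict positive when prob >= threshold."""
    tp = fp = tn = fn = 0
    for p, y in zip(probs, labels):
        pred = p >= threshold
        if pred and y:
            tp += 1
        elif pred:
            fp += 1
        elif y:
            fn += 1
        else:
            tn += 1
    precision = _div(tp, tp + fp)
    recall = _div(tp, tp + fn)  # also called sensitivity
    specificity = _div(tn, tn + fp)
    return {
        "accuracy": _div(tp + tn, tp + tn + fp + fn),
        "balanced_accuracy": (recall + specificity) / 2,
        "precision": precision,
        "recall": recall,
        "f1": _div(2 * precision * recall, precision + recall),
    }


def dice_at_threshold(
    prob_mask: Sequence[Sequence[float]],
    true_mask: Sequence[Sequence[int]],
    threshold: float,
) -> float:
    """Dice = 2|P ∩ T| / (|P| + |T|) for the binarised prediction P and ground truth T."""
    inter = pred_sum = true_sum = 0
    for prow, trow in zip(prob_mask, true_mask):
        for p, t in zip(prow, trow):
            pred = int(p >= threshold)
            inter += pred * t
            pred_sum += pred
            true_sum += t
    denom = pred_sum + true_sum
    return 2 * inter / denom if denom else 1.0  # convention: two empty masks agree perfectly


probs = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2]
labels = [1, 1, 1, 0, 0, 0, 0]
for t in (0.3, 0.5, 0.7):
    m = classifier_metrics_at_threshold(probs, labels, t)
    print(t, round(m["balanced_accuracy"], 3), round(m["f1"], 3))

print(dice_at_threshold([[0.9, 0.2], [0.7, 0.1]], [[1, 0], [0, 1]], 0.5))  # 0.5
```

Sweeping the threshold, as in the loop at the end, trades precision against recall; balanced accuracy is useful when one class dominates the tiles.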

SliDL's primary objective is to bridge the gap between traditional image analysis tools and the specialized requirements of WSI analysis. With a user-friendly interface and ready-to-use code snippets, the toolbox helps researchers and practitioners in the clinical sciences integrate deep learning methods into their workflows. SliDL also works with popular deep learning libraries such as PyTorch, so it can slot into existing deep learning pipelines.

Conclusion

SliDL covers the deep learning workflow for WSIs from tissue detection to model evaluation, helping researchers and medical professionals overcome the challenges inherent in analyzing these images. As deep learning continues to shape medical diagnostics, tools like SliDL can accelerate progress in medical imaging and diagnosis. With SliDL and its accompanying tutorial, deep learning for WSIs becomes far more approachable, giving researchers and practitioners a practical route to applying it in digital pathology and, ultimately, in healthcare.