Artificial intelligence (AI) empowers robotic vision, transforming robots from blind machines into insightful observers. Vision systems leverage AI techniques such as deep learning (DL) to analyze videos and images, which allows robots to recognize people and objects, make smarter decisions, and adapt to evolving environments. This article discusses different AI techniques and their varied applications in robotic vision systems.

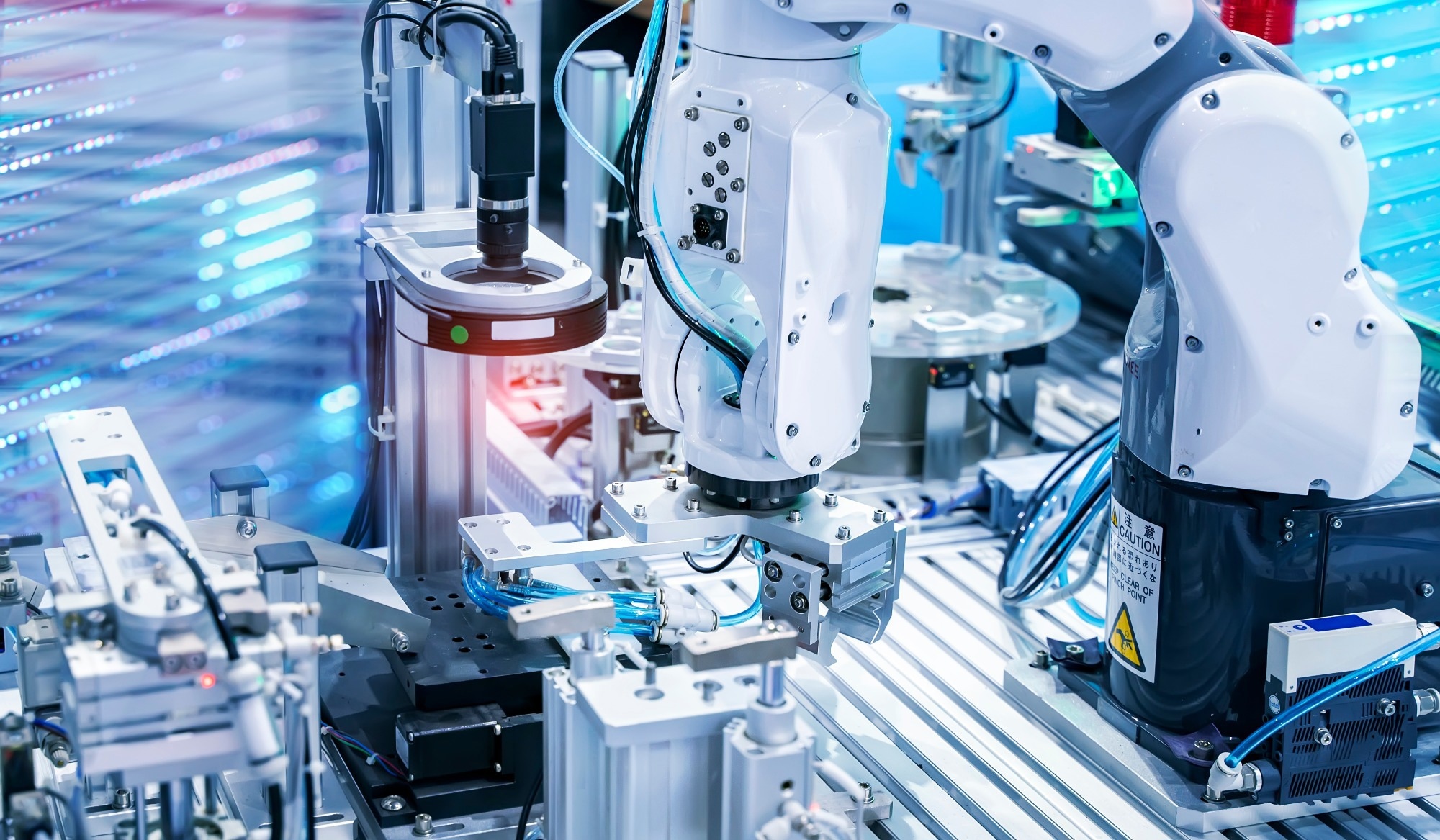

Image credit: asharkyu/Shutterstock


AI Techniques in Robotic Vision Systems

Convolutional Neural Networks (CNNs): CNNs are a class of neural networks used to analyze visual imagery. In video classification, CNNs produce the image representation for each frame, while deep neural networks (DNNs) combine these representations over time, either through a convolutional temporal feature-pooling architecture or through long short-term memory (LSTM) cells that process the frames in order.
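One simple way to combine per-frame CNN features into a single video-level representation is to pool them over time. The sketch below illustrates element-wise temporal max-pooling in plain Python; the function name `temporal_max_pool` and the toy feature values are assumptions made for illustration, not part of any cited implementation.

```python
def temporal_max_pool(frame_features):
    """Element-wise maximum over a list of per-frame feature vectors.

    frame_features: list of equal-length lists, one per video frame
    (in practice these would come from a CNN; here they are toy values).
    """
    if not frame_features:
        raise ValueError("at least one frame is required")
    # zip(*...) groups the i-th feature of every frame together
    return [max(values) for values in zip(*frame_features)]


# Hypothetical features for three frames, two features per frame
frames = [[0.1, 0.9], [0.5, 0.2], [0.3, 0.4]]
video_descriptor = temporal_max_pool(frames)
```

Because the maximum ignores frame order, this kind of pooling discards temporal ordering, which is one reason an order-aware model such as an LSTM may be preferred when the sequence of events matters.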

Within the feature-pooling architectures, the stage at which temporal pooling occurs varies: late pooling combines temporal features only in the final layers, whereas slow pooling combines them gradually over smaller temporal windows. CNNs also excel in human pose estimation. A residual attention network is another CNN that incorporates a bottom-up top-down structure. This network has two branches: the mask branch and the trunk branch.

The mask branch possesses four convolutional layers and is used in image segmentation, while the trunk branch is utilized for video classification. Specifically, the trunk branch employs an end-to-end approach to classify videos. Additionally, the fully convolutional network (FCN) detects objects regionally, and the shared CNNs are suitable for object discovery.
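The interaction between the mask and trunk branches can be sketched in a few lines. In the residual attention formulation, the soft mask M (with values between 0 and 1) modulates the trunk features T as (1 + M) * T, so a mask of zero leaves the trunk output unchanged. The code below is a minimal illustration on plain Python lists; the function names and the use of a sigmoid on raw mask scores are assumptions for this example.

```python
import math


def sigmoid(x):
    """Squash a raw mask score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def residual_attention(trunk_features, mask_scores):
    """Combine trunk features T with a soft mask M as (1 + M) * T.

    trunk_features: outputs of the trunk branch (toy values here)
    mask_scores: raw outputs of the mask branch, before the sigmoid
    """
    return [(1.0 + sigmoid(m)) * t
            for t, m in zip(trunk_features, mask_scores)]
```

The "1 +" term is the residual part: even where the mask is close to zero, the trunk features pass through rather than being suppressed.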

Generative Adversarial Networks (GANs): GANs are used for semi-supervised learning and assist robots in interacting with objects. Warehouse robots and self-driving robots leverage GANs for three-dimensional (3D) object recognition and path planning. GANs also excel in image resolution and classification.

Restricted Boltzmann Machine (RBM): An RBM is an unsupervised generative model that produces previously unseen data resembling the original data. This method is used in several applications, such as automatic hand sign language recognition.

Recurrent Neural Networks (RNNs): RNNs can facilitate the development of a 3D scene layout. Here, a camera mounted on the robot captures images, which are then separated into foreground, depth, and RGB information.

Subsequently, the image is converted into a 3D tensor, which is passed through the RNN to produce the 3D scene layout. RNNs are also effective for path planning and obstacle avoidance, where the problem is typically encoded in the activity of the network's neurons.
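As a rough illustration of the tensor-building step, the sketch below stacks RGB, depth, and foreground information into a height x width x channels structure using nested Python lists. The five-channel layout (R, G, B, depth, foreground) and the function names are assumptions for this example; the actual channel arrangement depends on the system.

```python
def stack_channels(rgb, depth, foreground):
    """Build an H x W x 5 tensor (nested lists) from per-pixel inputs.

    rgb: H x W grid of (r, g, b) tuples
    depth: H x W grid of depth values
    foreground: H x W grid of 0/1 foreground flags
    """
    height, width = len(rgb), len(rgb[0])
    tensor = []
    for y in range(height):
        row = []
        for x in range(width):
            r, g, b = rgb[y][x]
            row.append([r, g, b, depth[y][x], foreground[y][x]])
        tensor.append(row)
    return tensor


def tensor_shape(tensor):
    """Return (height, width, channels) of a nested-list tensor."""
    return (len(tensor), len(tensor[0]), len(tensor[0][0]))
```

A numerical library would normally handle this with a single stacking call; the explicit loops are used here only to keep the example dependency-free.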

Applications of Robotic Vision Systems

In robot vision, the four key application areas of AI techniques, specifically DL methods, include object detection and categorization, scene representation and classification, object grasping and manipulation, and spatiotemporal vision.

Object Detection and Categorization

In robotics, object detection and categorization is a fundamental ability because it enables a robot to execute tasks that require interaction with object instances in the real world. DL has already been used for general-purpose object detection and categorization, pedestrian detection, and object detection in robot soccer.

CaffeNet is used in humanoid robots for object recognition, while DNN coupled with LSTM is employed to recognize objects in videos. Region-based CNN (R-CNN) based on AlexNet with SquaresChnFtrs as a person proposal and CNN with LCDF as a person proposal have been utilized for pedestrian detection.

An FCN using a light detection and ranging (LIDAR)-generated point map as input is suitable for vehicle detection. CNNs can be used for ball localization in robot soccer and as a validation step for humanoid robot detection. An FCN-based architecture is used for human body part segmentation, and a VGG-f-based convolutional hypercube pyramid is used as a feature extractor for RGB-D data. R-CNN is used for pedestrian detection with RGB-D data from LIDAR and a camera, with an RGB-D deformable parts model providing the object proposals. Here, depth is represented using the HHA encoding (horizontal disparity, height above ground, and angle with gravity).

CaffeNet pre-trained on ImageNet and then fine-tuned is suitable for single-view object recognition with viewpoint invariance. Pedestrian detection at a far distance can be realized using a CNN architecture containing four convolutional layers with PReLU as the activation function and two fully connected layers. Moreover, an AlexNet and HHA-based R-CNN with RGB-D selective search-based proposals is effective for object detection and categorization.
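For readers unfamiliar with PReLU (parametric rectified linear unit), the sketch below shows the activation on a single value. Unlike a plain ReLU, negative inputs are scaled by a slope that is learned during training rather than being set to zero. The default slope of 0.25 used here is only an assumed starting value for illustration.

```python
def prelu(x, slope=0.25):
    """Parametric ReLU: identity for positive inputs, scaled otherwise.

    slope is the coefficient applied to negative inputs. In a real
    network it is a learned parameter; 0.25 is an assumed initial value.
    """
    return x if x > 0 else slope * x


# Apply the activation to a toy feature vector
activations = [prelu(v) for v in [2.0, 0.0, -2.0]]
```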

Object Grasping and Manipulation

Object grasping ability is a critical requirement for robots, specifically autonomous robots. AI techniques such as DL directly solve tasks such as visual servoing, manipulation, and grasping without hand-crafted intermediate steps such as graph-based optimization, gripper detection, object detection, or feature identification.

A two-stage CNN with two hidden layers for each stage trained using hand-labeled data, an AlexNet-based CNN with rectangle regression trained using hand-labeled data, and a DNN-based transfer manipulation strategy in embedding space are used for object grasping and manipulation.
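To illustrate what a regressed grasp rectangle might look like once predicted, the following sketch converts a rectangle given as a center, an angle, and a size into its four image-plane corners. The (cx, cy, theta, width, height) parameterization and the function name are assumptions for this example; a given network may use a different output encoding.

```python
import math


def grasp_rectangle_corners(cx, cy, theta, width, height):
    """Return the four corners of an oriented grasp rectangle.

    cx, cy: rectangle center in image coordinates
    theta: rotation angle in radians
    width, height: rectangle side lengths
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_w, half_h = width / 2.0, height / 2.0
    offsets = [(-half_w, -half_h), (half_w, -half_h),
               (half_w, half_h), (-half_w, half_h)]
    # Rotate each corner offset by theta, then translate to the center
    return [(cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
            for dx, dy in offsets]
```

A gripper controller could use two opposite edges of this rectangle as the finger positions, although that mapping is system-specific.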

A visual/motor DNN can be used to learn hand-eye coordination autonomously without robot pose or camera calibration, while a deep spatial auto-encoder is suitable for learning state representation. An AlexNet-based architecture predicts the grasp angle and location from image patches based on self-supervision. Multi-view CNNs with pre-trained AlexNet compute shape descriptors for 3D objects.

Similarly, an AlexNet-based CNN can effectively detect an object and its grasp by regression on an image patch. Additionally, a CNN that takes monocular images from the current and desired poses as inputs and outputs the velocity commands needed to attain the desired pose is suitable for image-based visual servoing.

Scene Representation and Classification

Scene representation is important for mobile robots to perform a range of tasks that depend on geometric and semantic information from the current scene. Specifically, visual navigation is crucial for autonomous robots to perform more general tasks that involve autonomous movement.

CaffeNet-based CNN is employed for appearance-invariant place recognition, place recognition, and loop closing. Scene categorization/semantic mapping and place categorization can be achieved using CaffeNet, while R-CNN based on VGG and selective search is utilized for functional scene understanding.

Multi-modal auto-encoders with stereo, LIDAR, and RGB-D data as inputs and using inverse depth parametrization excel in semantic segmentation. Deconvolutional networks and R-CNN with AlexNet as a feature extractor are used for street-view change detection and for creating region landmarks to describe an image, respectively.

A network-in-network converted into an FCN and an AlexNet-based scene classifier with a semantic segmentation branch are effective for road segmentation and place classification, respectively. A CNN can also take a trail image as input and classify the trail direction as the kind of motion required to remain on the trail.

An AlexNet-based FCN and a DSN network are used with a conditional random field (CRF) to estimate 3D scene layout from a monocular camera, while histograms of part-based models can be generated using R-CNN with Places-CNN as a region feature extractor.

Bayesian PoseNet, a pose regression network, utilizes dropout to estimate uncertainty in camera re-localization. Additionally, a CNN using OverFeat network layers with several pooling sizes can perform semantic segmentation on RGB-D inputs, with depth encoded as HHA and distance-from-wall.
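The idea of using dropout to estimate uncertainty can be sketched with a toy model: run the same input through the network several times with dropout still active, then treat the spread of the outputs as an uncertainty estimate. The example below uses a single linear unit rather than a pose regression network, and the function names, weights, and sample count are all assumptions for illustration.

```python
import random
import statistics


def dropout_forward(features, weights, drop_prob, rng):
    """One stochastic forward pass of a linear unit with input dropout."""
    keep_prob = 1.0 - drop_prob
    total = 0.0
    for f, w in zip(features, weights):
        if rng.random() < keep_prob:
            # Inverted dropout: rescale kept inputs by 1 / keep_prob
            total += (f / keep_prob) * w
    return total


def mc_dropout_estimate(features, weights, drop_prob=0.5,
                        samples=200, seed=0):
    """Return (mean, standard deviation) over repeated dropout passes."""
    rng = random.Random(seed)
    outputs = [dropout_forward(features, weights, drop_prob, rng)
               for _ in range(samples)]
    return statistics.mean(outputs), statistics.stdev(outputs)


# Toy usage: the standard deviation serves as the uncertainty estimate
mean, spread = mc_dropout_estimate([1.0, 2.0], [0.5, 0.25])
```

With dropout disabled (`drop_prob=0.0`), every pass returns the same value and the estimated spread is zero, which matches the intuition that the uncertainty signal comes entirely from the sampled dropout masks.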

Places-CNN is used to build two-dimensional (2D) grid semantic maps, where LIDAR is used for simultaneous localization and mapping (SLAM) and Bayesian filtering is applied over the class labels. A CNN trained initially using supervised learning and then using deep reinforcement learning assists robots in scene exploration.
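Bayesian filtering over class labels can be illustrated for a single grid cell: the cell keeps a probability for each place label, and each new classifier output updates that belief through Bayes' rule. The sketch below is a generic discrete Bayes update; the label names, probabilities, and function name are hypothetical and not taken from the cited systems.

```python
def bayes_update(prior, likelihood):
    """Update a cell's label probabilities with a new observation.

    prior: dict mapping label -> current probability for this cell
    likelihood: dict mapping label -> probability of the current
        classifier output given that label (missing labels count as 0)
    """
    unnormalized = {label: p * likelihood.get(label, 0.0)
                    for label, p in prior.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        # Observation is incompatible with every label; keep the prior
        return dict(prior)
    return {label: value / total for label, value in unnormalized.items()}


# Toy usage with two hypothetical place labels
belief = {"corridor": 0.5, "office": 0.5}
belief = bayes_update(belief, {"corridor": 0.8, "office": 0.2})
```

Repeating the update as the robot revisits a cell smooths out individual misclassifications, since a single noisy prediction shifts the belief only partially.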

A trained scene-dependent Siamese network that receives images from two places as input and generates motion commands for traveling between them assists robots with visual navigation. In complex maze-like environments, robot navigation primarily depends on inertial information and raw monocular images. Visual navigation in such environments is realized using deep reinforcement learning on a network composed of a CNN and two LSTM layers. Multi-task loss considering depth prediction and reward prediction improves learning.

Spatiotemporal Vision

Spatiotemporal vision involves the processing of video and other spatiotemporal information sources in robotics. A haptic CNN coupled with a visual CNN can be used for haptic classification and object understanding. Action recognition is attained through the temporal concatenation of pre-trained VGG-16 outputs into a 3D convolutional layer.

A fusion RNN based on LSTM units fed with visual features can be used for human action prediction. Similarly, CNNs learn frame-to-frame motion estimation and feature representations from optical flow for visual odometry. Additionally, a deep autoencoder is suitable for the nonlinear time alignment of human skeleton representations in human action recognition. The VGG architecture can be used as a feature extractor for unsupervised image sequence segmentation, specifically in the segmentation of trajectories in robot-assisted surgery.

Overall, AI-powered robotic vision systems possess immense potential in propelling robots toward a truly perceptive and interactive future. However, overcoming challenges like safety and interpretability, computational expense, and high reliance on labeled data is essential to harness the abilities of AI safely.

References and Further Reading

Ruiz-del-Solar, J., Loncomilla, P., Soto, N. (2018). A survey on deep learning methods for robot vision. ArXiv. https://doi.org/10.48550/arXiv.1803.10862

Keerthikeshwar, M., Anto, S. (2021). Deep Learning for Robot Vision. Intelligent Manufacturing and Energy Sustainability, 357-365. https://doi.org/10.1007/978-981-33-4443-3_34

Yue-Hei Ng, J., Hausknecht, M., Vijayanarasimhan, S., Vinyals, O., Monga, R., Toderici, G. (2015). Beyond short snippets: Deep networks for video classification. Proceedings of the IEEE conference on computer vision and pattern recognition, 4694-4702. https://doi.org/10.1109/CVPR.2015.7299101